Data Collection and Fusion Technology Practice 3

Assignment requirements

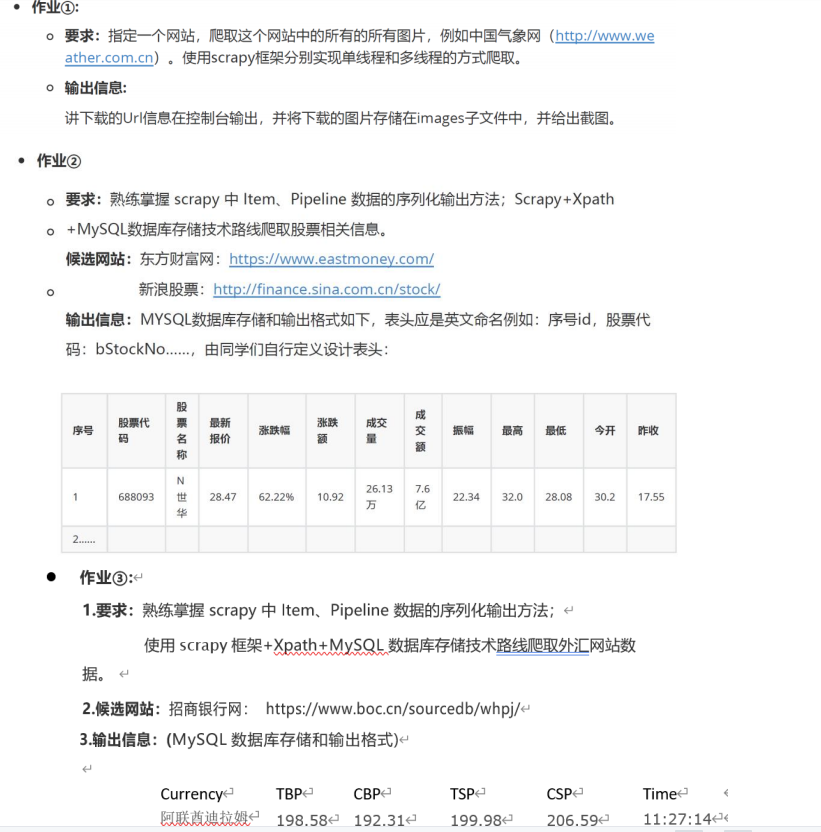

Task 1

pipelines:

Single-threaded

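```python
import os
import urllib.request

from Practical_work3.items import work1_Item


class work1_Pipeline:
    def open_spider(self, spider):
        # Assumed: desktopDir is the user's Desktop (its definition was not shown)
        self.desktopDir = os.path.join(os.path.expanduser('~'), 'Desktop')
        self.img_dir = os.path.join(self.desktopDir, 'images')
        os.makedirs(self.img_dir, exist_ok=True)
        self.count = 1

    def process_item(self, item, spider):
        if isinstance(item, work1_Item):
            url = item['img_url']
            print(url)
            try:
                # Sequential: the next item waits until this image is downloaded
                img_data = urllib.request.urlopen(url, timeout=10).read()
            except Exception as e:
                print(url, e)  # skip images that fail instead of crashing the crawl
                return item
            img_path = os.path.join(self.img_dir, str(self.count) + '.jpg')
            with open(img_path, 'wb') as fp:
                fp.write(img_data)
            self.count += 1
        return item
```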

Multi-threaded

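```python
import os
import threading
import urllib.request

from Practical_work3.items import work1_Item


class work1_Pipeline:
    def open_spider(self, spider):
        # Assumed: desktopDir is the user's Desktop (its definition was not shown)
        self.desktopDir = os.path.join(os.path.expanduser('~'), 'Desktop')
        self.img_dir = os.path.join(self.desktopDir, 'images')
        os.makedirs(self.img_dir, exist_ok=True)
        self.count = 0
        self.lock = threading.Lock()  # protects self.count across threads
        self.threads = []

    def download_img(self, url):
        try:
            img_data = urllib.request.urlopen(url, timeout=10).read()
        except Exception as e:
            print(url, e)  # a failed image only ends its own thread
            return
        with self.lock:
            self.count += 1
            img_path = os.path.join(self.img_dir, str(self.count) + '.jpg')
        with open(img_path, 'wb') as fp:
            fp.write(img_data)

    def process_item(self, item, spider):
        if isinstance(item, work1_Item):
            url = item['img_url']
            print(url)
            # One non-daemon thread per image, so downloads overlap
            t = threading.Thread(target=self.download_img, args=(url,))
            t.daemon = False  # setDaemon() is deprecated since Python 3.10
            t.start()
            self.threads.append(t)
        return item

    def close_spider(self, spider):
        # Wait for every download to finish before Scrapy shuts down
        for t in self.threads:
            t.join()
```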
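When several download threads share a counter such as `self.count`, the read, increment and write are separate steps, so two threads can pick the same file name and overwrite each other's image. A minimal sketch of handing out unique names under a `threading.Lock`; `NameCounter` and `collect_names` are hypothetical helpers for illustration, not part of the original pipeline.

```python
import threading


class NameCounter:
    """Hands out 1.jpg, 2.jpg, ... safely across threads (hypothetical helper)."""

    def __init__(self):
        self._count = 0
        self._lock = threading.Lock()

    def next_name(self):
        # Only one thread at a time may read and bump the counter
        with self._lock:
            self._count += 1
            return f'{self._count}.jpg'


def collect_names(n_threads=50):
    counter = NameCounter()
    names = []
    names_lock = threading.Lock()

    def worker():
        name = counter.next_name()
        with names_lock:
            names.append(name)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for all workers, as close_spider does for downloads
    return names


if __name__ == '__main__':
    names = collect_names()
    print(len(names), len(set(names)))
```

The same lock-around-the-counter idea is what keeps per-image file names distinct when each download runs in its own thread.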

settings

```python
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36 Edg/118.0.2088.76'

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

ITEM_PIPELINES = {
    'Practical_work3.pipelines.work1_Pipeline': 224,
}
```

items

```python
import scrapy


class work1_Item(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    img_url = scrapy.Field()
```

Spider (run with `scrapy crawl work1`)

```python
import scrapy

from Practical_work3.items import work1_Item


class Work1Spider(scrapy.Spider):
    name = 'work1'
    # allowed_domains = ['www.weather.com.cn']
    start_urls = ['http://www.weather.com.cn/']

    def parse(self, response):
        # Every <img> inside a link; src may be relative or protocol-relative
        for src in response.xpath('//a/img/@src').getall():
            item = work1_Item()
            # Resolve against the page URL so urlopen always gets an absolute URL
            item['img_url'] = response.urljoin(src)
            yield item
```
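Image `src` attributes on a page like www.weather.com.cn can be relative or protocol-relative (`//host/path`), and `urllib.request.urlopen` cannot fetch those as-is. Inside a Scrapy callback `response.urljoin(src)` resolves them against the page URL; the standard-library equivalent is `urllib.parse.urljoin`. A small sketch, assuming a hypothetical helper `absolute_img_urls` and made-up `src` values on `example.cn`/`example.com` hosts:

```python
from urllib.parse import urljoin

PAGE_URL = 'http://www.weather.com.cn/'


def absolute_img_urls(srcs, base=PAGE_URL):
    """Turn every src into an absolute URL and drop duplicates, keeping order."""
    seen = set()
    result = []
    for src in srcs:
        url = urljoin(base, src.strip())
        if url not in seen:
            seen.add(url)
            result.append(url)
    return result


if __name__ == '__main__':
    srcs = ['//i.example.cn/a.png', '/img/b.jpg',
            'https://cdn.example.com/c.gif', '/img/b.jpg']
    for u in absolute_img_urls(srcs):
        print(u)
```

Deduplicating here also avoids downloading the same logo or icon several times when it appears in multiple links.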

Output

Reflections

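The multi-threaded version downloads far faster than the single-threaded one. At first I did not add any exception handling, so the code kept throwing errors at runtime; after reading up on it, I fixed this.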
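The speed gap between the single-threaded and multi-threaded versions comes from I/O waiting: while one request waits on the network, a sequential loop does nothing else. A minimal sketch that makes this visible without touching the network, assuming a hypothetical `fake_download` that sleeps in place of `urlopen(...).read()`. It uses `concurrent.futures.ThreadPoolExecutor` rather than the hand-started threads in the pipeline, which also caps how many downloads run at once.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(url, delay=0.1):
    # Hypothetical stand-in for urllib.request.urlopen(url).read(): just waits
    time.sleep(delay)
    return url.encode()


def sequential(urls):
    start = time.perf_counter()
    results = [fake_download(u) for u in urls]
    return results, time.perf_counter() - start


def threaded(urls, workers=10):
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map keeps the input order; an exception in a worker is re-raised here
        results = list(pool.map(fake_download, urls))
    return results, time.perf_counter() - start


if __name__ == '__main__':
    urls = [f'http://example.com/{i}.jpg' for i in range(10)]
    _, t_seq = sequential(urls)
    _, t_thr = threaded(urls)
    print(f'sequential: {t_seq:.2f}s, threaded: {t_thr:.2f}s')
```

With ten 0.1 s waits the sequential loop needs roughly the sum of the delays, while the pool overlaps them; real downloads behave similarly as long as the network, not the CPU, is the bottleneck.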

Task 2

pipeline

```python
import pymysql

from Practical_work3.items import work2_Item


def to_float(v):
    # Store non-numeric values as NULL instead of letting the insert fail
    try:
        return float(v)
    except (TypeError, ValueError):
        return None


class work2_Pipeline:
    def open_spider(self, spider):
        # Runs once when the spider starts: connect and (re)create the table
        try:
            self.db = pymysql.connect(host='127.0.0.1', user='root', passwd='1a2345678',
                                      port=3306, charset='utf8', database='ccm')
            self.cursor = self.db.cursor()
            self.cursor.execute('DROP TABLE IF EXISTS gupiao')
            sql = """CREATE TABLE gupiao(
                Latest_quotation DOUBLE, Chg DOUBLE, up_down_amount DOUBLE,
                turnover DOUBLE, transaction_volume DOUBLE, amplitude DOUBLE,
                id VARCHAR(10) PRIMARY KEY, name VARCHAR(30),
                highest DOUBLE, lowest DOUBLE, today DOUBLE, yesterday DOUBLE)"""
            self.cursor.execute(sql)
        except Exception as e:
            print(e)

    def process_item(self, item, spider):
        # Handle items produced by the work2 spider
        if isinstance(item, work2_Item):
            # Parameterized query: pymysql escapes the values itself
            sql = 'INSERT INTO gupiao VALUES (%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s)'
            values = [to_float(item[k]) for k in ('f2', 'f3', 'f4', 'f5', 'f6', 'f7')]
            values += [item['f12'], item['f14']]
            values += [to_float(item[k]) for k in ('f15', 'f16', 'f17', 'f18')]
            self.cursor.execute(sql, values)
            self.db.commit()
        return item

    def close_spider(self, spider):
        # Runs once when the spider closes
        self.cursor.close()
        self.db.close()
```
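Building the INSERT with `%` string formatting breaks as soon as a value contains a quote, and `%f` raises a `TypeError` if a field ever comes back as a non-numeric string (for example a dash for a suspended stock; that this API does so is an assumption). Passing the values separately lets the database driver do the quoting. pymysql uses `%s` placeholders with `cursor.execute(sql, args)`; to keep this sketch runnable without a MySQL server it uses the standard-library `sqlite3` module instead, whose placeholder is `?`. The three-column table and the sample rows are hypothetical, cut down from `gupiao`.

```python
import sqlite3


def to_float(v):
    # Non-numeric placeholders become NULL instead of crashing the insert
    try:
        return float(v)
    except (TypeError, ValueError):
        return None


def save_rows(conn, rows):
    cur = conn.cursor()
    cur.execute('DROP TABLE IF EXISTS gupiao')
    cur.execute('CREATE TABLE gupiao(id VARCHAR(10) PRIMARY KEY, '
                'name VARCHAR(30), latest DOUBLE)')
    # The driver substitutes the ? placeholders and escapes quotes for us
    cur.executemany(
        'INSERT INTO gupiao VALUES (?, ?, ?)',
        [(r['f12'], r['f14'], to_float(r['f2'])) for r in rows],
    )
    conn.commit()


if __name__ == '__main__':
    conn = sqlite3.connect(':memory:')
    rows = [
        {'f12': '000001', 'f14': "Stock 'A'", 'f2': 10.5},
        {'f12': '000002', 'f14': 'Stock B', 'f2': '-'},
    ]
    save_rows(conn, rows)
    for row in conn.execute('SELECT * FROM gupiao ORDER BY id'):
        print(row)
```

The name with an embedded single quote would have broken the original formatted SQL; with placeholders it is stored unchanged.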

settings

```python
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36 Edg/118.0.2088.76'

ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

ITEM_PIPELINES = {
    'Practical_work3.pipelines.work2_Pipeline': 620,
}
```

items

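```python
import scrapy


class work2_Item(scrapy.Item):
    # Field names follow the API keys requested in the start URL
    f2 = scrapy.Field()   # latest price
    f3 = scrapy.Field()   # change (%)
    f4 = scrapy.Field()   # change amount
    f5 = scrapy.Field()   # volume
    f6 = scrapy.Field()   # turnover
    f7 = scrapy.Field()   # amplitude (%)
    f12 = scrapy.Field()  # code
    f14 = scrapy.Field()  # name
    f15 = scrapy.Field()  # high
    f16 = scrapy.Field()  # low
    f17 = scrapy.Field()  # today's open
    f18 = scrapy.Field()  # previous close
```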

Spider (run with `scrapy crawl work2`)

```python
import json
import re

import scrapy

from Practical_work3.items import work2_Item


class Work2Spider(scrapy.Spider):
    name = 'work2'
    # allowed_domains = ['25.push2.eastmoney.com']
    start_urls = ['http://25.push2.eastmoney.com/api/qt/clist/get?cb=jQuery1124021313927342030325_1696658971596&pn=1&pz=20&po=1&np=1&ut=bd1d9ddb04089700cf9c27f6f7426281&fltt=2&invt=2&wbp2u=|0|0|0|web&fid=f3&fs=m:0+t:6,m:0+t:80,m:1+t:2,m:1+t:23,m:0+t:81+s:2048&fields=f2,f3,f4,f5,f6,f7,f12,f14,f15,f16,f17,f18&_=1696658971636']

    # Meaning of each API field (for reference)
    columns = {'f2': 'latest price', 'f3': 'change (%)', 'f4': 'change amount', 'f5': 'volume',
               'f6': 'turnover', 'f7': 'amplitude (%)', 'f12': 'code', 'f14': 'name',
               'f15': 'high', 'f16': 'low', 'f17': "today's open", 'f18': 'previous close'}

    def parse(self, response):
        data = response.body.decode()
        # Raw strings avoid invalid-escape warnings; grab the "diff" array of stocks
        diff = re.compile(r'"diff":\[(.*?)\]', re.S).findall(data)
        for one_data in re.compile(r'\{(.*?)\}', re.S).findall(diff[0]):
            data_dic = json.loads('{' + one_data + '}')
            item = work2_Item()  # a fresh item per stock, not one shared object
            for k, v in data_dic.items():
                item[k] = v
            yield item
```
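The start URL carries a `cb=jQuery...` parameter, so the API wraps its JSON in a JavaScript callback (JSONP). Instead of cutting the `diff` array out with regular expressions, one option is to strip the wrapper once and let `json` parse the whole payload. A minimal sketch; `parse_jsonp` and `stock_rows` are hypothetical helpers, the sample response is a shortened, made-up one, and the location of the list under `data.diff` is an assumption consistent with the original regex.

```python
import json
import re


def parse_jsonp(text):
    """Strip a callback(...) wrapper, if present, and parse the JSON inside."""
    match = re.match(r'\s*[\w$.]+\((.*)\)\s*;?\s*$', text, re.S)
    body = match.group(1) if match else text  # plain JSON is parsed as-is
    return json.loads(body)


def stock_rows(text):
    # Assumption: the list of stocks sits under data.diff
    payload = parse_jsonp(text)
    return payload['data']['diff']


if __name__ == '__main__':
    sample = ('jQuery1124_1696658971596({"rc":0,"data":{"total":2,"diff":['
              '{"f2":10.5,"f3":1.2,"f12":"000001","f14":"A"},'
              '{"f2":8.0,"f3":-0.5,"f12":"000002","f14":"B"}]}});')
    for row in stock_rows(sample):
        print(row['f12'], row['f2'])
```

Parsing the full payload also keeps fields such as `total` available if paging through more than one `pn` is needed later.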

Output

Reflections

By consulting references, I learned how to install and configure MySQL on my computer, and how to save the results to MySQL and display them.

Task 3

pipeline

```python
import pymysql

from Practical_work3.items import work3_Item


class work3_Pipeline:
    def open_spider(self, spider):
        # Runs once when the spider starts: connect and (re)create the table
        try:
            self.db = pymysql.connect(host='127.0.0.1', user='root', passwd='1a2345678',
                                      port=3306, charset='utf8', database='ccm')
            self.cursor = self.db.cursor()
            self.cursor.execute('DROP TABLE IF EXISTS qian')
            sql = """CREATE TABLE qian(Currency VARCHAR(33), p1 VARCHAR(15), p2 VARCHAR(15),
                p3 VARCHAR(15), p4 VARCHAR(15), p5 VARCHAR(15), Time VARCHAR(33))"""
            self.cursor.execute(sql)
        except Exception as e:
            print(e)

    def process_item(self, item, spider):
        # Handle items produced by the work3 spider
        if isinstance(item, work3_Item):
            # Parameterized query: pymysql quotes the values itself
            sql = 'INSERT INTO qian VALUES (%s, %s, %s, %s, %s, %s, %s)'
            self.cursor.execute(sql, (item['name'], item['price1'], item['price2'],
                                      item['price3'], item['price4'], item['price5'],
                                      item['date']))
            self.db.commit()
        return item

    def close_spider(self, spider):
        # Runs once when the spider closes
        self.cursor.close()
        self.db.close()
```

settings

```python
BOT_NAME = 'Practical_work3'

SPIDER_MODULES = ['Practical_work3.spiders']
NEWSPIDER_MODULE = 'Practical_work3.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/118.0.0.0 Safari/537.36 Edg/118.0.2088.76'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

LOG_LEVEL = 'ERROR'

ITEM_PIPELINES = {
    'Practical_work3.pipelines.work3_Pipeline': 1555,
}
```

items

```python
import scrapy


class work3_Item(scrapy.Item):
    name = scrapy.Field()
    price1 = scrapy.Field()
    price2 = scrapy.Field()
    price3 = scrapy.Field()
    price4 = scrapy.Field()
    price5 = scrapy.Field()
    date = scrapy.Field()
```

Spider (run with `scrapy crawl work3`)

```python
import scrapy

from Practical_work3.items import work3_Item


class Work3Spider(scrapy.Spider):
    name = 'work3'
    # allowed_domains = ['www.boc.cn']
    start_urls = ['https://www.boc.cn/sourcedb/whpj/']

    def parse(self, response):
        keys = ['name', 'price1', 'price2', 'price3', 'price4', 'price5', 'date']
        for row in response.xpath('//table[@align="left"]/tr'):
            tds = row.xpath('./td')
            if not tds:  # skip rows without <td> cells, e.g. a header row
                continue
            item = work3_Item()
            # string(.) gives each cell's text, including empty cells, so columns stay aligned
            cells = [td.xpath('string(.)').get().strip() for td in tds]
            # zip stops after the 7 keys, so any trailing columns are ignored
            for key, value in zip(keys, cells):
                item[key] = value
            yield item
```
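`str.strip('<td class="pjrq"></td>')` does not remove that tag as a substring: it removes any of the characters `<`, `t`, `d`, space, `c`, `l`, `a`, `s`, `=`, `"`, `p`, `j`, `r`, `q`, `>`, `/` from both ends. That happens to work for numeric cells and dates, but it also eats letters from any cell whose text starts or ends with one of those characters. A small comparison, assuming a hypothetical helper `cell_text` that removes tags with a regular expression (in the spider itself, `td.xpath('string(.)').get()` avoids the string surgery entirely):

```python
import re

OLD_CHARS = '<td class="pjrq"></td>'


def old_strip(cell_html):
    # What the original spider did: strips a *set of characters*, not a tag
    return cell_html.strip(OLD_CHARS).strip()


def cell_text(cell_html):
    # Hypothetical helper: drop every <...> tag, then trim whitespace
    return re.sub(r'<[^>]+>', '', cell_html).strip()


if __name__ == '__main__':
    cells = ['<td>645.27</td>', '<td class="pjrq">2023.10.25</td>', '<td>Dollars</td>']
    for cell in cells:
        print(repr(old_strip(cell)), repr(cell_text(cell)))
```

The first two cells come out the same either way; the third shows the character-set behaviour truncating ordinary text.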

Output

Reflections

This task is very similar to the previous one; only the code, the database table schema, and the XPath selectors needed minor adjustments.
