ZooKeeper notes: implementing distributed locks with ZooKeeper

I. Overview of distributed locks

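Java provides a family of locks built on the AQS framework. Those locks only work inside a single JVM. Sometimes a piece of code must run mutually exclusively, or a resource must be used exclusively, across several machines in a cluster. Single-machine locks cannot help there, and a distributed lock is needed to coordinate the machines. Distributed locks can be built on Redis, on a database, and so on. This post discusses building one on ZooKeeper (zk).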

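Disclaimer: I worked out the distributed locks below from ZooKeeper's features while learning it. They are not an authoritative industry practice. They are offered only as a spark for exchanging ideas, and corrections are very welcome.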

II. Non-reentrant distributed lock (with herd effect, unfair lock)

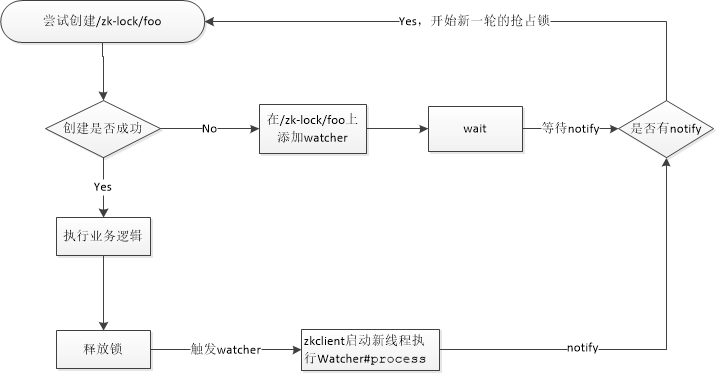

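The most obvious approach is to agree that whoever creates a particular path holds the lock, say /zk-lock/foo. The program on every machine in the cluster tries to create this path as an ephemeral node, and the one that succeeds holds the lock.

Each program that fails first registers a watcher on /zk-lock/foo and then puts itself to sleep. When /zk-lock/foo is deleted, the watcher wakes it up so it can compete for the lock again. This repeats until every thread has held the lock.

Using an ephemeral node also means that if the holder's session ends, for example because its machine crashes, ZooKeeper deletes the node and the lock is released.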

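Now for the machine that wins. Once it holds the lock, it runs its business logic and whatever else it needs, and then calls unlock to release the lock. Releasing the lock here simply means deleting /zk-lock/foo.

As described above, every machine that lost has a watcher on this node. Deleting /zk-lock/foo is therefore exactly what wakes the sleeping machines and lets them compete for the lock again.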
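The create/delete rules can be tried without a running ZooKeeper using the minimal single-JVM model below. The class name `CreateNodeLockModel` is hypothetical. A `ConcurrentMap` stands in for the znode namespace:

- `putIfAbsent` plays the role of creating /zk-lock/foo, which fails if the node already exists.
- `remove(key, value)` plays the role of deleting the node only while it still carries the caller's id.

The model deliberately leaves out watchers, sessions and ephemeral cleanup. It is a sketch of the rules, not of ZooKeeper itself.

```java
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

// Single-JVM model of the "whoever creates the node holds the lock" protocol.
// The map is a stand-in for the ZooKeeper namespace (hypothetical, not a ZooKeeper API).
class CreateNodeLockModel {
    private final ConcurrentMap<String, String> nodes = new ConcurrentHashMap<>();

    // Like create(): succeeds only if the node does not exist yet; the value records the owner id.
    boolean tryLock(String path, String myId) {
        return nodes.putIfAbsent(path, myId) == null;
    }

    // Like "delete the node only if it still carries my id": removes the entry only for the owner.
    boolean unlock(String path, String myId) {
        return nodes.remove(path, myId);
    }

    public static void main(String[] args) {
        CreateNodeLockModel zk = new CreateNodeLockModel();
        String a = UUID.randomUUID().toString();
        String b = UUID.randomUUID().toString();
        System.out.println(zk.tryLock("/zk-lock/foo", a)); // true: a creates the node and wins
        System.out.println(zk.tryLock("/zk-lock/foo", b)); // false: b would now watch the node and sleep
        System.out.println(zk.unlock("/zk-lock/foo", b));  // false: b is not the holder
        System.out.println(zk.unlock("/zk-lock/foo", a));  // true: node deleted, waiters would be woken
        System.out.println(zk.tryLock("/zk-lock/foo", b)); // true: b wins the next round
    }
}
```

One difference from the real implementation is worth noting. `remove(key, value)` checks the owner and deletes in a single atomic step. The ZooKeeper-based `unlock()` in this post reads the node with `getData()` and then deletes it in a second call.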

Flowchart of the process above:

Now the code, starting with the interface for the distributed lock:

```java
package cc11001100.zookeeper.lock;

/**
 * @author CC11001100
 */
public interface DistributeLock {

    void lock() throws InterruptedException;

    void unlock();

    /**
     * Releases the resources this distributed lock needs, such as the ZooKeeper connection
     */
    void releaseResource();

}
```
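Callers are expected to pair `lock()` with `unlock()` and to call `releaseResource()` once they are done with the lock object. The sketch below shows that pattern so it runs without ZooKeeper. It rests on these assumptions:

- `withLock` is a hypothetical helper.
- `InMemoryLock` is a hypothetical in-JVM stand-in backed by a `Semaphore`. It is not distributed.
- The interface is redeclared so the block compiles on its own.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Same shape as the DistributeLock interface above, redeclared so this sketch is self-contained.
interface DistributeLock {
    void lock() throws InterruptedException;
    void unlock();
    void releaseResource();
}

// Hypothetical single-JVM stand-in used only for this sketch; it does NOT coordinate machines.
class InMemoryLock implements DistributeLock {
    private final Semaphore permit = new Semaphore(1);
    public void lock() throws InterruptedException { permit.acquire(); }
    public void unlock() { permit.release(); }
    public void releaseResource() { /* nothing to close for the in-memory stand-in */ }
    boolean isFree() { return permit.availablePermits() == 1; }
}

class LockTemplate {
    // Hypothetical helper: runs the task while holding the lock and always unlocks, even if the task throws.
    static <T> T withLock(DistributeLock lock, Supplier<T> task) throws InterruptedException {
        lock.lock();
        try {
            return task.get();
        } finally {
            lock.unlock();
        }
    }

    public static void main(String[] args) throws InterruptedException {
        InMemoryLock lock = new InMemoryLock();
        try {
            System.out.println(withLock(lock, () -> "done")); // prints "done"
        } finally {
            lock.releaseResource(); // for the ZooKeeper locks this closes the session
        }
    }
}
```

Putting `unlock()` in a `finally` block releases the lock even when the task throws. The test programs in this post call `unlock()` only on the normal path. On failure they rely on `releaseResource()` closing the ZooKeeper session, which also removes the ephemeral lock node.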

An implementation of the lock that follows the flow above:

```java
package cc11001100.zookeeper.lock;

import cc11001100.zookeeper.utils.ZooKeeperUtil;
import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.ZooDefs;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;

import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.UUID;
import java.util.concurrent.CountDownLatch;

/**
 * Non-reentrant distributed lock with herd effect (unfair lock)
 *
 * @author CC11001100
 */
public class NotReentrantDistributeLockWithHerdEffect implements DistributeLock {

    private final String lockBasePath;
    private final String lockFullPath;
    private final ZooKeeper zooKeeper;
    // Written into the lock node so that unlock() only deletes a node this instance created
    private final String myId;
    private final String myName;

    public NotReentrantDistributeLockWithHerdEffect(String lockBasePath, String lockName) throws IOException {
        this.lockBasePath = lockBasePath;
        this.lockFullPath = lockBasePath + "/" + lockName;
        this.zooKeeper = ZooKeeperUtil.getZooKeeper();
        this.myId = UUID.randomUUID().toString();
        this.myName = Thread.currentThread().getName();
        createLockBasePath();
    }

    private void createLockBasePath() {
        try {
            if (zooKeeper.exists(lockBasePath, null) == null) {
                zooKeeper.create(lockBasePath, "".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
            }
        } catch (KeeperException | InterruptedException ignored) {
            // e.g. another client created the base path first
        }
    }

    @Override
    public void lock() throws InterruptedException {
        while (true) {
            try {
                // Whoever manages to create the ephemeral node holds the lock
                zooKeeper.create(lockFullPath, myId.getBytes(StandardCharsets.UTF_8),
                        ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL);
                System.out.println(myName + ": get lock");
                return;
            } catch (KeeperException.NodeExistsException e) {
                // The node already exists: watch it and compete again once it is deleted
            } catch (KeeperException e) {
                throw new IllegalStateException("failed to acquire lock " + lockFullPath, e);
            }
            CountDownLatch latch = new CountDownLatch(1);
            Stat stat;
            try {
                stat = zooKeeper.exists(lockFullPath, event -> {
                    latch.countDown();
                    System.out.println(myName + ": wake");
                });
            } catch (KeeperException e) {
                throw new IllegalStateException("failed to watch lock " + lockFullPath, e);
            }
            // exists() returns null if the holder released the lock in the meantime: retry at once.
            // Otherwise sleep until the watcher fires; unlike wait()/notify(), a latch cannot
            // lose a notification that arrives before await() is called.
            if (stat != null) {
                latch.await();
            }
        }
    }

    @Override
    public void unlock() {
        try {
            byte[] nodeBytes = zooKeeper.getData(lockFullPath, false, null);
            String currentHoldLockNodeId = new String(nodeBytes, StandardCharsets.UTF_8);
            // Only delete the node when this instance is the current holder
            if (myId.equals(currentHoldLockNodeId)) {
                zooKeeper.delete(lockFullPath, -1);
            }
        } catch (KeeperException | InterruptedException e) {
            e.printStackTrace();
        }
        System.out.println(myName + ": releaseResource lock");
    }

    @Override
    public void releaseResource() {
        try {
            // Close the ZooKeeper connection
            zooKeeper.close();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

}
```

The test code:

```java
package cc11001100.zookeeper.lock;

import java.io.IOException;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.concurrent.TimeUnit;

/**
 * @author CC11001100
 */
public class NotReentrantDistributeLockWithHerdEffectTest {

    private static String now() {
        return LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss"));
    }

    public static void main(String[] args) {
        int nodeNum = 10;
        for (int i = 0; i < nodeNum; i++) {
            new Thread(() -> {
                DistributeLock lock = null;
                try {
                    lock = new NotReentrantDistributeLockWithHerdEffect("/zk-lock", "foo");
                    lock.lock();
                    String myName = Thread.currentThread().getName();
                    System.out.println(myName + ": hold lock, now=" + now());
                    TimeUnit.SECONDS.sleep(3);
                    lock.unlock();
                } catch (IOException | InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    if (lock != null) {
                        lock.releaseResource();
                    }
                }
            }, "thread-name-" + i).start();
        }
    }

}
```

Output:

```text
thread-name-8: get lock
thread-name-8: hold lock, now=2019-01-14 13:31:48
thread-name-8: releaseResource lock
thread-name-2: wake
thread-name-5: wake
thread-name-3: wake
thread-name-1: wake
thread-name-4: wake
thread-name-9: wake
thread-name-0: wake
thread-name-7: wake
thread-name-6: wake
thread-name-2: get lock
thread-name-2: hold lock, now=2019-01-14 13:31:51
thread-name-7: wake
thread-name-2: releaseResource lock
thread-name-6: wake
thread-name-0: wake
thread-name-9: wake
thread-name-1: wake
thread-name-3: wake
thread-name-5: wake
thread-name-4: wake
thread-name-0: get lock
thread-name-0: hold lock, now=2019-01-14 13:31:54
thread-name-6: wake
thread-name-1: wake
thread-name-7: wake
thread-name-4: wake
thread-name-3: wake
thread-name-5: wake
thread-name-0: releaseResource lock
thread-name-9: wake
thread-name-1: get lock
thread-name-1: hold lock, now=2019-01-14 13:31:57
thread-name-9: wake
thread-name-6: wake
thread-name-7: wake
thread-name-3: wake
thread-name-5: wake
thread-name-4: wake
thread-name-1: releaseResource lock
thread-name-9: get lock
thread-name-9: hold lock, now=2019-01-14 13:32:00
thread-name-7: wake
thread-name-4: wake
thread-name-5: wake
thread-name-3: wake
thread-name-9: releaseResource lock
thread-name-6: wake
thread-name-3: get lock
thread-name-3: hold lock, now=2019-01-14 13:32:03
thread-name-6: wake
thread-name-5: wake
thread-name-4: wake
thread-name-3: releaseResource lock
thread-name-7: wake
thread-name-6: get lock
thread-name-6: hold lock, now=2019-01-14 13:32:06
thread-name-7: wake
thread-name-6: releaseResource lock
thread-name-4: wake
thread-name-5: wake
thread-name-7: get lock
thread-name-7: hold lock, now=2019-01-14 13:32:09
thread-name-4: wake
thread-name-7: releaseResource lock
thread-name-5: wake
thread-name-5: get lock
thread-name-5: hold lock, now=2019-01-14 13:32:12
thread-name-5: releaseResource lock
thread-name-4: wake
thread-name-4: get lock
thread-name-4: hold lock, now=2019-01-14 13:32:15
thread-name-4: releaseResource lock
```

III. Non-reentrant distributed lock (no herd effect, fair lock)

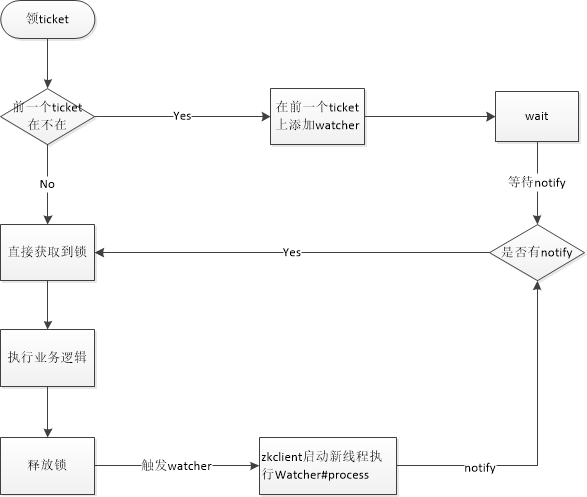

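The code above provides the basic functionality of a lock, but it has a serious problem. Releasing the lock wakes every other machine, yet only one of them can win, and the rest must go back to sleep. This gets worse as the number of machines grows. Dozens of machines are woken, and still only one of them gets the lock, about as hard as getting a train ticket on 12306, China's railway ticketing site. That is simply how an unfair lock works: everyone scrambles at once, which is what makes it unfair. This phenomenon is called the herd effect.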
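A back-of-the-envelope count makes the cost concrete. It rests on two simplifying assumptions:

- Every machine is already waiting when the first release happens.
- Each machine takes the lock exactly once.

Under these assumptions the single-node scheme wakes every remaining waiter on each release. A queue would wake only the next one. The class name `WakeupCount` is hypothetical.

```java
// Counts watcher wake-ups for n machines that each take the lock once (simplified model).
class WakeupCount {
    // Herd: all waiters watch the same node, so each release wakes everyone still waiting.
    static long herd(int n) {
        long wakeups = 0;
        for (int waiting = n - 1; waiting > 0; waiting--) {
            wakeups += waiting;
        }
        return wakeups; // equals n * (n - 1) / 2
    }

    // Queue: each waiter watches only its predecessor, so each release (except the last) wakes one machine.
    static long queue(int n) {
        return Math.max(0, n - 1);
    }

    public static void main(String[] args) {
        for (int n : new int[] {10, 100, 1000}) {
            System.out.printf("n=%d herd=%d queue=%d%n", n, herd(n), queue(n));
        }
    }
}
```

It prints `n=10 herd=45 queue=9`, `n=100 herd=4950 queue=99` and `n=1000 herd=499500 queue=999`. The herd cost grows quadratically with the number of machines, while the queue grows linearly.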

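One way around this is to borrow the idea behind the CLH queue in Java's AQS framework and make the machines queue up. Every machine that wants the lock first takes a number, its ticket. Tickets are ordered and increase by 1, so when the holder releases the lock, only the first machine behind it needs to be woken.

Concretely, after taking its ticket each machine adds a watcher on the ticket of the machine directly in front of it. That watcher wakes it when the machine in front finishes its business logic and releases the lock. If there is nobody in front, it is already this machine's turn and it holds the lock immediately.

Because tickets increase by 1, the ticket in front can be computed from one's own ticket, so there is no need to fetch all the children of the parent node. The tickets are handed out with ZooKeeper's ephemeral sequential nodes. The sequence suffix guarantees the numbers are ordered. Because the nodes are ephemeral, a lock holder that crashes releases the lock automatically when its session ends.

Note, however, that computing the ticket in front as myTicket - 1 assumes the tickets have no gaps. Two things can break that:

- ZooKeeper keeps the sequence counter on the parent znode, so sequential nodes created under the same parent for other lock names also consume numbers.
- If a queued client's session expires, its node disappears.

In either case, node myTicket - 1 can be missing while an earlier ticket still holds the lock.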
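The ticket arithmetic is plain string handling, because ZooKeeper appends a 10-digit, zero-padded counter to the name of a sequential node. This sketch mirrors `extractMyTicket()` and `buildPath()` from the implementation that follows. It rests on these assumptions:

- The class name `Tickets` is hypothetical.
- The sample path is made up.
- It strips the prefix with `substring` rather than `replace`, which gives the same result for names that start with the prefix.

```java
// Parsing a ticket from a sequential node path and building the predecessor's path.
class Tickets {
    // Strips the parent path and the lock prefix, e.g. "/zk-lock/bar0000000171" -> 171.
    static int extractTicket(String path, String prefix) {
        String name = path.substring(path.lastIndexOf('/') + 1);
        return Integer.parseInt(name.substring(prefix.length()));
    }

    // Prefix followed by a 10-digit zero-padded number, the same format ZooKeeper uses.
    static String buildPath(String lockFullPath, int ticket) {
        return String.format("%s%010d", lockFullPath, ticket);
    }

    public static void main(String[] args) {
        String created = "/zk-lock/bar0000000171"; // shape of a path returned by an EPHEMERAL_SEQUENTIAL create
        int myTicket = extractTicket(created, "bar");
        System.out.println(myTicket);                                // 171
        System.out.println(buildPath("/zk-lock/bar", myTicket - 1)); // /zk-lock/bar0000000170
    }
}
```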

Flowchart of the process above:

Implementation:

```java
package cc11001100.zookeeper.lock;

import cc11001100.zookeeper.utils.ZooKeeperUtil;
import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.ZooDefs;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;

import java.io.IOException;
import java.util.concurrent.CountDownLatch;

/**
 * Non-reentrant lock without herd effect (fair lock)
 *
 * @author CC11001100
 */
public class NotReentrantDistributeLock implements DistributeLock {

    private final String lockBasePath;
    private final String lockPrefix;
    private final String lockFullPath;
    private final ZooKeeper zooKeeper;
    private final String myName;
    private int myTicket;

    public NotReentrantDistributeLock(String lockBasePath, String lockPrefix) throws IOException {
        this.lockBasePath = lockBasePath;
        this.lockPrefix = lockPrefix;
        this.lockFullPath = lockBasePath + "/" + lockPrefix;
        this.zooKeeper = ZooKeeperUtil.getZooKeeper();
        this.myName = Thread.currentThread().getName();
        createLockBasePath();
    }

    private void createLockBasePath() {
        try {
            if (zooKeeper.exists(lockBasePath, null) == null) {
                zooKeeper.create(lockBasePath, "".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
            }
        } catch (KeeperException | InterruptedException ignored) {
            // e.g. another client created the base path first
        }
    }

    @Override
    public void lock() throws InterruptedException {
        log("begin get lock");
        try {
            // Take a ticket: ZooKeeper appends an increasing sequence number to the node name
            String path = zooKeeper.create(lockFullPath, "".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE,
                    CreateMode.EPHEMERAL_SEQUENTIAL);
            myTicket = extractMyTicket(path);
            // Assumes tickets have no gaps, so the machine in front holds ticket myTicket - 1
            String previousNode = buildPath(myTicket - 1);
            while (true) {
                CountDownLatch latch = new CountDownLatch(1);
                Stat exists = zooKeeper.exists(previousNode, event -> {
                    latch.countDown();
                    log("wake");
                });
                if (exists == null) {
                    break; // nobody in front: the lock is ours
                }
                // Sleep until the watcher fires, then check again rather than trusting the event type
                latch.await();
            }
            log("get lock success");
        } catch (KeeperException e) {
            throw new IllegalStateException("failed to acquire lock " + lockFullPath, e);
        }
    }

    private int extractMyTicket(String path) {
        int splitIndex = path.lastIndexOf("/");
        return Integer.parseInt(path.substring(splitIndex + 1).replace(lockPrefix, ""));
    }

    private String buildPath(int ticket) {
        return String.format("%s%010d", lockFullPath, ticket);
    }

    @Override
    public void unlock() {
        log("begin release lock");
        try {
            // Deleting our ticket wakes the machine queued directly behind us
            zooKeeper.delete(buildPath(myTicket), -1);
        } catch (KeeperException | InterruptedException e) {
            e.printStackTrace();
        }
        log("end release lock");
    }

    @Override
    public void releaseResource() {
        try {
            // Close the ZooKeeper connection
            zooKeeper.close();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

    private void log(String msg) {
        System.out.printf("[%d] %s : %s\n", myTicket, myName, msg);
    }

}
```
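The implementation above watches myTicket - 1, which is only correct while the tickets have no gaps. The lock recipe in the ZooKeeper documentation avoids that assumption with a different loop:

1. Call `getChildren()`.
2. If this client's node has the lowest sequence number, it holds the lock.
3. Otherwise, call `exists()` with a watch on the node with the next-lowest sequence number.
4. After every wake-up, go back to step 1.

Below is a sketch of just the selection step as a pure function, so it runs without a server. The class name `PredecessorPicker` is hypothetical, and the child names are made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

class PredecessorPicker {
    // Returns the child this client should watch, or null if its own node is the lowest (it holds the lock).
    // children: names as getChildren() returns them, in no particular order.
    static String nodeToWatch(List<String> children, String prefix, String myNode) {
        List<String> queue = new ArrayList<>();
        for (String child : children) {
            // Keep only this lock's tickets: the prefix followed by a 10-digit sequence suffix
            if (child.startsWith(prefix) && child.length() == prefix.length() + 10) {
                queue.add(child);
            }
        }
        // Same prefix and fixed-width suffix, so lexicographic order equals ticket order
        Collections.sort(queue);
        int myIndex = queue.indexOf(myNode);
        if (myIndex < 0) {
            throw new IllegalStateException("own node missing: " + myNode);
        }
        return myIndex == 0 ? null : queue.get(myIndex - 1);
    }

    public static void main(String[] args) {
        // bar0000000171 is gone (say its session expired) while bar0000000170 still holds the lock
        List<String> children = Arrays.asList("bar0000000172", "foo", "bar0000000170");
        System.out.println(nodeToWatch(children, "bar", "bar0000000172")); // bar0000000170
        System.out.println(nodeToWatch(children, "bar", "bar0000000170")); // null
    }
}
```

In the gap scenario, watching `bar0000000171` would find no node and grant the lock while `bar0000000170` still holds it. Picking the previous existing node keeps the queue correct. The price is one `getChildren()` call per check.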

Test code:

```java
package cc11001100.zookeeper.lock;

import java.io.IOException;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.concurrent.TimeUnit;

/**
 * @author CC11001100
 */
public class NotReentrantDistributeLockTest {

    private static String now() {
        return LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss"));
    }

    public static void main(String[] args) {
        int nodeNum = 10;
        for (int i = 0; i < nodeNum; i++) {
            new Thread(() -> {
                DistributeLock lock = null;
                try {
                    lock = new NotReentrantDistributeLock("/zk-lock", "bar");
                    lock.lock();
                    String myName = Thread.currentThread().getName();
                    System.out.println(myName + ": get lock success, now=" + now());
                    TimeUnit.SECONDS.sleep(3);
                    lock.unlock();
                } catch (IOException | InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    if (lock != null) {
                        lock.releaseResource();
                    }
                }
            }, "thread-name-" + i).start();
        }
    }

}
```

Output:

```text
[0] thread-name-6 : begin get lock
[0] thread-name-3 : begin get lock
[0] thread-name-5 : begin get lock
[0] thread-name-4 : begin get lock
[0] thread-name-8 : begin get lock
[0] thread-name-9 : begin get lock
[0] thread-name-2 : begin get lock
[0] thread-name-7 : begin get lock
[0] thread-name-1 : begin get lock
[0] thread-name-0 : begin get lock
[170] thread-name-1 : get lock success
thread-name-1: get lock success, now=2019-01-14 17:59:56
[170] thread-name-1 : begin release lock
[170] thread-name-1 : end release lock
[171] thread-name-9 : wake
[171] thread-name-9 : get lock success
thread-name-9: get lock success, now=2019-01-14 17:59:59
[171] thread-name-9 : begin release lock
[171] thread-name-9 : end release lock
[172] thread-name-4 : wake
[172] thread-name-4 : get lock success
thread-name-4: get lock success, now=2019-01-14 18:00:02
[172] thread-name-4 : begin release lock
[173] thread-name-0 : wake
[172] thread-name-4 : end release lock
[173] thread-name-0 : get lock success
thread-name-0: get lock success, now=2019-01-14 18:00:05
[173] thread-name-0 : begin release lock
[174] thread-name-6 : wake
[173] thread-name-0 : end release lock
[174] thread-name-6 : get lock success
thread-name-6: get lock success, now=2019-01-14 18:00:08
[174] thread-name-6 : begin release lock
[175] thread-name-7 : wake
[174] thread-name-6 : end release lock
[175] thread-name-7 : get lock success
thread-name-7: get lock success, now=2019-01-14 18:00:11
[175] thread-name-7 : begin release lock
[176] thread-name-3 : wake
[175] thread-name-7 : end release lock
[176] thread-name-3 : get lock success
thread-name-3: get lock success, now=2019-01-14 18:00:14
[176] thread-name-3 : begin release lock
[177] thread-name-8 : wake
[176] thread-name-3 : end release lock
[177] thread-name-8 : get lock success
thread-name-8: get lock success, now=2019-01-14 18:00:17
[177] thread-name-8 : begin release lock
[178] thread-name-2 : wake
[177] thread-name-8 : end release lock
[178] thread-name-2 : get lock success
thread-name-2: get lock success, now=2019-01-14 18:00:20
[178] thread-name-2 : begin release lock
[179] thread-name-5 : wake
[178] thread-name-2 : end release lock
[179] thread-name-5 : get lock success
thread-name-5: get lock success, now=2019-01-14 18:00:23
[179] thread-name-5 : begin release lock
[179] thread-name-5 : end release lock
```

IV. Reentrant distributed lock (no herd effect, fair lock)

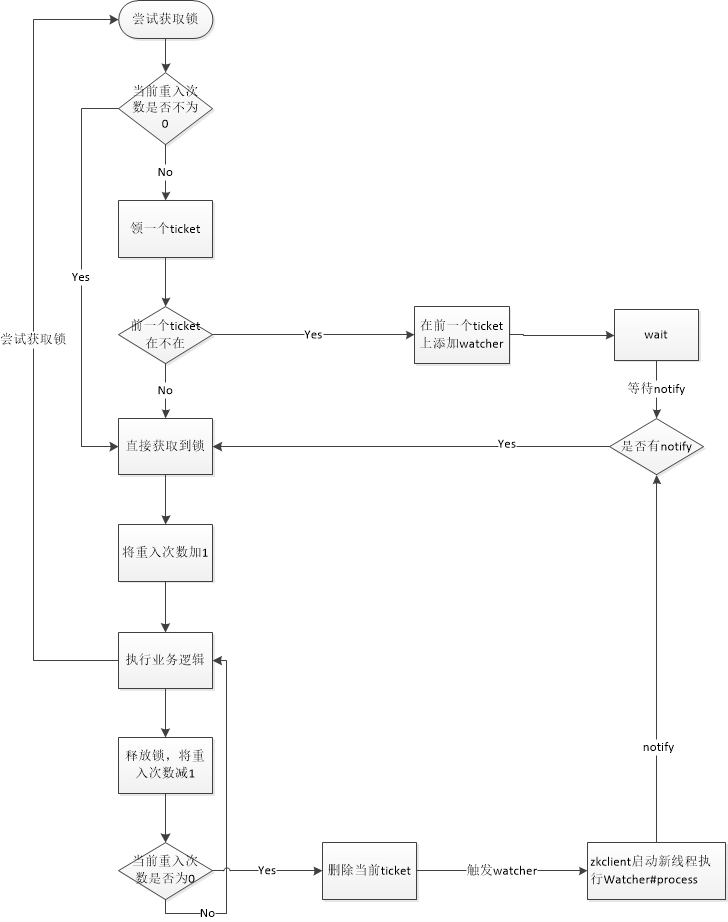

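Our lock keeps getting better, but one serious problem remains: it is not reentrant. Reentrancy means acquiring the lock recursively. When a holder tries to acquire the lock again, a reentrant lock succeeds immediately and increments a reentrancy count. Each release decrements the count, and the lock is only fully released when the count drops to 0.

The locks above are non-reentrant, so a recursive acquisition deadlocks and waits forever, which is clearly unacceptable. This section shows how to turn the non-reentrant lock from the previous section into a reentrant one.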

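My idea is simple. The reentrancy count has to be stored somewhere. Other machines do not need to know how many times the holder has re-entered, only that it holds the lock. So the count can simply be a member variable of the DistributeLock implementation, say reentrantCount.

On each lock attempt, first check whether this variable is non-zero. If it is, this machine already holds the lock, and by the definition of reentrancy it only has to increment the count. Only when reentrantCount is 0 does it have to queue for the lock.

Releasing the lock also works off reentrantCount. Each release decrements it, and if the result is still non-zero, we are still inside a nested acquisition and nothing else needs to happen. Once it reaches 0, every reentrant acquisition has been released, so this lock's ticket node is deleted. The next machine in the queue has a watcher on this ticket, so deleting it wakes that machine.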
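The local bookkeeping described above can be checked without ZooKeeper. In this sketch the ZooKeeper calls are replaced by two counters. They record when the real lock would create its sequential node and when it would delete it. The class name `ReentrancyCounter` and its counter fields are hypothetical.

The sketch also rejects an `unlock()` without a matching `lock()`. The description does not mention that guard, but it keeps the count from going negative.

```java
// Models only the reentrancy bookkeeping; the ZooKeeper create/delete calls become counters.
class ReentrancyCounter {
    private int reentrantCount = 0;
    int nodesCreated = 0; // times lock() would create a sequential node and queue
    int nodesDeleted = 0; // times unlock() would delete the ticket node (waking the next in line)

    void lock() {
        if (reentrantCount == 0) {
            nodesCreated++; // first acquisition: queue up in ZooKeeper and wait for our turn
        }
        reentrantCount++;   // re-entry: just count it, no network round trip
    }

    void unlock() {
        if (reentrantCount == 0) {
            throw new IllegalMonitorStateException("unlock() without a matching lock()");
        }
        reentrantCount--;
        if (reentrantCount == 0) {
            nodesDeleted++; // last release: delete the ticket node
        }
    }

    public static void main(String[] args) {
        ReentrancyCounter c = new ReentrancyCounter();
        c.lock();   // count 1, node created
        c.lock();   // count 2, no ZooKeeper call
        c.unlock(); // count 1, node kept
        c.unlock(); // count 0, node deleted
        System.out.println(c.nodesCreated + " " + c.nodesDeleted); // 1 1
    }
}
```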

Flowchart of the process above:

Implementation:

```java
package cc11001100.zookeeper.lock;

import cc11001100.zookeeper.utils.ZooKeeperUtil;
import org.apache.zookeeper.CreateMode;
import org.apache.zookeeper.KeeperException;
import org.apache.zookeeper.ZooDefs;
import org.apache.zookeeper.ZooKeeper;
import org.apache.zookeeper.data.Stat;

import java.io.IOException;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.concurrent.CountDownLatch;

/**
 * Reentrant distributed lock without herd effect (fair lock)
 *
 * @author CC11001100
 */
public class ReentrantDistributeLock implements DistributeLock {

    private final String lockBasePath;
    private final String lockPrefix;
    private final String lockFullPath;
    private final ZooKeeper zooKeeper;
    private final String myName;
    private int myTicket;

    // The reentrancy count is kept in a local variable instead of on ZooKeeper: updating a znode
    // costs a network round trip, and other nodes only need to know that somebody holds the lock,
    // not how many times it has been re-entered. It is a plain int, so each lock instance is
    // assumed to be used by a single thread.
    private int reentrantCount = 0;

    public ReentrantDistributeLock(String lockBasePath, String lockName) throws IOException {
        this.lockBasePath = lockBasePath;
        this.lockPrefix = lockName;
        this.lockFullPath = lockBasePath + "/" + lockName;
        this.zooKeeper = ZooKeeperUtil.getZooKeeper();
        this.myName = Thread.currentThread().getName();
        createLockBasePath();
    }

    private void createLockBasePath() {
        try {
            if (zooKeeper.exists(lockBasePath, null) == null) {
                zooKeeper.create(lockBasePath, "".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);
            }
        } catch (KeeperException | InterruptedException ignored) {
            // e.g. another client created the base path first
        }
    }

    @Override
    public void lock() throws InterruptedException {
        log("begin get lock");
        // A non-zero reentrantCount means this instance already holds the lock: no need to wait,
        // just count the re-entry
        if (reentrantCount != 0) {
            reentrantCount++;
            log("get lock success");
            return;
        }
        // Not holding the lock yet: take a ticket and watch the previous node for its release
        try {
            String path = zooKeeper.create(lockFullPath, "".getBytes(), ZooDefs.Ids.OPEN_ACL_UNSAFE,
                    CreateMode.EPHEMERAL_SEQUENTIAL);
            myTicket = extractMyTicket(path);
            // Assumes tickets have no gaps, so the machine in front holds ticket myTicket - 1
            String previousNode = buildPathByTicket(myTicket - 1);
            while (true) {
                CountDownLatch latch = new CountDownLatch(1);
                Stat exists = zooKeeper.exists(previousNode, event -> {
                    latch.countDown();
                    log("wake");
                });
                if (exists == null) {
                    break; // nobody in front: the lock is ours
                }
                // Sleep until the watcher fires, then check again rather than trusting the event type
                latch.await();
            }
            reentrantCount++;
            log("get lock success");
        } catch (KeeperException e) {
            throw new IllegalStateException("failed to acquire lock " + lockFullPath, e);
        }
    }

    private int extractMyTicket(String path) {
        int splitIndex = path.lastIndexOf("/");
        return Integer.parseInt(path.substring(splitIndex + 1).replace(lockPrefix, ""));
    }

    private String buildPathByTicket(int ticket) {
        return String.format("%s%010d", lockFullPath, ticket);
    }

    @Override
    public void unlock() {
        log("begin release lock");
        if (reentrantCount == 0) {
            throw new IllegalMonitorStateException("unlock() called without holding the lock");
        }
        // Each unlock decrements the count; a non-zero result means we are still inside a nested acquisition
        reentrantCount--;
        if (reentrantCount != 0) {
            log("end release lock");
            return;
        }
        // Only when the count reaches 0 is the node deleted and the lock really released
        try {
            zooKeeper.delete(buildPathByTicket(myTicket), -1);
        } catch (KeeperException | InterruptedException e) {
            e.printStackTrace();
        }
        log("end release lock");
    }

    @Override
    public void releaseResource() {
        try {
            // Close the ZooKeeper connection
            zooKeeper.close();
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }

    private void log(String msg) {
        System.out.printf("[%s], ticket=%d, reentrantCount=%d, threadName=%s, msg=%s\n",
                now(), myTicket, reentrantCount, myName, msg);
    }

    private String now() {
        return LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss"));
    }

}
```

Test code:

```java
package cc11001100.zookeeper.lock;

import java.io.IOException;
import java.util.Random;
import java.util.concurrent.TimeUnit;

/**
 * Tests the distributed reentrant lock: each holder re-enters the lock a random number of times,
 * to see whether anything goes wrong
 *
 * @author CC11001100
 */
public class ReentrantDistributeLockTest {

    public static void main(String[] args) {
        int nodeNum = 10;
        for (int i = 0; i < nodeNum; i++) {
            new Thread(() -> {
                DistributeLock lock = null;
                try {
                    lock = new ReentrantDistributeLock("/zk-lock", "bar");
                    lock.lock();
                    TimeUnit.SECONDS.sleep(1);
                    int reentrantTimes = new Random().nextInt(10);
                    int reentrantCount = 0;
                    for (int j = 0; j < reentrantTimes; j++) {
                        lock.lock();
                        reentrantCount++;
                        if (Math.random() < 0.5) {
                            lock.unlock();
                            reentrantCount--;
                        }
                    }
                    while (reentrantCount-- > 0) {
                        lock.unlock();
                    }
                    lock.unlock();
                } catch (IOException | InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    if (lock != null) {
                        lock.releaseResource();
                    }
                }
            }, "thread-name-" + i).start();
        }
    }

}
```

Output of one run:

// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-4, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-5, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, 
reentrantCount=0, threadName=thread-name-0, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-6, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-1, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-7, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-9, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-3, msg=begin get lock// [2019-01-14 17:57:43], ticket=0, reentrantCount=0, threadName=thread-name-2, msg=begin get lock// [2019-01-14 17:57:43], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=get lock success// [2019-01-14 17:57:44], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=begin get lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=get lock success// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=begin release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=end release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=begin get lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=get lock success// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=begin get lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=3, threadName=thread-name-0, msg=get lock success// [2019-01-14 17:57:44], ticket=160, reentrantCount=3, threadName=thread-name-0, msg=begin release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=end release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=begin get lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=3, threadName=thread-name-0, msg=get lock success// [2019-01-14 17:57:44], 
ticket=160, reentrantCount=3, threadName=thread-name-0, msg=begin release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=end release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=2, threadName=thread-name-0, msg=begin release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=end release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=1, threadName=thread-name-0, msg=begin release lock// [2019-01-14 17:57:44], ticket=160, reentrantCount=0, threadName=thread-name-0, msg=end release lock// [2019-01-14 17:57:44], ticket=161, reentrantCount=0, threadName=thread-name-6, msg=wake// [2019-01-14 17:57:44], ticket=161, reentrantCount=1, threadName=thread-name-6, msg=get lock success// [2019-01-14 17:57:45], ticket=161, reentrantCount=1, threadName=thread-name-6, msg=begin get lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=2, threadName=thread-name-6, msg=get lock success// [2019-01-14 17:57:45], ticket=161, reentrantCount=2, threadName=thread-name-6, msg=begin get lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=3, threadName=thread-name-6, msg=get lock success// [2019-01-14 17:57:45], ticket=161, reentrantCount=3, threadName=thread-name-6, msg=begin release lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=2, threadName=thread-name-6, msg=end release lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=2, threadName=thread-name-6, msg=begin release lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=1, threadName=thread-name-6, msg=end release lock// [2019-01-14 17:57:45], ticket=161, reentrantCount=1, threadName=thread-name-6, msg=begin release lock// [2019-01-14 17:57:45], ticket=162, reentrantCount=0, threadName=thread-name-5, msg=wake// [2019-01-14 17:57:45], ticket=161, reentrantCount=0, threadName=thread-name-6, msg=end release lock// [2019-01-14 17:57:45], ticket=162, reentrantCount=1, threadName=thread-name-5, msg=get lock 
success// [2019-01-14 17:57:46], ticket=162, reentrantCount=1, threadName=thread-name-5, msg=begin release lock// [2019-01-14 17:57:46], ticket=162, reentrantCount=0, threadName=thread-name-5, msg=end release lock// [2019-01-14 17:57:46], ticket=163, reentrantCount=0, threadName=thread-name-8, msg=wake// [2019-01-14 17:57:46], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=3, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=3, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=4, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=4, threadName=thread-name-8, msg=begin get lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=5, threadName=thread-name-8, msg=get lock success// [2019-01-14 17:57:47], ticket=163, reentrantCount=5, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=4, threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=4, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=3, 
threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=3, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=2, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=163, reentrantCount=1, threadName=thread-name-8, msg=begin release lock// [2019-01-14 17:57:47], ticket=164, reentrantCount=0, threadName=thread-name-2, msg=wake// [2019-01-14 17:57:47], ticket=163, reentrantCount=0, threadName=thread-name-8, msg=end release lock// [2019-01-14 17:57:47], ticket=164, reentrantCount=1, threadName=thread-name-2, msg=get lock success// [2019-01-14 17:57:48], ticket=164, reentrantCount=1, threadName=thread-name-2, msg=begin release lock// [2019-01-14 17:57:48], ticket=165, reentrantCount=0, threadName=thread-name-9, msg=wake// [2019-01-14 17:57:48], ticket=164, reentrantCount=0, threadName=thread-name-2, msg=end release lock// [2019-01-14 17:57:48], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=get lock success// [2019-01-14 17:57:49], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=begin get lock// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=get lock success// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=begin release lock// [2019-01-14 17:57:49], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=end release lock// [2019-01-14 17:57:49], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=begin get lock// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=get lock success// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=begin get lock// [2019-01-14 17:57:49], 
ticket=165, reentrantCount=3, threadName=thread-name-9, msg=get lock success
// [2019-01-14 17:57:49], ticket=165, reentrantCount=3, threadName=thread-name-9, msg=begin release lock
// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=end release lock
// [2019-01-14 17:57:49], ticket=165, reentrantCount=2, threadName=thread-name-9, msg=begin release lock
// [2019-01-14 17:57:49], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=end release lock
// [2019-01-14 17:57:49], ticket=165, reentrantCount=1, threadName=thread-name-9, msg=begin release lock
// [2019-01-14 17:57:49], ticket=166, reentrantCount=0, threadName=thread-name-1, msg=wake
// [2019-01-14 17:57:49], ticket=165, reentrantCount=0, threadName=thread-name-9, msg=end release lock
// [2019-01-14 17:57:49], ticket=166, reentrantCount=1, threadName=thread-name-1, msg=get lock success
// [2019-01-14 17:57:50], ticket=166, reentrantCount=1, threadName=thread-name-1, msg=begin release lock
// [2019-01-14 17:57:50], ticket=167, reentrantCount=0, threadName=thread-name-4, msg=wake
// [2019-01-14 17:57:50], ticket=166, reentrantCount=0, threadName=thread-name-1, msg=end release lock
// [2019-01-14 17:57:50], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=get lock success
// [2019-01-14 17:57:51], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=begin get lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=get lock success
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=begin release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=end release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=begin get lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=get lock success
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=begin get lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=3, threadName=thread-name-4, msg=get lock success
// [2019-01-14 17:57:51], ticket=167, reentrantCount=3, threadName=thread-name-4, msg=begin release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=end release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=2, threadName=thread-name-4, msg=begin release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=end release lock
// [2019-01-14 17:57:51], ticket=167, reentrantCount=1, threadName=thread-name-4, msg=begin release lock
// [2019-01-14 17:57:51], ticket=168, reentrantCount=0, threadName=thread-name-3, msg=wake
// [2019-01-14 17:57:51], ticket=167, reentrantCount=0, threadName=thread-name-4, msg=end release lock
// [2019-01-14 17:57:51], ticket=168, reentrantCount=1, threadName=thread-name-3, msg=get lock success
// [2019-01-14 17:57:52], ticket=168, reentrantCount=1, threadName=thread-name-3, msg=begin get lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=2, threadName=thread-name-3, msg=get lock success
// [2019-01-14 17:57:52], ticket=168, reentrantCount=2, threadName=thread-name-3, msg=begin get lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=3, threadName=thread-name-3, msg=get lock success
// [2019-01-14 17:57:52], ticket=168, reentrantCount=3, threadName=thread-name-3, msg=begin release lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=2, threadName=thread-name-3, msg=end release lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=2, threadName=thread-name-3, msg=begin release lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=1, threadName=thread-name-3, msg=end release lock
// [2019-01-14 17:57:52], ticket=168, reentrantCount=1, threadName=thread-name-3, msg=begin release lock
// [2019-01-14 17:57:52], ticket=169, reentrantCount=0, threadName=thread-name-7, msg=wake
// [2019-01-14 17:57:52], ticket=168, reentrantCount=0, threadName=thread-name-3, msg=end release lock
// [2019-01-14 17:57:52], ticket=169, reentrantCount=1, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=1, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=5, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=5, threadName=thread-name-7, msg=begin get lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=6, threadName=thread-name-7, msg=get lock success
// [2019-01-14 17:57:53], ticket=169, reentrantCount=6, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=5, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=5, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=4, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=3, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=2, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=1, threadName=thread-name-7, msg=end release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=1, threadName=thread-name-7, msg=begin release lock
// [2019-01-14 17:57:53], ticket=169, reentrantCount=0, threadName=thread-name-7, msg=end release lock
    }
} |
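How to read the log: when the current holder logs `begin get lock`, the following `get lock success` shows `reentrantCount` one higher. Each `end release lock` shows it one lower. A different thread logs `msg=wake` with the next `ticket` only during the release that takes the count from 1 to 0.

Below is a minimal sketch of that client-side bookkeeping. It is not the article's implementation. The class `ReentrantHoldCounter` and its `Backend` interface are hypothetical: `Backend` stands in for the real ZooKeeper create/delete calls, and the sketch assumes that only the outermost `lock()`/`unlock()` needs to reach ZooKeeper.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical sketch, not the author's class: reentrancy bookkeeping for a
 * reentrant distributed lock. Only the outermost lock()/unlock() calls reach
 * the Backend; nested calls only adjust reentrantCount.
 */
public class ReentrantHoldCounter {

    /** Hypothetical hook standing in for the real ZooKeeper acquire/release. */
    public interface Backend {
        void acquire() throws InterruptedException;
        void release();
    }

    private final Backend backend;
    // Mirrors the article's lock classes, which record Thread.currentThread() in the constructor.
    private final Thread owner = Thread.currentThread();
    private int reentrantCount;

    public ReentrantHoldCounter(Backend backend) {
        this.backend = backend;
    }

    public void lock() throws InterruptedException {
        checkOwner();
        if (reentrantCount == 0) {
            backend.acquire(); // outermost lock(): actually take the distributed lock
        }
        reentrantCount++;
    }

    public void unlock() {
        checkOwner();
        if (reentrantCount == 0) {
            throw new IllegalMonitorStateException("unlock() without a matching lock()");
        }
        reentrantCount--;
        if (reentrantCount == 0) {
            backend.release(); // outermost unlock(): actually release, so the next waiter can wake
        }
    }

    public int getReentrantCount() {
        return reentrantCount;
    }

    private void checkOwner() {
        if (Thread.currentThread() != owner) {
            throw new IllegalMonitorStateException("lock is bound to thread " + owner.getName());
        }
    }

    public static void main(String[] args) throws InterruptedException {
        List<String> events = new ArrayList<>();
        ReentrantHoldCounter lock = new ReentrantHoldCounter(new Backend() {
            @Override
            public void acquire() { events.add("acquire"); }

            @Override
            public void release() { events.add("release"); }
        });
        lock.lock();   // count 0 -> 1: backend.acquire()
        lock.lock();   // count 1 -> 2: no backend call
        lock.unlock(); // count 2 -> 1: no backend call
        lock.unlock(); // count 1 -> 0: backend.release()
        System.out.println(events); // prints [acquire, release]
    }
}
```

Keeping the count on the client means nested calls cost no ZooKeeper round trip. The trade-off is that the count lives only in that process. If the lock node is ephemeral, as in the non-reentrant version earlier, ZooKeeper removes it when the session ends.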

