深度学习--卷积网络 Autoencoder降噪还原手写数字识别--89

目录

import numpy as np

import tensorflow as tf

import tensorflow.keras as K

import matplotlib.pyplot as plt

from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, UpSampling2D

np.random.seed(11)

tf.random.set_seed(11)

batch_size = 128

max_epochs = 50

filters = [32,32,16]

(x_train, _), (x_test, _) = K.datasets.mnist.load_data()

x_train = x_train / 255.

x_test = x_test / 255.

x_train = np.reshape(x_train, (len(x_train),28, 28, 1))

x_test = np.reshape(x_test, (len(x_test), 28, 28, 1))

noise = 0.5

x_train_noisy = x_train + noise * np.random.normal(loc=0.0, scale=1.0, size=x_train.shape)

x_test_noisy = x_test + noise * np.random.normal(loc=0.0, scale=1.0, size=x_test.shape)

x_train_noisy = np.clip(x_train_noisy, 0, 1)

x_test_noisy = np.clip(x_test_noisy, 0, 1)

x_train_noisy = x_train_noisy.astype('float32')

x_test_noisy = x_test_noisy.astype('float32')

#print(x_test_noisy[1].dtype)

class Encoder(K.layers.Layer):

def __init__(self, filters):

super(Encoder, self).__init__()

self.conv1 = Conv2D(filters=filters[0], kernel_size=3, strides=1, activation='relu', padding='same')

self.conv2 = Conv2D(filters=filters[1], kernel_size=3, strides=1, activation='relu', padding='same')

self.conv3 = Conv2D(filters=filters[2], kernel_size=3, strides=1, activation='relu', padding='same')

self.pool = MaxPooling2D((2, 2), padding='same')

def call(self, input_features):

x = self.conv1(input_features)

#print("Ex1", x.shape)

x = self.pool(x)

#print("Ex2", x.shape)

x = self.conv2(x)

x = self.pool(x)

x = self.conv3(x)

x = self.pool(x)

return x

class Decoder(K.layers.Layer):

def __init__(self, filters):

super(Decoder, self).__init__()

self.conv1 = Conv2D(filters=filters[2], kernel_size=3, strides=1, activation='relu', padding='same')

self.conv2 = Conv2D(filters=filters[1], kernel_size=3, strides=1, activation='relu', padding='same')

self.conv3 = Conv2D(filters=filters[0], kernel_size=3, strides=1, activation='relu', padding='valid')

self.conv4 = Conv2D(1, 3, 1, activation='sigmoid', padding='same')

self.upsample = UpSampling2D((2, 2))

def call(self, encoded):

x = self.conv1(encoded)

#print("dx1", x.shape)

x = self.upsample(x)

#print("dx2", x.shape)

x = self.conv2(x)

x = self.upsample(x)

x = self.conv3(x)

x = self.upsample(x)

return self.conv4(x)

class Autoencoder(K.Model):

def __init__(self, filters):

super(Autoencoder, self).__init__()

self.loss = []

self.encoder = Encoder(filters)

self.decoder = Decoder(filters)

def call(self, input_features):

#print(input_features.shape)

encoded = self.encoder(input_features)

#print(encoded.shape)

reconstructed = self.decoder(encoded)

#print(reconstructed.shape)

return reconstructed

model = Autoencoder(filters)

model.compile(loss='binary_crossentropy', optimizer='adam')

loss = model.fit(x_train_noisy,

x_train,

validation_data=(x_test_noisy, x_test),

epochs=max_epochs,

batch_size=batch_size)

Epoch 1/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m15s[0m 26ms/step - loss: 0.2898 - val_loss: 0.1548

Epoch 2/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1477 - val_loss: 0.1335

Epoch 3/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1319 - val_loss: 0.1246

Epoch 4/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1246 - val_loss: 0.1197

Epoch 5/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1201 - val_loss: 0.1162

Epoch 6/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1169 - val_loss: 0.1136

Epoch 7/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1144 - val_loss: 0.1117

Epoch 8/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1125 - val_loss: 0.1101

Epoch 9/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1109 - val_loss: 0.1087

Epoch 10/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1096 - val_loss: 0.1075

Epoch 11/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1085 - val_loss: 0.1066

Epoch 12/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1076 - val_loss: 0.1058

Epoch 13/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1068 - val_loss: 0.1051

Epoch 14/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1061 - val_loss: 0.1046

Epoch 15/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1055 - val_loss: 0.1040

Epoch 16/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1050 - val_loss: 0.1037

Epoch 17/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1045 - val_loss: 0.1033

Epoch 18/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1041 - val_loss: 0.1030

Epoch 19/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1038 - val_loss: 0.1027

Epoch 20/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1034 - val_loss: 0.1023

Epoch 21/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1031 - val_loss: 0.1021

Epoch 22/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1028 - val_loss: 0.1018

Epoch 23/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 29ms/step - loss: 0.1025 - val_loss: 0.1016

Epoch 24/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 29ms/step - loss: 0.1023 - val_loss: 0.1014

Epoch 25/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 30ms/step - loss: 0.1021 - val_loss: 0.1012

Epoch 26/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 29ms/step - loss: 0.1019 - val_loss: 0.1011

Epoch 27/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 29ms/step - loss: 0.1017 - val_loss: 0.1009

Epoch 28/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1015 - val_loss: 0.1008

Epoch 29/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1013 - val_loss: 0.1007

Epoch 30/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1012 - val_loss: 0.1006

Epoch 31/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 27ms/step - loss: 0.1010 - val_loss: 0.1005

Epoch 32/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1009 - val_loss: 0.1004

Epoch 33/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1008 - val_loss: 0.1003

Epoch 34/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 29ms/step - loss: 0.1007 - val_loss: 0.1003

Epoch 35/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1006 - val_loss: 0.1002

Epoch 36/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1005 - val_loss: 0.1001

Epoch 37/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1003 - val_loss: 0.1000

Epoch 38/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1002 - val_loss: 0.0999

Epoch 39/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1001 - val_loss: 0.0998

Epoch 40/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1001 - val_loss: 0.0998

Epoch 41/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.1000 - val_loss: 0.0997

Epoch 42/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0999 - val_loss: 0.0997

Epoch 43/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0998 - val_loss: 0.0996

Epoch 44/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0997 - val_loss: 0.0995

Epoch 45/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0996 - val_loss: 0.0996

Epoch 46/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0996 - val_loss: 0.0996

Epoch 47/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0995 - val_loss: 0.0996

Epoch 48/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m13s[0m 28ms/step - loss: 0.0994 - val_loss: 0.0994

Epoch 49/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 30ms/step - loss: 0.0993 - val_loss: 0.0994

Epoch 50/50

[1m469/469[0m [32m━━━━━━━━━━━━━━━━━━━━[0m[37m[0m [1m14s[0m 29ms/step - loss: 0.0993 - val_loss: 0.0994

plt.plot(range(max_epochs), loss.history['loss'])

plt.xlabel('Epochs')

plt.ylabel('Loss')

plt.show()

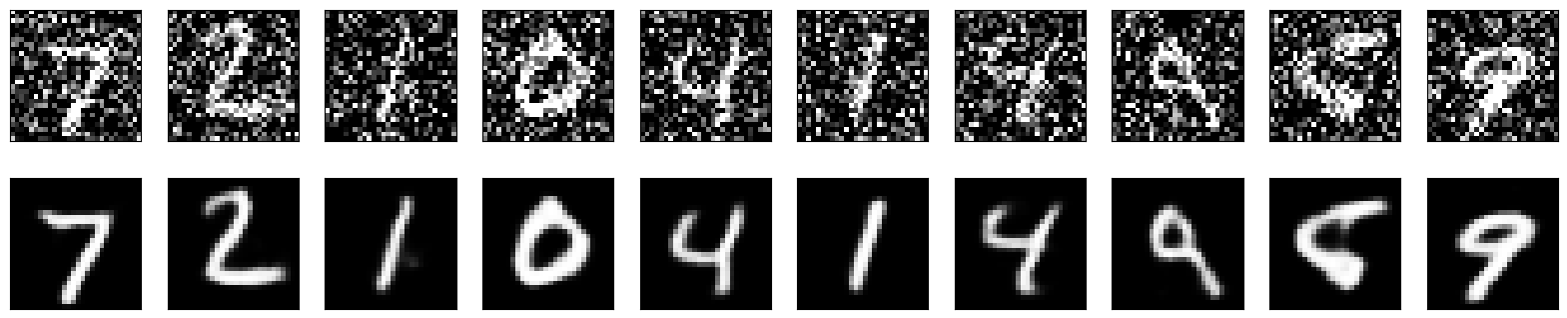

number = 10 # how many digits we will display

plt.figure(figsize=(20, 4))

for index in range(number):

# display original

ax = plt.subplot(2, number, index + 1)

plt.imshow(x_test_noisy[index].reshape(28, 28), cmap='gray')

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

# display reconstruction

ax = plt.subplot(2, number, index + 1 + number)

plt.imshow(tf.reshape(model(x_test_noisy)[index], (28, 28)), cmap='gray')

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

plt.show()