【Testing】API Testing

Reference:

https://zhuanlan.zhihu.com/p/29990696

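API testing preparation

· What is the purpose of the API under test, and who are its consumers?

· In what environment will the API under test be used?

· Will the API under test produce unexpected responses under abnormal conditions?

· Which functional points does this test need to cover?

· What is the test priority of each functional point?

· How is a returned result defined as a success or a failure?

· Does the API under test interact with other systems (so that a code change may affect them)?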

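What API testing mainly covers

1. Check that the API returns the expected result for the data you send.

2. Verify whether the API returns nothing or returns an abnormal result.

3. Verify that the API correctly triggers other events or correctly calls other APIs.

4. Verify that the API correctly updates data, and so on.

Security testing: verify that the API includes the necessary authentication, that sensitive data is masked, and whether it supports encrypted or plaintext HTTP access.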
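
Checks 1 to 4 above can be sketched with the standard library's `unittest.mock`. This is only an illustration under stated assumptions: `place_order`, the `inventory` dict, and the `notifier` object are hypothetical names and do not come from any real system.

```python
from unittest.mock import Mock


def place_order(inventory, notifier, item, quantity):
    """Hypothetical API under test: updates stock and notifies another service."""
    if quantity <= 0:
        raise ValueError("quantity must be positive")
    if inventory.get(item, 0) < quantity:
        return {"status": "rejected", "reason": "insufficient stock"}
    inventory[item] -= quantity
    notifier.send("order_placed", {"item": item, "quantity": quantity})
    return {"status": "ok", "remaining": inventory[item]}


def check_place_order():
    inventory = {"widget": 5}
    notifier = Mock()  # stands in for the other API that should be called

    # 1. The API returns the expected result for the given input.
    result = place_order(inventory, notifier, "widget", 2)
    assert result == {"status": "ok", "remaining": 3}

    # 4. The API updated the data correctly.
    assert inventory["widget"] == 3

    # 3. The API triggered the other call exactly once, with the right payload.
    notifier.send.assert_called_once_with(
        "order_placed", {"item": "widget", "quantity": 2}
    )

    # 2. An abnormal request yields an abnormal result and no side effects.
    rejected = place_order(inventory, notifier, "widget", 10)
    assert rejected["status"] == "rejected"
    assert notifier.send.call_count == 1
    assert inventory["widget"] == 3
    return True
```

Replacing the collaborator with a `Mock` keeps the check focused on the API itself; whether the real collaborator behaves correctly is a separate test.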

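What to watch for in API testing

- Classify and group API test cases.

- When executing tests, run API tests first (based on the test pyramid).

- Make test cases independently executable wherever possible.

- To ensure coverage, plan test data for all possible inputs of the API.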
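
One possible way to keep cases grouped and independently executable is a small registry in which every case builds its own fixture. The sketch below assumes a hypothetical `UserApi` class and a hypothetical `case` decorator; a real project would more likely use the grouping features of its test framework.

```python
from collections import defaultdict


class UserApi:
    """Hypothetical API under test, backed by an in-memory dict."""

    def __init__(self):
        self._users = {"alice": 30}

    def get(self, name):
        return self._users.get(name)

    def delete(self, name):
        return self._users.pop(name, None) is not None


GROUPS = defaultdict(list)


def case(group):
    """Register a test function under a named group."""
    def decorator(func):
        GROUPS[group].append(func)
        return func
    return decorator


@case("read")
def read_existing_user():
    assert UserApi().get("alice") == 30  # fresh API object per case


@case("read")
def read_missing_user():
    assert UserApi().get("bob") is None


@case("write")
def delete_existing_user():
    api = UserApi()  # does not depend on state left by other cases
    assert api.delete("alice") is True
    assert api.get("alice") is None


def run_group(group):
    """Run one group and return the names of the cases that passed."""
    passed = []
    for test in GROUPS.get(group, []):
        test()
        passed.append(test.__name__)
    return passed
```

Because no case shares state with another, a group can be run alone, repeatedly, or in any order.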

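What bugs API testing can find

- Error conditions that are not handled correctly

- Missing or duplicate functionality

- Reliability issues

- Security issues

- Multithreading issues

- Performance issues

- Response data that is not structured correctly

- Valid argument values that are not handled correctly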

Tools for API testing

- SoapUI

- JMeter

- Postman

- Code you write yourself

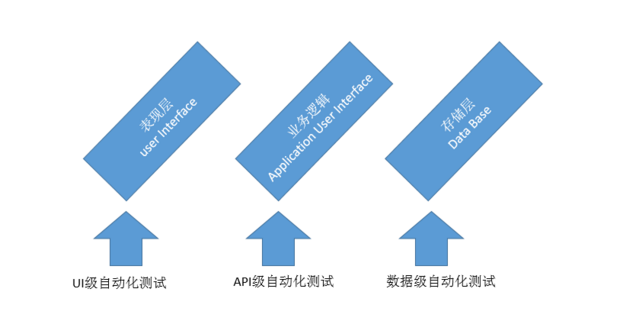

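Common categories of API testing:

Module interface testing and web interface testing.

Module interface testing mainly tests calls between modules and their return values. In our project, module interface testing is concentrated in the core service and mainly tests the calls and return values between the database and the low-level modules. (This part is mostly done by back-end developers; for testers to do it, they need a high degree of white-box knowledge.)

Of course, the testing approach follows some general testing points.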

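1. Check that the data returned by the interface matches the expected result.

2. Check the fault tolerance of the interface: can it cope when data of the wrong type is passed? For example, if the interface accepts integers, what happens when a decimal or a string is passed?

3. Boundary values of the interface parameters. For example, can the interface handle a parameter that is very large or negative?

4. Interface performance: the time the interface takes to process data is also something to test. Internally, this comes down to algorithm and code optimization.

5. Interface security: this is especially important for external interfaces.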
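
The first four points can be illustrated on a toy interface. Everything here is an assumption made for the example: `sum_to`, the `MAX_N` limit, and the boundary helper are hypothetical, and the timing is only measured, since an acceptable budget depends on the project.

```python
import time

MAX_N = 1_000_000  # assumed upper limit of the hypothetical interface


def sum_to(n):
    """Hypothetical module interface: sum of the integers 1..n, integers only."""
    if isinstance(n, bool) or not isinstance(n, int):
        raise TypeError("n must be an integer")
    if not 0 <= n <= MAX_N:
        raise ValueError("n out of range")
    return n * (n + 1) // 2


def boundary_values(low, high):
    """Values just outside, on, and just inside each end of [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def check_sum_to():
    report = {}

    # 1. Returned data matches the expected result.
    report["expected"] = sum_to(10) == 55

    # 2. Fault tolerance: wrong types are rejected instead of crashing later.
    rejected = []
    for bad in (1.5, "3", None):
        try:
            sum_to(bad)
            rejected.append(False)
        except TypeError:
            rejected.append(True)
    report["types_rejected"] = all(rejected)

    # 3. Boundary values: record what happens around both limits.
    outcomes = []
    for n in boundary_values(0, MAX_N):
        try:
            sum_to(n)
            outcomes.append("ok")
        except ValueError:
            outcomes.append("rejected")
    report["boundaries"] = outcomes

    # 4. Performance: measure the processing time of the largest valid input.
    start = time.perf_counter()
    sum_to(MAX_N)
    report["elapsed_seconds"] = time.perf_counter() - start
    return report
```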

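Web interface testing covers the communication between the front end and the back end: the user enters data on a front-end page, and the data is passed between front end and back end through GET and POST requests over protocols such as HTTP. Most of the interface testing we do is concentrated here. Packet-capture tools such as Fiddler can be used to analyze the request data and the returned values.

Key points of web interface testing:

1. Whether the request succeeds: a successful request returns 200 by default, and a failed request should return an error status such as 404 or 500.

2. Check the correctness and format of the returned data; JSON is a very common format.

3. Interface security: web interfaces are generally not left open on the internet for anyone to call; restrictions such as authorization or authentication are needed.

4. Interface performance: web interfaces also depend on good performance, which directly affects the user experience.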
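
As a rough sketch of points 1 to 3, the example below starts a throwaway HTTP server in the same process and checks status codes, the JSON body, and a bearer-token restriction using only the standard library. The `/users/1` path, the token value, and the response fields are hypothetical.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

TOKEN = "secret-token"  # hypothetical credential for the example
# Opener without proxies so that requests to 127.0.0.1 stay local.
OPENER = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class Handler(BaseHTTPRequestHandler):
    """Hypothetical web interface under test."""

    def do_GET(self):
        if self.headers.get("Authorization") != f"Bearer {TOKEN}":
            self._reply(401, {"error": "unauthorized"})
        elif self.path == "/users/1":
            self._reply(200, {"id": 1, "name": "alice"})
        else:
            self._reply(404, {"error": "not found"})

    def _reply(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the test output quiet


def call(base_url, path, token=None):
    """Send a GET request and return (status code, decoded JSON body)."""
    request = urllib.request.Request(base_url + path)
    if token:
        request.add_header("Authorization", f"Bearer {token}")
    try:
        with OPENER.open(request, timeout=5) as response:
            return response.status, json.loads(response.read())
    except urllib.error.HTTPError as error:
        return error.code, json.loads(error.read())


def run_checks():
    server = HTTPServer(("127.0.0.1", 0), Handler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base_url = f"http://127.0.0.1:{server.server_port}"
    try:
        return {
            "ok": call(base_url, "/users/1", TOKEN),
            "missing": call(base_url, "/users/2", TOKEN),
            "no_auth": call(base_url, "/users/1"),
        }
    finally:
        server.shutdown()
        server.server_close()
```

Against a real service the same `call` helper could point at the deployed base URL instead of the in-process server.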

Reference:

https://www.soapui.org/learn/functional-testing/

Test-driven development

TDD – Test-Driven Development – grew from the test-first ideas of Extreme Programming. At its most basic level, it says that the developer should work on any task in the following order:

1. Fail: Write an automated test that defines a piece of improvement or new functionality. Check that the test initially fails.

2. Pass: Write the minimum amount of code required to make the test pass.

3. Refactor: change the new code to acceptable standards. All existing tests (regressions) still have to pass.
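
The three steps can be shown on a deliberately tiny task. The `slugify` function and its versions are hypothetical; they only illustrate the order of work, with the check written before any implementation exists.

```python
def check_slugify(slugify):
    """The test, written first: it defines the new functionality."""
    assert slugify("Hello World") == "hello-world"
    assert slugify("  API  Testing ") == "api-testing"


# Step 1 (Fail): nothing is implemented yet, so the test must fail.
def slugify_v0(text):
    raise NotImplementedError


# Step 2 (Pass): the minimum amount of code that makes the test pass.
def slugify_v1(text):
    result = ""
    for word in text.split():
        if result:
            result += "-"
        result += word.lower()
    return result


# Step 3 (Refactor): same behavior, cleaner code; the test must still pass.
def slugify_v2(text):
    return "-".join(text.lower().split())


def passes(implementation):
    """Return True if the implementation satisfies the test."""
    try:
        check_slugify(implementation)
        return True
    except (AssertionError, NotImplementedError):
        return False
```

Running `passes` on the three versions in order reproduces the fail, pass, refactor cycle.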

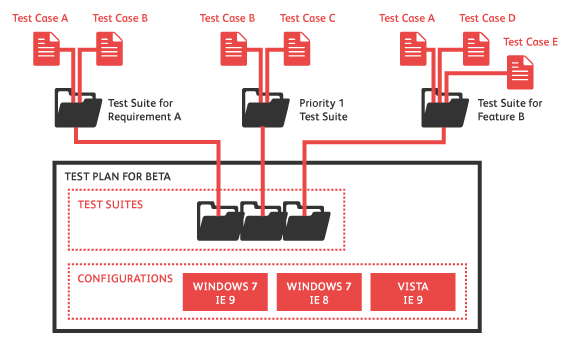

Structuring Your Tests

At the lowest level, a test step verifies a single action. Grouping test steps into a test case allows verifying a delimited piece of functionality - something your application needs. Several test cases make up a test suite that verifies the complete functionality of one of the deliverables – something your customer wants. Grouping the test suites into a test project allows for verification of functionality for a complete product.
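
One way to picture this hierarchy in code is with Python's `unittest`, treating each statement as a step, each test method as a test case, and a `TestSuite` as the suite. This mapping is an assumption made for illustration, not SoapUI's own model, and the `Cart` class is hypothetical.

```python
import io
import unittest


class Cart:
    """Hypothetical application object under test."""

    def __init__(self):
        self.items = {}

    def add(self, name, price):
        self.items[name] = price

    def total(self):
        return sum(self.items.values())


class CartTests(unittest.TestCase):
    def test_add_and_total(self):
        # Test case: a delimited piece of functionality, built from steps.
        cart = Cart()                        # step
        cart.add("pen", 2)                   # step
        cart.add("book", 10)                 # step
        self.assertEqual(cart.total(), 12)   # step with a verification

    def test_empty_cart_total_is_zero(self):
        self.assertEqual(Cart().total(), 0)


def build_suite():
    """Test suite: the test cases that cover one deliverable."""
    suite = unittest.TestSuite()
    suite.addTests(unittest.defaultTestLoader.loadTestsFromTestCase(CartTests))
    return suite


def run_suite():
    # Write the report to a buffer so the caller decides what to print.
    runner = unittest.TextTestRunner(stream=io.StringIO())
    return runner.run(build_suite())
```

A test project would then be a collection of such suites, one per deliverable.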

Smoke Tests

Smoke tests should be run at the end of every single build. A good rule of thumb is that the entire smoke test suite should take no more than 30 minutes to run, since nobody wants to wait a long time for a new build. Another good rule is that the tests in the smoke test suite should not make any internal calls, such as JDBC calls, and should communicate only through the external APIs. If you use non-intrusive smoke tests (ones that do not modify any customer data), they also work well as a production monitoring tool.

Sanity Tests

Sanity tests add a higher level of testing to the smoke tests. Sanity tests verify that the smoke tests are getting back something reasonable.

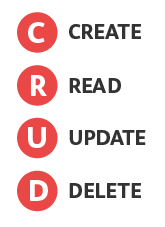

CRUD Tests

CRUD tests verify that objects are being written correctly in the database: Create – Read – Update – Delete. It is a good idea to verify the data directly in the database after each operation (perhaps using a JDBC – Java DataBase Connectivity – call). This will help you get familiar with the structure of the database, and give you confidence that
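
A minimal sketch of a CRUD test that inspects the database directly after each operation is given below. It swaps JDBC for Python's built-in `sqlite3` with an in-memory database, and the `UserApi` class and `users` table are hypothetical.

```python
import sqlite3


class UserApi:
    """Hypothetical API layer backed by a SQLite table."""

    def __init__(self, connection):
        self.connection = connection
        connection.execute(
            "CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
        )

    def create(self, name):
        cursor = self.connection.execute(
            "INSERT INTO users (name) VALUES (?)", (name,)
        )
        return cursor.lastrowid

    def read(self, user_id):
        row = self.connection.execute(
            "SELECT name FROM users WHERE id = ?", (user_id,)
        ).fetchone()
        return row[0] if row else None

    def update(self, user_id, name):
        self.connection.execute(
            "UPDATE users SET name = ? WHERE id = ?", (name, user_id)
        )

    def delete(self, user_id):
        self.connection.execute("DELETE FROM users WHERE id = ?", (user_id,))


def table_rows(connection):
    """Query the table directly, bypassing the API under test."""
    return connection.execute("SELECT id, name FROM users ORDER BY id").fetchall()


def crud_check():
    connection = sqlite3.connect(":memory:")
    api = UserApi(connection)
    observed = []

    user_id = api.create("alice")               # Create
    observed.append(table_rows(connection))
    assert api.read(user_id) == "alice"         # Read
    api.update(user_id, "alicia")               # Update
    observed.append(table_rows(connection))
    api.delete(user_id)                         # Delete
    observed.append(table_rows(connection))

    connection.close()
    return observed
```

Reading the table with a separate query, rather than through `api.read`, is what catches an API that reports success without persisting the change.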

Negative Tests

Boundary Tests

Security Tests

Scenario-Based Testing

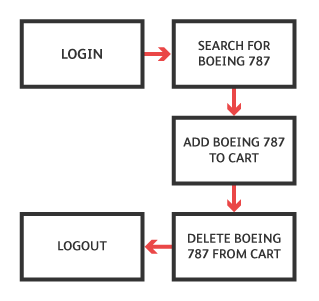

Scenario Tests

When you have all the test pieces, as well as a sufficiently in-depth understanding of the application under test (AUT), you can start building much broader tests to verify the overall functionality. These tests verify business requirements, sometimes referred to as “use cases”. Behavior-Driven Development – BDD – is a very good approach for this sort of testing.

When it comes to testing the API, the Scenario tests should cover all of the business logic. That way, the UI team is allowed to concentrate on the user aspects: presentation and usability.

Scenario-based tests should be directly tied to user stories, which were probably provided to you by the product owner or some business stakeholder.

The product owner has to report on the current status of the project to stakeholders, and so it will help you to make these tests as understandable for her as possible. If your speciality is test automation, you may not have the in-depth domain knowledge to be able to correctly set up each of the use cases.
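
A scenario test tied to a user story might be laid out in a Given / When / Then shape so that a non-programmer can follow it. The story ("a customer can transfer money between accounts") and the `Bank` class are hypothetical, and plain functions are used here instead of a BDD framework.

```python
class Bank:
    """Hypothetical application under test."""

    def __init__(self):
        self.balances = {}

    def open_account(self, owner, deposit):
        self.balances[owner] = deposit

    def transfer(self, source, target, amount):
        if amount <= 0 or self.balances[source] < amount:
            raise ValueError("transfer rejected")
        self.balances[source] -= amount
        self.balances[target] += amount


def scenario_successful_transfer():
    # Given two customers with open accounts
    bank = Bank()
    bank.open_account("alice", 100)
    bank.open_account("bob", 20)
    # When alice transfers 30 to bob
    bank.transfer("alice", "bob", 30)
    # Then both balances reflect the transfer
    return bank.balances


def scenario_overdraft_is_rejected():
    # Given two customers with open accounts
    bank = Bank()
    bank.open_account("alice", 100)
    bank.open_account("bob", 20)
    # When alice tries to transfer more than she has
    try:
        bank.transfer("alice", "bob", 500)
        rejected = False
    except ValueError:
        rejected = True
    # Then the transfer is rejected and no balance changes
    return rejected, bank.balances
```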

Test Data

Time-Sensitive Data

Large Procedures

Follow the Data

Best Practices: Deciding What to Test

Risk-based testing

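Reference: https://blog.csdn.net/weixin_34315189/article/details/85365838

Definition of risk-based testing: the risk level of a software product is calculated from the severity of a failure and the probability that it occurs; testing then uses the different risk levels to decide test priority and test coverage.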

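Risk-based test analysis process

1. List all functions and features of the software.

2. Determine how likely each function is to fail.

3. Determine how much the customer is affected if a function fails or a feature is missing.

4. Calculate the risk level.

5. Revise the risk level based on signs of likely failure.

6. Decide the scope of testing and write the test plan.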
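
The six steps above could be sketched as follows. The scoring scheme is an assumption: the source only says that risk is calculated from severity and probability, so the product of two 1 to 5 scores is used here as one common choice, and the feature names and numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str             # step 1: a listed function or feature
    probability: int      # step 2: likelihood of failure, assumed scale 1..5
    impact: int           # step 3: impact on the customer, assumed scale 1..5
    adjustment: int = 0   # step 5: correction based on observed warning signs

    @property
    def risk(self):
        # Step 4: assumed formula, probability multiplied by impact.
        return self.probability * self.impact + self.adjustment


def prioritize(features):
    """Step 6: order features so the riskiest are tested first."""
    return sorted(features, key=lambda feature: feature.risk, reverse=True)


# Invented example data.
FEATURES = [
    Feature("login", probability=2, impact=5),
    Feature("payment", probability=3, impact=5, adjustment=2),
    Feature("profile page", probability=2, impact=2),
]
```

With this data, `prioritize(FEATURES)` puts `payment` first, then `login`, then `profile page`.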

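Three steps of risk-based testing in practice

1. How to identify risks (brainstorming sessions, discussions with experts, checklists, and so on)

2. How to assess the identified risks (expressed with a two-dimensional likelihood and consequence model)

Attributes related to likelihood:

- Frequency of use

- Complexity of use

- Complexity of implementation

Attributes related to the consequences of a risk:

- Consequences for users

- Consequences for the business

- Consequences for testing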

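The table below is a classic risk-based test analysis table, for reference only:

| No. | Risk feature | Frequent requirement changes | Architecture design extensibility | Inexperienced developers | ... | Risk probability | User impact | Business impact | Test strategy |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 1 | Feature 1 | | | | | | | | |
| 2 | Feature 2 | | | | | | | | |
| 3 | Feature 3 | | | | | | | | |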

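3. How to determine reasonable risk-mitigation activities (cover each risk item with a suitable set of test cases)

Every high risk must be covered by both positive and negative test cases. In addition, at least 50% of the test cases related to high risks should have the highest test case priority. Medium-level risks are covered mainly by positive test cases, which can be spread across the three highest test case priorities, and so on.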
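
The two rules for high risks can be checked mechanically against a test plan. The data layout below (a risk-level dict and test cases with `risk`, `kind`, and `priority` keys, where priority 1 is the highest) is an assumption made for the sketch.

```python
def check_high_risk_coverage(risks, test_cases):
    """Return a list of rule violations; an empty list means the plan passes."""
    problems = []
    high = {name for name, level in risks.items() if level == "high"}

    # Rule 1: every high risk needs both a positive and a negative test case.
    for risk in sorted(high):
        kinds = {case["kind"] for case in test_cases if case["risk"] == risk}
        for kind in ("positive", "negative"):
            if kind not in kinds:
                problems.append(f"{risk}: missing {kind} test case")

    # Rule 2: at least 50% of high-risk test cases must have top priority.
    related = [case for case in test_cases if case["risk"] in high]
    top = [case for case in related if case["priority"] == 1]
    if related and len(top) * 2 < len(related):
        problems.append("fewer than 50% of high-risk test cases have top priority")
    return problems


# Invented example plan.
RISKS = {"payment": "high", "login": "high", "profile": "medium"}
TEST_CASES = [
    {"risk": "payment", "kind": "positive", "priority": 1},
    {"risk": "payment", "kind": "negative", "priority": 1},
    {"risk": "login", "kind": "positive", "priority": 2},
    {"risk": "profile", "kind": "positive", "priority": 2},
]
```

For this plan the only reported problem is the missing negative test case for `login`; two of the three high-risk cases have top priority, so the 50% rule holds.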

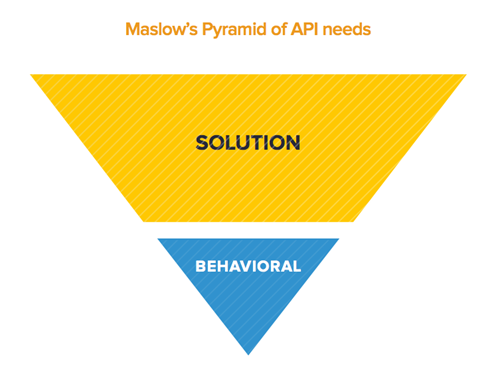

API architect James Higginbotham, Executive API Consultant at LaunchAny, breaks API testing down into three essential aspects:

- Behavioral API testing ensures that the REST API delivers the expected behavior and handles unexpected behavior properly. This is the lowest, most internal value. Does the code work?

- Contractual API testing ensures that what is specified by the definition is what has actually been shipped via code. This falls at the middle level of needs. Does the API contract continue to function as we have defined it? With the right inputs? Outputs? Data formats?

- Solution-oriented API testing ensures that the API as a whole supports the intended use cases that it was designed to solve. This is the highest, mostly external value. Does the API solve real problems that our customers have? Does it do something that people actually care about?

Higginbotham calls this Maslow’s Pyramid of API needs, made up of growing concerns from the lowest (internal) to the highest (external) levels. “Teams need to look beyond just testing for functional and behavioral completeness. They need to move upward to ensure what they are externalizing to internal and/or external developers is complete.”
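
The contractual level lends itself to a small illustration: compare what the code actually returns with what the definition promises. The contract format below (field name mapped to a Python type) is a simplified stand-in invented for this sketch; real projects usually check against a formal API definition instead.

```python
# Hypothetical contract for a "user" response: field name -> expected type.
CONTRACT = {"id": int, "name": str, "email": str}


def contract_violations(response, contract):
    """List every way the response deviates from the contract."""
    violations = []
    for field, expected_type in contract.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif not isinstance(response[field], expected_type):
            violations.append(
                f"wrong type for {field}: expected {expected_type.__name__}, "
                f"got {type(response[field]).__name__}"
            )
    for field in response:
        if field not in contract:
            violations.append(f"undeclared field: {field}")
    return violations
```

An empty list means the shipped response matches the definition; any entry points at a gap between the contract and the code.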
