EM算法的python实现

本文参考自:https://www.jianshu.com/p/154ee3354b59 和 李航博士的《统计学习方法》

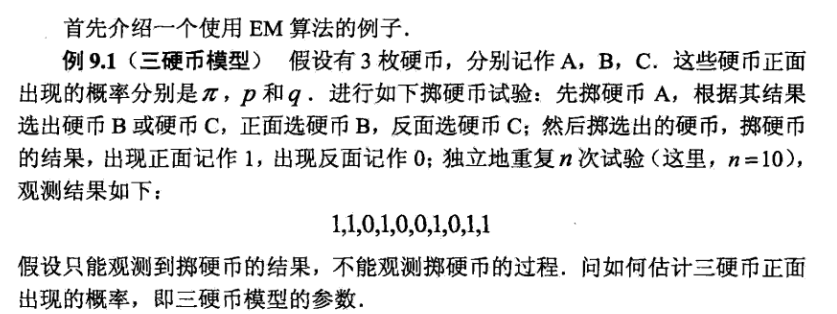

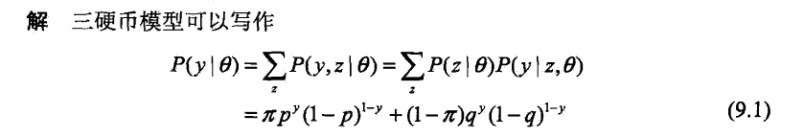

1.

2. 创建观测结果数据

def createData(m,n):

y = np.mat(np.zeros((m,n)))

for i in range(m):

for j in range(n):

# 通过产生随机数,每一行表示一次实验结果

y[i,j] = random.randint(0,1)

return y

输出一下,观察一下结果:

data = createData(1,10) #一共做了三次实验,每次观测到了10个硬币C出现的结果 data

结果: matrix([[0., 0., 1., 1., 1., 1., 0., 1., 0., 1.]])

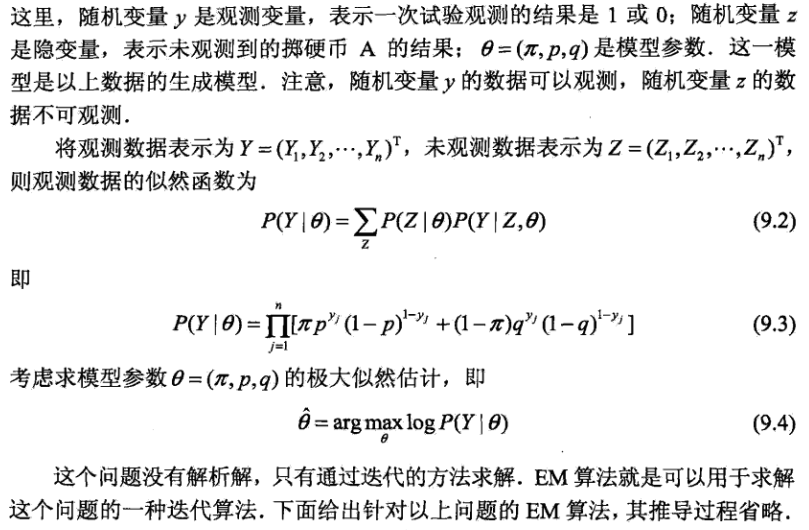

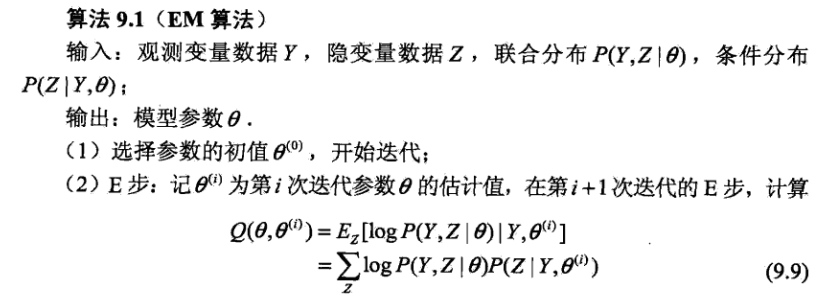

3. EM算法的实现过程

def EM(arr_y,theta,tol,num_iter):

#初始化参数

PI = 0

P = 0

Q = 0

m,n = np.shape(arr_y)

mat_y = arr_y.getA() #返回的是一个numpy array 的数组

for i in range(num_iter):

miu = []

PI = np.copy(theta[0]) # 深拷贝

P = np.copy(theta[1])

Q = np.copy(theta[2])

for j in range(m):

miu_value = (PI*(P**mat_y[j]) *((1-P)**(1-mat_y[j]))) / \

(PI*(P**mat_y[j])*((1-P)**(1-mat_y[j])) + (1-PI)*(Q**mat_y[j])*((1-Q)**(1-mat_y[j])))

miu.append(miu_value)

sum1 = 0.0

for j in range(m):

sum1 += miu[j]

theta[0] = sum1 / m

sum1 = 0.0

sum2 = 0.0

for j in range(m):

sum1 += miu[j] * mat_y[j]

sum2 += miu[j]

theta[1] = sum1 / sum2

sum1 = 0.0

sum2 = 0.0

for j in range(m):

sum1 += (1-miu[j])* mat_y[j]

sum2 += (1-miu[j])

theta[2] = sum1 / sum2

print("-----------------------------")

print(theta)

if (abs(theta[0] - PI) <= tol and abs(theta[1] - P) <= tol

and abs(theta[2] - Q <= tol)):

print("迭代完毕,参数已经收敛")

break

return PI,P,Q

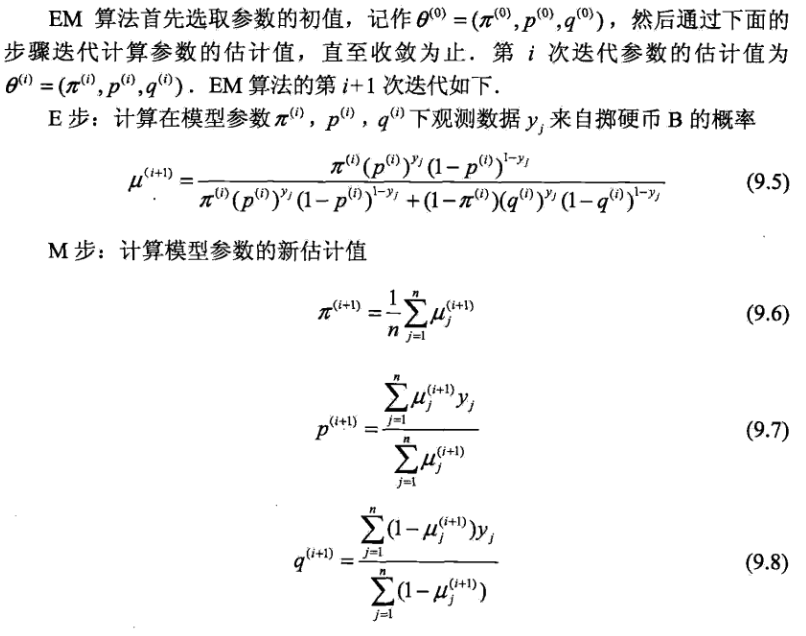

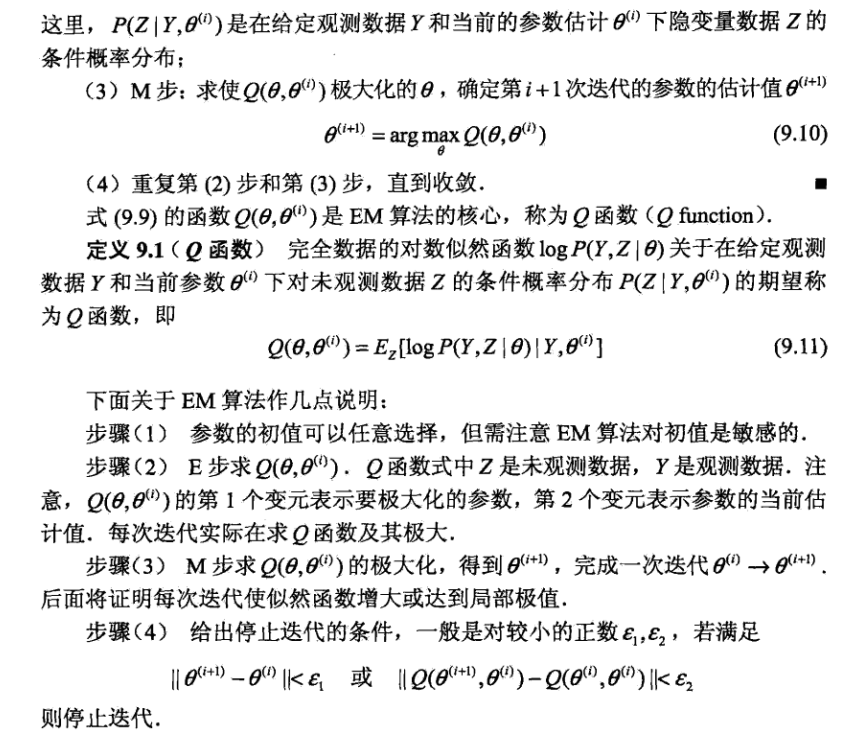

4. 主函数的实现 (注意:这里的输入数据(与《统计学习方法》的输入数据一样))

if __name__ == "__main__":

mat_y = np.mat(np.zeros((10, 1)))

mat_y[0,0] = 1

mat_y[1,0] = 1

mat_y[2,0] = 0

mat_y[3,0] = 1

mat_y[4,0] = 0

mat_y[5,0] = 0

mat_y[6,0] = 1

mat_y[7,0] = 0

mat_y[8,0] = 1

mat_y[9,0] = 1

theta = [0.5, 0.5, 0.5]

print(mat_y)

PI,P,Q = EM(mat_y,theta,0.001,100)

print(PI,P,Q)

#本文的输出结果

[[1.] [1.] [0.] [1.] [0.] [0.] [1.] [0.] [1.] [1.]] ----------------------------- [array([0.5]), array([0.6]), array([0.6])] ----------------------------- [array([0.5]), array([0.6]), array([0.6])] 迭代完毕,参数已经收敛 [0.5] [0.6] [0.6]

和书上的输出结果是一样的

浙公网安备 33010602011771号

浙公网安备 33010602011771号