MongoDB

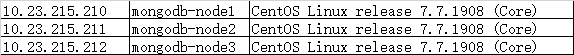

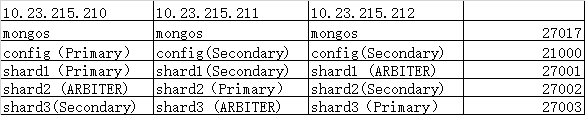

Environment:

Goal

Preparation:

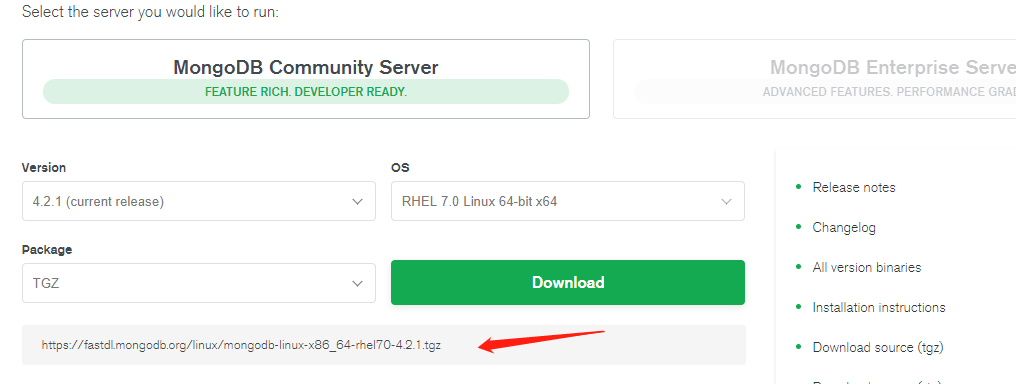

1. Choose the version that suits you.

Official download page: https://www.mongodb.com/download-center/community?jmp=docs

wget https://fastdl.mongodb.org/linux/mongodb-linux-x86_64-rhel70-4.0.13.tgz

2. Environment setup

1. ulimit settings

Red Hat Enterprise Linux and CentOS 6 and 7 enforce a separate maximum process limit, nproc, which overrides ulimit settings. The value is defined in the following configuration file, depending on the version:

| Version | Value | File |
| --- | --- | --- |
| RHEL / CentOS 7 | 4096 | /etc/security/limits.d/20-nproc.conf |
| RHEL / CentOS 6 | 1024 | /etc/security/limits.d/90-nproc.conf |
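
The version-to-file mapping above can be written down as a small helper. This is only a sketch: the function name `nproc_conf_for` is made up for illustration, and it covers just the two releases listed here.

```shell
# Hypothetical helper: print the nproc limits file for a RHEL/CentOS major version.
# Only the two releases named in the text above are covered.
nproc_conf_for() {
    case "$1" in
        7) echo "/etc/security/limits.d/20-nproc.conf" ;;
        6) echo "/etc/security/limits.d/90-nproc.conf" ;;
        *) echo "unknown release: $1" >&2; return 1 ;;
    esac
}

# Example: show which file applies on release 7
nproc_conf_for 7
```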

Officially recommended ulimit settings

    -f (file size): unlimited
    -t (CPU time): unlimited
    -v (virtual memory): unlimited
    -l (locked-in-memory size): unlimited
    -n (open files): 64000
    -m (memory size): unlimited
    -u (processes/threads): 64000

    cat /etc/security/limits.d/99-mongodb-nproc.conf
    mongod - fsize unlimited
    mongod - cpu unlimited
    mongod - as unlimited
    mongod - nofile 64000
    mongod - rss unlimited
    mongod - nproc 64000
    # If MongoDB runs as the unprivileged user mongod, the settings above can be added

To apply the limits temporarily, use the ulimit command, for example:

    ulimit -f unlimited   # file size
    ulimit -t unlimited   # CPU time
    ulimit -v unlimited   # virtual memory
    ulimit -l unlimited   # locked-in-memory size
    ulimit -n 64000       # open files
    ulimit -m unlimited   # memory size
    ulimit -u 64000       # processes/threads
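
To see whether a shell already meets a recommendation, the current value can be compared with the target. The following is a minimal sketch; `check_limit` is a hypothetical helper name, and only the open-files limit is checked at the end because `-n` is the flag most shells agree on.

```shell
# Hypothetical helper: compare one current limit with a recommended value.
# Usage: check_limit <label> <current> <recommended>
check_limit() {
    label=$1 current=$2 wanted=$3
    if [ "$current" = "unlimited" ]; then
        echo "$label ok"       # unlimited satisfies any recommendation
    elif [ "$wanted" = "unlimited" ]; then
        echo "$label low"      # a finite value cannot satisfy "unlimited"
    elif [ "$current" -ge "$wanted" ]; then
        echo "$label ok"
    else
        echo "$label low"
    fi
}

# Check the open-files recommendation (64000) against the current shell
check_limit "open files (-n)" "$(ulimit -n)" 64000
```

The other limits can be checked the same way by passing the output of the matching `ulimit` flag.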

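2. Disable SELinux and the firewall

    systemctl disable firewalld   # do not start the firewall at boot
    systemctl stop firewalld      # stop the firewall
    setenforce 0                  # disable SELinux temporarily
    vi /etc/selinux/config        # disable SELinux permanently: change SELINUX=enforcing to SELINUX=disabled
    getenforce                    # show the SELinux status: Permissive means temporarily disabled, the default is Enforcing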
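
The permanent SELinux change can also be made without opening an editor. The sketch below rewrites the `SELINUX=` line with `sed`; the helper name `disable_selinux_in` is hypothetical, and the demonstration runs on a scratch file rather than the real /etc/selinux/config.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file.
# The result is staged in a temporary file and then written back in place,
# so the original file keeps its inode and permissions.
disable_selinux_in() {
    conf=$1
    tmp="$conf.tmp.$$"
    sed 's/^SELINUX=.*/SELINUX=disabled/' "$conf" > "$tmp" \
        && cat "$tmp" > "$conf" \
        && rm -f "$tmp"
}

# Demonstration on a scratch copy with the default contents
demo=$(mktemp)
printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$demo"
disable_selinux_in "$demo"
cat "$demo"
```

The pattern is anchored on `SELINUX=` so the `SELINUXTYPE=` line is left alone.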

3. Plan the MongoDB directory layout

    mkdir -p /opt/mongodb/{bin,conf}   # binaries and configuration files
    mkdir -p /opt/mongodb/{mongos,config,shard1,shard2,shard3}/{data,log,run}   # data, log, and PID directories

    [root@test-mongodb-node1 mongodb]# tree
    .
    ├── bin
    ├── conf
    ├── config
    │   ├── data
    │   ├── log
    │   └── run
    ├── mongos
    │   ├── data
    │   ├── log
    │   └── run
    ├── shard1
    │   ├── data
    │   ├── log
    │   └── run
    ├── shard2
    │   ├── data
    │   ├── log
    │   └── run
    └── shard3
        ├── data
        ├── log
        └── run
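
The same layout can be built with plain loops, which also works in shells without brace expansion. This is a sketch only: `make_layout` is a hypothetical name, and it is tried in a scratch directory here instead of /opt/mongodb.

```shell
# Hypothetical helper: create the directory layout under a base directory.
make_layout() {
    base=$1
    mkdir -p "$base/bin" "$base/conf"
    for role in mongos config shard1 shard2 shard3; do
        for sub in data log run; do
            mkdir -p "$base/$role/$sub"
        done
    done
}

# Try it in a scratch directory; on the servers the base would be /opt/mongodb
scratch=$(mktemp -d)
make_layout "$scratch"
ls "$scratch"
```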

Installing MongoDB

1. Extract the archive and move the files it contains into /opt/mongodb/bin

    [root@test-mongodb-node1 package]# tar zxvf mongodb-linux-x86_64-rhel70-4.0.13.tgz
    [root@test-mongodb-node1 package]# cd mongodb-linux-x86_64-rhel70-4.0.13/bin
    [root@test-mongodb-node1 bin]# ls
    bsondump install_compass mongo mongod mongodump mongoexport mongofiles mongoimport mongoreplay mongorestore mongos mongostat mongotop
    [root@test-mongodb-node1 bin]# mv * /opt/mongodb/bin/
    [root@test-mongodb-node1 bin]# ls /opt/mongodb/bin/
    bsondump install_compass mongo mongod mongodump mongoexport mongofiles mongoimport mongoreplay mongorestore mongos mongostat mongotop
    [root@test-mongodb-node1 bin]# cd /opt/mongodb/conf/

2. Create the configuration files

    [root@test-mongodb-node1 conf]# cat config.yml
    systemLog:
      quiet: false
      path: /opt/mongodb/config/log/config.log
      logAppend: true
      destination: file
    processManagement:
      fork: true
      pidFilePath: /opt/mongodb/config/run/config.pid
    net:
      bindIp: 0.0.0.0
      port: 21000
    storage:
      dbPath: /opt/mongodb/config/data
      directoryPerDB: false
      engine: wiredTiger
      syncPeriodSecs: 61
      wiredTiger:
        engineConfig:
          cacheSizeGB: 1
          journalCompressor: snappy
          directoryForIndexes: false
        collectionConfig:
          blockCompressor: snappy
        indexConfig:
          prefixCompression: true
    sharding:
      clusterRole: configsvr
    replication:
      replSetName: configs

    [root@test-mongodb-node1 conf]# cat mongos.yml
    systemLog:
      quiet: false
      path: /opt/mongodb/mongos/log/mongods.log
      logAppend: true
      destination: file
    sharding:
      configDB: configs/10.23.215.210:21000,10.23.215.211:21000,10.23.215.212:21000
    processManagement:
      fork: true
      pidFilePath: /opt/mongodb/mongos/data/mongos.pid
    net:
      bindIp: 0.0.0.0
      port: 27017
      maxIncomingConnections: 1024
      wireObjectCheck: true
      ipv6: false

    [root@test-mongodb-node1 conf]# cat shard1.yml
    systemLog:
      quiet: false
      path: /opt/mongodb/shard1/log/shard1.log
      logAppend: true
      destination: file
    processManagement:
      fork: true
      pidFilePath: /opt/mongodb/shard1/run/shard1.pid
    net:
      bindIp: 0.0.0.0
      port: 27001
      maxIncomingConnections: 65536
      wireObjectCheck: true
      ipv6: false
    storage:
      dbPath: /opt/mongodb/shard1/data
      engine: wiredTiger
      wiredTiger:
        engineConfig:
          cacheSizeGB: 6
          journalCompressor: snappy
          directoryForIndexes: false
        collectionConfig:
          blockCompressor: snappy
        indexConfig:
          prefixCompression: true
    replication:
      replSetName: shard1
    sharding:
      clusterRole: shardsvr

    [root@test-mongodb-node1 conf]# cat shard2.yml
    systemLog:
      quiet: false
      path: /opt/mongodb/shard2/log/shard2.log
      logAppend: true
      destination: file
    processManagement:
      fork: true
      pidFilePath: /opt/mongodb/shard2/run/shard2.pid
    net:
      bindIp: 0.0.0.0
      port: 27002
      maxIncomingConnections: 65536
      wireObjectCheck: true
      ipv6: false
    storage:
      dbPath: /opt/mongodb/shard2/data
      engine: wiredTiger
      wiredTiger:
        engineConfig:
          cacheSizeGB: 6
          journalCompressor: snappy
          directoryForIndexes: false
        collectionConfig:
          blockCompressor: snappy
        indexConfig:
          prefixCompression: true
    replication:
      replSetName: shard2
    sharding:
      clusterRole: shardsvr

    [root@test-mongodb-node1 conf]# cat shard3.yml
    systemLog:
      quiet: false
      path: /opt/mongodb/shard3/log/shard3.log
      logAppend: true
      destination: file
    processManagement:
      fork: true
      pidFilePath: /opt/mongodb/shard3/run/shard3.pid
    net:
      bindIp: 0.0.0.0
      port: 27003
      maxIncomingConnections: 65536
      wireObjectCheck: true
      ipv6: false
    storage:
      dbPath: /opt/mongodb/shard3/data
      engine: wiredTiger
      wiredTiger:
        engineConfig:
          cacheSizeGB: 6
          journalCompressor: snappy
          directoryForIndexes: false
        collectionConfig:
          blockCompressor: snappy
        indexConfig:
          prefixCompression: true
    replication:
      replSetName: shard3
    sharding:
      clusterRole: shardsvr
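
The three shard files differ only in the shard name and the port, so they could be generated from one template. The sketch below assumes the port is always 27000 plus the shard number, as in the files above; `render_shard_conf` is a hypothetical name, not a MongoDB tool.

```shell
# Hypothetical generator: print the config for shard number N (1, 2, 3).
# Assumption: port = 27000 + N and every path uses the shard name,
# which matches the three files shown above.
render_shard_conf() {
    n=$1
    name="shard$n"
    port=$((27000 + n))
    cat <<EOF
systemLog:
  quiet: false
  path: /opt/mongodb/$name/log/$name.log
  logAppend: true
  destination: file
processManagement:
  fork: true
  pidFilePath: /opt/mongodb/$name/run/$name.pid
net:
  bindIp: 0.0.0.0
  port: $port
  maxIncomingConnections: 65536
  wireObjectCheck: true
  ipv6: false
storage:
  dbPath: /opt/mongodb/$name/data
  engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 6
      journalCompressor: snappy
      directoryForIndexes: false
    collectionConfig:
      blockCompressor: snappy
    indexConfig:
      prefixCompression: true
replication:
  replSetName: $name
sharding:
  clusterRole: shardsvr
EOF
}

# Print only the lines that vary between shards, here for shard 2
render_shard_conf 2 | grep -E 'path|Path|port|replSetName'
```

Redirecting the output, for example `render_shard_conf 2 > shard2.yml`, would produce a file equivalent to the hand-written one.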

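3. Copy the files to the other two servers

    [root@test-mongodb-node1 opt]# scp -r ./mongodb/ root@10.23.215.212:/opt
    [root@test-mongodb-node1 opt]# scp -r ./mongodb/ root@10.23.215.211:/opt

4. Add MongoDB to the PATH environment variable (on all three servers) by appending the following lines to the end of /etc/profile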

[root@test-mongodb-node1 opt]# vi /etc/profile

#mongodb

export PATH=$PATH:/opt/mongodb/bin

[root@test-mongodb-node1 opt]# source /etc/profile
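
Because this step is repeated on three servers, it can help to make the append idempotent so that running it twice does not duplicate the line. A minimal sketch follows; `add_path_once` is a hypothetical helper, demonstrated on a scratch file rather than /etc/profile.

```shell
# Hypothetical helper: append an export line to a profile file only if it is missing.
# Usage: add_path_once <profile_file> <directory>
add_path_once() {
    profile=$1 dir=$2
    line="export PATH=\$PATH:$dir"
    # -x matches the whole line, -F treats the text literally (no regex)
    grep -qxF "$line" "$profile" 2>/dev/null || printf '%s\n' "$line" >> "$profile"
}

demo=$(mktemp)
add_path_once "$demo" /opt/mongodb/bin
add_path_once "$demo" /opt/mongodb/bin   # second call changes nothing
cat "$demo"
```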

5. Start the config servers with config.yml and configure their replica set

    [root@test-mongodb-node1 conf]# mongod -f /opt/mongodb/conf/config.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17500
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/config.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 16779
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/config.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17062
    child process started successfully, parent exiting

Log in to any one of the nodes and run:

    use admin
    config = {
        _id : "configs",
        members : [
            {_id : 0, host : "10.23.215.210:21000" },
            {_id : 1, host : "10.23.215.211:21000" },
            {_id : 2, host : "10.23.215.212:21000" }
        ]
    }
    rs.initiate(config)
    rs.status()

    [root@test-mongodb-node1 conf]# mongo --port 21000
    MongoDB shell version v4.0.13
    connecting to: mongodb://127.0.0.1:21000/?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("8530b89e-4287-4819-9627-951c5f2507df") }
    MongoDB server version: 4.0.13
    Server has startup warnings:
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten]
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten]
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten]
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten]
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:23:32.173+0800 I CONTROL [initandlisten]
    ---
    Enable MongoDB's free cloud-based monitoring service, which will then receive and display
    metrics about your deployment (disk utilization, CPU, operation statistics, etc).
    The monitoring data will be available on a MongoDB website with a unique URL accessible to you
    and anyone you share the URL with. MongoDB may use this information to make product
    improvements and to suggest MongoDB products and deployment options to you.
    To enable free monitoring, run the following command: db.enableFreeMonitoring()
    To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
    ---
    > use admin
    switched to db admin
    > config = {
    ... _id : "configs",
    ... members : [
    ... {_id : 0, host : "10.23.215.210:21000" },
    ... {_id : 1, host : "10.23.215.211:21000" },
    ... {_id : 2, host : "10.23.215.212:21000" }
    ... ]
    ... }
    {
      "_id" : "configs",
      "members" : [
        { "_id" : 0, "host" : "10.23.215.210:21000" },
        { "_id" : 1, "host" : "10.23.215.211:21000" },
        { "_id" : 2, "host" : "10.23.215.212:21000" }
      ]
    }
    > rs.initiate(config)
    {
      "ok" : 1,
      "operationTime" : Timestamp(1575948391, 1),
      "$gleStats" : {
        "lastOpTime" : Timestamp(1575948391, 1),
        "electionId" : ObjectId("000000000000000000000000")
      },
      "lastCommittedOpTime" : Timestamp(0, 0),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575948391, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    configs:SECONDARY> rs.status()
    {
      "set" : "configs",
      "date" : ISODate("2019-12-10T03:27:10.827Z"),
      "myState" : 1,
      "term" : NumberLong(1),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "configsvr" : true,
      "heartbeatIntervalMillis" : NumberLong(2000),
      "optimes" : {
        "lastCommittedOpTime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
        "readConcernMajorityOpTime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
        "appliedOpTime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
        "durableOpTime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) }
      },
      "lastStableCheckpointTimestamp" : Timestamp(1575948404, 1),
      "members" : [
        {
          "_id" : 0,
          "name" : "10.23.215.210:21000",
          "health" : 1,
          "state" : 1,
          "stateStr" : "PRIMARY",
          "uptime" : 219,
          "optime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
          "optimeDate" : ISODate("2019-12-10T03:27:02Z"),
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "could not find member to sync from",
          "electionTime" : Timestamp(1575948403, 1),
          "electionDate" : ISODate("2019-12-10T03:26:43Z"),
          "configVersion" : 1,
          "self" : true,
          "lastHeartbeatMessage" : ""
        },
        {
          "_id" : 1,
          "name" : "10.23.215.211:21000",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 38,
          "optime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
          "optimeDurable" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
          "optimeDate" : ISODate("2019-12-10T03:27:02Z"),
          "optimeDurableDate" : ISODate("2019-12-10T03:27:02Z"),
          "lastHeartbeat" : ISODate("2019-12-10T03:27:09.247Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:27:10.056Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "10.23.215.210:21000",
          "syncSourceHost" : "10.23.215.210:21000",
          "syncSourceId" : 0,
          "infoMessage" : "",
          "configVersion" : 1
        },
        {
          "_id" : 2,
          "name" : "10.23.215.212:21000",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 38,
          "optime" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
          "optimeDurable" : { "ts" : Timestamp(1575948422, 1), "t" : NumberLong(1) },
          "optimeDate" : ISODate("2019-12-10T03:27:02Z"),
          "optimeDurableDate" : ISODate("2019-12-10T03:27:02Z"),
          "lastHeartbeat" : ISODate("2019-12-10T03:27:09.247Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:27:10.035Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "10.23.215.210:21000",
          "syncSourceHost" : "10.23.215.210:21000",
          "syncSourceId" : 0,
          "infoMessage" : "",
          "configVersion" : 1
        }
      ],
      "ok" : 1,
      "operationTime" : Timestamp(1575948422, 1),
      "$gleStats" : {
        "lastOpTime" : Timestamp(1575948391, 1),
        "electionId" : ObjectId("7fffffff0000000000000001")
      },
      "lastCommittedOpTime" : Timestamp(1575948422, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575948422, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    configs:PRIMARY>
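
The initiation document above follows a regular pattern (sequential `_id` values, one shared port), so it could be generated instead of typed. The sketch below only prints the JavaScript; `rs_init_js` is a hypothetical helper and does not handle arbiters. On a real node the printed line could be passed to the shell with `mongo --port 21000 --eval "..."`, which is left out here so the sketch runs without MongoDB.

```shell
# Hypothetical helper: print an rs.initiate() call for the mongo shell.
# Usage: rs_init_js <setName> <port> <host>...
rs_init_js() {
    set_name=$1 port=$2
    shift 2
    id=0
    members=""
    for host in "$@"; do
        if [ -n "$members" ]; then members="${members}, "; fi
        members="${members}{ _id: ${id}, host: \"${host}:${port}\" }"
        id=$((id + 1))
    done
    printf 'rs.initiate({ _id: "%s", members: [ %s ] })\n' "$set_name" "$members"
}

# Print the call for the three config servers used in this guide
rs_init_js configs 21000 10.23.215.210 10.23.215.211 10.23.215.212
```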

6. Start shard1 with shard1.yml and configure its replica set

    [root@test-mongodb-node1 conf]# mongod -f /opt/mongodb/conf/shard1.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17612
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard1.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 16884
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard1.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17168
    child process started successfully, parent exiting

The code is as follows:

    use admin
    config = {
        _id : "shard1",
        members : [
            {_id : 0, host : "10.23.215.210:27001" },
            {_id : 1, host : "10.23.215.211:27001" },
            {_id : 2, host : "10.23.215.212:27001", arbiterOnly: true }
        ]
    }
    rs.initiate(config)
    rs.status()

Execution result. Node 212 is the arbiter for shard1 (run this while logged in to node 210).

    [root@test-mongodb-node1 conf]# mongo --port 27001
    MongoDB shell version v4.0.13
    connecting to: mongodb://127.0.0.1:27001/?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("66ab958d-f8af-4998-bc0d-e0d64248be7f") }
    MongoDB server version: 4.0.13
    Server has startup warnings:
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten]
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten]
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten]
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten]
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:48:17.192+0800 I CONTROL [initandlisten]
    ---
    Enable MongoDB's free cloud-based monitoring service, which will then receive and display
    metrics about your deployment (disk utilization, CPU, operation statistics, etc).
    The monitoring data will be available on a MongoDB website with a unique URL accessible to you
    and anyone you share the URL with. MongoDB may use this information to make product
    improvements and to suggest MongoDB products and deployment options to you.
    To enable free monitoring, run the following command: db.enableFreeMonitoring()
    To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
    ---
    > use admin
    switched to db admin
    > config = {
    ... _id : "shard1",
    ... members : [
    ... {_id : 0, host : "10.23.215.210:27001" },
    ... {_id : 1, host : "10.23.215.211:27001" },
    ... {_id : 2, host : "10.23.215.212:27001",arbiterOnly: true }
    ... ]
    ... }
    {
      "_id" : "shard1",
      "members" : [
        { "_id" : 0, "host" : "10.23.215.210:27001" },
        { "_id" : 1, "host" : "10.23.215.211:27001" },
        { "_id" : 2, "host" : "10.23.215.212:27001", "arbiterOnly" : true }
      ]
    }
    > rs.initiate(config)
    {
      "ok" : 1,
      "operationTime" : Timestamp(1575949794, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575949794, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard1:SECONDARY> rs.status()
    {
      "set" : "shard1",
      "date" : ISODate("2019-12-10T03:50:00.857Z"),
      "myState" : 2,
      "term" : NumberLong(0),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "heartbeatIntervalMillis" : NumberLong(2000),
      "optimes" : {
        "lastCommittedOpTime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
        "appliedOpTime" : { "ts" : Timestamp(1575949794, 1), "t" : NumberLong(-1) },
        "durableOpTime" : { "ts" : Timestamp(1575949794, 1), "t" : NumberLong(-1) }
      },
      "lastStableCheckpointTimestamp" : Timestamp(0, 0),
      "members" : [
        {
          "_id" : 0,
          "name" : "10.23.215.210:27001",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 105,
          "optime" : { "ts" : Timestamp(1575949794, 1), "t" : NumberLong(-1) },
          "optimeDate" : ISODate("2019-12-10T03:49:54Z"),
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "could not find member to sync from",
          "configVersion" : 1,
          "self" : true,
          "lastHeartbeatMessage" : ""
        },
        {
          "_id" : 1,
          "name" : "10.23.215.211:27001",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 6,
          "optime" : { "ts" : Timestamp(1575949794, 1), "t" : NumberLong(-1) },
          "optimeDurable" : { "ts" : Timestamp(1575949794, 1), "t" : NumberLong(-1) },
          "optimeDate" : ISODate("2019-12-10T03:49:54Z"),
          "optimeDurableDate" : ISODate("2019-12-10T03:49:54Z"),
          "lastHeartbeat" : ISODate("2019-12-10T03:50:00.596Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:50:00.639Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        },
        {
          "_id" : 2,
          "name" : "10.23.215.212:27001",
          "health" : 1,
          "state" : 7,
          "stateStr" : "ARBITER",
          "uptime" : 6,
          "lastHeartbeat" : ISODate("2019-12-10T03:50:00.596Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:50:00.063Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        }
      ],
      "ok" : 1,
      "operationTime" : Timestamp(1575949794, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575949794, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard1:SECONDARY>

7. Start shard2 with shard2.yml and configure its replica set

    [root@test-mongodb-node1 conf]# mongod -f /opt/mongodb/conf/shard2.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17696
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard2.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 16964
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard2.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17218
    child process started successfully, parent exiting

The code is as follows:

    use admin
    config = {
        _id : "shard2",
        members : [
            {_id : 0, host : "10.23.215.211:27002" },
            {_id : 1, host : "10.23.215.212:27002" },
            {_id : 2, host : "10.23.215.210:27002", arbiterOnly: true }
        ]
    }
    rs.initiate(config)
    rs.status()

Execution result. Node 210 is the arbiter for shard2 (run this while logged in to node 211).

    [root@test-mongodb-node2 opt]# mongo --port 27002
    MongoDB shell version v4.0.13
    connecting to: mongodb://127.0.0.1:27002/?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("2db5f040-425b-4e33-99a1-a3fc8bc6dfd1") }
    MongoDB server version: 4.0.13
    Server has startup warnings:
    2019-12-10T11:51:50.117+0800 I CONTROL [initandlisten]
    2019-12-10T11:51:50.117+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
    2019-12-10T11:51:50.117+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
    2019-12-10T11:51:50.117+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten]
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten]
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten]
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:51:50.118+0800 I CONTROL [initandlisten]
    ---
    Enable MongoDB's free cloud-based monitoring service, which will then receive and display
    metrics about your deployment (disk utilization, CPU, operation statistics, etc).
    The monitoring data will be available on a MongoDB website with a unique URL accessible to you
    and anyone you share the URL with. MongoDB may use this information to make product
    improvements and to suggest MongoDB products and deployment options to you.
    To enable free monitoring, run the following command: db.enableFreeMonitoring()
    To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
    ---
    > use admin
    switched to db admin
    > config = {
    ... _id : "shard2",
    ... members : [
    ... {_id : 0, host : "10.23.215.211:27002" },
    ... {_id : 1, host : "10.23.215.212:27002" },
    ... {_id : 2, host : "10.23.215.210:27002",arbiterOnly: true }
    ... ]
    ... }
    {
      "_id" : "shard2",
      "members" : [
        { "_id" : 0, "host" : "10.23.215.211:27002" },
        { "_id" : 1, "host" : "10.23.215.212:27002" },
        { "_id" : 2, "host" : "10.23.215.210:27002", "arbiterOnly" : true }
      ]
    }
    > rs.initiate(config)
    {
      "ok" : 1,
      "operationTime" : Timestamp(1575950113, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575950113, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard2:SECONDARY> rs.status()
    {
      "set" : "shard2",
      "date" : ISODate("2019-12-10T03:55:25.978Z"),
      "myState" : 1,
      "term" : NumberLong(1),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "heartbeatIntervalMillis" : NumberLong(2000),
      "optimes" : {
        "lastCommittedOpTime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
        "appliedOpTime" : { "ts" : Timestamp(1575950125, 2), "t" : NumberLong(1) },
        "durableOpTime" : { "ts" : Timestamp(1575950125, 2), "t" : NumberLong(1) }
      },
      "lastStableCheckpointTimestamp" : Timestamp(0, 0),
      "members" : [
        {
          "_id" : 0,
          "name" : "10.23.215.211:27002",
          "health" : 1,
          "state" : 1,
          "stateStr" : "PRIMARY",
          "uptime" : 217,
          "optime" : { "ts" : Timestamp(1575950125, 2), "t" : NumberLong(1) },
          "optimeDate" : ISODate("2019-12-10T03:55:25Z"),
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "could not find member to sync from",
          "electionTime" : Timestamp(1575950124, 1),
          "electionDate" : ISODate("2019-12-10T03:55:24Z"),
          "configVersion" : 1,
          "self" : true,
          "lastHeartbeatMessage" : ""
        },
        {
          "_id" : 1,
          "name" : "10.23.215.212:27002",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 12,
          "optime" : { "ts" : Timestamp(1575950113, 1), "t" : NumberLong(-1) },
          "optimeDurable" : { "ts" : Timestamp(1575950113, 1), "t" : NumberLong(-1) },
          "optimeDate" : ISODate("2019-12-10T03:55:13Z"),
          "optimeDurableDate" : ISODate("2019-12-10T03:55:13Z"),
          "lastHeartbeat" : ISODate("2019-12-10T03:55:24.261Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:55:25.737Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        },
        {
          "_id" : 2,
          "name" : "10.23.215.210:27002",
          "health" : 1,
          "state" : 7,
          "stateStr" : "ARBITER",
          "uptime" : 12,
          "lastHeartbeat" : ISODate("2019-12-10T03:55:24.259Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:55:25.637Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        }
      ],
      "ok" : 1,
      "operationTime" : Timestamp(1575950125, 2),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575950125, 2),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard2:PRIMARY>
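
The shard1 and shard2 status outputs capture different moments: shard1 was queried while every member was still SECONDARY (term 0), whereas shard2 had already elected a PRIMARY. When scripting these steps, a small polling loop can wait for the election instead of relying on timing. The sketch below is generic; `retry_until` is a hypothetical helper, and the `mongo` command in the comment is an assumption about how the check might be phrased, not something taken from this setup.

```shell
# Hypothetical helper: run a command until it succeeds, up to <tries> attempts.
# Usage: retry_until <tries> <delay_seconds> <command> [args...]
retry_until() {
    tries=$1 delay=$2
    shift 2
    attempt=1
    while [ "$attempt" -le "$tries" ]; do
        if "$@"; then
            return 0
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
    return 1
}

# Stand-in check so the sketch runs anywhere. On a shard node the check might be
# something like (assumption):
#   mongo --port 27002 --quiet --eval 'quit(rs.status().members.some(function (m) { return m.stateStr === "PRIMARY" }) ? 0 : 1)'
retry_until 3 0 true && echo "ready"
```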

8. Start shard3 with shard3.yml and configure its replica set

    [root@test-mongodb-node1 conf]# mongod -f /opt/mongodb/conf/shard3.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17739
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard3.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17048
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard3.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17291
    child process started successfully, parent exiting

The code is as follows:

    use admin
    config = {
        _id : "shard3",
        members : [
            {_id : 0, host : "10.23.215.212:27003" },
            {_id : 1, host : "10.23.215.211:27003", arbiterOnly: true },
            {_id : 2, host : "10.23.215.210:27003" }
        ]
    }
    rs.initiate(config)
    rs.status()
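
Across the three shard configurations the arbiter sits on a different host each time (212 for shard1, 210 for shard2, 211 for shard3), so every host carries two data-bearing members and one arbiter. The sketch below reproduces that assignment with a simple rotation; the formula and the name `arbiter_host_for` are illustrative assumptions that happen to match the three configs, not a rule the setup prescribes.

```shell
# Hypothetical helper: print the arbiter host for shard number N (1..3),
# reproducing the assignment used in the three replica set configs.
arbiter_host_for() {
    n=$1
    case $(( (n + 1) % 3 )) in
        0) echo "10.23.215.210" ;;
        1) echo "10.23.215.211" ;;
        2) echo "10.23.215.212" ;;
    esac
}

for n in 1 2 3; do
    echo "shard$n arbiter: $(arbiter_host_for "$n")"
done
```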

Execution result. Node 211 is the arbiter for shard3 (run this while logged in to node 212).

    [root@test-mongodb-node2 opt]# mongo --port 27003
    MongoDB shell version v4.0.13
    connecting to: mongodb://127.0.0.1:27003/?gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("5acb082e-193f-4fdc-a113-43094955bb0c") }
    MongoDB server version: 4.0.13
    Server has startup warnings:
    2019-12-10T11:57:00.421+0800 I CONTROL [initandlisten]
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** WARNING: You are running this process as the root user, which is not recommended.
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten]
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten]
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/enabled is 'always'.
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten]
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** WARNING: /sys/kernel/mm/transparent_hugepage/defrag is 'always'.
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten] ** We suggest setting it to 'never'
    2019-12-10T11:57:00.422+0800 I CONTROL [initandlisten]
    ---
    Enable MongoDB's free cloud-based monitoring service, which will then receive and display
    metrics about your deployment (disk utilization, CPU, operation statistics, etc).
    The monitoring data will be available on a MongoDB website with a unique URL accessible to you
    and anyone you share the URL with. MongoDB may use this information to make product
    improvements and to suggest MongoDB products and deployment options to you.
    To enable free monitoring, run the following command: db.enableFreeMonitoring()
    To permanently disable this reminder, run the following command: db.disableFreeMonitoring()
    ---
    > use admin
    switched to db admin
    > config = {
    ... _id : "shard3",
    ... members : [
    ... {_id : 0, host : "10.23.215.212:27003" },
    ... {_id : 1, host : "10.23.215.211:27003",arbiterOnly: true },
    ... {_id : 2, host : "10.23.215.210:27003" }
    ... ]
    ... }
    {
      "_id" : "shard3",
      "members" : [
        { "_id" : 0, "host" : "10.23.215.212:27003" },
        { "_id" : 1, "host" : "10.23.215.211:27003", "arbiterOnly" : true },
        { "_id" : 2, "host" : "10.23.215.210:27003" }
      ]
    }
    > rs.initiate(config)
    {
      "ok" : 1,
      "operationTime" : Timestamp(1575950353, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575950353, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard3:SECONDARY> rs.status()
    {
      "set" : "shard3",
      "date" : ISODate("2019-12-10T03:59:21.249Z"),
      "myState" : 2,
      "term" : NumberLong(0),
      "syncingTo" : "",
      "syncSourceHost" : "",
      "syncSourceId" : -1,
      "heartbeatIntervalMillis" : NumberLong(2000),
      "optimes" : {
        "lastCommittedOpTime" : { "ts" : Timestamp(0, 0), "t" : NumberLong(-1) },
        "appliedOpTime" : { "ts" : Timestamp(1575950353, 1), "t" : NumberLong(-1) },
        "durableOpTime" : { "ts" : Timestamp(1575950353, 1), "t" : NumberLong(-1) }
      },
      "lastStableCheckpointTimestamp" : Timestamp(0, 0),
      "members" : [
        {
          "_id" : 0,
          "name" : "10.23.215.212:27003",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 142,
          "optime" : { "ts" : Timestamp(1575950353, 1), "t" : NumberLong(-1) },
          "optimeDate" : ISODate("2019-12-10T03:59:13Z"),
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "could not find member to sync from",
          "configVersion" : 1,
          "self" : true,
          "lastHeartbeatMessage" : ""
        },
        {
          "_id" : 1,
          "name" : "10.23.215.211:27003",
          "health" : 1,
          "state" : 7,
          "stateStr" : "ARBITER",
          "uptime" : 7,
          "lastHeartbeat" : ISODate("2019-12-10T03:59:20.872Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:59:19.842Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        },
        {
          "_id" : 2,
          "name" : "10.23.215.210:27003",
          "health" : 1,
          "state" : 2,
          "stateStr" : "SECONDARY",
          "uptime" : 7,
          "optime" : { "ts" : Timestamp(1575950353, 1), "t" : NumberLong(-1) },
          "optimeDurable" : { "ts" : Timestamp(1575950353, 1), "t" : NumberLong(-1) },
          "optimeDate" : ISODate("2019-12-10T03:59:13Z"),
          "optimeDurableDate" : ISODate("2019-12-10T03:59:13Z"),
          "lastHeartbeat" : ISODate("2019-12-10T03:59:20.873Z"),
          "lastHeartbeatRecv" : ISODate("2019-12-10T03:59:20.931Z"),
          "pingMs" : NumberLong(0),
          "lastHeartbeatMessage" : "",
          "syncingTo" : "",
          "syncSourceHost" : "",
          "syncSourceId" : -1,
          "infoMessage" : "",
          "configVersion" : 1
        }
      ],
      "ok" : 1,
      "operationTime" : Timestamp(1575950353, 1),
      "$clusterTime" : {
        "clusterTime" : Timestamp(1575950353, 1),
        "signature" : { "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="), "keyId" : NumberLong(0) }
      }
    }
    shard3:SECONDARY>

9. Start mongos with mongos.yml

    [root@test-mongodb-node2 opt]# mongos -f /opt/mongodb/conf/mongos.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17471
    child process started successfully, parent exiting
    [root@test-mongodb-node2 opt]# mongos -f /opt/mongodb/conf/mongos.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17179
    child process started successfully, parent exiting
    [root@test-mongodb-node1 conf]# mongos -f /opt/mongodb/conf/mongos.yml
    about to fork child process, waiting until server is ready for connections.
    forked process: 17908
    child process started successfully, parent exiting
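
Since every process is started with `fork: true`, one quick way to confirm it is still running is to probe the PID stored in its pid file. This is a minimal sketch: `pid_alive` is a hypothetical helper, and the demonstration uses the current shell's PID so it runs without MongoDB.

```shell
# Hypothetical helper: succeed if the PID stored in a pid file belongs to a live process.
pid_alive() {
    pidfile=$1
    [ -s "$pidfile" ] || return 1      # missing or empty file: not running
    pid=$(cat "$pidfile")
    kill -0 "$pid" 2>/dev/null         # signal 0 only tests for existence
}

# Demonstration with this shell's own PID; for mongos the file would be
# /opt/mongodb/mongos/data/mongos.pid (the pidFilePath set in mongos.yml)
demo=$(mktemp)
echo $$ > "$demo"
pid_alive "$demo" && echo "running"
```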

10. Enable sharding

The code is as follows:

    use admin
    sh.addShard("shard1/10.23.215.210:27001,10.23.215.211:27001,10.23.215.212:27001")
    sh.addShard("shard2/10.23.215.210:27002,10.23.215.211:27002,10.23.215.212:27002")
    sh.addShard("shard3/10.23.215.210:27003,10.23.215.211:27003,10.23.215.212:27003")
    sh.status()
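
Each string passed to `sh.addShard` above has the form `<replica set name>/<host:port>,<host:port>,...`. A sketch of building those strings from the host list follows; `shard_seed` is a hypothetical helper, and the port rule (27000 plus the shard number) is taken from the configuration files in this guide.

```shell
# Hypothetical helper: build the "<set>/<host:port>,..." string that sh.addShard takes.
# Usage: shard_seed <setName> <port> <host>...
shard_seed() {
    set_name=$1 port=$2
    shift 2
    list=""
    for host in "$@"; do
        if [ -n "$list" ]; then list="${list},"; fi
        list="${list}${host}:${port}"
    done
    printf '%s/%s\n' "$set_name" "$list"
}

# Print the three addShard calls for this cluster
for n in 1 2 3; do
    printf 'sh.addShard("%s")\n' "$(shard_seed "shard$n" "$((27000 + n))" 10.23.215.210 10.23.215.211 10.23.215.212)"
done
```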

Execution result

[root@test-mongodb-node2 opt]# mongod -f /opt/mongodb/conf/shard3.yml
about to fork child process, waiting until server is ready for connections.
forked process: 17048
child process started successfully, parent exiting
[root@test-mongodb-node2 opt]# mongo^C
You have new mail in /var/spool/mail/root
[root@test-mongodb-node2 opt]# mongos -f /opt/mongodb/conf/mongos.yml
about to fork child process, waiting until server is ready for connections.
forked process: 17179
child process started successfully, parent exiting
[root@test-mongodb-node2 opt]# mongo
MongoDB shell version v4.0.13
connecting to: mongodb://127.0.0.1:27017/?gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("100c11ca-f6ea-478f-8a34-1e07b6ce66bc") }
MongoDB server version: 4.0.13
Server has startup warnings:
2019-12-10T12:40:41.622+0800 I CONTROL [main]
2019-12-10T12:40:41.622+0800 I CONTROL [main] ** WARNING: Access control is not enabled for the database.
2019-12-10T12:40:41.622+0800 I CONTROL [main] ** Read and write access to data and configuration is unrestricted.
2019-12-10T12:40:41.622+0800 I CONTROL [main] ** WARNING: You are running this process as the root user, which is not recommended.
2019-12-10T12:40:41.622+0800 I CONTROL [main]
mongos> use admin
switched to db admin
mongos> sh.addShard("shard1/10.23.215.210:27001,10.23.215.211:27001,10.23.215.212:27001")
{
  "shardAdded" : "shard1",
  "ok" : 1,
  "operationTime" : Timestamp(1575953092, 5),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1575953092, 5),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
mongos> sh.addShard("shard2/10.23.215.210:27002,10.23.215.211:27002,10.23.215.212:27002")
{
  "shardAdded" : "shard2",
  "ok" : 1,
  "operationTime" : Timestamp(1575953092, 10),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1575953092, 10),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
mongos> sh.addShard("shard3/10.23.215.210:27003,10.23.215.211:27003,10.23.215.212:27003")
{
  "shardAdded" : "shard3",
  "ok" : 1,
  "operationTime" : Timestamp(1575953093, 4),
  "$clusterTime" : {
    "clusterTime" : Timestamp(1575953093, 4),
    "signature" : {
      "hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),
      "keyId" : NumberLong(0)
    }
  }
}
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5def10752e92cd44ec668008")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/10.23.215.210:27001,10.23.215.211:27001", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/10.23.215.211:27002,10.23.215.212:27002", "state" : 1 }
    { "_id" : "shard3", "host" : "shard3/10.23.215.210:27003,10.23.215.212:27003", "state" : 1 }
  active mongoses:
    "4.0.13" : 2
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
mongos>

Test the cluster

[root@test-mongodb-node2 opt]# mongo
mongos> use admin
// enable sharding for the testdb database
mongos> db.runCommand( { enablesharding : "testdb" } );
// specify the collection to shard and its shard key
mongos> db.runCommand( { shardcollection : "testdb.table1", key : { id : "hashed" } } )
// switch to testdb so that the test documents go into the sharded collection testdb.table1 rather than admin.table1
mongos> use testdb
mongos> for (var i = 1; i <= 100; i++) db.table1.save({ id : i, "test1" : "test" });
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("5def10752e92cd44ec668008")
  }
  shards:
    { "_id" : "shard1", "host" : "shard1/10.23.215.210:27001,10.23.215.211:27001", "state" : 1 }
    { "_id" : "shard2", "host" : "shard2/10.23.215.211:27002,10.23.215.212:27002", "state" : 1 }
    { "_id" : "shard3", "host" : "shard3/10.23.215.210:27003,10.23.215.212:27003", "state" : 1 }
  active mongoses:
    "4.0.13" : 2
  autosplit:
    Currently enabled: yes
  balancer:
    Currently enabled: yes
    Currently running: no
    Failed balancer rounds in last 5 attempts: 0
    Migration Results for the last 24 hours:
      No recent migrations
  databases:
    { "_id" : "config", "primary" : "config", "partitioned" : true }
      config.system.sessions
        shard key: { "_id" : 1 }
        unique: false
        balancing: true
        chunks:
          shard1 1
        { "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)
    { "_id" : "testdb", "primary" : "shard2", "partitioned" : true, "version" : { "uuid" : UUID("429b25d6-6ecf-4826-97be-0a3f7b575e3b"), "lastMod" : 1 } }
      testdb.table1
        shard key: { "id" : "hashed" }
        unique: false
        balancing: true
        chunks:
          shard1 2
          shard2 2
          shard3 2
        { "id" : { "$minKey" : 1 } } -->> { "id" : NumberLong("-6148914691236517204") } on : shard1 Timestamp(1, 0)
        { "id" : NumberLong("-6148914691236517204") } -->> { "id" : NumberLong("-3074457345618258602") } on : shard1 Timestamp(1, 1)
        { "id" : NumberLong("-3074457345618258602") } -->> { "id" : NumberLong(0) } on : shard2 Timestamp(1, 2)
        { "id" : NumberLong(0) } -->> { "id" : NumberLong("3074457345618258602") } on : shard2 Timestamp(1, 3)
        { "id" : NumberLong("3074457345618258602") } -->> { "id" : NumberLong("6148914691236517204") } on : shard3 Timestamp(1, 4)
        { "id" : NumberLong("6148914691236517204") } -->> { "id" : { "$maxKey" : 1 } } on : shard3 Timestamp(1, 5)
mongos>
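The chunk boundaries in the sh.status() output are not arbitrary. With a hashed shard key on an empty collection, MongoDB 4.0 by default pre-creates two chunks per shard, so three shards give six chunks. The boundaries look like an even split of the signed 64-bit hash range around 0. The sketch below reproduces them under that assumption. It needs BigInt, so it runs in Node.js rather than in the 4.0 mongo shell; the function name hashedSplitPoints is hypothetical, and the server's actual implementation may differ in detail.

```javascript
// Sketch only: recomputes the chunk boundaries seen in sh.status().
// Assumption: the signed 64-bit hash range is cut into equal intervals
// placed symmetrically around 0. Only even chunk counts are handled.
function hashedSplitPoints(numChunks) {
  if (numChunks < 2 || numChunks % 2 !== 0) {
    throw new Error("this sketch only handles an even number of chunks");
  }
  const maxLong = 2n ** 63n - 1n;                       // largest signed 64-bit value
  const interval = (maxLong / BigInt(numChunks)) * 2n;  // BigInt division truncates
  const points = [0n];
  let current = interval;
  for (let i = 0; i < Math.floor((numChunks - 1) / 2); i++) {
    points.push(current, -current);                     // one boundary on each side of 0
    current += interval;
  }
  // BigInt values need an explicit comparator when sorting.
  return points.sort((a, b) => (a < b ? -1 : a > b ? 1 : 0));
}

const numShards = 3;                                    // shard1, shard2, shard3
const splitPoints = hashedSplitPoints(2 * numShards);   // two chunks per shard
console.log(splitPoints.map(String));
```

The five values printed are the five boundaries between the six testdb.table1 chunks listed above, with $minKey and $maxKey closing the range at either end.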

