3--Installing k8s from binaries

I. Node plan

| Hostname | IP | Alias (hosts entry) |
|---|---|---|
| k8s-m-01 | 192.168.12.51 | m1 |
| k8s-m-02 | 192.168.12.52 | m2 |
| k8s-m-03 | 192.168.12.53 | m3 |
| k8s-n-01 | 192.168.12.54 | n1 |
| k8s-n-02 | 192.168.12.55 | n2 |
| k8s-m-vip | 192.168.12.56 | vip |

II. Component plan

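#1. Master node components

kube-apiserver # central manager; the entry point for managing the cluster

kube-controller-manager # controllers: manage and monitor containers

kube-scheduler # scheduler: decides where containers (Pods) run

flannel # provides the cluster network

etcd # database

kubelet # runs and monitors containers (only on its own host)

kube-proxy # provides networking between containers

#2. Worker node components

kubelet # runs and monitors containers (only on its own host)

kube-proxy # provides networking between containers

Docker # handles all container operations on the node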

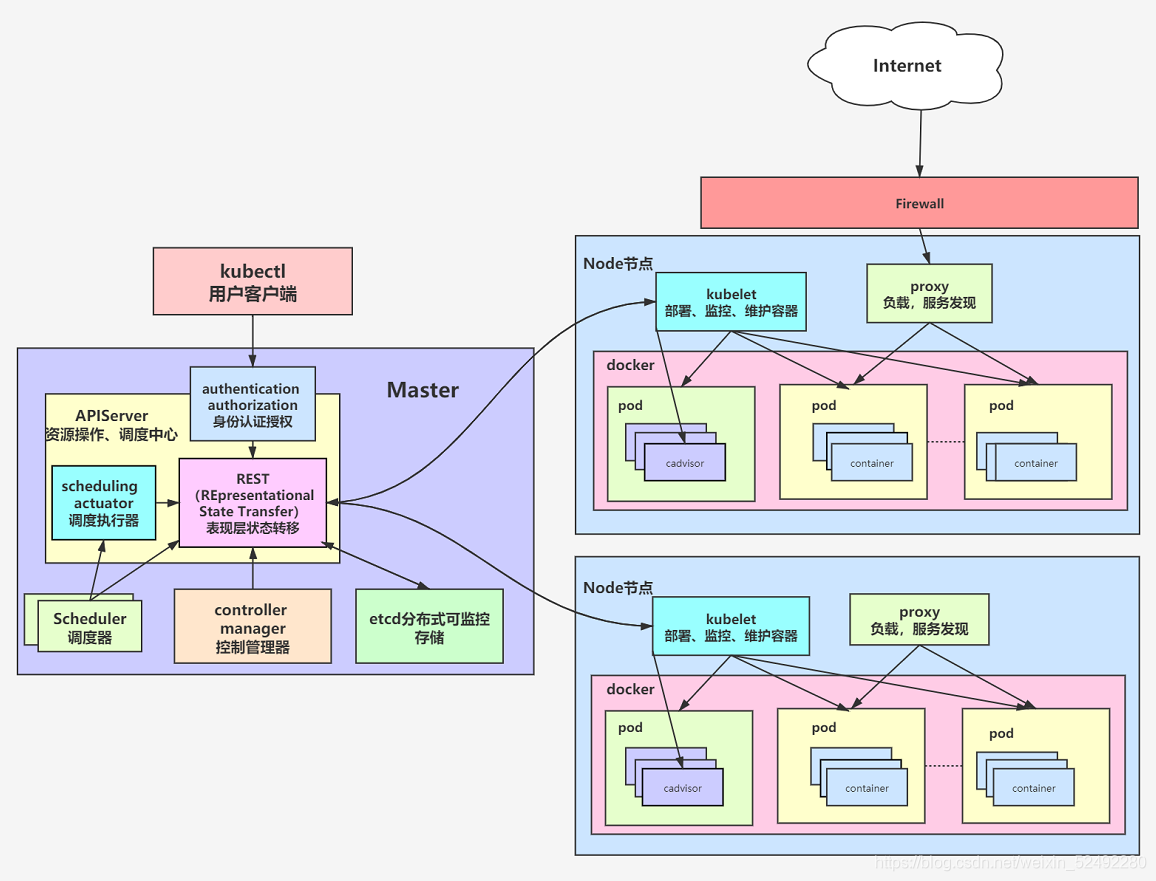

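In the architecture diagram, the services are divided into those that run on the worker nodes and those that make up the cluster-level control plane.

Kubernetes consists mainly of the following core components:

1. etcd stores the state of the whole cluster

2. apiserver is the single entry point to all resources and provides authentication, authorization, access control, API registration and discovery

3. controller manager maintains the cluster state, e.g. failure detection, autoscaling and rolling updates

4. scheduler handles resource scheduling, placing Pods on suitable machines according to the configured scheduling policy

5. kubelet manages the container lifecycle, as well as volumes (CSI) and networking (CNI)

6. Container runtime manages images and actually runs Pods and containers (CRI)

7. kube-proxy provides in-cluster service discovery and load balancing for Services

Besides the core components, some add-ons are recommended:

8. kube-dns provides DNS for the whole cluster

9. Ingress Controller provides external access to services

10. Heapster provides resource monitoring

11. Dashboard provides a GUI

12. Federation provides clusters that span availability zones

13. Fluentd-elasticsearch provides cluster log collection, storage and querying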

III. System tuning (all master nodes)

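1. Disable the swap partition

#1. Once the system starts swapping, performance drops sharply, so K8S generally requires swap to be disabled

vim /etc/fstab

Comment out the swap line (the one with the swap partition's UUID) with #

swapoff -a

echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet # tell kubelet to ignore the swap check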
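Editing /etc/fstab in vim works, but the same change can be scripted so it is repeatable on every node. A minimal sketch, assuming the standard fstab layout where the third field is the filesystem type; the function name `comment_out_swap` and the `.bak` backup are illustrative choices, not part of the original steps:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file
# so that swap stays disabled after a reboot.
comment_out_swap() {
    local fstab="${1:-/etc/fstab}"
    # Keep a backup of the original file next to it
    cp -p "$fstab" "${fstab}.bak" || return 1
    # Prefix "#" to lines that are not already comments and whose 3rd field is "swap";
    # every other line is copied unchanged
    awk '$0 !~ /^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
        "${fstab}.bak" > "$fstab"
}
```

Typical use on a node: `comment_out_swap /etc/fstab && swapoff -a`.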

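2. Disable SELinux and firewalld

sed -i 's#enforcing#disabled#g' /etc/selinux/config

setenforce 0 # disable SELinux for the current boot (the sed change takes effect after a reboot)

systemctl disable --now firewalld # stop firewalld now and keep it disabled at boot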

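3. Set the hostnames and configure name resolution

#1. Set the hostname (run the matching command on each master node)

hostnamectl set-hostname k8s-m-01

hostnamectl set-hostname k8s-m-02

hostnamectl set-hostname k8s-m-03

#2. Edit the hosts file (master nodes)

vim /etc/hosts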

192.168.12.51 k8s-m-01 m1

192.168.12.52 k8s-m-02 m2

192.168.12.53 k8s-m-03 m3

192.168.12.54 k8s-n-01 n1

192.168.12.55 k8s-n-02 n2

192.168.12.56 k8s-m-vip vip
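If this step is re-run, appending entries again leaves duplicate lines in /etc/hosts. A minimal sketch that adds each entry from the node plan table only when its hostname is not yet present; the function name and passing the file path as an argument are assumptions for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the cluster entries from the node plan to a hosts file,
# skipping any hostname that is already listed (safe to run more than once).
add_cluster_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local ip name alias
    while read -r ip name alias; do
        # -w matches the hostname as a whole word, -F treats it as a literal string
        if ! grep -qwF -- "$name" "$hosts_file"; then
            echo "$ip $name $alias" >> "$hosts_file"
        fi
    done <<'EOF'
192.168.12.51 k8s-m-01 m1
192.168.12.52 k8s-m-02 m2
192.168.12.53 k8s-m-03 m3
192.168.12.54 k8s-n-01 n1
192.168.12.55 k8s-n-02 n2
192.168.12.56 k8s-m-vip vip
EOF
}
```

Running `add_cluster_hosts` twice leaves exactly one line per host.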

4. Configure passwordless SSH login and distribute the public key (m01 node)

sed -i 's/#UseDNS yes/UseDNS no/g' /etc/ssh/sshd_config

systemctl restart sshd

ssh-keygen -t rsa

for i in m1 m2 m3;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i;done

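5. Synchronize the cluster time

Time is an important concept in a cluster: once the clock on one machine drifts away from the rest of the cluster, the cluster can run into many problems (for example, TLS certificates being rejected as not yet valid). So synchronize the time on every machine before deploying the cluster.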

yum install ntpdate -y

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

ntpdate time2.aliyun.com

# Add a cron job

crontab -e

*/1 * * * * ntpdate time2.aliyun.com > /dev/null 2>&1

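6. Configure mirror repositories

#1. By default CentOS uses the official yum repositories, which are usually very slow from within China, so replace them with a mature domestic mirror such as Aliyun (used below), Tsinghua University or NetEase.

rm -rf /etc/yum.repos.d/*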

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

#2. Rebuild the cache

yum clean all

yum makecache

7. Update the system

yum update -y --exclude='kernel*'

8. Install common base packages

yum install wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp -y

9. Upgrade the kernel (Docker has fairly high kernel requirements; 4.4+ is recommended)

# CentOS 8 does not need a kernel upgrade

cd /opt/

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.137-1.el7.elrepo.x86_64.rpm

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.137-1.el7.elrepo.x86_64.rpm

10. Install the new kernel

yum localinstall -y kernel-lt*

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg # make the newest kernel (entry 0) the default

grubby --default-kernel # show the kernel that will boot by default

reboot # reboot

11. Install IPVS

# IPVS is a kernel module with very high forwarding performance, so it is generally our first choice for kube-proxy

yum install -y conntrack-tools ipvsadm ipset conntrack libseccomp

vim /etc/sysconfig/modules/ipvs.modules # script that loads the IPVS modules

#!/bin/bash
ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
# Load each module only if it exists for the running kernel
for kernel_module in ${ipvs_modules}; do
    /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
    if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
    fi
done

# Make the script executable and run it (all nodes)

[root@k8s-n-01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
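`lsmod | grep ip_vs` shows what is loaded, but not what is missing. A small sketch that lists the requested modules that are not loaded, reading the same data lsmod reads (`/proc/modules`); the function name is hypothetical and the file path is a parameter only so the helper can be tested:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each requested module that is NOT currently loaded.
# Usage: missing_modules <modules file, normally /proc/modules> <module>...
missing_modules() {
    local modules_file="$1"
    shift
    local mod
    for mod in "$@"; do
        # The first column of /proc/modules is the module name
        awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }' "$modules_file" \
            || echo "$mod"
    done
}
```

For example, `missing_modules /proc/modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack` prints nothing when all of them are loaded.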

12. Tune kernel parameters

# The goal is to make the kernel settings better suited to running kubernetes

vim /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
# fs.may_detach_mounts only exists on the stock CentOS/RHEL 7 kernel
fs.may_detach_mounts = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.netfilter.nf_conntrack_max = 65536
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384

modprobe br_netfilter # the net.bridge.* keys only exist once this module is loaded

sysctl --system # apply immediately
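`sysctl --system` typically reports unknown keys and carries on, so typos and duplicates in a drop-in file are easy to miss. A sketch that checks the file before applying it: it lists keys that appear more than once and keys with no matching entry under /proc/sys (usually a typo, or a module such as br_netfilter or nf_conntrack that is not loaded yet). The function names are hypothetical, and the /proc/sys root is a parameter only for testing:

```shell
#!/usr/bin/env bash
# Print the key of every non-comment "key = value" line, with whitespace removed
sysctl_keys() {
    awk -F'=' '/^[[:space:]]*[^#;[:space:]]/ { gsub(/[[:space:]]/, "", $1); print $1 }' "$1"
}

# Keys defined more than once in the file
duplicate_sysctl_keys() {
    sysctl_keys "$1" | sort | uniq -d
}

# Keys with no matching file under /proc/sys (the dots in a key map to "/")
unknown_sysctl_keys() {
    local conf="$1" root="${2:-/proc/sys}" key
    sysctl_keys "$conf" | while read -r key; do
        [ -e "$root/$(printf '%s' "$key" | tr . /)" ] || echo "$key"
    done
}
```

Run `duplicate_sysctl_keys /etc/sysctl.d/k8s.conf` and `unknown_sysctl_keys /etc/sysctl.d/k8s.conf` before `sysctl --system`; empty output from both means the file is clean.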

IV. Install Docker (all nodes)

# 1). If Docker was installed before, remove it first

sudo yum remove docker docker-common docker-selinux docker-engine -y

# 2). Install the prerequisite packages

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# 3). Add the yum repository

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

or

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# 4). Point the repository at the mirror

sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

# 5). Refresh the yum package index

yum makecache fast

# 6). Install Docker

yum install docker-ce-19.03.9 -y # pin the version; ce is the Community Edition, ee the Enterprise Edition

# 7). Docker tuning (configure a registry mirror)

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

# 8). Start Docker now and enable it at boot

systemctl enable --now docker

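V. Generate and issue the cluster certificates (master01 node)

kubernetes has many components, which talk to each other over HTTP/gRPC to deploy and manage applications in the cluster. These connections are secured with TLS, so every component needs a certificate signed by a CA that the other components trust.

1. Prepare the certificate tools

# cfssl lets you describe the CA, validity period and other settings in JSON files, which makes issuing certificates more efficient and easier to automate.

# cfssl is an open-source certificate management tool written in Go; cfssljson takes the JSON output of cfssl and writes the certificate, key, CSR and bundle to files.

# Download the certificate tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

# Make them executable

chmod +x cfssljson_linux-amd64

chmod +x cfssl_linux-amd64

# Rename them and move them to /usr/local/bin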

mv cfssljson_linux-amd64 cfssljson

mv cfssl_linux-amd64 cfssl

mv cfssljson cfssl /usr/local/bin

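2. Create the root CA configuration file

# Root certificate: the basis of trust between a CA and its users; a user's digital certificate is only valid if it chains to a trusted root certificate.

# A certificate contains three parts: the subject information, the subject's public key, and the signature. The CA approves, issues, archives and revokes digital certificates; because every certificate it issues carries the CA's digital signature, nobody other than the CA can modify it without detection.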

mkdir -p /opt/cert/ca

cat > /opt/cert/ca/ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "8760h"

}

}

}

}

EOF

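Explanation: default: the default policy; certificates are valid for 1 year (8760h) by default

profiles: defines usage scenarios, each with its own expiry, usages and other parameters

signing: the certificate can be used to sign other certificates (see the generated ca.pem)

server auth: a client can use this CA to verify the certificate presented by a server

client auth: a server can use this CA to verify the certificate presented by a client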

3. Create the root certificate signing request (CSR) file

cat > /opt/cert/ca/ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names":[{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}]

}

EOF

Explanation: C: country

ST: state or province

L: city

O: organization

OU: organizational unit

4. Generate the root certificate

[root@k8s-m-01 ~]# cd /opt/cert/ca

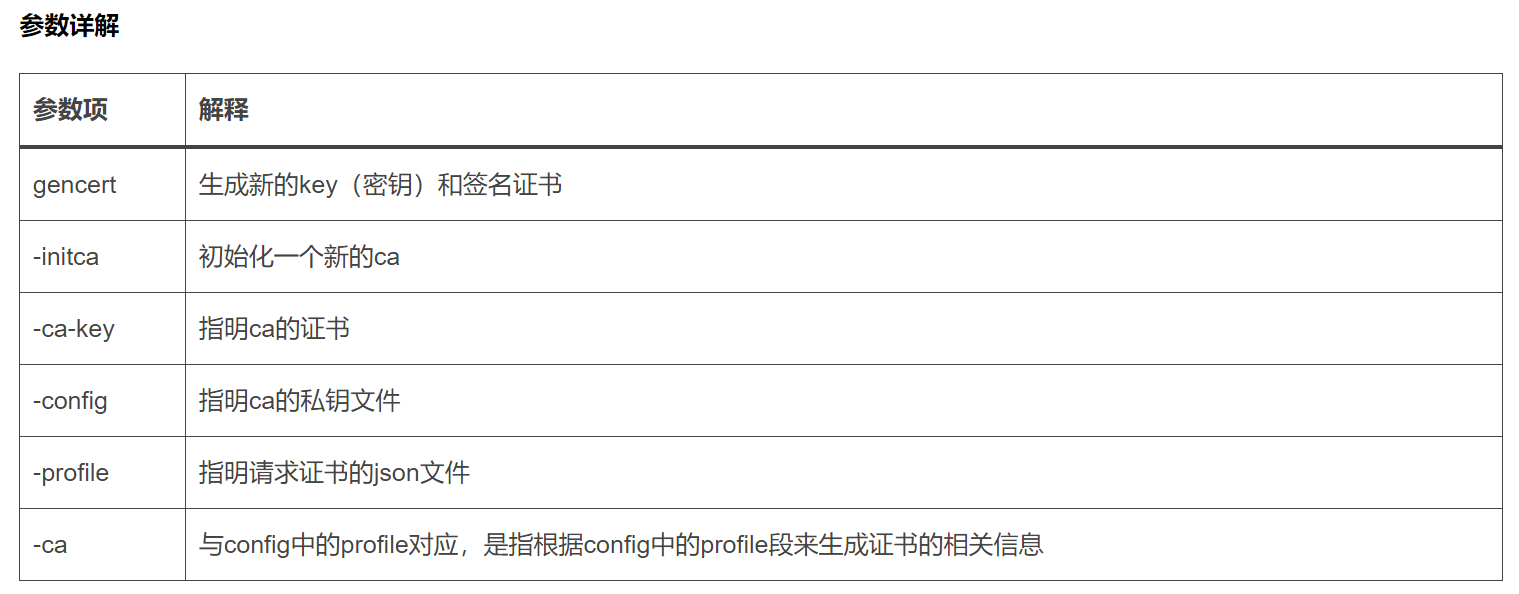

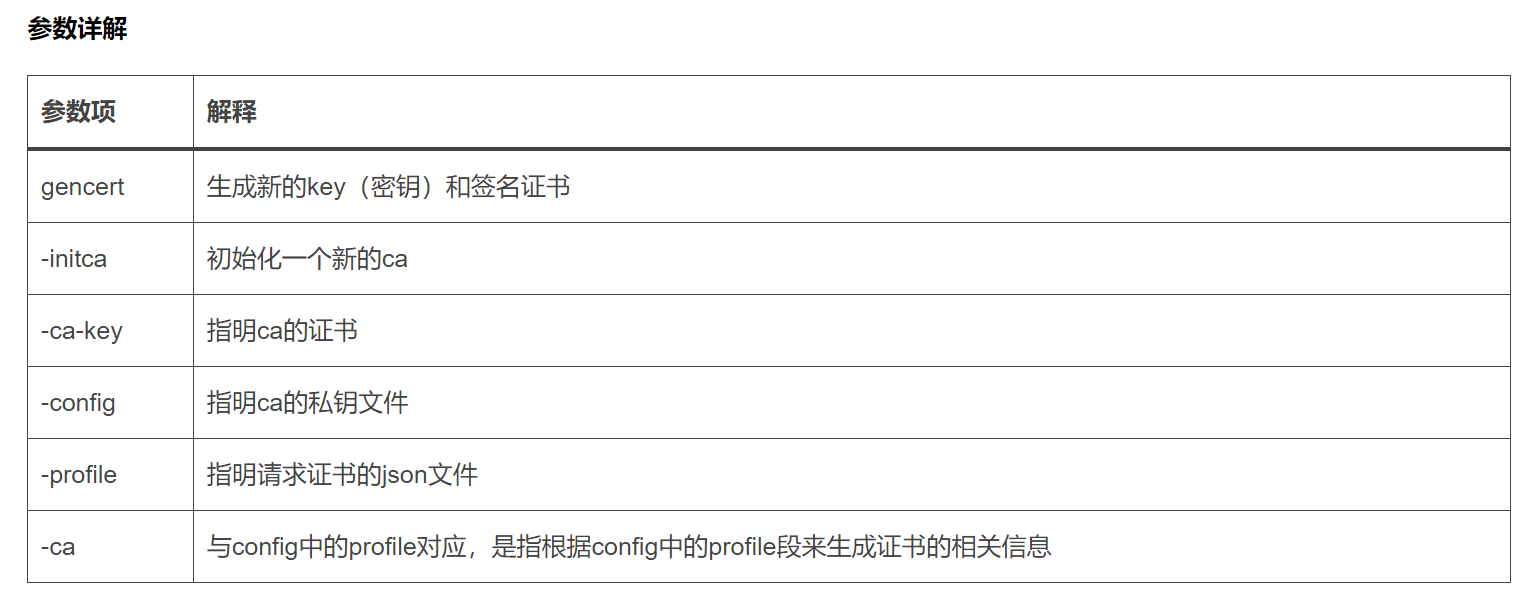

[root@k8s-m-01 /opt/cert/ca]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca - # gencert generates a new key and signed certificate; -initca initializes a new CA

2021/03/26 17:34:55 [INFO] generating a new CA key and certificate from CSR

2021/03/26 17:34:55 [INFO] generate received request

2021/03/26 17:34:55 [INFO] received CSR

2021/03/26 17:34:55 [INFO] generating key: rsa-2048

2021/03/26 17:34:56 [INFO] encoded CSR

2021/03/26 17:34:56 [INFO] signed certificate with serial number 661764636777400005196465272245416169967628201792

[root@k8s-m-01 /opt/cert/ca]# ll

total 20

-rw-r--r-- 1 root root 285 Mar 26 17:34 ca-config.json

-rw-r--r-- 1 root root 960 Mar 26 17:34 ca.csr

-rw-r--r-- 1 root root 153 Mar 26 17:34 ca-csr.json

-rw------- 1 root root 1675 Mar 26 17:34 ca-key.pem

-rw-r--r-- 1 root root 1281 Mar 26 17:34 ca.pem
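To check what was actually generated (subject, issuer and validity window), the certificate can be inspected with the openssl CLI. A minimal sketch, assuming openssl is installed on master01; the function name is illustrative. For a self-signed root CA the subject and issuer lines should be identical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the subject, issuer and validity dates of a PEM certificate
show_cert() {
    openssl x509 -in "$1" -noout -subject -issuer -dates
}
```

For example: `show_cert /opt/cert/ca/ca.pem`.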

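VI. Deploy the ETCD cluster (master01 node)

ETCD is a Raft-based distributed key-value store, commonly used for service discovery, shared configuration and concurrency control (such as leader election and distributed locks). kubernetes uses etcd to store all of its state and data!

1. Node plan

| IP | Hostname | Alias (hosts entry) |
|---|---|---|
| 192.168.12.51 | k8s-m-01 | m1 |
| 192.168.12.52 | k8s-m-02 | m2 |
| 192.168.12.53 | k8s-m-03 | m3 |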

2. Create the ETCD cluster certificate

[root@k8s-m-01 ca]# vim /etc/hosts

172.16.1.51 k8s-m-01 m1 etcd-1

172.16.1.52 k8s-m-02 m2 etcd-2

172.16.1.53 k8s-m-03 m3 etcd-3

172.16.1.54 k8s-n-01 n1

172.16.1.55 k8s-n-02 n2

# Virtual IP (VIP)

172.16.1.56 k8s-m-vip vip

# Note: the etcd-N aliases are optional, since etcd runs on the master nodes anyway

mkdir -p /opt/cert/etcd

cd /opt/cert/etcd

cat > etcd-csr.json << EOF

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "ShangHai",

"L": "ShangHai"

}

]

}

EOF

3. Generate the ETCD certificate

cd /opt/cert/etcd

[root@k8s-m-01 /opt/cert/etcd]# cfssl gencert -ca=../ca/ca.pem -ca-key=../ca/ca-key.pem -config=../ca/ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

2021/03/26 17:38:57 [INFO] generate received request

2021/03/26 17:38:57 [INFO] received CSR

2021/03/26 17:38:57 [INFO] generating key: rsa-2048

2021/03/26 17:38:58 [INFO] encoded CSR

2021/03/26 17:38:58 [INFO] signed certificate with serial number 179909685000914921289186132666286329014949215773

2021/03/26 17:38:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").
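The WARNING above shows up even though etcd-csr.json does contain a `hosts` list; in cfssl R1.2 it appears to be tied to the missing `-hostname` command-line flag rather than to the CSR. To confirm which addresses actually ended up in the certificate, print its Subject Alternative Name extension. A sketch using openssl; the function name is illustrative:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Subject Alternative Name entries of a PEM certificate
show_sans() {
    openssl x509 -in "$1" -noout -text \
        | grep -A1 'X509v3 Subject Alternative Name' \
        | tail -n +2
}
```

`show_sans /opt/cert/etcd/etcd.pem` should list 127.0.0.1 and 172.16.1.51 to 172.16.1.56.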

4. Distribute the ETCD certificates

[root@k8s-m-01 /opt/cert/etcd]# for ip in m1 m2 m3;do

ssh root@${ip} "mkdir -pv /etc/etcd/ssl"

scp ../ca/ca*.pem root@${ip}:/etc/etcd/ssl

scp ./etcd*.pem root@${ip}:/etc/etcd/ssl

done

[root@k8s-m-01 /opt/cert/etcd]# ll /etc/etcd/ssl/

total 16

-rw------- 1 root root 1675 Mar 26 17:41 ca-key.pem

-rw-r--r-- 1 root root 1281 Mar 26 17:41 ca.pem

-rw------- 1 root root 1675 Mar 26 17:41 etcd-key.pem

-rw-r--r-- 1 root root 1379 Mar 26 17:41 etcd.pem

5. Deploy ETCD

# Download the ETCD package

wget https://mirrors.huaweicloud.com/etcd/v3.3.24/etcd-v3.3.24-linux-amd64.tar.gz

# Extract it

tar xf etcd-v3.3.24-linux-amd64.tar.gz

# Copy the binaries to every master node

for i in m1 m2 m3;do scp ./etcd-v3.3.24-linux-amd64/etcd* root@$i:/usr/local/bin/;done

# Check the etcd version

[root@k8s-m-01 /opt/etcd-v3.3.24-linux-amd64]# etcd --version

etcd Version: 3.3.24

Git SHA: bdd57848d

Go Version: go1.12.17

Go OS/Arch: linux/amd64

6. Register the ETCD service (all three master nodes)

- Run the script on all three master nodes at about the same time; the new cluster only becomes healthy once a majority of its members are up

- The script reads the hostname and IP into variables, so the same script registers the service on each master node

[root@k8s-m-01 ~]# vim etcd.sh

# Create the config directory
mkdir -pv /etc/kubernetes/conf/etcd

# Define variables (hostname -i must resolve to this node's 172.16.1.x address via /etc/hosts)
ETCD_NAME=$(hostname)
INTERNAL_IP=$(hostname -i)
INITIAL_CLUSTER=k8s-m-01=https://172.16.1.51:2380,k8s-m-02=https://172.16.1.52:2380,k8s-m-03=https://172.16.1.53:2380

# Write the systemd unit file (each \\ becomes a single \ line continuation in the generated file)
cat << EOF | sudo tee /usr/lib/systemd/system/etcd.service
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
ExecStart=/usr/local/bin/etcd \\
  --name ${ETCD_NAME} \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-peer-urls https://${INTERNAL_IP}:2380 \\
  --listen-client-urls https://${INTERNAL_IP}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${INTERNAL_IP}:2379 \\
  --initial-cluster-token etcd-cluster \\
  --initial-cluster ${INITIAL_CLUSTER} \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF

# Reload systemd so it picks up the new unit
systemctl daemon-reload
# Start ETCD and enable it at boot
systemctl enable --now etcd.service
# Check the ETCD service
systemctl status etcd.service

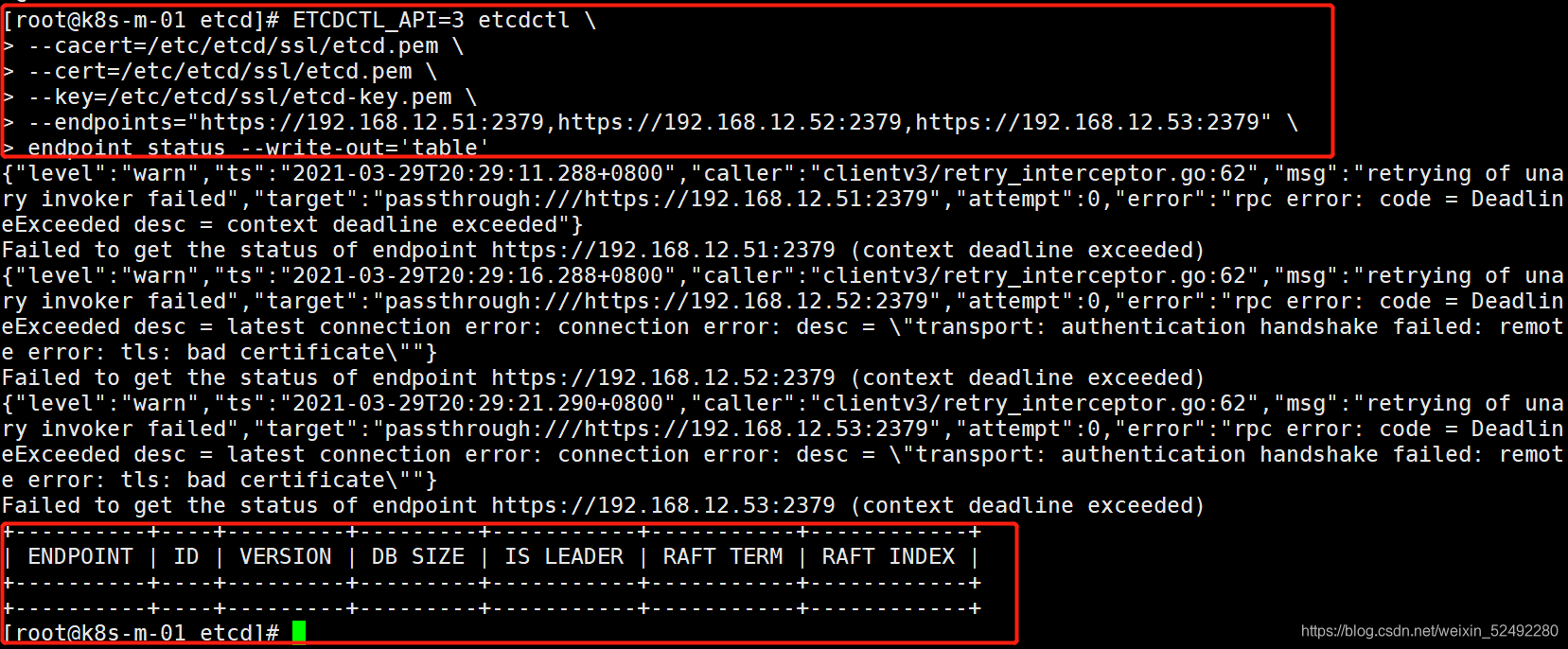
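The INITIAL_CLUSTER value in etcd.sh is easy to mistype when nodes are added or renamed. A sketch that builds it from name=IP pairs; the function name is hypothetical, and it assumes the https scheme and peer port 2380 used in the unit file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn name=ip pairs into an etcd --initial-cluster value
build_initial_cluster() {
    local pair out=""
    for pair in "$@"; do
        # name=ip  ->  name=https://ip:2380, comma-separated after the first entry
        out+="${out:+,}${pair%%=*}=https://${pair#*=}:2380"
    done
    printf '%s\n' "$out"
}
```

Usage: `INITIAL_CLUSTER=$(build_initial_cluster k8s-m-01=172.16.1.51 k8s-m-02=172.16.1.52 k8s-m-03=172.16.1.53)` produces the same string that etcd.sh hard-codes.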

7. Test the ETCD service

- Running this on one master node is enough (e.g. master01)

# Method 1

ETCDCTL_API=3 etcdctl \
  --cacert=/etc/etcd/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --endpoints="https://172.16.1.51:2379,https://172.16.1.52:2379,https://172.16.1.53:2379" \
  endpoint status --write-out='table'

Result:

+--------------------------+------------------+---------+---------+-----------+-----------+------------+
| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |
+--------------------------+------------------+---------+---------+-----------+-----------+------------+
| https://172.16.1.51:2379 | 2760f98de9dc762 | 3.3.24 | 20 kB | true | 55 | 9 |
| https://172.16.1.52:2379 | 18273711b3029818 | 3.3.24 | 20 kB | false | 55 | 9 |
| https://172.16.1.53:2379 | f42951486b449d48 | 3.3.24 | 20 kB | false | 55 | 9 |
+--------------------------+------------------+---------+---------+-----------+-----------+------------+

# Method 2

ETCDCTL_API=3 etcdctl \
  --cacert=/etc/etcd/ssl/ca.pem \
  --cert=/etc/etcd/ssl/etcd.pem \
  --key=/etc/etcd/ssl/etcd-key.pem \
  --endpoints="https://172.16.1.51:2379,https://172.16.1.52:2379,https://172.16.1.53:2379" \
  member list --write-out='table'

Result:

+------------------+---------+----------+--------------------------+--------------------------+
| ID | STATUS | NAME | PEER ADDRS | CLIENT ADDRS |
+------------------+---------+----------+--------------------------+--------------------------+
| 2760f98de9dc762 | started | k8s-m-01 | https://172.16.1.51:2380 | https://172.16.1.51:2379 |
| 18273711b3029818 | started | k8s-m-02 | https://172.16.1.52:2380 | https://172.16.1.52:2379 |
| f42951486b449d48 | started | k8s-m-03 | https://172.16.1.53:2380 | https://172.16.1.53:2379 |
+------------------+---------+----------+--------------------------+--------------------------+

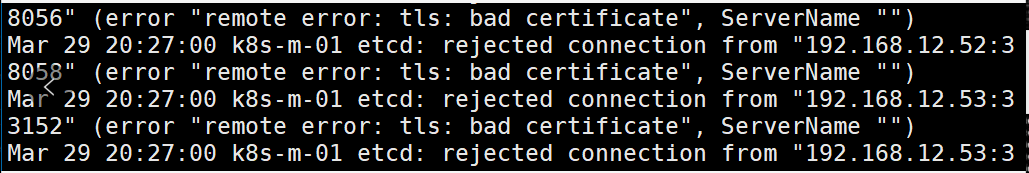

Note:

If the test fails with an error like the one in the screenshot, stop etcd, delete its cached data and start it again:

[root@k8s-m-01 ~]# cd /var/lib/etcd/

[root@k8s-m-01 etcd]# ll

total 0

drwx------ 4 root root 29 Mar 29 20:37 member

[root@k8s-m-01 etcd]# systemctl stop etcd

[root@k8s-m-01 etcd]# rm -rf member/ # delete the cached data

[root@k8s-m-01 etcd]# systemctl start etcd

[root@k8s-m-01 etcd]# systemctl status etcd

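VII. Deploy the master nodes

This section brings up each of the components on the master nodes

(kube-apiserver, controller manager, scheduler, flannel, etcd, kubelet, kube-proxy, DNS).

1. Node plan

Same as above

2. Create the cluster CA certificate (master01 node)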

[root@kubernetes-master-01 k8s]# mkdir /opt/cert/k8s

[root@kubernetes-master-01 k8s]# cd /opt/cert/k8s

[root@kubernetes-master-01 k8s]# pwd

/opt/cert/k8s

[root@kubernetes-master-01 k8s]# cat > ca-config.json << EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@kubernetes-master-01 k8s]# cat > ca-csr.json << EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

[root@kubernetes-master-01 k8s]# ll

total 8

-rw-r--r-- 1 root root 294 Sep 13 19:59 ca-config.json

-rw-r--r-- 1 root root 212 Sep 13 20:01 ca-csr.json

# Generate the CA certificate

[root@kubernetes-master-01 k8s]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

2020/09/13 20:01:45 [INFO] generating a new CA key and certificate from CSR

2020/09/13 20:01:45 [INFO] generate received request

2020/09/13 20:01:45 [INFO] received CSR

2020/09/13 20:01:45 [INFO] generating key: rsa-2048

2020/09/13 20:01:46 [INFO] encoded CSR

2020/09/13 20:01:46 [INFO] signed certificate with serial number 588993429584840635805985813644877690042550093427

[root@kubernetes-master-01 k8s]# ll

total 20

-rw-r--r-- 1 root root 294 Sep 13 19:59 ca-config.json

-rw-r--r-- 1 root root 960 Sep 13 20:01 ca.csr

-rw-r--r-- 1 root root 212 Sep 13 20:01 ca-csr.json

-rw------- 1 root root 1679 Sep 13 20:01 ca-key.pem

-rw-r--r-- 1 root root 1273 Sep 13 20:01 ca.pem

3. Create the component certificates

1) Create the kube-apiserver certificate (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# mkdir -p /opt/cert/k8s

[root@k8s-m-01 /opt/cert/k8s]# cd /opt/cert/k8s

# hosts: localhost + the master node IPs + the etcd node IPs + the load balancer VIP (172.16.1.56) + the first valid IP of the Service range (10.96.0.1) + the default names Kubernetes uses.

[root@k8s-m-01 /opt/cert/k8s]# cat > server-csr.json << EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai"

}

]

}

EOF

# Generate the certificate

[root@k8s-m-01 /opt/cert/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

2021/03/29 09:31:02 [INFO] generate received request

2021/03/29 09:31:02 [INFO] received CSR

2021/03/29 09:31:02 [INFO] generating key: rsa-2048

2021/03/29 09:31:02 [INFO] encoded CSR

2021/03/29 09:31:02 [INFO] signed certificate with serial number 475285860832876170844498652484239182294052997083

2021/03/29 09:31:02 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 /opt/cert/k8s]# ll

total 36

-rw-r--r-- 1 root root 294 Mar 29 09:13 ca-config.json

-rw-r--r-- 1 root root 960 Mar 29 09:16 ca.csr

-rw-r--r-- 1 root root 214 Mar 29 09:14 ca-csr.json

-rw------- 1 root root 1675 Mar 29 09:16 ca-key.pem

-rw-r--r-- 1 root root 1281 Mar 29 09:16 ca.pem

-rw-r--r-- 1 root root 1245 Mar 29 09:31 server.csr

-rw-r--r-- 1 root root 603 Mar 29 09:29 server-csr.json

-rw------- 1 root root 1675 Mar 29 09:31 server-key.pem

-rw-r--r-- 1 root root 1574 Mar 29 09:31 server.pem
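The 10.96.0.1 entry in server-csr.json comes from the Service CIDR: kube-apiserver gives the built-in `kubernetes` Service the first address of that range, and in-cluster clients reach the API server at that IP, so it has to be in the certificate. Assuming the common default range 10.96.0.0/12 (the range itself is set on kube-apiserver and is not shown in this section), the address can be derived as below; the function name is hypothetical and only IPv4 is handled:

```shell
#!/usr/bin/env bash
# Hypothetical helper: first usable IPv4 address of a CIDR (network address + 1)
first_service_ip() {
    local cidr="$1" ip prefix a b c d n mask
    ip="${cidr%/*}"
    prefix="${cidr#*/}"
    IFS=. read -r a b c d <<< "$ip"
    # Pack the four octets into one 32-bit integer
    n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    # Keep only the network bits, then add 1
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    n=$(( (n & mask) + 1 ))
    printf '%d.%d.%d.%d\n' $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
        $(( (n >> 8) & 255 )) $(( n & 255 ))
}
```

`first_service_ip 10.96.0.0/12` prints 10.96.0.1; if a different Service range is used, the printed address is the one to put in the hosts list instead.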

2) Create the kube-controller-manager certificate (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# cat > kube-controller-manager-csr.json << EOF

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "system:kube-controller-manager",

"OU": "System"

}

]

}

EOF

# Generate the certificate

# The CA paths must be correct, otherwise generation fails!

[root@k8s-m-01 /opt/cert/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2021/03/29 09:33:31 [INFO] generate received request

2021/03/29 09:33:31 [INFO] received CSR

2021/03/29 09:33:31 [INFO] generating key: rsa-2048

2021/03/29 09:33:31 [INFO] encoded CSR

2021/03/29 09:33:31 [INFO] signed certificate with serial number 159207911625502250093013220742142932946474251607

2021/03/29 09:33:31 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 52

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

3) Create the kube-scheduler certificate (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# cat > kube-scheduler-csr.json << EOF

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"172.16.1.51",

"172.16.1.52",

"172.16.1.53",

"172.16.1.54",

"172.16.1.55",

"172.16.1.56"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "system:kube-scheduler",

"OU": "System"

}

]

}

EOF

# Generate the certificate

[root@k8s-m-01 /opt/cert/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2021/03/29 09:34:57 [INFO] generate received request

2021/03/29 09:34:57 [INFO] received CSR

2021/03/29 09:34:57 [INFO] generating key: rsa-2048

2021/03/29 09:34:58 [INFO] encoded CSR

2021/03/29 09:34:58 [INFO] signed certificate with serial number 38647006614878532408684142936672497501281226307

2021/03/29 09:34:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 68

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

[root@k8s-m-01 k8s]# ll # list the certificates

total 84

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Jul 16 19:31 kube-proxy.csr

-rw-r--r-- 1 root root 294 Jul 16 19:31 kube-proxy-csr.json

-rw------- 1 root root 1679 Jul 16 19:31 kube-proxy-key.pem

-rw-r--r-- 1 root root 1383 Jul 16 19:31 kube-proxy.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

4) Create the kube-proxy certificate (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# cat > kube-proxy-csr.json << EOF

{

"CN":"system:kube-proxy",

"hosts":[],

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"ShangHai",

"ST":"ShangHai",

"O":"system:kube-proxy",

"OU":"System"

}

]

}

EOF

[root@k8s-m-01 /opt/cert/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

2021/03/29 09:37:44 [INFO] generate received request

2021/03/29 09:37:44 [INFO] received CSR

2021/03/29 09:37:44 [INFO] generating key: rsa-2048

2021/03/29 09:37:44 [INFO] encoded CSR

2021/03/29 09:37:44 [INFO] signed certificate with serial number 703321465371340829919693910125364764243453439484

2021/03/29 09:37:44 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

[root@k8s-m-01 k8s]# ll # list the certificates

total 100

-rw-r--r-- 1 root root 1009 Jul 16 19:33 admin.csr

-rw-r--r-- 1 root root 260 Jul 16 19:33 admin-csr.json

-rw------- 1 root root 1679 Jul 16 19:33 admin-key.pem

-rw-r--r-- 1 root root 1363 Jul 16 19:33 admin.pem

-rw-r--r-- 1 root root 294 Jul 16 19:16 ca-config.json

-rw-r--r-- 1 root root 960 Jul 16 19:17 ca.csr

-rw-r--r-- 1 root root 214 Jul 16 19:16 ca-csr.json

-rw------- 1 root root 1679 Jul 16 19:17 ca-key.pem

-rw-r--r-- 1 root root 1281 Jul 16 19:17 ca.pem

-rw-r--r-- 1 root root 1163 Jul 16 19:24 kube-controller-manager.csr

-rw-r--r-- 1 root root 493 Jul 16 19:23 kube-controller-manager-csr.json

-rw------- 1 root root 1675 Jul 16 19:24 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1497 Jul 16 19:24 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Jul 16 19:31 kube-proxy.csr

-rw-r--r-- 1 root root 294 Jul 16 19:31 kube-proxy-csr.json

-rw------- 1 root root 1679 Jul 16 19:31 kube-proxy-key.pem

-rw-r--r-- 1 root root 1383 Jul 16 19:31 kube-proxy.pem

-rw-r--r-- 1 root root 1135 Jul 16 19:29 kube-scheduler.csr

-rw-r--r-- 1 root root 473 Jul 16 19:29 kube-scheduler-csr.json

-rw------- 1 root root 1679 Jul 16 19:29 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1468 Jul 16 19:29 kube-scheduler.pem

-rw-r--r-- 1 root root 1245 Jul 16 19:19 server.csr

-rw-r--r-- 1 root root 591 Jul 16 19:19 server-csr.json

-rw------- 1 root root 1679 Jul 16 19:19 server-key.pem

-rw-r--r-- 1 root root 1574 Jul 16 19:19 server.pem

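5) Create the cluster administrator certificate (master01 node)

# So that the cluster client tool (kubectl) can access the cluster securely, create a client certificate for it; O=system:masters puts it in the group that Kubernetes grants full cluster permissions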

[root@k8s-m-01 /opt/cert/k8s]# cat > admin-csr.json << EOF

{

"CN":"admin",

"key":{

"algo":"rsa",

"size":2048

},

"names":[

{

"C":"CN",

"L":"BeiJing",

"ST":"BeiJing",

"O":"system:masters",

"OU":"System"

}

]

}

EOF

[root@k8s-m-01 /opt/cert/k8s]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2021/03/29 09:36:26 [INFO] generate received request

2021/03/29 09:36:26 [INFO] received CSR

2021/03/29 09:36:26 [INFO] generating key: rsa-2048

2021/03/29 09:36:26 [INFO] encoded CSR

2021/03/29 09:36:26 [INFO] signed certificate with serial number 258862825289855717894394114308507213391711602858

2021/03/29 09:36:26 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for

websites. For more information see the Baseline Requirements for the Issuance and Management

of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);

specifically, section 10.2.3 ("Information Requirements").

6) Distribute all the certificates (master01 node)

# Certificates the master nodes need: ca, kube-apiserver, kube-controller-manager, kube-scheduler, the user (admin) certificate, and the Etcd certificates.

[root@k8s-m-01 /opt/cert/k8s]# mkdir -pv /etc/kubernetes/ssl

[root@k8s-m-01 /opt/cert/k8s]# cp -p ./{ca*pem,server*pem,kube-controller-manager*pem,kube-scheduler*.pem,kube-proxy*pem,admin*.pem} /etc/kubernetes/ssl # keep a local copy, which is then sent to every master

[root@k8s-m-01 /opt/cert/k8s]# for i in m1 m2 m3;do

ssh root@$i "mkdir -pv /etc/kubernetes/ssl"

scp /etc/kubernetes/ssl/* root@$i:/etc/kubernetes/ssl

done

# Check the certificates (all nodes)

[root@k8s-m-01 k8s]# ll /etc/kubernetes/ssl

total 48

-rw------- 1 root root 1679 Aug 1 14:23 admin-key.pem

-rw-r--r-- 1 root root 1359 Aug 1 14:23 admin.pem

-rw------- 1 root root 1679 Aug 1 14:23 ca-key.pem

-rw-r--r-- 1 root root 1273 Aug 1 14:23 ca.pem

-rw------- 1 root root 1679 Aug 1 14:23 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1489 Aug 1 14:23 kube-controller-manager.pem

-rw------- 1 root root 1679 Aug 1 14:23 kube-proxy-key.pem

-rw-r--r-- 1 root root 1379 Aug 1 14:23 kube-proxy.pem

-rw------- 1 root root 1675 Aug 1 14:23 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1464 Aug 1 14:23 kube-scheduler.pem

-rw------- 1 root root 1679 Aug 1 14:23 server-key.pem

-rw-r--r-- 1 root root 1570 Aug 1 14:23 server.pem
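Before moving on, it is worth confirming that each certificate really belongs to its key; a mix-up (say, kube-scheduler.pem paired with the wrong key file) otherwise only surfaces later as a TLS error. A sketch assuming RSA keys, as in every CSR above, and the `<name>.pem` / `<name>-key.pem` naming used here; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# For RSA, a certificate and its private key share the same modulus
cert_matches_key() {
    local cert_mod key_mod
    cert_mod=$(openssl x509 -in "$1" -noout -modulus 2>/dev/null) || return 1
    key_mod=$(openssl rsa -in "$2" -noout -modulus 2>/dev/null) || return 1
    [ "$cert_mod" = "$key_mod" ]
}

# Check every <name>.pem in a directory against <name>-key.pem
check_cert_pairs() {
    local dir="${1:-/etc/kubernetes/ssl}" cert key
    for cert in "$dir"/*.pem; do
        case "$cert" in *-key.pem) continue ;; esac
        key="${cert%.pem}-key.pem"
        if [ ! -f "$key" ]; then
            echo "NO-KEY $(basename "$cert")"
        elif cert_matches_key "$cert" "$key"; then
            echo "OK $(basename "$cert")"
        else
            echo "MISMATCH $(basename "$cert")"
        fi
    done
}
```

Running `check_cert_pairs /etc/kubernetes/ssl` on each master should print one `OK` line per certificate.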

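4. Write the configuration files and download the packages

1) Download the packages (master01 node)

# Create a working directory

mkdir -p /opt/data

cd /opt/data

# Download the binary components

## Option 1: download the server package

[root@k8s-m-01 /opt/data]# wget https://dl.k8s.io/v1.18.8/kubernetes-server-linux-amd64.tar.gz

## Option 2: copy it out of a container (use this if the download fails)

[root@k8s-m-01 /opt/data]# docker run -it registry.cn-hangzhou.aliyuncs.com/k8sos/k8s:v1.18.8.1 bash

## Option 3: download it from the author's blog (use this if the download fails; recommended)

[root@k8s-m-01 /opt/data]# wget http://www.mmin.xyz:81/package/k8s/kubernetes-server-linux-amd64.tar.gz

## Distribute the components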

[root@k8s-m-01 /opt/data]# tar -xf kubernetes-server-linux-amd64.tar.gz

[root@k8s-m-01 /opt/data]# cd kubernetes/server/bin

[root@kubernetes-master-01 bin]# for i in m1 m2 m3; do scp kube-apiserver kube-controller-manager kube-scheduler kubectl root@$i:/usr/local/bin/; done
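The second and third download methods above fetch the tarball from third-party locations, so it is worth checking it against the SHA512 hash published in the Kubernetes CHANGELOG download table for the release before extracting it. A minimal sketch; the function name is hypothetical, and the expected hash must be copied from the official CHANGELOG, not taken from this guide:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if <file> has the expected SHA512 hash
verify_sha512() {
    local file="$1" expected="$2"
    # sha512sum -c reads "<hash>  <file>" lines (two spaces) from stdin
    printf '%s  %s\n' "$expected" "$file" | sha512sum -c --quiet -
}
```

Usage: `verify_sha512 kubernetes-server-linux-amd64.tar.gz <sha512-from-CHANGELOG> && tar -xf kubernetes-server-linux-amd64.tar.gz`.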

# Download the other components (optional)

[root@k8s-m-01 ~]# cd /opt/data/

[root@k8s-m-01 data]# ll

total 0

[root@k8s-m-01 data]# docker cp 4511c1146868:kubernetes-server-linux-amd64.tar.gz . # copy the other packages out the same way

[root@k8s-m-01 data]# docker cp 4511c1146868:kubernetes-client-linux-amd64.tar.gz .

[root@k8s-m-01 data]# ll

total 487492

-rw-r--r-- 1 root root 14503878 Aug 18 2020 etcd-v3.3.24-linux-amd64.tar.gz

-rw-r--r-- 1 root root 9565743 Jan 29 2019 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 13237066 Aug 14 2020 kubernetes-client-linux-amd64.tar.gz

-rw-r--r-- 1 root root 97933232 Aug 14 2020 kubernetes-node-linux-amd64.tar.gz

-rw-r--r-- 1 root root 363943527 Aug 14 2020 kubernetes-server-linux-amd64.tar.gz

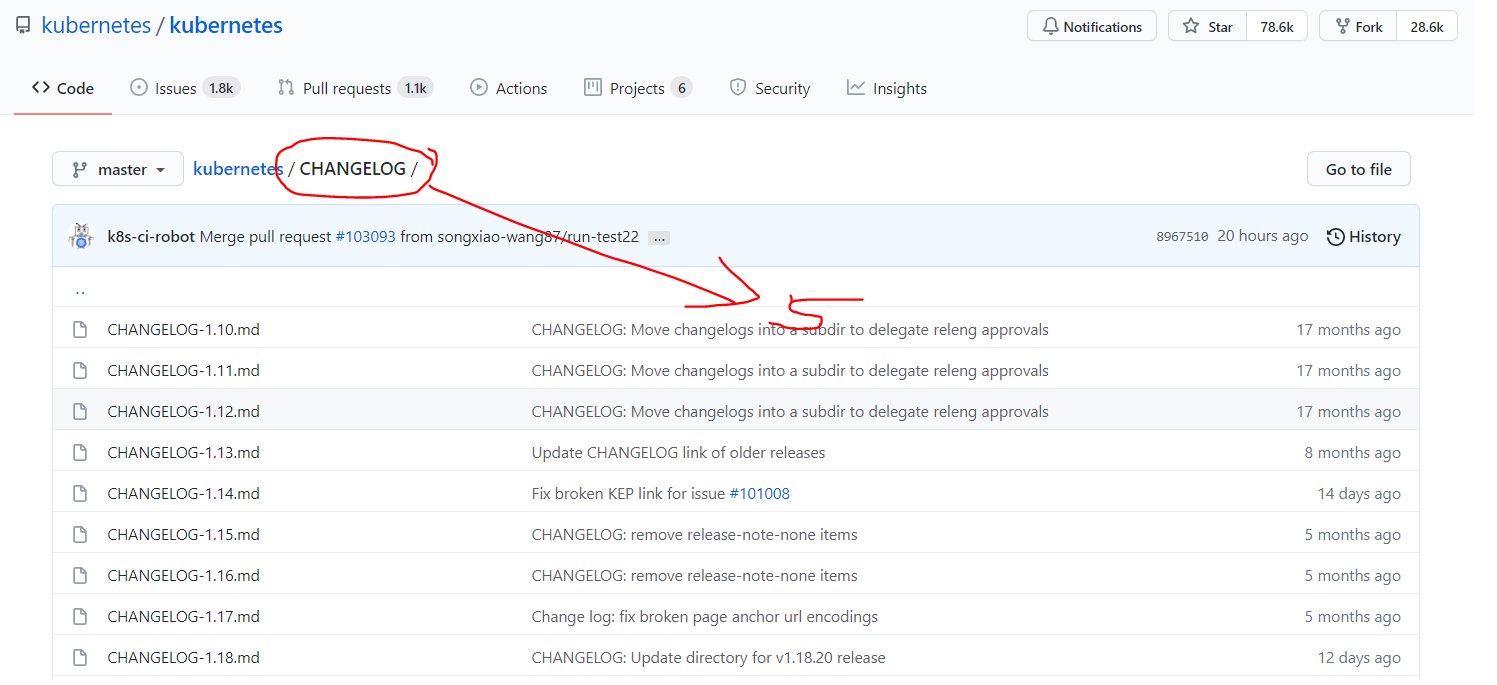

(Screenshot: the kubernetes-server package on github.com)

2) Create the cluster kubeconfig files

1> Create kube-controller-manager.kubeconfig (master01 node)

# In kubernetes, each component needs a configuration file (kubeconfig) that describes the cluster, user, namespace and authentication information

cd /opt/cert/k8s

# Create kube-controller-manager.kubeconfig

export KUBE_APISERVER="https://172.16.1.56:8443" # VIP address

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-controller-manager.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials "kube-controller-manager" \
  --client-certificate=/etc/kubernetes/ssl/kube-controller-manager.pem \
  --client-key=/etc/kubernetes/ssl/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-controller-manager.kubeconfig

# Set the context parameters (the context links the cluster and the user)
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-controller-manager" \
  --kubeconfig=kube-controller-manager.kubeconfig

# Use this context by default
kubectl config use-context default --kubeconfig=kube-controller-manager.kubeconfig
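The same four `kubectl config` calls are repeated for kube-scheduler, kube-proxy and admin, differing only in the component name. A sketch that wraps them in one function; it assumes the user name, certificate prefix and output file all share the component name, as they do in this guide, and the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around the four kubectl config steps for one component.
# Writes <name>.kubeconfig in the current directory, using
# /etc/kubernetes/ssl/<name>.pem and <name>-key.pem as client credentials.
make_kubeconfig() {
    local name="$1"
    local out="${name}.kubeconfig"
    local ssl=/etc/kubernetes/ssl
    local server="${KUBE_APISERVER:-https://172.16.1.56:8443}"

    kubectl config set-cluster kubernetes \
        --certificate-authority="$ssl/ca.pem" --embed-certs=true \
        --server="$server" --kubeconfig="$out" &&
    kubectl config set-credentials "$name" \
        --client-certificate="$ssl/$name.pem" --client-key="$ssl/$name-key.pem" \
        --embed-certs=true --kubeconfig="$out" &&
    kubectl config set-context default \
        --cluster=kubernetes --user="$name" --kubeconfig="$out" &&
    kubectl config use-context default --kubeconfig="$out"
}
```

Usage: `cd /opt/cert/k8s && for c in kube-controller-manager kube-scheduler kube-proxy admin; do make_kubeconfig "$c"; done`.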

2> Create kube-scheduler.kubeconfig (master01 node)

# Create kube-scheduler.kubeconfig

export KUBE_APISERVER="https://172.16.1.56:8443"

# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-scheduler.kubeconfig

# Set the client authentication parameters
kubectl config set-credentials "kube-scheduler" \
  --client-certificate=/etc/kubernetes/ssl/kube-scheduler.pem \
  --client-key=/etc/kubernetes/ssl/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-scheduler.kubeconfig

# Set the context parameters (the context links the cluster and the user)
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-scheduler" \
  --kubeconfig=kube-scheduler.kubeconfig

# Use this context by default
kubectl config use-context default --kubeconfig=kube-scheduler.kubeconfig

3> Create the kube-proxy.kubeconfig file (master01 node)

# Create kube-proxy.kubeconfig
export KUBE_APISERVER="https://192.168.12.56:8443"
# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kube-proxy.kubeconfig
# Set the client authentication parameters
kubectl config set-credentials "kube-proxy" \
  --client-certificate=/etc/kubernetes/ssl/kube-proxy.pem \
  --client-key=/etc/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=kube-proxy.kubeconfig
# Set the context parameters (the context ties the cluster and the user together)
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kube-proxy" \
  --kubeconfig=kube-proxy.kubeconfig
# Make this context the default
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

4> Create the cluster-admin kubeconfig (master01 node)

export KUBE_APISERVER="https://192.168.12.56:8443"
# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=admin.kubeconfig
# Set the client authentication parameters
kubectl config set-credentials "admin" \
  --client-certificate=/etc/kubernetes/ssl/admin.pem \
  --client-key=/etc/kubernetes/ssl/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=admin.kubeconfig
# Set the context parameters (the context ties the cluster and the user together)
kubectl config set-context default \
  --cluster=kubernetes \
  --user="admin" \
  --kubeconfig=admin.kubeconfig
# Make this context the default
kubectl config use-context default --kubeconfig=admin.kubeconfig
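The four blocks above differ only in the user name and the output file. A minimal sketch that wraps the same four `kubectl config` steps in one shell function, assuming (as in the commands above) that each client certificate is `<user>.pem` / `<user>-key.pem` under /etc/kubernetes/ssl and that the kubeconfig is written as `<user>.kubeconfig` in the current directory. The function name `make_kubeconfig` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: builds <user>.kubeconfig with the same four
# "kubectl config" steps used above for each component.
KUBE_APISERVER="${KUBE_APISERVER:-https://192.168.12.56:8443}"  # VIP + haproxy port
SSL_DIR="${SSL_DIR:-/etc/kubernetes/ssl}"                        # assumed certificate directory

make_kubeconfig() {
  local user="$1"
  local out="${user}.kubeconfig"
  # Cluster entry: CA certificate and the apiserver address (through the VIP)
  kubectl config set-cluster kubernetes \
    --certificate-authority="${SSL_DIR}/ca.pem" \
    --embed-certs=true \
    --server="${KUBE_APISERVER}" \
    --kubeconfig="${out}"
  # User entry: the component's client certificate and key
  kubectl config set-credentials "${user}" \
    --client-certificate="${SSL_DIR}/${user}.pem" \
    --client-key="${SSL_DIR}/${user}-key.pem" \
    --embed-certs=true \
    --kubeconfig="${out}"
  # Context tying the cluster and the user together, then make it the default
  kubectl config set-context default \
    --cluster=kubernetes \
    --user="${user}" \
    --kubeconfig="${out}"
  kubectl config use-context default --kubeconfig="${out}"
}
```

Run from /opt/cert/k8s, `for c in kube-controller-manager kube-scheduler kube-proxy admin; do make_kubeconfig "$c"; done` would produce the same four files as the manual steps.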

5> Distribute the kubeconfig files (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# for i in m1 m2 m3; do

ssh root@$i "mkdir -pv /etc/kubernetes/cfg"

scp ./*.kubeconfig root@$i:/etc/kubernetes/cfg

done

[root@k8s-m-01 /opt/cert/k8s]# ll /etc/kubernetes/cfg/

total 32

-rw------- 1 root root 6103 Mar 29 10:32 admin.kubeconfig

-rw------- 1 root root 6319 Mar 29 10:32 kube-controller-manager.kubeconfig

-rw------- 1 root root 6141 Mar 29 10:32 kube-proxy.kubeconfig

-rw------- 1 root root 6261 Mar 29 10:32 kube-scheduler.kubeconfig

6> Create the cluster bootstrap token (master01 node)

# 1. Create it only once

[root@k8s-m-01 /opt/cert/k8s]# TLS_BOOTSTRAPPING_TOKEN=`head -c 16 /dev/urandom | od -An -t x | tr -d ' '`

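# 2. Use the token generated on your own machine; do not reuse the one shown in this guide

[root@k8s-m-01 /opt/cert/k8s]# cat > token.csv << EOF
${TLS_BOOTSTRAPPING_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"
EOF

# 3. Distribute the token file used for TLS bootstrap authentication (every master must have the same token)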

[root@k8s-m-01 k8s]# for i in m1 m2 m3;do scp token.csv root@$i:/etc/kubernetes/cfg/;done
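token.csv holds one static token per line in the form token, user name, user UID and a quoted group. The sketch below generates a line in that format with the same `head`/`od` pipeline used in step 1 and checks it before you distribute it. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers around token.csv (format: token,user,uid,"group").

# 16 random bytes printed as 32 lowercase hex characters, as in step 1 above.
gen_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x | tr -d ' \n'
}

# Print one token.csv line for the kubelet-bootstrap user.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}

# Succeed only if the line has a 32-hex-character token and the expected fields.
valid_token_line() {
  local re='^[0-9a-f]{32},kubelet-bootstrap,10001,"system:kubelet-bootstrap"$'
  [[ $1 =~ $re ]]
}
```

For example, `token_csv_line "$(gen_bootstrap_token)" > token.csv` followed by `valid_token_line "$(cat token.csv)"` writes and checks the file in one go.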

5. Deploy the components

Install each component and get it running.

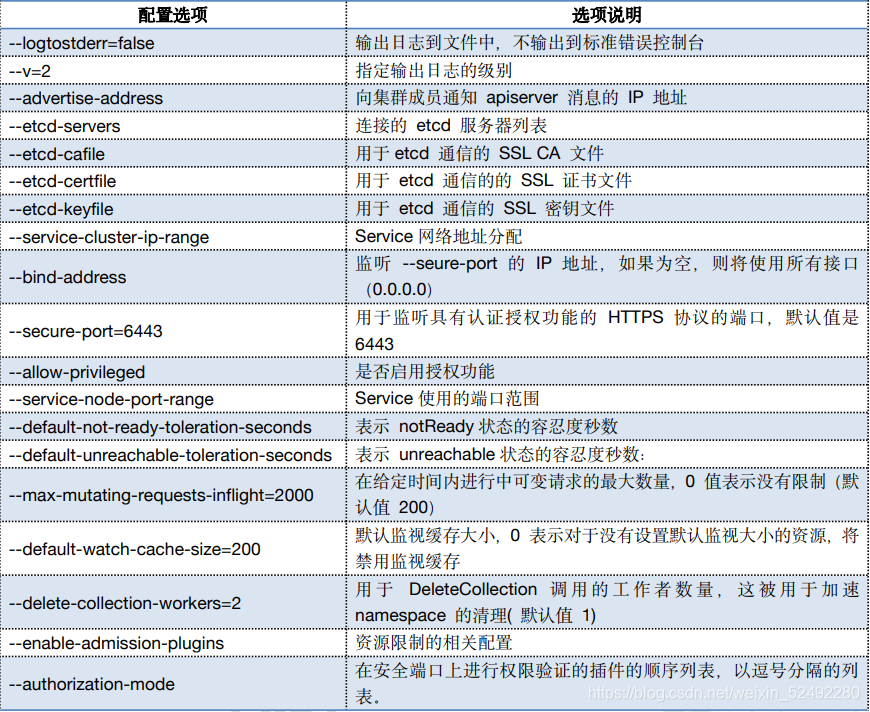

1) Deploy kube-apiserver (all master nodes)

#1. Create the kube-apiserver configuration file
KUBE_APISERVER_IP=`hostname -i`
cat > /etc/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--advertise-address=${KUBE_APISERVER_IP} \\
--default-not-ready-toleration-seconds=360 \\
--default-unreachable-toleration-seconds=360 \\
--max-mutating-requests-inflight=2000 \\
--max-requests-inflight=4000 \\
--default-watch-cache-size=200 \\
--delete-collection-workers=2 \\
--bind-address=0.0.0.0 \\
--secure-port=6443 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.96.0.0/16 \\
--service-node-port-range=30000-52767 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/etc/kubernetes/cfg/token.csv \\
--kubelet-client-certificate=/etc/kubernetes/ssl/server.pem \\
--kubelet-client-key=/etc/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/etc/kubernetes/ssl/server.pem \\
--tls-private-key-file=/etc/kubernetes/ssl/server-key.pem \\
--client-ca-file=/etc/kubernetes/ssl/ca.pem \\
--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/var/log/kubernetes/k8s-audit.log \\
--etcd-servers=https://192.168.12.51:2379,https://192.168.12.52:2379,https://192.168.12.53:2379 \\
--etcd-cafile=/etc/etcd/ssl/ca.pem \\
--etcd-certfile=/etc/etcd/ssl/etcd.pem \\
--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"
EOF

#2. Register the kube-apiserver systemd service

[root@k8s-m-01 /opt/cert/k8s]# cat > /usr/lib/systemd/system/kube-apiserver.service << EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver \$KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=10

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

#3. Reload systemd and start the service

[root@k8s-m-01 /opt/cert/k8s]# mkdir -p /var/log/kubernetes/

[root@k8s-m-01 /opt/cert/k8s]# systemctl daemon-reload

[root@k8s-m-01 /opt/cert/k8s]# systemctl enable --now kube-apiserver.service # enable at boot and start now

# 4. Check that the service is running

[root@k8s-m-01 k8s]# systemctl status kube-apiserver.service

● kube-apiserver.service - Kubernetes API Server

Loaded: loaded (/usr/lib/systemd/system/kube-apiserver.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-16 20:51:07 CST; 42s ago

2) Make kube-apiserver highly available

1> Install the HA software (all master nodes)

# Any load balancer will do, as long as it makes kube-apiserver highly available

# keepalived + haproxy

[root@k8s-m-01 ~]# yum install -y keepalived haproxy

2> Edit the keepalived configuration (all master nodes)

# The file differs per node: mcast_src_ip below is filled in from each node's own IP

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf_bak

cd /etc/keepalived

KUBE_APISERVER_IP=`hostname -i`

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_kubernetes {

script "/etc/keepalived/check_kubernetes.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

mcast_src_ip ${KUBE_APISERVER_IP}

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.12.56

}

}

EOF
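The `vrrp_script` block above points at /etc/keepalived/check_kubernetes.sh, which this guide never creates. A minimal sketch, assuming the check only needs to fail when no kube-apiserver process is running on the node: keepalived runs it every 2 seconds and, after 3 consecutive failures, lowers this node's priority by 5 (`weight -5`). The process test reads /proc directly, so it is Linux-only, and the file must be made executable (`chmod +x`).

```shell
#!/usr/bin/env bash
# /etc/keepalived/check_kubernetes.sh (sketch)
# Exit 0 when kube-apiserver is running on this node, 1 otherwise.

# Return 0 if any process has the exact command name $1 (Linux /proc only).
is_running() {
  local name="$1" comm
  for comm in /proc/[0-9]*/comm; do
    [ "$(cat "$comm" 2>/dev/null)" = "$name" ] && return 0
  done
  return 1
}

# Only run the check when executed under its installed name, so the
# function can also be sourced on its own for testing.
if [ "$(basename -- "$0")" = "check_kubernetes.sh" ]; then
  if is_running kube-apiserver; then
    exit 0
  fi
  exit 1
fi
```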

[root@k8s-m-01 keepalived]# systemctl daemon-reload

[root@k8s-m-01 /etc/keepalived]# systemctl enable --now keepalived

# 4. Verify that keepalived is running

[root@k8s-m-01 keepalived]# systemctl status keepalived.service

● keepalived.service - LVS and VRRP High Availability Monitor

Loaded: loaded (/usr/lib/systemd/system/keepalived.service; enabled; vendor preset: disabled)

Active: active (running) since Sun 2021-08-01 14:48:23 CST; 27s ago

[root@k8s-m-01 keepalived]# ip a |grep 56

inet 172.16.1.56/32 scope global eth1
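As written, every master gets `state MASTER` and `priority 100`, so the VIP holder is decided by VRRP's tie-break (the higher IP address wins on equal priority). A common alternative is one MASTER and two BACKUPs with decreasing priorities. The sketch below picks the values from the hostname; the helper name and the 100/99/98 priorities are assumptions, not values from this guide. The gaps are kept smaller than the 5 points removed by `weight -5`, otherwise a failing check on the MASTER would not move the VIP.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<state> <priority>" for a master hostname.
# Gaps between priorities stay below the 5 points removed by "weight -5",
# so a failing health check on the current holder moves the VIP.
keepalived_role() {
  case "$1" in
    k8s-m-01) echo "MASTER 100" ;;
    k8s-m-02) echo "BACKUP 99" ;;
    k8s-m-03) echo "BACKUP 98" ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}
# Usage before writing keepalived.conf:
#   read -r STATE PRIORITY <<< "$(keepalived_role "$(hostname)")"
# then use "state ${STATE}" and "priority ${PRIORITY}" in the heredoc.
```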

3> Edit the haproxy configuration (all master nodes)

# haproxy load-balances requests across the three kube-apiserver instances

cat > /etc/haproxy/haproxy.cfg <<EOF

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server kubernetes-master-01 192.168.12.51:6443 check inter 2000 fall 2 rise 2 weight 100

server kubernetes-master-02 192.168.12.52:6443 check inter 2000 fall 2 rise 2 weight 100

server kubernetes-master-03 192.168.12.53:6443 check inter 2000 fall 2 rise 2 weight 100

EOF

[root@k8s-m-01 /etc/keepalived]# systemctl enable --now haproxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/haproxy.service to /usr/lib/systemd/system/haproxy.service.

4> Check the haproxy status

[root@k8s-m-01 keepalived]# systemctl status haproxy.service

● haproxy.service - HAProxy Load Balancer

Loaded: loaded (/usr/lib/systemd/system/haproxy.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2021-07-16 21:12:00 CST; 27s ago

Main PID: 4997 (haproxy-systemd)
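The three `server` lines in the `backend k8s-master` section follow one pattern. A sketch that renders them from a list of master IPs, so that adding a master means editing one list. The `kubernetes-master-NN` names and check options mirror the config above; the helper itself is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print haproxy "server" lines for the apiserver backend,
# using the same health-check options as the config above.
haproxy_servers() {
  local i=1 ip
  for ip in "$@"; do
    printf '    server kubernetes-master-%02d %s:6443 check inter 2000 fall 2 rise 2 weight 100\n' "$i" "$ip"
    i=$((i + 1))
  done
}
```

Inside the unquoted heredoc, `$(haproxy_servers 192.168.12.51 192.168.12.52 192.168.12.53)` could replace the three hand-written lines.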

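3) Deploy TLS bootstrapping

TLS bootstrapping lets the kubelet on each node first connect to kube-apiserver as a predefined low-privilege user and submit a certificate signing request. Once the request is approved, the control plane (kube-controller-manager, using the cluster CA configured below) signs the certificate and issues it to the node, so node certificates no longer have to be signed by hand.

1> Create the bootstrap kubeconfig (master01 node)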

cd /opt/cert/k8s

export KUBE_APISERVER="https://192.168.12.56:8443"
# Set the cluster parameters
kubectl config set-cluster kubernetes \
  --certificate-authority=/etc/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=kubelet-bootstrap.kubeconfig
# Look up your own token
[root@k8s-m-01 k8s]# cat token.csv
6d652b0944001e04876f59c5164dcbe8,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
# Set the client authentication parameters; --token must be the token from your own token.csv
kubectl config set-credentials "kubelet-bootstrap" \
  --token=6d652b0944001e04876f59c5164dcbe8 \
  --kubeconfig=kubelet-bootstrap.kubeconfig
# Set the context parameters (the context ties the cluster and the user together)
kubectl config set-context default \
  --cluster=kubernetes \
  --user="kubelet-bootstrap" \
  --kubeconfig=kubelet-bootstrap.kubeconfig
# Make this context the default
kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig
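Copying the token by hand is error-prone. Since the token is the first field of token.csv, it can be read directly. A sketch with a hypothetical helper, assuming token.csv sits in the current directory as in this step:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the token (first CSV field) from the first line of a token.csv file.
bootstrap_token() {
  head -n 1 "$1" | cut -d, -f1
}
# Example use in the set-credentials command above:
#   kubectl config set-credentials "kubelet-bootstrap" \
#     --token="$(bootstrap_token token.csv)" \
#     --kubeconfig=kubelet-bootstrap.kubeconfig
```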

2> Distribute the bootstrap kubeconfig (master01 node)

# Copy the kubeconfig file to every master

[root@k8s-m-01 /opt/cert/k8s]# for i in m1 m2 m3; do

scp kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg/

done

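3> Create the low-privilege TLS bootstrap binding (master01 node)

# 1. Bind the kubelet-bootstrap user to the system:node-bootstrapper cluster role
[root@k8s-m-01 /opt/cert/k8s]# kubectl create clusterrolebinding kubelet-bootstrap \
  --clusterrole=system:node-bootstrapper \
  --user=kubelet-bootstrap
clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

# 2. Troubleshooting (skip this if the command above succeeded)

If the binding already exists, delete it first:

kubectl delete clusterrolebindings kubelet-bootstrap

Then create it again: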

[root@localhost kubeconfig]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

clusterrolebinding.rbac.authorization.k8s.io/kubelet-bootstrap created

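4) Deploy kube-controller-manager

As the management and control center inside the cluster, Controller Manager manages Nodes, Pod replicas, service endpoints (Endpoint), namespaces (Namespace), service accounts (ServiceAccount) and resource quotas (ResourceQuota). When a Node goes down unexpectedly, Controller Manager detects it promptly and runs the automated recovery process, so the cluster stays in its desired state. If several controller managers acted at the same time, their results would be inconsistent, so kube-controller-manager can only be made highly available in active/standby mode: Kubernetes elects a leader through a lease lock, which requires the startup flag --leader-elect=true.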

1> Create the configuration file (all master nodes)

cat > /etc/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--leader-elect=true \\
--cluster-name=kubernetes \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/12 \\
--service-cluster-ip-range=10.96.0.0/16 \\
--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/etc/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \\
--kubeconfig=/etc/kubernetes/cfg/kube-controller-manager.kubeconfig \\
--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \\
--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s \\
--controllers=*,bootstrapsigner,tokencleaner \\
--use-service-account-credentials=true \\
--node-monitor-grace-period=10s \\
--horizontal-pod-autoscaler-use-rest-clients=true"
EOF

2> Register the systemd service (all master nodes)

cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

3> Start the service (all master nodes)

# Reload systemd and start the service

[root@k8s-m-01 /opt/cert/k8s]# systemctl daemon-reload

[root@k8s-m-01 /opt/cert/k8s]# systemctl enable --now kube-controller-manager.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-controller-manager.service to /usr/lib/systemd/system/kube-controller-manager.service.

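5) Deploy kube-scheduler

kube-scheduler is the default scheduler of a Kubernetes cluster and part of the control plane. For every newly created or otherwise unscheduled Pod, kube-scheduler filters all nodes and then picks the best Node to run that Pod. The scheduler is policy-rich, topology-aware and workload-specific, and it significantly affects availability, performance and capacity. It has to take into account individual and collective resource requirements, quality-of-service requirements, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, deadlines and so on. Workload-specific requirements are exposed through the API where necessary.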

1> Create the configuration file (all master nodes)

cat > /etc/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--kubeconfig=/etc/kubernetes/cfg/kube-scheduler.kubeconfig \\
--leader-elect=true \\
--master=http://127.0.0.1:8080 \\
--bind-address=127.0.0.1 "
EOF

2> Register the systemd service (all master nodes)

cat > /usr/lib/systemd/system/kube-scheduler.service << EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

3> Start the service (all master nodes)

# Reload systemd and start the service

[root@k8s-m-01 /opt/cert/k8s]# systemctl daemon-reload

[root@k8s-m-01 /opt/cert/k8s]# systemctl enable --now kube-scheduler.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-scheduler.service to /usr/lib/systemd/system/kube-scheduler.service.

6) Check the cluster status

[root@k8s-m-01 /opt/cert/k8s]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

7) Deploy the kubelet service

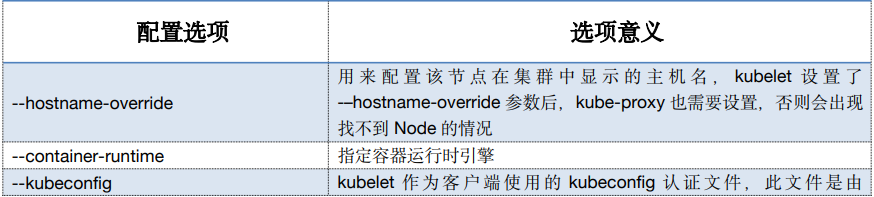

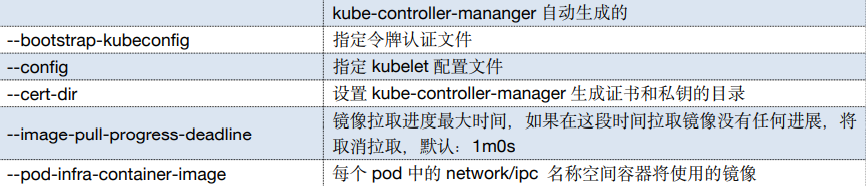

1> Create the kubelet configuration file (all master nodes)

KUBE_HOSTNAME=`hostname`
cat > /etc/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--hostname-override=${KUBE_HOSTNAME} \\
--container-runtime=docker \\
--kubeconfig=/etc/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig \\
--config=/etc/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/etc/kubernetes/ssl \\
--image-pull-progress-deadline=15m \\
--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/k8sos/pause:3.2"
EOF

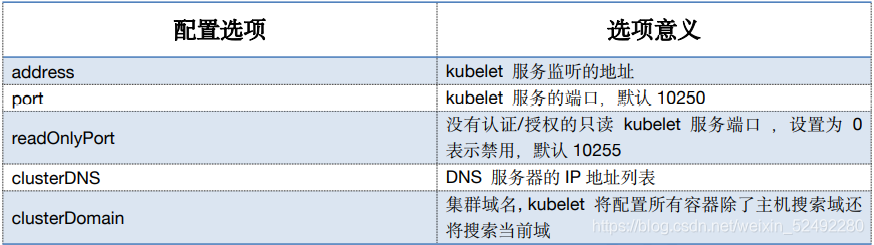

2> Create kubelet-config.yml (all master nodes)

KUBE_HOSTNAME=`hostname -i`
cat > /etc/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: ${KUBE_HOSTNAME}
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.96.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /etc/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF

3> Register the kubelet systemd service (all master nodes)

cat > /usr/lib/systemd/system/kubelet.service << EOF

[Unit]

Description=Kubernetes Kubelet

After=docker.service

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kubelet.conf

ExecStart=/usr/local/bin/kubelet \$KUBELET_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

4> Start the service (all master nodes)

# Reload systemd and start the service

[root@k8s-m-01 /opt/cert/k8s]# systemctl daemon-reload

[root@k8s-m-01 /opt/cert/k8s]# systemctl enable --now kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.

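8) Deploy kube-proxy

kube-proxy is a core Kubernetes component deployed on every Node; it implements the communication and load-balancing mechanism behind Kubernetes Services. kube-proxy fetches all Service information from kube-apiserver and, based on it, sets up proxy rules that route and forward requests from each Service to its Pods, giving Kubernetes its cluster-level virtual forwarding network.

1> Create the configuration file (all master nodes)

cat > /etc/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/var/log/kubernetes \\
--config=/etc/kubernetes/cfg/kube-proxy-config.yml"
EOF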

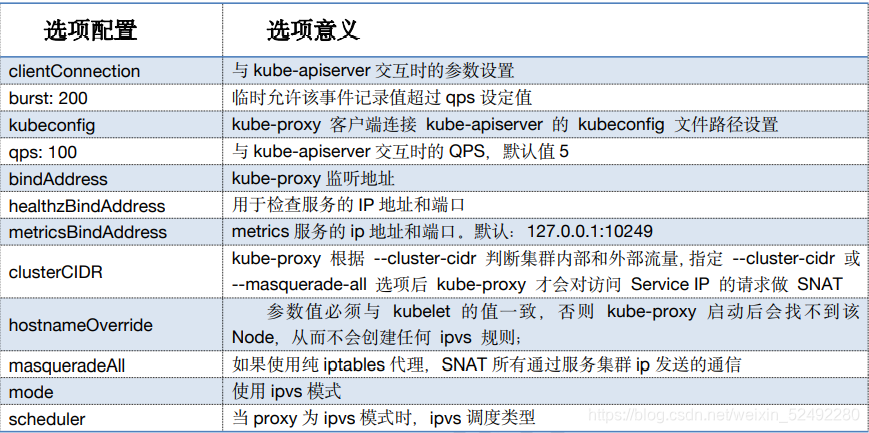

2> Create kube-proxy-config.yml (all master nodes)

# clusterCIDR is the Pod network (same value as --cluster-cidr of kube-controller-manager), not the Service range
KUBE_HOSTNAME=`hostname -i`
HOSTNAME=`hostname`
cat > /etc/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: ${KUBE_HOSTNAME}
healthzBindAddress: ${KUBE_HOSTNAME}:10256
metricsBindAddress: ${KUBE_HOSTNAME}:10249
clientConnection:
  burst: 200
  kubeconfig: /etc/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 100
hostnameOverride: ${HOSTNAME}
clusterCIDR: 10.244.0.0/12
enableProfiling: true
mode: "ipvs"
iptables:
  masqueradeAll: false
ipvs:
  scheduler: rr
  excludeCIDRs: []
EOF

3> Register the systemd service (all master nodes)

cat > /usr/lib/systemd/system/kube-proxy.service << EOF

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/etc/kubernetes/cfg/kube-proxy.conf

ExecStart=/usr/local/bin/kube-proxy \$KUBE_PROXY_OPTS

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

4> Start the service (all master nodes)

# Reload systemd and start the service

[root@k8s-m-01 /opt/cert/k8s]# systemctl daemon-reload

[root@k8s-m-01 /opt/cert/k8s]# systemctl enable --now kube-proxy.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kube-proxy.service to /usr/lib/systemd/system/kube-proxy.service.

9) Join the nodes to the cluster

1> View the pending node join requests (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-5AWYEWZ0DkF4DzHTOP00M2_Ne6on7XMwvryxbwsh90M 6m3s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-8_Rjm9D7z-04h400v_8RDHHCW3UGILeSRhxx-KkIWNI 6m3s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-wlHMJiNAkMuPsQPoD6dan8QF4AIlm-x_hVYJt9DukIg 6m2s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

2> Approve the requests (master01 node)

[root@k8s-m-01 /opt/cert/k8s]# kubectl certificate approve `kubectl get csr | grep "Pending" | awk '{print $1}'`

certificatesigningrequest.certificates.k8s.io/node-csr-5AWYEWZ0DkF4DzHTOP00M2_Ne6on7XMwvryxbwsh90M approved

certificatesigningrequest.certificates.k8s.io/node-csr-8_Rjm9D7z-04h400v_8RDHHCW3UGILeSRhxx-KkIWNI approved

certificatesigningrequest.certificates.k8s.io/node-csr-wlHMJiNAkMuPsQPoD6dan8QF4AIlm-x_hVYJt9DukIg approved

[root@k8s-m-01 /opt/cert/k8s]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-m-01 Ready <none> 13s v1.18.8

k8s-m-02 Ready <none> 12s v1.18.8

k8s-m-03 Ready <none> 12s v1.18.8
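`grep "Pending"` matches the word anywhere on a line. A slightly stricter sketch skips the header and keeps only rows whose last column (CONDITION) is exactly `Pending`. The helper name is hypothetical, and `xargs -r` assumes GNU xargs (`-r` is a GNU extension that skips the command when there is no input).

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "kubectl get csr" output on stdin and
# print the names of requests whose CONDITION column is exactly "Pending".
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}
# Usage:
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```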

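10) Install the network plugin

Kubernetes defines the network model but leaves its implementation to network plugins. The main job of a CNI network plugin is to let Pods communicate across hosts. Common CNI network plugins: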

Flannel

Calico

Canal

Contiv

OpenContrail

NSX-T

Kube-router

This guide uses flannel.

1> Download and install flannel (master01 node)

# 1. Change to the data directory

[root@k8s-m-01 k8s]# cd /opt/data/

# 2. Download flannel

Option 1: download from GitHub

[root@k8s-m-01 data]# wget https://github.com/coreos/flannel/releases/download/v0.13.1-rc1/flannel-v0.13.1-rc1-linux-amd64.tar.gz

Option 2: download from the author's own site (recommended)

[root@k8s-m-01 data]# wget http://www.mmin.xyz:81/package/k8s/flannel-v0.11.0-linux-amd64.tar.gz

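[root@k8s-m-01 data]# tar xf flannel-v0.13.1-rc1-linux-amd64.tar.gz # with option 2, extract flannel-v0.11.0-linux-amd64.tar.gz instead

# 3. Distribute the flannel binaries to the master nodes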

[root@k8s-m-01 /opt/data]# for i in m1 m2 m3;do

scp flanneld mk-docker-opts.sh root@$i:/usr/local/bin/

done

# 4. Check the flannel binaries (all nodes)

[root@k8s-m-01 data]# ll /usr/local/bin/

total 545492

-rwxr-xr-x 1 root root 10376657 Jul 25 01:02 cfssl

-rwxr-xr-x 1 root root 2277873 Jul 25 01:01 cfssljson

-rwxr-xr-x 1 root root 22820544 Aug 1 14:03 etcd

-rwxr-xr-x 1 root root 18389632 Aug 1 14:03 etcdctl

-rwxr-xr-x 1 root root 35249016 Aug 1 15:14 flanneld

-rwxr-xr-x 1 root root 120684544 Aug 1 14:35 kube-apiserver

-rwxr-xr-x 1 root root 110080000 Aug 1 14:35 kube-controller-manager

-rwxr-xr-x 1 root root 44040192 Aug 1 14:35 kubectl

-rwxr-xr-x 1 root root 113300248 Aug 1 14:35 kubelet

-rwxr-xr-x 1 root root 38383616 Aug 1 14:35 kube-proxy

-rwxr-xr-x 1 root root 42962944 Aug 1 14:35 kube-scheduler

-rwxr-xr-x 1 root root 2139 Aug 1 15:14 mk-docker-opts.sh

2> Write the flannel network configuration into etcd (master01 node)

# flannel reads this key through the etcd v2 API, hence ETCDCTL_API=2 and the v2 "mk" command
ETCDCTL_API=2 etcdctl \
  --ca-file=/etc/etcd/ssl/ca.pem \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --endpoints="https://192.168.12.51:2379,https://192.168.12.52:2379,https://192.168.12.53:2379" \
  mk /coreos.com/network/config '{"Network":"10.244.0.0/12", "SubnetLen": 21, "Backend": {"Type": "vxlan", "DirectRouting": true}}'

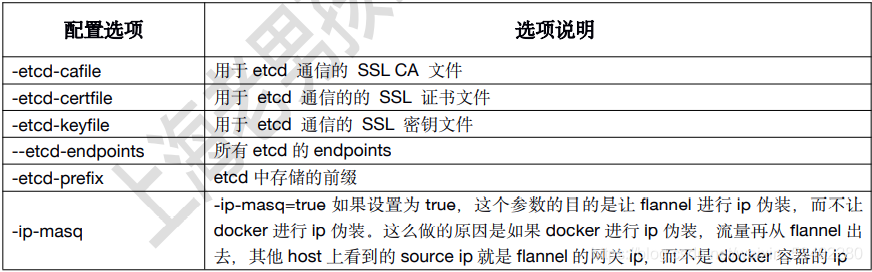
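In this configuration, `Network` is the Pod address pool for the whole cluster and `SubnetLen` is the size of the block flannel leases to each host. With a /12 pool and /21 leases there are 2^(21-12) = 512 host subnets of 2^(32-21) = 2048 addresses each. A small sketch of that arithmetic (the function name is hypothetical):

```shell
#!/usr/bin/env bash
# Hypothetical helper: given the prefix length of flannel's "Network" and its
# "SubnetLen", print how many per-host subnets fit and how many addresses each has.
flannel_capacity() {
  local net_bits="$1" subnet_len="$2"
  local subnets=$(( 1 << (subnet_len - net_bits) ))
  local addrs=$(( 1 << (32 - subnet_len) ))
  echo "${subnets} subnets x ${addrs} addresses"
}
```

`flannel_capacity 12 21` prints `512 subnets x 2048 addresses`; each host's block is far larger than the kubelet's `maxPods: 110`, so addresses per node are not the limiting factor.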

3> Register the flannel systemd service (all master nodes)

cat > /usr/lib/systemd/system/flanneld.service << EOF

[Unit]

Description=Flanneld address

After=network.target

After=network-online.target

Wants=network-online.target

After=etcd.service

Before=docker.service

[Service]

Type=notify

ExecStart=/usr/local/bin/flanneld \\
  -etcd-cafile=/etc/etcd/ssl/ca.pem \\
  -etcd-certfile=/etc/etcd/ssl/etcd.pem \\
  -etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \\
  -etcd-endpoints=https://192.168.12.51:2379,https://192.168.12.52:2379,https://192.168.12.53:2379 \\
  -etcd-prefix=/coreos.com/network \\
  -ip-masq

ExecStartPost=/usr/local/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=always

RestartSec=5

StartLimitInterval=0

[Install]

WantedBy=multi-user.target

RequiredBy=docker.service

EOF

4> Modify the docker unit file (all master nodes)

# Let flannel take over the docker network so the whole cluster shares one managed network

sed -i '/ExecStart/s/\(.*\)/#\1/' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS -H fd:// --containerd=/run/containerd/containerd.sock' /usr/lib/systemd/system/docker.service

sed -i '/ExecReload/a EnvironmentFile=-/run/flannel/subnet.env' /usr/lib/systemd/system/docker.service

5> Start the services (all master nodes)

# Start flannel first, then restart docker

[root@k8s-m-01 ~]# systemctl daemon-reload

[root@k8s-m-01 ~]# systemctl enable --now flanneld.service

Created symlink from /etc/systemd/system/multi-user.target.wants/flanneld.service to /usr/lib/systemd/system/flanneld.service.

Created symlink from /etc/systemd/system/docker.service.requires/flanneld.service to /usr/lib/systemd/system/flanneld.service.

[root@k8s-m-01 ~]# systemctl restart docker

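11) Verify the cluster network

# Ping each node's flannel address from the other nodes

Run ip a on each node to find the IP of flannel.1, then ping it from the other nodes; all three nodes should reach each other.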
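The lookup can be scripted. A sketch that pulls the IPv4 address of flannel.1 out of `ip -4 addr show` output; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical filter: read "ip -4 addr show flannel.1" output on stdin and
# print the interface's IPv4 address without the prefix length.
flannel_ip() {
  awk '$1 == "inet" { split($2, a, "/"); print a[1]; exit }'
}
# Usage on each node:
#   ip -4 addr show flannel.1 | flannel_ip
# then, from every other node:
#   ping -c 3 <that address>
```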

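12) Install cluster DNS (master01 node)

CoreDNS lets Pods in the cluster resolve Service names; Kubernetes relies on CoreDNS for service discovery.

# 1. Download the CoreDNS deployment files

Official repository: https://github.com/coredns/deployment

Option 1: download from GitHub

[root@k8s-m-01 ~]# wget https://github.com/coredns/deployment/archive/refs/heads/master.zip

Option 2: download from the author's own site (recommended)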

[root@k8s-m-01 ~]# wget http://www.mmin.xyz:81/package/k8s/deployment-master.zip

# 2. Unzip and check the image

[root@k8s-m-01 ~]# unzip master.zip # with option 2, unzip deployment-master.zip instead

[root@k8s-m-01 ~]# cd deployment-master/kubernetes

[root@k8s-m-01 kubernetes]# cat coredns.yaml.sed |grep image

image: coredns/coredns:1.8.4

imagePullPolicy: IfNotPresent

# 3. Pull the image (on every machine)

[root@k8s-m-02 kubernetes]# docker pull coredns/coredns:1.8.4

# 4. Deploy CoreDNS with 10.96.0.2 as the cluster DNS IP (must match clusterDNS in kubelet-config.yml)

[root@k8s-m-01 ~/deployment-master/kubernetes]# ./deploy.sh -i 10.96.0.2 -s | kubectl apply -f -

# 5. Verify cluster DNS

[root@k8s-m-01 ~/deployment-master/kubernetes]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-6ff445f54-m28gw 1/1 Running 0 48s
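Three addresses in this guide have to agree: the apiserver's `--service-cluster-ip-range=10.96.0.0/16`, the kubelet's `clusterDNS: 10.96.0.2`, and the `-i 10.96.0.2` passed to deploy.sh. The DNS address must fall inside the Service range, whose first address (10.96.0.1) is taken by the `kubernetes` Service, as the nslookup output below shows. A pure-bash sketch of the membership check; the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: IPv4 address to integer, and CIDR membership.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if IP $1 lies inside CIDR $2 (e.g. 10.96.0.0/16).
in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}"
  local mask=$(( bits == 0 ? 0 : (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip2int "$ip") & mask )) -eq $(( $(ip2int "$net") & mask )) ]
}
```

For example, `in_cidr 10.96.0.2 10.96.0.0/16 && echo ok` confirms the DNS address before running deploy.sh.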

13) Verify the cluster

# Bind the cluster-admin role to the user kubernetes (run this on one server only)

[root@k8s-m-01 ~/deployment-master/kubernetes]# kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubernetes

clusterrolebinding.rbac.authorization.k8s.io/cluster-system-anonymous created

# Verify cluster DNS and the cluster network (all master nodes)

[root@k8s-m-01 ~/deployment-master/kubernetes]# kubectl run test -it --rm --image=busybox:1.28.3

If you don't see a command prompt, try pressing enter.

/ # nslookup kubernetes

Server: 10.96.0.2

Address 1: 10.96.0.2 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

VIII. Deploy the worker nodes

Worker nodes provide the runtime environment for applications; their main components are kube-proxy and kubelet.

1. Node plan

| Hostname | IP | Hosts alias |

|---|---|---|

| k8s-n-01 | 192.168.12.54 | n1 |

| k8s-n-02 | 192.168.12.55 | n2 |

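2. Prepare the worker nodes

# 1. Install and start docker on both worker nodes (skip this if docker is already installed)

Option 1: Huawei Cloud mirror

[root@k8s-m-01 ~]# vim docker.sh

# 1. Remove any previously installed docker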

sudo yum remove docker docker-common docker-selinux docker-engine &&\

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 &&\

# 2. Add the docker-ce repository

wget -O /etc/yum.repos.d/docker-ce.repo https://repo.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo &&\

# 3. Point the repository at the mirror

sudo sed -i 's+download.docker.com+repo.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo &&\

# 4. Rebuild the yum cache

yum clean all &&\

yum makecache &&\

# 5. Install docker

sudo yum makecache fast &&\

sudo yum install docker-ce -y &&\

# 6. Enable docker at boot and start it

systemctl enable --now docker.service

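# 7. Create /etc/docker and configure a registry mirror to speed up image pulls (all nodes; run this part separately, then restart docker for it to take effect)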

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://k7eoap03.mirror.aliyuncs.com"]

}

EOF

Option 2: Aliyun mirror

[root@k8s-n-01 ~]# vim docker.sh

# Step 1: install the required system tools

sudo yum install -y yum-utils device-mapper-persistent-data lvm2 &&\

# Step 2: add the repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo &&\

# Step 3: point the repository at the mirror

sudo sed -i 's+download.docker.com+mirrors.aliyun.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo &&\

# Step 4: update the cache and install Docker-CE

sudo yum makecache fast &&\

sudo yum -y install docker-ce &&\

# Step 5: start the Docker service

systemctl enable --now docker.service &&\

# Step 6: configure a registry mirror to speed up image pulls

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://k7eoap03.mirror.aliyuncs.com"]

}

EOF

# 2. Generate SSH key pairs (all worker nodes)

[root@k8s-n-01 ~]# ssh-keygen -t rsa

[root@k8s-n-02 ~]# ssh-keygen -t rsa

# 3. Push master01's public key to the worker nodes (master01)

[root@k8s-m-01 k8s]# for i in n1 n2;do

ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i

done

3. Distribute the binaries

[root@k8s-m-01 /opt/data]# for i in n1 n2;do scp flanneld mk-docker-opts.sh kubernetes/server/bin/kubelet kubernetes/server/bin/kube-proxy root@$i:/usr/local/bin; done

4. Distribute the certificates

[root@k8s-m-01 /opt/data]# for i in n1 n2; do ssh root@$i "mkdir -pv /etc/kubernetes/ssl"; scp -pr /etc/kubernetes/ssl/{ca*.pem,admin*pem,kube-proxy*pem} root@$i:/etc/kubernetes/ssl; done

5. Distribute the configuration files

# Files needed: the flanneld and docker unit files and the etcd certificates

# Distribute the etcd certificates

[root@k8s-m-01 /etc/etcd/ssl]# for i in n1 n2 ;do ssh root@$i "mkdir -pv /etc/etcd/ssl"; scp ./* root@$i:/etc/etcd/ssl; done

# Distribute the flannel and docker unit files

[root@k8s-m-01 /etc/etcd/ssl]# for i in n1 n2;do scp /usr/lib/systemd/system/docker.service root@$i:/usr/lib/systemd/system/docker.service; scp /usr/lib/systemd/system/flanneld.service root@$i:/usr/lib/systemd/system/flanneld.service; done

6. Deploy kubelet

[root@k8s-m-01 ~]# for i in n1 n2 ;do

ssh root@$i "mkdir -pv /etc/kubernetes/cfg";

scp /etc/kubernetes/cfg/kubelet.conf root@$i:/etc/kubernetes/cfg/kubelet.conf;

scp /etc/kubernetes/cfg/kubelet-config.yml root@$i:/etc/kubernetes/cfg/kubelet-config.yml;

scp /usr/lib/systemd/system/kubelet.service root@$i:/usr/lib/systemd/system/kubelet.service;

scp /etc/kubernetes/cfg/kubelet.kubeconfig root@$i:/etc/kubernetes/cfg/kubelet.kubeconfig;

scp /etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig root@$i:/etc/kubernetes/cfg/kubelet-bootstrap.kubeconfig;

scp /etc/kubernetes/cfg/token.csv root@$i:/etc/kubernetes/cfg/token.csv;

done

# Edit kubelet-config.yml and kubelet.conf on each worker node

1. Edit the files on k8s-n-01

[root@k8s-n-01 opt]# grep 'address' /etc/kubernetes/cfg/kubelet-config.yml

address: 192.168.12.54

[root@k8s-n-01 opt]# grep 'hostname-override' /etc/kubernetes/cfg/kubelet.conf

--hostname-override=k8s-n-01

2. Edit the files on k8s-n-02

[root@k8s-n-02 opt]# grep 'address' /etc/kubernetes/cfg/kubelet-config.yml

address: 192.168.12.55

[root@k8s-n-02 opt]# grep 'hostname-override' /etc/kubernetes/cfg/kubelet.conf

--hostname-override=k8s-n-02

# Start kubelet (on each worker node)

[root@k8s-n-02 ~]# systemctl enable --now kubelet.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kubelet.service to /usr/lib/systemd/system/kubelet.service.
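Instead of editing the two files by hand on each worker, the same change can be made with sed. A sketch assuming GNU sed (`-i`) and the file layout created above; the helper name is hypothetical. Run it on the node itself before `systemctl enable --now kubelet.service`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point a copied kubelet config at this node.
# $1 = config directory, $2 = node IP, $3 = node hostname.
retarget_kubelet() {
  local dir="$1" ip="$2" host="$3"
  # kubelet-config.yml: the address kubelet listens on
  sed -i "s/^address: .*/address: ${ip}/" "${dir}/kubelet-config.yml"
  # kubelet.conf: the node name registered with the apiserver
  sed -i "s/--hostname-override=[^ ]*/--hostname-override=${host}/" "${dir}/kubelet.conf"
}
# Example on k8s-n-01:
#   retarget_kubelet /etc/kubernetes/cfg 192.168.12.54 k8s-n-01
```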

7. Deploy kube-proxy

[root@k8s-m-01 ~]# for i in n1 n2 ; do

scp /etc/kubernetes/cfg/kube-proxy.conf root@$i:/etc/kubernetes/cfg/kube-proxy.conf;

scp /etc/kubernetes/cfg/kube-proxy-config.yml root@$i:/etc/kubernetes/cfg/kube-proxy-config.yml ;

scp /usr/lib/systemd/system/kube-proxy.service root@$i:/usr/lib/systemd/system/kube-proxy.service;

scp /etc/kubernetes/cfg/kube-proxy.kubeconfig root@$i:/etc/kubernetes/cfg/kube-proxy.kubeconfig;

done

# Set the IP and hostname in kube-proxy-config.yml on each worker node

1. Edit the file on k8s-n-01

[root@k8s-n-01 opt]# vim /etc/kubernetes/cfg/kube-proxy-config.yml

bindAddress: 192.168.12.54

healthzBindAddress: 192.168.12.54:10256

metricsBindAddress: 192.168.12.54:10249

hostnameOverride: k8s-n-01

2. Edit the file on k8s-n-02

[root@k8s-n-02 opt]# vim /etc/kubernetes/cfg/kube-proxy-config.yml

bindAddress: 192.168.12.55

healthzBindAddress: 192.168.12.55:10256

metricsBindAddress: 192.168.12.55:10249

hostnameOverride: k8s-n-02

# Start kube-proxy (on each worker node)

[root@k8s-n-02 ~]# systemctl enable --now kube-proxy.service
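The four kube-proxy edits can be scripted the same way. A sketch assuming GNU sed and the keys written by the kube-proxy-config.yml heredoc above; the helper name is hypothetical, and the ports are the ones used in that file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: point a copied kube-proxy-config.yml at this node.
# $1 = file, $2 = node IP, $3 = node hostname. Ports match the config above.
retarget_kube_proxy() {
  local file="$1" ip="$2" host="$3"
  sed -i \
    -e "s/^bindAddress: .*/bindAddress: ${ip}/" \
    -e "s/^healthzBindAddress: .*/healthzBindAddress: ${ip}:10256/" \
    -e "s/^metricsBindAddress: .*/metricsBindAddress: ${ip}:10249/" \
    -e "s/^hostnameOverride: .*/hostnameOverride: ${host}/" \
    "$file"
}
# Example on k8s-n-02:
#   retarget_kube_proxy /etc/kubernetes/cfg/kube-proxy-config.yml 192.168.12.55 k8s-n-02
```

The `^` anchors keep `bindAddress` from also matching `healthzBindAddress` and `metricsBindAddress`.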

8. Join the cluster (master01 node)

# Check the cluster status

[root@k8s-m-01 ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

# View the join requests

[root@k8s-m-01 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-_yClVuQCNzDb566yZV5sFJmLsoU13Wba0FOhQ5pmVPY 12m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

node-csr-m3kFnO7GPBYeBcen5GQ1RdTlt77_rhedLPe97xO_5hw 12m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Pending

# Approve the requests

[root@k8s-m-01 ~]# kubectl certificate approve `kubectl get csr | grep "Pending" | awk '{print $1}'`

certificatesigningrequest.certificates.k8s.io/node-csr-_yClVuQCNzDb566yZV5sFJmLsoU13Wba0FOhQ5pmVPY approved

certificatesigningrequest.certificates.k8s.io/node-csr-m3kFnO7GPBYeBcen5GQ1RdTlt77_rhedLPe97xO_5hw approved

# Check the request status

[root@k8s-m-01 ~]# kubectl get csr

NAME AGE SIGNERNAME REQUESTOR CONDITION

node-csr-_yClVuQCNzDb566yZV5sFJmLsoU13Wba0FOhQ5pmVPY 14m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

node-csr-m3kFnO7GPBYeBcen5GQ1RdTlt77_rhedLPe97xO_5hw 14m kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap Approved,Issued

# List the nodes

[root@k8s-m-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-m-01 Ready <none> 21h v1.18.8

k8s-m-02 Ready <none> 21h v1.18.8

k8s-m-03 Ready <none> 21h v1.18.8

k8s-n-01 Ready <none> 36s v1.18.8

k8s-n-02 Ready <none> 36s v1.18.8

9. Set the node roles (master01 node)

[root@k8s-m-01 ~]# kubectl label nodes k8s-m-01 node-role.kubernetes.io/master=k8s-m-01

node/k8s-m-01 labeled

[root@k8s-m-01 ~]# kubectl label nodes k8s-m-02 node-role.kubernetes.io/master=k8s-m-02

node/k8s-m-02 labeled

[root@k8s-m-01 ~]# kubectl label nodes k8s-m-03 node-role.kubernetes.io/master=k8s-m-03

node/k8s-m-03 labeled


[root@k8s-m-01 ~]# kubectl label nodes k8s-n-01 node-role.kubernetes.io/node=k8s-n-01

node/k8s-n-01 labeled

[root@k8s-m-01 ~]# kubectl label nodes k8s-n-02 node-role.kubernetes.io/node=k8s-n-02

node/k8s-n-02 labeled

[root@k8s-m-01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-m-01 Ready master 21h v1.18.8

k8s-m-02 Ready master 21h v1.18.8

k8s-m-03 NotReady master 21h v1.18.8

k8s-n-01 Ready node 4m5s v1.18.8

k8s-n-02 Ready node 4m5s v1.18.8
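The five label commands follow the hostname convention from the node plan (k8s-m-* are masters, k8s-n-* are workers). A sketch that derives the role from the name; the helper names are hypothetical, and `label_all` issues the same `kubectl label` commands shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: map a hostname to its role and label the nodes.
node_role() {
  case "$1" in
    k8s-m-*) echo master ;;
    k8s-n-*) echo node ;;
    *) return 1 ;;
  esac
}

label_all() {
  local n role
  for n in "$@"; do
    role="$(node_role "$n")" || { echo "skip ${n}: unknown role" >&2; continue; }
    kubectl label nodes "$n" "node-role.kubernetes.io/${role}=${n}"
  done
}
# Usage:
#   label_all k8s-m-01 k8s-m-02 k8s-m-03 k8s-n-01 k8s-n-02
```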

Zhejiang Public Security Internet Filing No. 33010602011771
