TensorFlow Summer Practice: Handwritten Digit Recognition with a Multi-Hidden-Layer Neural Network (Full Code + TensorBoard Visualization)

    # Requires TensorFlow 1.x (uses tf.placeholder, tf.contrib and tf.Session).
    import os
    from time import time

    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data

    os.environ["CUDA_VISIBLE_DEVICES"] = "-1"  # hide all GPUs, run on CPU
    print(tf.__version__)
    print(tf.test.is_gpu_available())

    mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

    # Clear the default graph so nodes do not accumulate across reruns
    tf.reset_default_graph()

    # Define placeholders
    # Input layer: flattened 28x28 images
    x = tf.placeholder(tf.float32, [None, 784], name="X")
    # Output layer: one-hot labels
    y = tf.placeholder(tf.float32, [None, 10], name="Y")

    # Reshape back to images so TensorBoard can display the inputs
    image_shaped_input = tf.reshape(x, [-1, 28, 28, 1])

    H1_NN = 512  # units in the first hidden layer
    H2_NN = 256  # units in the second hidden layer
    regularizer = 0.0001


    def get_weight(shape, regularizer):
        """Create a weight variable and register its L2 penalty in 'losses'."""
        w = tf.Variable(tf.truncated_normal(shape, stddev=0.1))
        if regularizer is not None:
            tf.add_to_collection(
                'losses', tf.contrib.layers.l2_regularizer(regularizer)(w))
        return w


    w1 = get_weight([784, H1_NN], regularizer)
    b1 = tf.Variable(tf.zeros(H1_NN))
    y1 = tf.nn.relu(tf.matmul(x, w1) + b1)

    w2 = get_weight([H1_NN, H2_NN], regularizer)
    b2 = tf.Variable(tf.zeros(H2_NN))
    y2 = tf.nn.relu(tf.matmul(y1, w2) + b2)

    w3 = get_weight([H2_NN, 10], regularizer)
    b3 = tf.Variable(tf.zeros(10))
    pred = tf.matmul(y2, w3) + b3  # logits; softmax is applied inside the loss

    BATCH_SIZE = 250
    LEARNING_RATE_BASE = 0.1
    LEARNING_RATE_DECAY = 0.99
    REGULARIZER = 0.0001
    STEPS = 10000
    MOVING_AVERAGE_DECAY = 0.99

    global_step = tf.Variable(0, trainable=False)

    # Loss with regularization
    ce = tf.nn.sparse_softmax_cross_entropy_with_logits(
        logits=pred, labels=tf.argmax(y, 1))
    cem = tf.reduce_mean(ce)
    loss = cem + tf.add_n(tf.get_collection('losses'))

    # Decay the learning rate once per epoch (staircase=True)
    learning_rate = tf.train.exponential_decay(
        LEARNING_RATE_BASE,
        global_step,
        mnist.train.num_examples / BATCH_SIZE,
        LEARNING_RATE_DECAY,
        staircase=True)

    train_step = tf.train.GradientDescentOptimizer(learning_rate).minimize(
        loss, global_step=global_step)

    # Moving averages of the trainable variables
    # Note: the evaluation at the end restores the raw variables,
    # not the moving-average shadow values.
    ema = tf.train.ExponentialMovingAverage(MOVING_AVERAGE_DECAY, global_step)
    ema_op = ema.apply(tf.trainable_variables())
    with tf.control_dependencies([train_step, ema_op]):
        train_op = tf.no_op(name="train")

    # Define accuracy
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(pred, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

    # Summaries for TensorBoard
    tf.summary.image('input', image_shaped_input, 10)
    tf.summary.histogram('forward', pred)
    tf.summary.scalar('loss', loss)
    tf.summary.scalar('accuracy', accuracy)
    merged_summary_op = tf.summary.merge_all()

    startTime = time()
    MODEL_SAVE_PATH = "./model2/"
    saver = tf.train.Saver()

    with tf.Session() as sess:
        writer = tf.summary.FileWriter('log/', sess.graph)
        sess.run(tf.global_variables_initializer())

        # Resume from the latest checkpoint, so that a dorm power cut
        # does not throw away the parameters trained so far
        ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
        if ckpt and ckpt.model_checkpoint_path:
            # Restore the checkpoint into the current session
            saver.restore(sess, ckpt.model_checkpoint_path)
            print("Restore model from " + ckpt.model_checkpoint_path)

        for i in range(STEPS):
            xs, ys = mnist.train.next_batch(BATCH_SIZE)
            # One run call fetches the training op, metrics and summaries
            _, loss_value, step, acc, summary_str = sess.run(
                [train_op, loss, global_step, accuracy, merged_summary_op],
                feed_dict={x: xs, y: ys})
            writer.add_summary(summary_str, step)
            if i % 500 == 0:
                print("After %d training step(s), loss on training batch is %g, "
                      "accuracy = %.4f" % (step, loss_value, acc))
                saver.save(sess, os.path.join(MODEL_SAVE_PATH, "mnist_model"),
                           global_step=global_step)
        writer.close()

    duration = time() - startTime
    print("Training took %.2f seconds" % duration)

    # Evaluate the latest checkpoint on the test set
    with tf.Session() as sess:
        ckpt = tf.train.get_checkpoint_state(MODEL_SAVE_PATH)
        if ckpt and ckpt.model_checkpoint_path:
            saver.restore(sess, ckpt.model_checkpoint_path)
            # The step count is the suffix of the checkpoint file name
            trained_steps = ckpt.model_checkpoint_path.split('/')[-1].split('-')[-1]
            accuracy_score = sess.run(
                accuracy,
                feed_dict={x: mnist.test.images, y: mnist.test.labels})
            print("After %s training step(s), test accuracy = %g."
                  % (trained_steps, accuracy_score))
        else:
            print("No checkpoint found in " + MODEL_SAVE_PATH)
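The learning-rate schedule in the script is easy to misread, so here is a minimal pure-Python sketch of what `tf.train.exponential_decay` computes when `staircase=True`. The helper name `staircase_exponential_decay` is hypothetical, and the training-set size of 55000 is an assumption (the default train split returned by `read_data_sets`); the other values are taken from the script.

```python
import math


def staircase_exponential_decay(base_lr, global_step, decay_steps, decay_rate):
    """Learning rate after `global_step` updates with staircase decay."""
    # staircase=True floors step / decay_steps, so the rate only changes
    # once per completed interval (here: once per epoch).
    completed_intervals = math.floor(global_step / decay_steps)
    return base_lr * decay_rate ** completed_intervals


# Values from the script; NUM_TRAIN_EXAMPLES is an assumed dataset size.
NUM_TRAIN_EXAMPLES = 55000
BATCH_SIZE = 250
decay_steps = NUM_TRAIN_EXAMPLES / BATCH_SIZE  # steps per epoch

for step in (0, 219, 220, 5000, 10000):
    lr = staircase_exponential_decay(0.1, step, decay_steps, 0.99)
    print(step, lr)
```

Under these assumptions one epoch is 220 steps, so across the 10000 training steps the rate is multiplied by 0.99 only 45 times and ends at roughly 0.064, which means the schedule is quite gentle.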

Running the script creates a log folder in the same directory as the script being run.
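Before starting TensorBoard it can help to confirm that the summary writer actually produced output. The sketch below is one possible check using only the standard library; `find_event_files` is a hypothetical helper, and it relies on the `events.out.tfevents.` prefix that TensorFlow uses for event files.

```python
import glob
import os


def find_event_files(log_dir):
    """Return the sorted paths of TensorBoard event files inside log_dir."""
    pattern = os.path.join(log_dir, "events.out.tfevents.*")
    return sorted(glob.glob(pattern))


# "log" matches the directory passed to tf.summary.FileWriter in the script.
event_files = find_event_files("log")
if event_files:
    for path in event_files:
        print(path, os.path.getsize(path), "bytes")
else:
    print("No event files found in log/; run the training script first.")
```

If the list is empty, TensorBoard will start but show no data, so this is a quick way to tell a path problem from a logging problem.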

Open cmd in the log folder and run: tensorboard --logdir=C:\Users\28746\Desktop\SummerProject\Day4_多层神经网络_手写识别\log

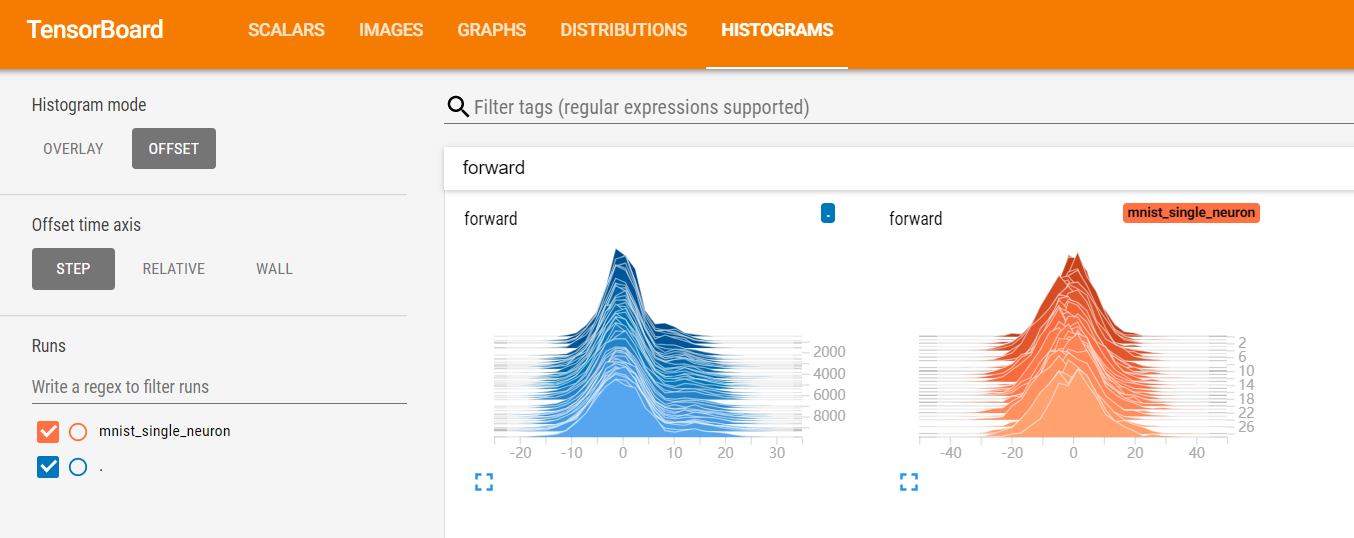

Open localhost:6006 in a browser to view the logged run in detail.

Accuracy and loss:

Input images:

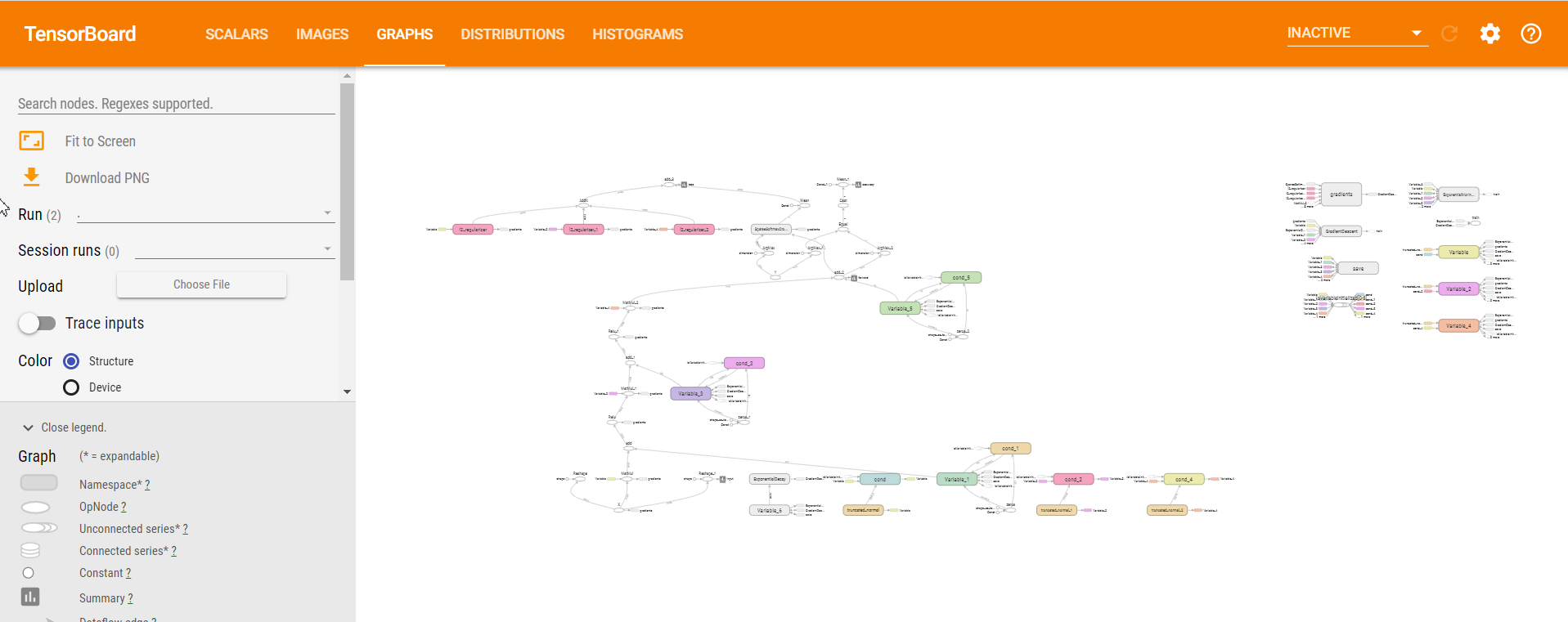

Graph of the neural network structure: