Tensorflow暑期实践——基于单个神经元的手写数字识别

版权说明:浙江财经大学专业实践深度学习tensorflow——齐峰

基于单个神经元的手写数字识别

目录

本章内容介绍

** 本章将介绍如何使用Tensorflow建立神经网络,训练、评估模型并使用训练好的模型识别手写数字MNIST。 **

** 关于MNIST数据集的介绍 **

详见:mnist_introduce.ipynb

** 由简入繁,首先,构建由单个神经元组成的神经网络,然后尝试将模型加宽和加深,以提高分类准确率。 **

** 参考范例程序: **

- 单个神经元:mnist_single_neuron.ipynb

- 单隐层神经网络:mnist_h256.ipynb

- 多隐层神经网络:mnist_h256_h256.ipynb

Tensorflow实现基于单个神经元的手写数字识别

神经元函数及优化方法

单个神经元的网络模型

** 网络模型 **

图1. 单个神经元的网络模型

图1. 单个神经元的网络模型

计算公式如下:

其中,\(z\)为输出结果,\(x_i\)为输入,\(w_i\)为相应的权重,\(b\)为偏置,\(f\)为激活函数。

** 正向传播 **

数据是从输入端流向输出端的,当赋予\(w\)和\(b\)合适的值并结合合适的激活函数时,可产生很好的拟合效果。

** 反向传播 **

- 反向传播的意义在于,告诉我们需要将w和b调整到多少。

- 在刚开始没有得到合适的w和b时,正向传播所产生的结果与真实值之间存在误差,反向传播就是利用这个误差信号修正w和b的取值,从而获得一个与真实值更加接近的输出。

- 在实际训练过程中,往往需要多次调整\(w\)和\(b\),直至模型输出值与真实值小于某个阈值。

激活函数

运行时激活神经网络中部分神经元,将激活信息向后传入下一层神经网络。

激活函数的主要作用是,加入非线性因素,以解决线性模型表达能力不足的问题。

** 常用激活函数 **

- (1)** Sigmoid **,在Tensorflow中对应函数为:tf.nn.sigmoid(x, name=None)

- (2)** Tanh **,在Tensorflow中对应函数为:tf.nn.tanh(x, name=None)

- (3)** Relu **,在Tensorflow中对应函数为:tf.nn.relu(x, name=None)

载入数据

import tensorflow as tf

import os

os.environ["CUDA_VISIBLE_DEVICES"] = "-1"

print(tf.__version__)

print(tf.test.is_gpu_available())

1.12.0

False

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

WARNING:tensorflow:From <ipython-input-2-8bf8ae5a5303>:2: read_data_sets (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

WARNING:tensorflow:From E:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:260: maybe_download (from tensorflow.contrib.learn.python.learn.datasets.base) is deprecated and will be removed in a future version.

Instructions for updating:

Please write your own downloading logic.

WARNING:tensorflow:From E:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:262: extract_images (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting MNIST_data/train-images-idx3-ubyte.gz

WARNING:tensorflow:From E:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:267: extract_labels (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.data to implement this functionality.

Extracting MNIST_data/train-labels-idx1-ubyte.gz

WARNING:tensorflow:From E:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:110: dense_to_one_hot (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use tf.one_hot on tensors.

Extracting MNIST_data/t10k-images-idx3-ubyte.gz

Extracting MNIST_data/t10k-labels-idx1-ubyte.gz

WARNING:tensorflow:From E:\ProgramData\Anaconda3\lib\site-packages\tensorflow\contrib\learn\python\learn\datasets\mnist.py:290: DataSet.__init__ (from tensorflow.contrib.learn.python.learn.datasets.mnist) is deprecated and will be removed in a future version.

Instructions for updating:

Please use alternatives such as official/mnist/dataset.py from tensorflow/models.

构建模型

** 定义\(x\)和\(y\)的占位符 **

tf.reset_default_graph() #清除default graph和不断增加的节点

x = tf.placeholder(tf.float32, [None, 784]) # mnist 中每张图片共有28*28=784个像素点

y = tf.placeholder(tf.float32, [None, 10]) # 0-9 一共10个数字=> 10 个类别

** 创建变量 **

在神经网络中,权值\(W\)的初始值通常设为正态分布的随机数,偏置项\(b\)的初始值通常也设为正态分布的随机数或常数。在Tensorflow中,通常利用以下函数实现正态分布随机数的生成:

** tf.random_normal **

norm = tf.random_normal([100]) #生成100个随机数

with tf.Session() as sess:

norm_data=norm.eval()

print(norm_data[:10]) #打印前10个随机数

[-0.8566289 1.2637379 -0.37130925 0.76486456 0.3292667 0.22041267

0.2905729 0.04571301 -0.43698135 -0.11702691]

import matplotlib.pyplot as plt

plt.hist(norm_data)

plt.show()

<Figure size 640x480 with 1 Axes>

在本案例中,以正态分布的随机数初始化权重\(W\),以常数0初始化偏置\(b\)

W = tf.Variable(tf.random_normal([784, 10]))

b = tf.Variable(tf.zeros([10]))

** 用单个神经元构建神经网络 **

forward=tf.matmul(x, W) + b # 前向输出

tf.summary.histogram('forward',forward)#将前向输出值以直方图显示

<tf.Tensor 'forward:0' shape=() dtype=string>

示例中在执行训练后在tensorboard界面可查看到如下关于forward的直方图:

<img src="forward_hist_single.jpg"width=400 height=400 />

** 关于Softmax Regression **

当我们处理多分类任务时,通常需要使用Softmax Regression模型。

Softmax Regression会对每一类别估算出一个概率。

** 工作原理: **将判定为某一类的特征相加,然后将这些特征转化为判定是这一类的概率。

pred = tf.nn.softmax(forward) # Softmax分类

训练模型

** 设置训练参数 **

train_epochs = 30

batch_size = 100

total_batch= int(mnist.train.num_examples/batch_size)

display_step = 1

learning_rate=0.01

** 定义损失函数 **

loss_function = tf.reduce_mean(-tf.reduce_sum(y*tf.log(pred), reduction_indices=1)) # 交叉熵

tf.summary.scalar('loss', loss_function)#将损失以标量显示

<tf.Tensor 'loss:0' shape=() dtype=string>

** 选择优化器 **

optimizer = tf.train.GradientDescentOptimizer(learning_rate).minimize(loss_function) #梯度下降

** 定义准确率 **

# 检查预测类别tf.argmax(pred, 1)与实际类别tf.argmax(y, 1)的匹配情况

correct_prediction = tf.equal(tf.argmax(pred, 1), tf.argmax(y, 1))

# 准确率

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32)) # 将布尔值转化为浮点数,并计算平均值

tf.summary.scalar('accuracy', accuracy)#将准确率以标量显示

<tf.Tensor 'accuracy:0' shape=() dtype=string>

sess = tf.Session() #声明会话

init = tf.global_variables_initializer() # 变量初始化

sess.run(init)

merged_summary_op = tf.summary.merge_all()#合并所有summary

writer = tf.summary.FileWriter('log/mnist_single_neuron', sess.graph) #创建写入符

# 开始训练

for epoch in range(train_epochs ):

for batch in range(total_batch):

xs, ys = mnist.train.next_batch(batch_size)# 读取批次数据

sess.run(optimizer,feed_dict={x: xs,y: ys}) # 执行批次训练

#生成summary

summary_str = sess.run(merged_summary_op,feed_dict={x: xs,y: ys})

writer.add_summary(summary_str, epoch)#将summary 写入文件

#total_batch个批次训练完成后,使用验证数据计算误差与准确率

loss,acc = sess.run([loss_function,accuracy],

feed_dict={x: mnist.validation.images, y: mnist.validation.labels})

# 打印训练过程中的详细信息

if (epoch+1) % display_step == 0:

print("Train Epoch:", '%02d' % (epoch+1), "Loss=", "{:.9f}".format(loss)," Accuracy=","{:.4f}".format(acc))

print("Train Finished!")

Train Epoch: 01 Loss= 5.594824791 Accuracy= 0.2620

Train Epoch: 02 Loss= 3.323985577 Accuracy= 0.4562

Train Epoch: 03 Loss= 2.485962391 Accuracy= 0.5586

Train Epoch: 04 Loss= 2.052196503 Accuracy= 0.6244

Train Epoch: 05 Loss= 1.785376191 Accuracy= 0.6610

Train Epoch: 06 Loss= 1.601655483 Accuracy= 0.6886

Train Epoch: 07 Loss= 1.467257380 Accuracy= 0.7118

Train Epoch: 08 Loss= 1.363661408 Accuracy= 0.7296

Train Epoch: 09 Loss= 1.280923843 Accuracy= 0.7432

Train Epoch: 10 Loss= 1.212942958 Accuracy= 0.7546

Train Epoch: 11 Loss= 1.156675100 Accuracy= 0.7652

Train Epoch: 12 Loss= 1.108838320 Accuracy= 0.7742

Train Epoch: 13 Loss= 1.067099810 Accuracy= 0.7810

Train Epoch: 14 Loss= 1.031150222 Accuracy= 0.7884

Train Epoch: 15 Loss= 0.999342263 Accuracy= 0.7938

Train Epoch: 16 Loss= 0.971206069 Accuracy= 0.7996

Train Epoch: 17 Loss= 0.945592284 Accuracy= 0.8046

Train Epoch: 18 Loss= 0.923156917 Accuracy= 0.8094

Train Epoch: 19 Loss= 0.901953936 Accuracy= 0.8142

Train Epoch: 20 Loss= 0.882337689 Accuracy= 0.8188

Train Epoch: 21 Loss= 0.864885747 Accuracy= 0.8220

Train Epoch: 22 Loss= 0.848752856 Accuracy= 0.8242

Train Epoch: 23 Loss= 0.833763659 Accuracy= 0.8264

Train Epoch: 24 Loss= 0.820160329 Accuracy= 0.8276

Train Epoch: 25 Loss= 0.806553364 Accuracy= 0.8286

Train Epoch: 26 Loss= 0.794732749 Accuracy= 0.8310

Train Epoch: 27 Loss= 0.783382237 Accuracy= 0.8350

Train Epoch: 28 Loss= 0.772144020 Accuracy= 0.8370

Train Epoch: 29 Loss= 0.762202621 Accuracy= 0.8372

Train Epoch: 30 Loss= 0.752200246 Accuracy= 0.8388

Train Finished!

从上述打印结果可以看出损失值** Loss 是趋于更小的,同时,准确率 Accuracy **越来越高。

此外,我们还可以通过Tensorboard查看loss和accuracy的执行过程。

通过tensorboard --logdir=log/mnist_single_neuron进入可视化界面,在scalar版面中可以查看loss和accuracy,如下图所示:

评估模型

** 完成训练后,在测试集上评估模型的准确率 **

print("Test Accuracy:", sess.run(accuracy,

feed_dict={x: mnist.test.images, y: mnist.test.labels}))

Test Accuracy: 0.8358

进行预测

** 在建立模型并进行训练后,若认为准确率可以接受,则可以使用此模型进行预测。 **

prediction_result=sess.run(tf.argmax(pred,1), # 由于pred预测结果是one-hot编码格式,所以需要转换为0~9数字

feed_dict={x: mnist.test.images })

** 查看预测结果 **

prediction_result[0:10] #查看预测结果中的前10项

array([7, 6, 1, 0, 4, 1, 4, 9, 6, 9], dtype=int64)

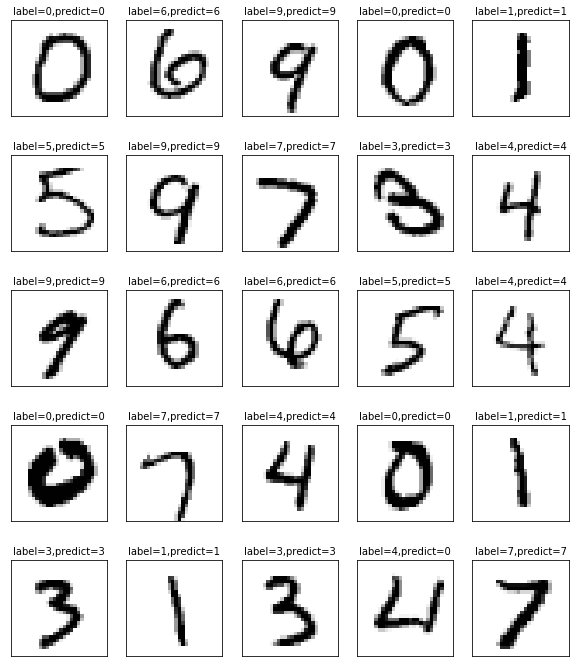

** 定义可视化函数 **

import matplotlib.pyplot as plt

import numpy as np

def plot_images_labels_prediction(images,labels,

prediction,idx,num=10):

fig = plt.gcf()

fig.set_size_inches(10, 12)

if num>25: num=25

for i in range(0, num):

ax=plt.subplot(5,5, 1+i)

ax.imshow(np.reshape(images[idx],(28, 28)),

cmap='binary')

title= "label=" +str(np.argmax(labels[idx]))

if len(prediction)>0:

title+=",predict="+str(prediction[idx])

ax.set_title(title,fontsize=10)

ax.set_xticks([]);ax.set_yticks([])

idx+=1

plt.show()

plot_images_labels_prediction(mnist.test.images,

mnist.test.labels,

prediction_result,0)

从上面结果可知,通过30次迭代所训练的由** 单个神经元 构成的神经网络模型,在测试集上能够取得百分之八十以上的准确率。接下来,我们将尝试 加宽 和 加深 **模型,看看能否得到更高的准确率。

# 预测结果

plot_images_labels_prediction(mnist.test.images,

mnist.test.labels,

prediction_result,10,25)