Big Data Processing Frameworks: Storm DRPC

Environment

Virtual machine: VMware 10

Linux version: CentOS-6.5-x86_64

Client: Xshell4

FTP: Xftp4

jdk1.8

storm-0.9

I. DRPC

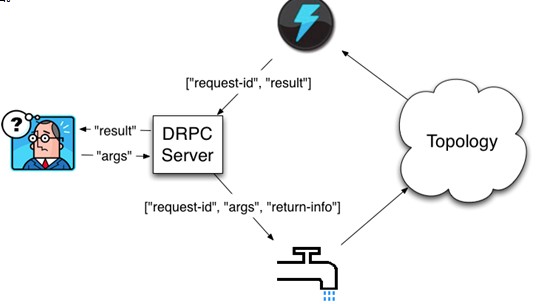

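DRPC stands for Distributed Remote Procedure Call. Storm implements distributed RPC through a DRPC server.

Design goal of Storm DRPC:

To make full use of Storm's computing power for highly parallel real-time computation: Storm receives the requests as an input stream, the data is processed inside a topology, and the result is then returned through DRPC.

The DRPC server receives an RPC request, forwards it to the topology running in Storm, waits for the topology to send back the result, and returns that result to the client that made the request. (From the client's point of view, a DRPC call looks no different from an ordinary RPC call.)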

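II. Storm DRPC Processing Flow

The client sends the DRPC server the name of the function to execute together with its arguments, and gets the result back. The topology that implements the function uses a DRPCSpout to receive a stream of function calls from the DRPC server. The DRPC server tags every function call with a unique id. The topology then executes the function to compute the result, and at the end of the topology a bolt named ReturnResults connects to the DRPC server and returns the result for the matching function-call id.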
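The request flow described above can be modelled in plain Java without Storm. The following is only a minimal sketch under stated assumptions: `MiniDrpcServer`, `Request`, `startTopology` and `call` are hypothetical names invented for illustration and are not Storm classes, and the sketch handles a single function rather than dispatching by function name as a real DRPC server does. It shows the three roles: the server assigns a unique id and blocks the client, a worker thread plays the part of DRPCSpout plus the bolts, and the result is handed back by id as ReturnResults would do.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Function;

/** Plain-Java model of the DRPC request flow; none of these types are Storm classes. */
final class DrpcFlowSketch {

    /** One pending function call: the id assigned by the server plus the client's argument. */
    static final class Request {
        final String id;
        final String args;
        Request(String id, String args) { this.id = id; this.args = args; }
    }

    /** Hypothetical stand-in for the DRPC server (single function only). */
    static final class MiniDrpcServer {
        private final AtomicLong nextId = new AtomicLong();
        private final BlockingQueue<Request> requests = new LinkedBlockingQueue<>();
        private final Map<String, CompletableFuture<String>> pending = new ConcurrentHashMap<>();

        /** Client side: tag the call with a unique id, queue it, and block until its result arrives. */
        String execute(String args) {
            String id = Long.toString(nextId.incrementAndGet());
            CompletableFuture<String> future = new CompletableFuture<>();
            pending.put(id, future);
            requests.add(new Request(id, args));
            try {
                return future.get(5, TimeUnit.SECONDS);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for result", e);
            } catch (ExecutionException | TimeoutException e) {
                throw new IllegalStateException("request " + id + " failed", e);
            } finally {
                pending.remove(id);
            }
        }

        /** Spout side: take the next function call off the queue. */
        Request fetchRequest() throws InterruptedException {
            return requests.take();
        }

        /** ReturnResults side: complete the waiting call that has the matching id. */
        void result(String id, String value) {
            CompletableFuture<String> future = pending.get(id);
            if (future != null) {
                future.complete(value);
            }
        }
    }

    /** Starts a daemon thread that plays the role of the topology: fetch, compute, return. */
    static Thread startTopology(MiniDrpcServer server, Function<String, String> function) {
        Thread worker = new Thread(() -> {
            try {
                while (true) {
                    Request request = server.fetchRequest();
                    server.result(request.id, function.apply(request.args));
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // stop when interrupted
            }
        });
        worker.setDaemon(true);
        worker.start();
        return worker;
    }

    /** Runs one call end to end against a function that appends "!" to its argument. */
    static String call(String args) {
        MiniDrpcServer server = new MiniDrpcServer();
        Thread topology = startTopology(server, s -> s + "!");
        try {
            return server.execute(args);
        } finally {
            topology.interrupt();
        }
    }

    public static void main(String[] args) {
        System.out.println(call("hello"));
    }
}
```

Keying the pending calls by id is what lets many clients wait on the same server at once: results can come back in any order and still reach the right caller.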

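III. Defining a DRPC Topology

Method 1:

Use LinearDRPCTopologyBuilder (deprecated; not recommended)

This builder automatically sets up the spout, returns the result to the DRPC server, and so on; we only need to implement the topology's processing logic.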

    /**
     * Licensed to the Apache Software Foundation (ASF) under one
     * or more contributor license agreements. See the NOTICE file
     * distributed with this work for additional information
     * regarding copyright ownership. The ASF licenses this file
     * to you under the Apache License, Version 2.0 (the
     * "License"); you may not use this file except in compliance
     * with the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package com.sxt.storm.drpc;

    import backtype.storm.Config;
    import backtype.storm.LocalCluster;
    import backtype.storm.LocalDRPC;
    import backtype.storm.StormSubmitter;
    import backtype.storm.drpc.LinearDRPCTopologyBuilder;
    import backtype.storm.topology.BasicOutputCollector;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.topology.base.BaseBasicBolt;
    import backtype.storm.tuple.Fields;
    import backtype.storm.tuple.Tuple;
    import backtype.storm.tuple.Values;

    /**
     * This topology is a basic example of doing distributed RPC on top of Storm. It
     * implements a function that appends a "!" to any string you send the DRPC
     * function.
     * <p/>
     * See https://github.com/nathanmarz/storm/wiki/Distributed-RPC for more
     * information on doing distributed RPC on top of Storm.
     */
    public class BasicDRPCTopology {

        public static class ExclaimBolt extends BaseBasicBolt {
            @Override
            public void execute(Tuple tuple, BasicOutputCollector collector) {
                // Field 0 is the request id, field 1 is the argument sent by the client.
                String input = tuple.getString(1);
                collector.emit(new Values(tuple.getValue(0), input + "!"));
            }

            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("id", "result"));
            }
        }

        public static void main(String[] args) throws Exception {
            // Build a linear topology; the argument is the function (DRPC service) name.
            // LinearDRPCTopologyBuilder wraps the spout and the bolt that returns the result.
            LinearDRPCTopologyBuilder builder = new LinearDRPCTopologyBuilder("exclamation");
            // Add the business-logic bolts in order.
            builder.addBolt(new ExclaimBolt(), 3);

            Config conf = new Config();

            if (args == null || args.length == 0) {
                // Local mode
                LocalDRPC drpc = new LocalDRPC();
                LocalCluster cluster = new LocalCluster();

                cluster.submitTopology("drpc-demo", conf, builder.createLocalTopology(drpc));

                for (String word : new String[] { "hello", "goodbye" }) {
                    // Invoke the function (service).
                    System.err.println("Result for \"" + word + "\": " + drpc.execute("exclamation", word));
                }

                // Shut down the cluster.
                cluster.shutdown();
                // Shut down the local DRPC server.
                drpc.shutdown();
            } else {
                // Cluster mode: args[0] is the topology name.
                conf.setNumWorkers(3);
                StormSubmitter.submitTopologyWithProgressBar(args[0], conf, builder.createRemoteTopology());
                // StormSubmitter.submitTopology(args[0], conf, builder.createTopology());
            }
        }
    }

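Method 2:

Build the DRPC topology directly with the ordinary topology builder, TopologyBuilder.

The DRPCSpout at the start and the ReturnResults bolt at the end must be wired up by hand.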

    /**
     * Licensed to the Apache Software Foundation (ASF) under one
     * or more contributor license agreements. See the NOTICE file
     * distributed with this work for additional information
     * regarding copyright ownership. The ASF licenses this file
     * to you under the Apache License, Version 2.0 (the
     * "License"); you may not use this file except in compliance
     * with the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package com.sxt.storm.drpc;

    import backtype.storm.Config;
    import backtype.storm.LocalCluster;
    import backtype.storm.LocalDRPC;
    import backtype.storm.drpc.DRPCSpout;
    import backtype.storm.drpc.ReturnResults;
    import backtype.storm.topology.BasicOutputCollector;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.topology.TopologyBuilder;
    import backtype.storm.topology.base.BaseBasicBolt;
    import backtype.storm.tuple.Fields;
    import backtype.storm.tuple.Tuple;
    import backtype.storm.tuple.Values;

    public class ManualDRPC {

        public static class ExclamationBolt extends BaseBasicBolt {
            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("result", "return-info"));
            }

            @Override
            public void execute(Tuple tuple, BasicOutputCollector collector) {
                // Field 0 is the client's argument, field 1 is the return info
                // that ReturnResults needs to route the result back.
                String arg = tuple.getString(0);
                Object retInfo = tuple.getValue(1);
                collector.emit(new Values(arg + "!!!", retInfo));
            }
        }

        public static void main(String[] args) {
            TopologyBuilder builder = new TopologyBuilder();
            LocalDRPC drpc = new LocalDRPC();

            // Define the spout and the result-returning bolt (ReturnResults) ourselves.
            DRPCSpout spout = new DRPCSpout("exclamation", drpc);
            builder.setSpout("drpc", spout);
            builder.setBolt("exclaim", new ExclamationBolt(), 3).shuffleGrouping("drpc");
            builder.setBolt("return", new ReturnResults(), 3).shuffleGrouping("exclaim");

            LocalCluster cluster = new LocalCluster();
            Config conf = new Config();
            cluster.submitTopology("exclaim", conf, builder.createTopology());

            System.err.println(drpc.execute("exclamation", "aaa"));
            System.err.println(drpc.execute("exclamation", "bbb"));

            // Release local resources, as in Method 1; without this the JVM keeps running.
            cluster.shutdown();
            drpc.shutdown();
        }
    }

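IV. Run Modes

1. Local mode

See Method 2 above.

2. Cluster mode

(1) Edit the configuration file conf/storm.yaml

    drpc.servers:
      - "node1"

(2) Start the DRPC server

    bin/storm drpc &

(3) Submit the jar (the last argument, drpc, is the topology name passed to main as args[0])

    bin/storm jar drpc.jar com.sxt.storm.drpc.BasicDRPCTopology drpc

(4) Call DRPC from a client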

    package com.sxt.storm.drpc;

    import org.apache.thrift7.TException;

    import backtype.storm.generated.DRPCExecutionException;
    import backtype.storm.utils.DRPCClient;

    public class MyDRPCclient {

        public static void main(String[] args) {
            // Connect to the DRPC server on port 3772.
            DRPCClient client = new DRPCClient("node1", 3772);
            try {
                String result = client.execute("exclamation", "11,22");
                System.out.println(result);
            } catch (TException e) {
                e.printStackTrace();
            } catch (DRPCExecutionException e) {
                e.printStackTrace();
            }
        }
    }

V. Example

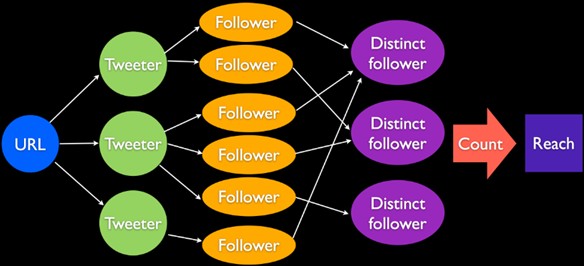

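Counting the reach of a URL on Twitter (how many people have actually seen this tweet)

Analysis:

First, work out who has seen the post:

1. The people who posted it;

2. The followers of those people.

Next, different posters share some followers, so the followers must be deduplicated.

Finally, add everything up to get the count.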

    /**
     * Licensed to the Apache Software Foundation (ASF) under one
     * or more contributor license agreements. See the NOTICE file
     * distributed with this work for additional information
     * regarding copyright ownership. The ASF licenses this file
     * to you under the Apache License, Version 2.0 (the
     * "License"); you may not use this file except in compliance
     * with the License. You may obtain a copy of the License at
     *
     * http://www.apache.org/licenses/LICENSE-2.0
     *
     * Unless required by applicable law or agreed to in writing, software
     * distributed under the License is distributed on an "AS IS" BASIS,
     * WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
     * See the License for the specific language governing permissions and
     * limitations under the License.
     */
    package com.sxt.storm.drpc;

    import java.util.Arrays;
    import java.util.HashMap;
    import java.util.HashSet;
    import java.util.List;
    import java.util.Map;
    import java.util.Set;

    import backtype.storm.Config;
    import backtype.storm.LocalCluster;
    import backtype.storm.LocalDRPC;
    import backtype.storm.StormSubmitter;
    import backtype.storm.coordination.BatchOutputCollector;
    import backtype.storm.drpc.LinearDRPCTopologyBuilder;
    import backtype.storm.task.TopologyContext;
    import backtype.storm.topology.BasicOutputCollector;
    import backtype.storm.topology.OutputFieldsDeclarer;
    import backtype.storm.topology.base.BaseBasicBolt;
    import backtype.storm.topology.base.BaseBatchBolt;
    import backtype.storm.tuple.Fields;
    import backtype.storm.tuple.Tuple;
    import backtype.storm.tuple.Values;

    /**
     * This is a good example of doing complex Distributed RPC on top of Storm. This
     * program creates a topology that can compute the reach for any URL on Twitter
     * in realtime by parallelizing the whole computation.
     * <p/>
     * Reach is the number of unique people exposed to a URL on Twitter. To compute
     * reach, you have to get all the people who tweeted the URL, get all the
     * followers of all those people, unique that set of followers, and then count
     * the unique set. It's an intense computation that can involve thousands of
     * database calls and tens of millions of follower records.
     * <p/>
     * This Storm topology does every piece of that computation in parallel, turning
     * what would be a computation that takes minutes on a single machine into one
     * that takes just a couple seconds.
     * <p/>
     * For the purposes of demonstration, this topology replaces the use of actual
     * DBs with in-memory hashmaps.
     * <p/>
     * See https://github.com/nathanmarz/storm/wiki/Distributed-RPC for more
     * information on Distributed RPC.
     */
    public class ReachTopology {

        // Database of who tweeted each URL
        public static Map<String, List<String>> TWEETERS_DB = new HashMap<String, List<String>>() {
            {
                put("foo.com/blog/1", Arrays.asList("sally", "bob", "tim", "george", "nathan"));
                put("engineering.twitter.com/blog/5", Arrays.asList("adam", "david", "sally", "nathan"));
                put("tech.backtype.com/blog/123", Arrays.asList("tim", "mike", "john"));
            }
        };

        // Database of followers
        public static Map<String, List<String>> FOLLOWERS_DB = new HashMap<String, List<String>>() {
            {
                put("sally", Arrays.asList("bob", "tim", "alice", "adam", "jim", "chris", "jai"));
                put("bob", Arrays.asList("sally", "nathan", "jim", "mary", "david", "vivian"));
                put("tim", Arrays.asList("alex"));
                put("nathan", Arrays.asList("sally", "bob", "adam", "harry", "chris", "vivian", "emily", "jordan"));
                put("adam", Arrays.asList("david", "carissa"));
                put("mike", Arrays.asList("john", "bob"));
                put("john", Arrays.asList("alice", "nathan", "jim", "mike", "bob"));
            }
        };

        // Look up the people who tweeted the URL
        public static class GetTweeters extends BaseBasicBolt {
            @Override
            public void execute(Tuple tuple, BasicOutputCollector collector) {
                // The first value is the request id
                Object id = tuple.getValue(0);
                // The second value is the argument sent by the client: the URL
                String url = tuple.getString(1);
                // Look up the tweeters for the requested URL
                List<String> tweeters = TWEETERS_DB.get(url);
                if (tweeters != null) {
                    for (String tweeter : tweeters) {
                        // Emit each tweeter downstream
                        collector.emit(new Values(id, tweeter));
                    }
                }
            }

            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("id", "tweeter"));
            }
        }

        // Look up the followers
        public static class GetFollowers extends BaseBasicBolt {
            @Override
            public void execute(Tuple tuple, BasicOutputCollector collector) {
                // Request id
                Object id = tuple.getValue(0);
                // The second value is the tweeter
                String tweeter = tuple.getString(1);
                // Look up the followers of this tweeter
                List<String> followers = FOLLOWERS_DB.get(tweeter);
                if (followers != null) {
                    for (String follower : followers) {
                        // Emit each follower downstream
                        collector.emit(new Values(id, follower));
                    }
                }
            }

            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("id", "follower"));
            }
        }

        public static class PartialUniquer extends BaseBatchBolt {
            BatchOutputCollector _collector;
            Object _id;
            Set<String> _followers = new HashSet<String>();

            @Override
            public void prepare(Map conf, TopologyContext context, BatchOutputCollector collector, Object id) {
                _collector = collector;
                _id = id;
            }

            @Override
            public void execute(Tuple tuple) {
                // Put each follower into a Set to deduplicate
                _followers.add(tuple.getString(1));
            }

            @Override
            public void finishBatch() {
                // Once the whole batch has been processed, emit this task's partial count
                _collector.emit(new Values(_id, _followers.size()));
            }

            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("id", "partial-count"));
            }
        }

        public static class CountAggregator extends BaseBatchBolt {
            BatchOutputCollector _collector;
            Object _id;
            int _count = 0;

            @Override
            public void prepare(Map conf, TopologyContext context, BatchOutputCollector collector, Object id) {
                _collector = collector;
                _id = id;
            }

            @Override
            public void execute(Tuple tuple) {
                // Accumulate the partial counts
                _count += tuple.getInteger(1);
            }

            @Override
            public void finishBatch() {
                // Emit the final count
                _collector.emit(new Values(_id, _count));
            }

            @Override
            public void declareOutputFields(OutputFieldsDeclarer declarer) {
                declarer.declare(new Fields("id", "reach"));
            }
        }

        public static LinearDRPCTopologyBuilder construct() {
            LinearDRPCTopologyBuilder builder = new LinearDRPCTopologyBuilder("reach");
            builder.addBolt(new GetTweeters(), 4);
            builder.addBolt(new GetFollowers(), 12).shuffleGrouping();
            builder.addBolt(new PartialUniquer(), 6).fieldsGrouping(new Fields("id", "follower"));
            builder.addBolt(new CountAggregator(), 3).fieldsGrouping(new Fields("id"));
            return builder;
        }

        public static void main(String[] args) throws Exception {
            LinearDRPCTopologyBuilder builder = construct();

            Config conf = new Config();

            if (args == null || args.length == 0) {
                conf.setMaxTaskParallelism(3);
                LocalDRPC drpc = new LocalDRPC();
                LocalCluster cluster = new LocalCluster();
                cluster.submitTopology("reach-drpc", conf, builder.createLocalTopology(drpc));

                String[] urlsToTry = new String[] { "foo.com/blog/1", "engineering.twitter.com/blog/5", "notaurl.com" };
                for (String url : urlsToTry) {
                    System.err.println("Reach of " + url + ": " + drpc.execute("reach", url));
                }

                cluster.shutdown();
                drpc.shutdown();
            } else {
                conf.setNumWorkers(6);
                StormSubmitter.submitTopologyWithProgressBar(args[0], conf, builder.createRemoteTopology());
            }
        }
    }
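The topology groups followers by the fields ("id", "follower") before PartialUniquer, so every copy of a given follower lands on the same task. That is why the partial counts can simply be summed afterwards. The sketch below illustrates this idea in plain Java without Storm, using the same in-memory data. `ReachSketch`, `reach` and `reachSingleSet` are hypothetical names for illustration only, and hashing with `Math.floorMod` is an assumed stand-in for Storm's fields grouping, not its actual implementation. Like the topology, it counts unique followers only.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Plain-Java illustration of "deduplicate per partition, then sum"; not Storm code. */
final class ReachSketch {

    static final Map<String, List<String>> TWEETERS_DB = new HashMap<>();
    static final Map<String, List<String>> FOLLOWERS_DB = new HashMap<>();

    static {
        TWEETERS_DB.put("foo.com/blog/1", Arrays.asList("sally", "bob", "tim", "george", "nathan"));
        TWEETERS_DB.put("engineering.twitter.com/blog/5", Arrays.asList("adam", "david", "sally", "nathan"));
        TWEETERS_DB.put("tech.backtype.com/blog/123", Arrays.asList("tim", "mike", "john"));

        FOLLOWERS_DB.put("sally", Arrays.asList("bob", "tim", "alice", "adam", "jim", "chris", "jai"));
        FOLLOWERS_DB.put("bob", Arrays.asList("sally", "nathan", "jim", "mary", "david", "vivian"));
        FOLLOWERS_DB.put("tim", Arrays.asList("alex"));
        FOLLOWERS_DB.put("nathan", Arrays.asList("sally", "bob", "adam", "harry", "chris", "vivian", "emily", "jordan"));
        FOLLOWERS_DB.put("adam", Arrays.asList("david", "carissa"));
        FOLLOWERS_DB.put("mike", Arrays.asList("john", "bob"));
        FOLLOWERS_DB.put("john", Arrays.asList("alice", "nathan", "jim", "mike", "bob"));
    }

    /** Baseline: collect every follower into one set and count it. */
    static int reachSingleSet(String url) {
        Set<String> unique = new HashSet<>();
        for (String tweeter : TWEETERS_DB.getOrDefault(url, Collections.emptyList())) {
            unique.addAll(FOLLOWERS_DB.getOrDefault(tweeter, Collections.emptyList()));
        }
        return unique.size();
    }

    /**
     * Partitioned version: route each follower to a partition chosen by its hash,
     * deduplicate inside each partition, then sum the partition sizes.
     */
    static int reach(String url, int partitions) {
        List<Set<String>> partial = new ArrayList<>();
        for (int i = 0; i < partitions; i++) {
            partial.add(new HashSet<>());
        }
        for (String tweeter : TWEETERS_DB.getOrDefault(url, Collections.emptyList())) {
            for (String follower : FOLLOWERS_DB.getOrDefault(tweeter, Collections.emptyList())) {
                // The same follower always maps to the same partition,
                // so no follower can be counted in two partitions.
                int p = Math.floorMod(follower.hashCode(), partitions);
                partial.get(p).add(follower);
            }
        }
        int total = 0;
        for (Set<String> set : partial) {
            total += set.size(); // the "partial-count" of each partition
        }
        return total;
    }

    public static void main(String[] args) {
        for (String url : new String[] { "foo.com/blog/1", "engineering.twitter.com/blog/5", "notaurl.com" }) {
            System.out.println("Reach of " + url + ": " + reach(url, 6)
                    + " (single set: " + reachSingleSet(url) + ")");
        }
    }
}
```

With this data both methods give 16 for foo.com/blog/1, 14 for engineering.twitter.com/blog/5 and 0 for the unknown URL, whatever the number of partitions. If the followers were distributed with a shuffle grouping instead, the same follower could be counted by several tasks and the sum would overstate the reach.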

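Note: in practice, Storm tends to be used more for asynchronous statistical analysis, while Spark tends to be used more for real-time statistical analysis.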
