NVIDIA Jetson mount plugin

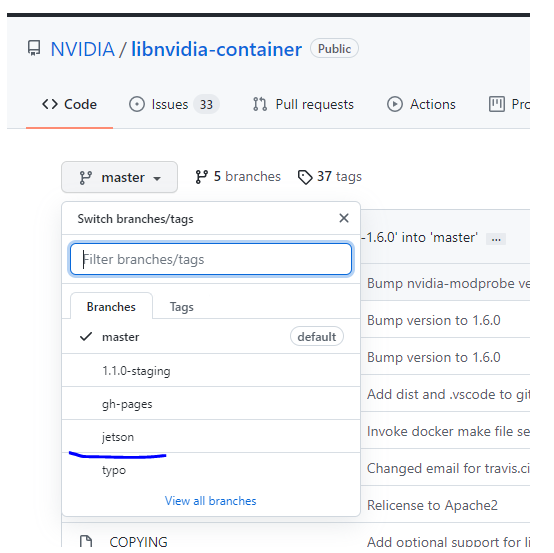

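libnvidia-container has a dedicated jetson branch, and its design document https://github.com/NVIDIA/libnvidia-container/blob/jetson/design/mount_plugins.md describes the mount plugin technique. In short, you install TensorRT, OpenCV, cuDNN, CUDA and so on directly on the host (bare metal), and when you create a container with nvidia-docker run, these host-installed packages can be mounted into the container.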

To install nvidia-docker, run sudo apt-get update && sudo apt-get install nvidia-container-toolkit && sudo apt-get install nvidia-docker2, installing directly from the JetPack apt sources. Using pre-downloaded docker packages instead may cause problems.

Then run the commands below, which automatically generate .csv files under /etc/nvidia-container-runtime/host-files-for-container.d/:

sudo apt-get install nvidia-container-csv-cuda

sudo apt-get install nvidia-container-csv-cudnn

sudo apt-get install nvidia-container-csv-tensorrt

The directory then contains:

/etc/nvidia-container-runtime/host-files-for-container.d/l4t.csv

/etc/nvidia-container-runtime/host-files-for-container.d/cuda.csv

/etc/nvidia-container-runtime/host-files-for-container.d/cudnn.csv

/etc/nvidia-container-runtime/host-files-for-container.d/tensorrt.csv

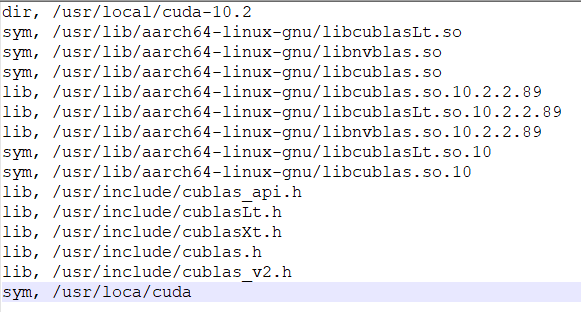

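Opening cuda.csv shows its contents. Each line starts with one of dir, sym, lib or dev, meaning that the entry is a directory, a symbolic link, a library, or a device, respectively.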
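To make the line format concrete, here is a minimal Python sketch that reads such a file into (type, path) pairs. This is only my own illustration of the `type, path` layout, not how libnvidia-container parses it. The function name `parse_mount_csv` is hypothetical, and the sample lines are taken from the libopencv.csv in this post.

```python
from collections import Counter
from typing import List, Tuple

# The four entry types that appear in the csv files of this post.
VALID_TYPES = {"dir", "sym", "lib", "dev"}


def parse_mount_csv(text: str) -> List[Tuple[str, str]]:
    """Parse 'type, path' lines into (type, path) tuples.

    Blank lines are skipped; surrounding whitespace (including trailing
    spaces at the end of a line) is ignored.
    """
    entries = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        kind, _, path = line.partition(",")
        kind, path = kind.strip(), path.strip()
        if kind not in VALID_TYPES or not path:
            raise ValueError(f"unrecognized entry: {raw!r}")
        entries.append((kind, path))
    return entries


sample = """\
dir, /usr/local/lib/python3.6/dist-packages/cv2
sym, /usr/local/lib/libopencv_core.so
lib, /usr/local/lib/libopencv_core.so.3.4.10
dev, /dev/nvhost-nvdec1
"""

entries = parse_mount_csv(sample)
# Count how many entries of each type the file declares.
print(Counter(kind for kind, _ in entries))
```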

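Besides the .csv files generated by the apt-get packages, we can also create our own .csv files under /etc/nvidia-container-runtime/host-files-for-container.d/. For example, to mount OpenCV into the container, I can write a libopencv.csv file like this: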

dir, /usr/local/lib/python3.6/dist-packages/cv2

dir, /usr/local/lib/python2.7/dist-packages

sym, /usr/local/lib/libopencv_videostab.so

sym, /usr/local/lib/libopencv_videostab.so.3.4

sym, /usr/local/lib/libopencv_superres.so

sym, /usr/local/lib/libopencv_superres.so.3.4

sym, /usr/local/lib/libopencv_stitching.so

sym, /usr/local/lib/libopencv_stitching.so.3.4

sym, /usr/local/lib/libopencv_cudaoptflow.so

sym, /usr/local/lib/libopencv_cudaoptflow.so.3.4

sym, /usr/local/lib/libopencv_cudaobjdetect.so

sym, /usr/local/lib/libopencv_cudaobjdetect.so.3.4

sym, /usr/local/lib/libopencv_cudalegacy.so

sym, /usr/local/lib/libopencv_cudalegacy.so.3.4

sym, /usr/local/lib/libopencv_objdetect.so

sym, /usr/local/lib/libopencv_objdetect.so.3.4

sym, /usr/local/lib/libopencv_highgui.so

sym, /usr/local/lib/libopencv_highgui.so.3.4

sym, /usr/local/lib/libopencv_cudastereo.so

sym, /usr/local/lib/libopencv_cudastereo.so.3.4

sym, /usr/local/lib/libopencv_cudafeatures2d.so

sym, /usr/local/lib/libopencv_cudafeatures2d.so.3.4

sym, /usr/local/lib/libopencv_cudacodec.so

sym, /usr/local/lib/libopencv_cudacodec.so.3.4

sym, /usr/local/lib/libopencv_calib3d.so

sym, /usr/local/lib/libopencv_calib3d.so.3.4

sym, /usr/local/lib/libopencv_videoio.so

sym, /usr/local/lib/libopencv_videoio.so.3.4

sym, /usr/local/lib/libopencv_shape.so

sym, /usr/local/lib/libopencv_shape.so.3.4

lib, /usr/local/lib/libopencv_shape.so.3.4.10

sym, /usr/local/lib/libopencv_photo.so

sym, /usr/local/lib/libopencv_photo.so.3.4

sym, /usr/local/lib/libopencv_imgcodecs.so

sym, /usr/local/lib/libopencv_imgcodecs.so.3.4

sym, /usr/local/lib/libopencv_features2d.so

sym, /usr/local/lib/libopencv_features2d.so.3.4

sym, /usr/local/lib/libopencv_dnn.so

sym, /usr/local/lib/libopencv_dnn.so.3.4

sym, /usr/local/lib/libopencv_cudawarping.so

sym, /usr/local/lib/libopencv_cudawarping.so.3.4

sym, /usr/local/lib/libopencv_cudaimgproc.so

sym, /usr/local/lib/libopencv_cudaimgproc.so.3.4

sym, /usr/local/lib/libopencv_cudafilters.so

sym, /usr/local/lib/libopencv_cudafilters.so.3.4

sym, /usr/local/lib/libopencv_cudabgsegm.so

sym, /usr/local/lib/libopencv_cudabgsegm.so.3.4

sym, /usr/local/lib/libopencv_video.so

sym, /usr/local/lib/libopencv_video.so.3.4

sym, /usr/local/lib/libopencv_ml.so

sym, /usr/local/lib/libopencv_ml.so.3.4

sym, /usr/local/lib/libopencv_imgproc.so

sym, /usr/local/lib/libopencv_imgproc.so.3.4

sym, /usr/local/lib/libopencv_flann.so

sym, /usr/local/lib/libopencv_flann.so.3.4

sym, /usr/local/lib/libopencv_cudaarithm.so

sym, /usr/local/lib/libopencv_cudaarithm.so.3.4

sym, /usr/local/lib/libopencv_core.so

sym, /usr/local/lib/libopencv_core.so.3.4

sym, /usr/local/lib/libopencv_cudev.so

sym, /usr/local/lib/libopencv_cudev.so.3.4

lib, /usr/local/lib/libopencv_superres.so.3.4.10

lib, /usr/local/lib/libopencv_videostab.so.3.4.10

lib, /usr/local/lib/libopencv_stitching.so.3.4.10

lib, /usr/local/lib/libopencv_cudaoptflow.so.3.4.10

lib, /usr/local/lib/libopencv_cudaobjdetect.so.3.4.10

lib, /usr/local/lib/libopencv_cudalegacy.so.3.4.10

lib, /usr/local/lib/libopencv_objdetect.so.3.4.10

lib, /usr/local/lib/libopencv_highgui.so.3.4.10

lib, /usr/local/lib/libopencv_cudafeatures2d.so.3.4.10

lib, /usr/local/lib/libopencv_cudastereo.so.3.4.10

lib, /usr/local/lib/libopencv_cudacodec.so.3.4.10

lib, /usr/local/lib/libopencv_calib3d.so.3.4.10

lib, /usr/local/lib/libopencv_videoio.so.3.4.10

lib, /usr/local/lib/libopencv_photo.so.3.4.10

lib, /usr/local/lib/libopencv_imgcodecs.so.3.4.10

lib, /usr/local/lib/libopencv_features2d.so.3.4.10

lib, /usr/local/lib/libopencv_dnn.so.3.4.10

lib, /usr/local/lib/libopencv_cudawarping.so.3.4.10

lib, /usr/local/lib/libopencv_cudaimgproc.so.3.4.10

lib, /usr/local/lib/libopencv_cudafilters.so.3.4.10

lib, /usr/local/lib/libopencv_cudabgsegm.so.3.4.10

lib, /usr/local/lib/libopencv_video.so.3.4.10

lib, /usr/local/lib/libopencv_imgproc.so.3.4.10

lib, /usr/local/lib/libopencv_ml.so.3.4.10

lib, /usr/local/lib/libopencv_flann.so.3.4.10

lib, /usr/local/lib/libopencv_cudaarithm.so.3.4.10

lib, /usr/local/lib/libopencv_core.so.3.4.10

lib, /usr/local/lib/libopencv_cudev.so.3.4.10

lib, /usr/lib/aarch64-linux-gnu/libtbb.so.2

sym, /usr/lib/aarch64-linux-gnu/libtbb.so

sym, /usr/lib/aarch64-linux-gnu/libgtk-x11-2.0.so.0

lib, /usr/lib/aarch64-linux-gnu/libgtk-x11-2.0.so.0.2400.32

dev, /dev/nvhost-nvdec1

dev, /dev/nvhost-ctrl-nvdla0

dev, /dev/nvhost-ctrl-nvdla1

dev, /dev/nvhost-nvdla0

dev, /dev/nvhost-nvdla1

dev, /dev/nvidiactl

dev, /dev/nvhost-nvenc1

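As the second line shows (it mounts the whole Python 2.7 dist-packages directory), any package can be mounted this way. nvidia-docker presumably just performs a standard docker mount operation for each entry.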

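Also note that I had to add a few extra spaces at the end of every line (I edited the file with notepad++ or vim), otherwise the entries were not recognized. This is probably a quirk of how libnvidia-container, a native library written mostly in C, parses the file.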
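Writing a long list like the one above by hand is tedious, so here is a rough Python sketch that generates the sym and lib lines by scanning a library directory, and appends the trailing spaces mentioned above. The function name `csv_lines_for_libs`, the glob pattern and the padding width are my own assumptions for illustration. dir and dev entries still have to be added by hand.

```python
import glob
import os
from typing import List


def csv_lines_for_libs(lib_dir: str,
                       pattern: str = "libopencv_*.so*",
                       pad: int = 4) -> List[str]:
    """Build 'sym, path' / 'lib, path' lines for files matching pattern.

    Symbolic links become 'sym' entries and everything else becomes 'lib'.
    Each line is padded with `pad` trailing spaces (an assumed width).
    """
    lines = []
    for path in sorted(glob.glob(os.path.join(lib_dir, pattern))):
        kind = "sym" if os.path.islink(path) else "lib"
        lines.append(f"{kind}, {path}" + " " * pad)
    return lines


if __name__ == "__main__":
    # /usr/local/lib is where the OpenCV build in this post is installed.
    for line in csv_lines_for_libs("/usr/local/lib"):
        print(line)
```

The output can be redirected into a file such as libopencv.csv and then copied into the host-files-for-container.d directory with sudo.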

Once the csv file is written, a normal nvidia-docker run is enough, and the mounted files appear at the corresponding paths inside the container.
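If some file does not show up in the container, one thing worth checking is whether every entry in the csv actually exists on the host with the declared type. The sketch below does that check. It is a debugging aid I am suggesting here, not part of nvidia-docker, and the helper names `check_entry` and `find_problems` are hypothetical. It also assumes a strict mapping (dir is a real directory, sym a symlink, lib a regular file, dev a device node), which may be stricter than what libnvidia-container itself requires.

```python
import os
import stat
from typing import List, Tuple


def check_entry(kind: str, path: str) -> bool:
    """Return True if path exists on the host and matches the declared type."""
    try:
        st = os.lstat(path)  # lstat so that symlinks are not followed
    except OSError:
        return False
    if kind == "dir":
        return stat.S_ISDIR(st.st_mode)
    if kind == "sym":
        return stat.S_ISLNK(st.st_mode)
    if kind == "lib":
        return stat.S_ISREG(st.st_mode)
    if kind == "dev":
        return stat.S_ISCHR(st.st_mode) or stat.S_ISBLK(st.st_mode)
    return False  # unknown type


def find_problems(csv_path: str) -> List[Tuple[str, str]]:
    """List the (type, path) entries of a csv file that fail check_entry."""
    problems = []
    with open(csv_path) as f:
        for raw in f:
            line = raw.strip()
            if not line:
                continue
            kind, _, path = line.partition(",")
            kind, path = kind.strip(), path.strip()
            if not check_entry(kind, path):
                problems.append((kind, path))
    return problems
```

For example, `find_problems("/etc/nvidia-container-runtime/host-files-for-container.d/libopencv.csv")` would return the entries that are missing or mistyped on the host.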

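Another thing to note: the files can only be mounted into NVIDIA's L4T system (image); with a standard Ubuntu system nothing gets mounted. My guess is that libnvidia-container checks the operating system. This is understandable, because L4T includes the bootloader, kernel, necessary firmware and NVIDIA drivers. The driver in particular is required to use a Jetson, and a plain Ubuntu system does not have it.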

The L4T base image can be downloaded here: https://ngc.nvidia.com/catalog/containers/nvidia:l4t-base

PS:

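1. What does nvidia-docker do?

The normal flow for creating a container is:

docker --> dockerd --> containerd --> containerd-shim --> runc --> container-process

The docker client sends the create-container request to dockerd. When dockerd receives the request, it forwards it to containerd, and containerd, after validating the request, either starts containerd-shim or launches the container process itself.

Creating a container that uses the GPU

The flow for creating a GPU container is:

docker --> dockerd --> containerd --> containerd-shim --> nvidia-container-runtime --> nvidia-container-runtime-hook --> libnvidia-container --> runc --> container-process

The basic flow is much the same as for a container that does not use the GPU. The only difference is that docker's default runtime is replaced with NVIDIA's own nvidia-container-runtime.

So when nvidia-container-runtime creates a container, it first runs the nvidia-container-runtime-hook hook to check whether the container needs the GPU (decided by the NVIDIA_VISIBLE_DEVICES environment variable). If it does, libnvidia-container is called to expose the GPU to the container. Otherwise the default runc logic is used.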
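The decision described above can be pictured with a small Python sketch. It is a simplified model of the logic and not the actual hook code. The function names are hypothetical, and treating an unset, empty or "void" value as "no NVIDIA setup" reflects my understanding of the NVIDIA container runtime documentation. Other special values of the variable are not modeled.

```python
from typing import Mapping


def needs_nvidia_setup(container_env: Mapping[str, str]) -> bool:
    """Simplified model: does the hook have anything to do for this container?

    Assumption: when NVIDIA_VISIBLE_DEVICES is unset, empty or "void",
    the container is treated like a plain runc container.
    """
    value = container_env.get("NVIDIA_VISIBLE_DEVICES", "")
    return value not in ("", "void")


def describe_path(container_env: Mapping[str, str]) -> str:
    """Return which branch of the creation flow the container would take."""
    if needs_nvidia_setup(container_env):
        return "nvidia-container-runtime-hook -> libnvidia-container -> runc"
    return "runc"


if __name__ == "__main__":
    print(describe_path({"NVIDIA_VISIBLE_DEVICES": "all"}))
    print(describe_path({"PATH": "/usr/bin"}))
```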

2. Uninstalling nvidia-docker

If nvidia-docker is already installed, it can be removed as follows:

apt-get remove nvidia-container-toolkit

dpkg --purge nvidia-docker2

dpkg --purge libnvidia-container-tools

dpkg --purge libnvidia-container0:arm64

dpkg --purge libnvidia-container1:arm64

References:

NVIDIA/libnvidia-container, GitHub: NVIDIA container runtime library

NVIDIA/libnvidia-container, jetson branch, design/mount_plugins.md, GitHub: mount plugin

Cloud-Native on Jetson, NVIDIA Developer

Your First Jetson Container, NVIDIA Developer

Docker Images, NVIDIA SDK Manager Documentation

Things to watch out for when installing nvidia docker on NVIDIA Jetson boards, XCCCCZ's blog, CSDN

OpenCV and nvidia-l4t-base, Issue #5, dusty-nv/jetson-containers, GitHub

Disable mount plugins, Jetson & Embedded Systems / Jetson AGX Xavier, NVIDIA Developer Forums

triton-inference-server/server, jetson.md on main, GitHub: Triton is supported starting with JetPack 4.6

posted on 2021-11-22 16:54 MissSimple