Computing evaluation metrics: accuracy, precision, recall, F1-score, and more

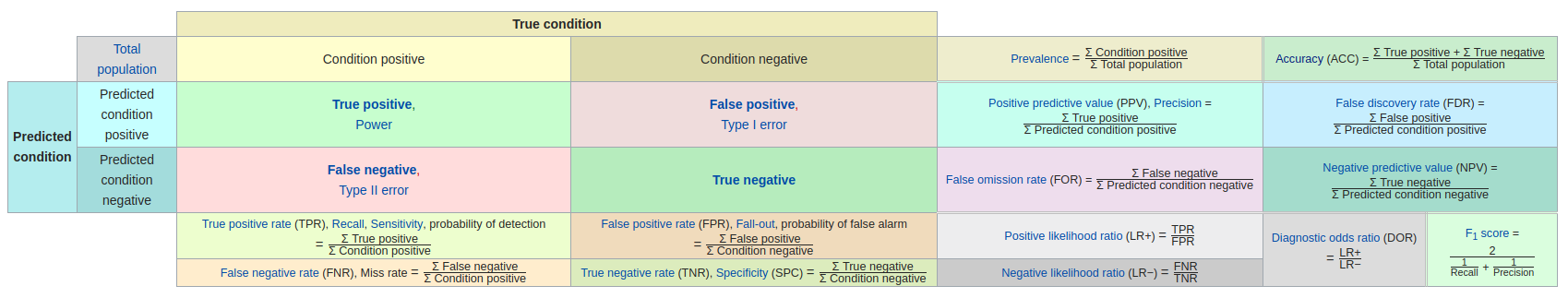

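Let P denote the positive samples and N the negative samples. The table below gives a fairly complete summary of how accuracy, precision, recall, F1-score, and related metrics are computed: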

(Right-click the image and open it in a new tab to view it at full resolution.)

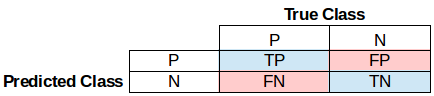

Short version:

    precision = TP / (TP + FP)    # fraction of samples predicted positive that are actually positive
    recall    = TP / (TP + FN)    # fraction of actual positives that are predicted positive
    accuracy  = (TP + TN) / (P + N)
    F1-score  = 2 / [(1 / precision) + (1 / recall)]

With scikit-learn:

    from sklearn.metrics import accuracy_score, precision_score, recall_score

    def cul_accuracy_precision_recall(y_true, y_pred, pos_label=1):
        # Compute each metric with scikit-learn and keep 5 decimal places.
        return {
            "accuracy": float("%.5f" % accuracy_score(y_true=y_true, y_pred=y_pred)),
            "precision": float("%.5f" % precision_score(y_true=y_true, y_pred=y_pred, pos_label=pos_label)),
            "recall": float("%.5f" % recall_score(y_true=y_true, y_pred=y_pred, pos_label=pos_label)),
        }
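To cross-check the short-version formulas without any library, the four counts can be tallied by hand. This is only a minimal sketch: the function name `binary_metrics` and the sample labels are made up for illustration, and it assumes none of the denominators is zero.

```python
def binary_metrics(y_true, y_pred, pos_label=1):
    """Compute accuracy, precision, recall and F1 from raw counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == pos_label and p == pos_label)
    fp = sum(1 for t, p in pairs if t != pos_label and p == pos_label)
    fn = sum(1 for t, p in pairs if t == pos_label and p != pos_label)
    tn = len(pairs) - tp - fp - fn

    # Assumption: tp + fp, tp + fn and tp are all non-zero for this data.
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    accuracy = (tp + tn) / len(pairs)  # len(pairs) == P + N
    f1 = 2 / (1 / precision + 1 / recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Illustrative labels only: 4 positives followed by 4 negatives.
y_true = [1, 1, 1, 1, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Here TP = 2, FN = 2, FP = 1 and TN = 3, so precision is 2/3, recall is 1/2 and accuracy is 5/8.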

***********************************************************************************************************************************

(What follows is purely my own derivation. If you spot a mistake, please point it out.)

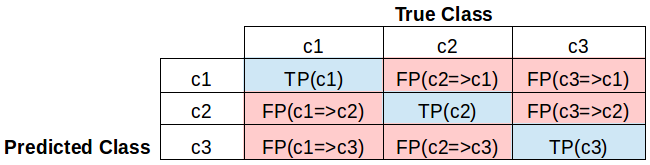

Precision and recall are generally computed per class. For example:

precision(c1) = TP(c1) / Pred(c1) = TP(c1) / [TP(c1) + FP(c2=>c1) + FP(c3=>c1)]

recall(c1) = TP(c1) / True(c1) = TP(c1) / [TP(c1) + FN(c1=>c2) + FN(c1=>c3)]
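The per-class definitions can be tried out on a toy three-class problem. The sketch below is not tied to any library; `per_class_precision_recall` and the label lists are hypothetical, and a class that is never predicted (or never occurs) gets `None` instead of a division by zero.

```python
from collections import Counter


def per_class_precision_recall(y_true, y_pred):
    """Return {class: (precision, recall)} using TP(c), Pred(c) and True(c)."""
    tp = Counter()                # TP(c): samples of class c predicted as c
    pred_count = Counter(y_pred)  # Pred(c): samples predicted as c
    true_count = Counter(y_true)  # True(c): samples whose true label is c
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1

    result = {}
    for c in sorted(set(y_true) | set(y_pred)):
        precision = tp[c] / pred_count[c] if pred_count[c] else None
        recall = tp[c] / true_count[c] if true_count[c] else None
        result[c] = (precision, recall)
    return result


# Illustrative labels only.
y_true = ["c1", "c1", "c1", "c2", "c2", "c3", "c3", "c3"]
y_pred = ["c1", "c1", "c2", "c2", "c1", "c3", "c3", "c1"]
per_class = per_class_precision_recall(y_true, y_pred)
for label, (precision, recall) in per_class.items():
    print(label, precision, recall)
```

For c1, four samples are predicted as c1 and two of them are correct, so precision(c1) = 2/4, while three samples truly belong to c1, so recall(c1) = 2/3.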

Sometimes we need to measure the model's overall performance instead:

    total_precision = sum[TP(ci)] / sum[Pred(ci)] = [TP(c1) + TP(c2) + TP(c3)] / len(Pred)
    total_recall    = sum[TP(ci)] / sum[True(ci)] = [TP(c1) + TP(c2) + TP(c3)] / len(True)
    total_accuracy  = sum[TP(ci)] / total_num     = [TP(c1) + TP(c2) + TP(c3)] / total_num

where i ranges over [1, 2, ..., n].

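At this point I was surprised to find that, for the model as a whole, we generally have len(Pred) == len(True) == total_num, since every sample has exactly one true label and receives exactly one prediction.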

In other words, total_precision == total_recall == total_accuracy, so any one of them is enough to measure the model's overall performance.
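The equality is easy to confirm numerically. A small sketch with made-up labels, assuming a single-label setting in which every sample receives exactly one prediction:

```python
# Illustrative labels only: three classes, eight samples.
y_true = ["c1", "c1", "c1", "c2", "c2", "c3", "c3", "c3"]
y_pred = ["c1", "c1", "c2", "c2", "c1", "c3", "c3", "c1"]

classes = sorted(set(y_true) | set(y_pred))
tp_sum = sum(1 for t, p in zip(y_true, y_pred) if t == p)  # sum[TP(ci)]
len_pred = sum(y_pred.count(c) for c in classes)           # sum[Pred(ci)]
len_true = sum(y_true.count(c) for c in classes)           # sum[True(ci)]
total_num = len(y_true)

total_precision = tp_sum / len_pred
total_recall = tp_sum / len_true
total_accuracy = tp_sum / total_num
print(total_precision, total_recall, total_accuracy)
```

All three denominators come out as 8 here, so the three totals coincide. This pooled calculation is what is usually called micro-averaging; in scikit-learn, `precision_score` and `recall_score` with `average="micro"` should give the same number for this kind of data.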

For models that output probabilities, it is common to sweep over a series of thresholds and obtain the mapping F(threshold) ==> (precision, recall).

When a threshold is applied, besides total_precision we can also compute a generalized recall:

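generalized_recall = sum[TP(ci)] / sum[True(ci)] = [TP(c1) + TP(c2) + TP(c3)] / [len(True) + OutOfThreshold]

Here OutOfThreshold is the number of samples discarded because their score falls below the given threshold. Read this way, len(True) counts only the samples that pass the threshold, so the denominator is the total number of samples.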
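One way to read the thresholded setting is sketched below. It rests on my own assumptions: each sample carries a predicted label plus a confidence score (for example the largest class probability), samples scoring below the threshold are discarded, total_precision is computed over the retained samples only, and generalized_recall divides by retained plus discarded samples. The function name `thresholded_metrics` and all data are hypothetical.

```python
def thresholded_metrics(y_true, y_pred, scores, threshold):
    """Return (total_precision, generalized_recall) at one threshold."""
    kept = [(t, p) for t, p, s in zip(y_true, y_pred, scores) if s >= threshold]
    out_of_threshold = len(y_true) - len(kept)  # samples filtered out
    tp_sum = sum(1 for t, p in kept if t == p)  # sum[TP(ci)] among kept samples

    # Precision only looks at retained predictions; None if nothing is kept.
    total_precision = tp_sum / len(kept) if kept else None
    # Assumed reading: the denominator is kept + discarded, i.e. all samples.
    generalized_recall = tp_sum / (len(kept) + out_of_threshold)
    return total_precision, generalized_recall


# Illustrative data only.
y_true = ["c1", "c1", "c2", "c2", "c3", "c3"]
y_pred = ["c1", "c2", "c2", "c2", "c3", "c1"]
scores = [0.9, 0.4, 0.8, 0.6, 0.7, 0.5]

results = {}
for threshold in (0.0, 0.5, 0.75):
    results[threshold] = thresholded_metrics(y_true, y_pred, scores, threshold)
    print(threshold, results[threshold])
```

Raising the threshold from 0.0 to 0.75 lifts total_precision from 4/6 to 1.0 on this toy data, while generalized_recall drops from 4/6 to 2/6, which is the trade-off that F(threshold) is meant to expose.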

References:

https://en.wikipedia.org/wiki/Receiver_operating_characteristic

https://www.cnblogs.com/shixiangwan/p/7215926.html?utm_source=itdadao&utm_medium=referral

