敏感词校验 DFA 算法

为什么需要过滤

在Web系统中,用户需要进行评论/回复,或者发布一些观点,服务器需要对用户发布的内容进行过滤(如果想被请去喝茶的话可以选择不过滤),过滤的内容当然包括色情、暴力、政府相关等

在分析需求后,google 相关的其他系统是如何实现的. DFA 算法,可以比较高效的进行匹配 。如果使用 for 循环逐个匹配效率肯定是不行的

什么是DFA算法

Deterministic Finite Automaton ,也就是确定有穷自动机 ,通过 event 与 state 得到下一个 state ,即状态的转换

DFA 算法的实现

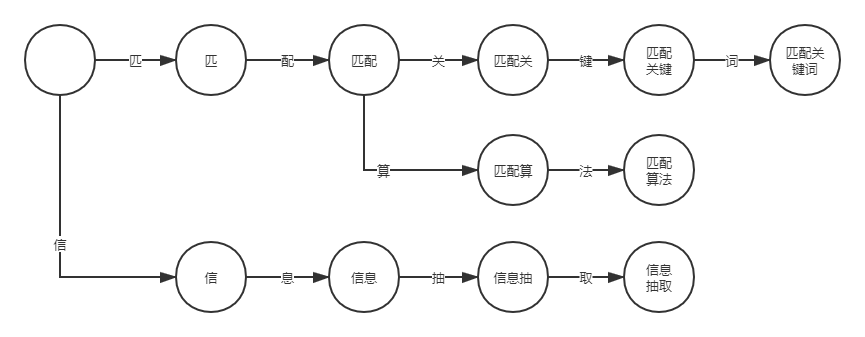

- 构建存储结构(构建类似树型结构匹配)

- 关键词匹配

通过加载数据库数据关键词,判断内容是否合法

/** * 修改为每次都读取一次 ,也可以系统启动读取一次(不实时) */ @Component public class SensitiveWordFilter { private final SensitiveWordDao sensitiveWordDao; @SuppressWarnings("rawtypes") private Map sensitiveWordMap = null; public static int minMatchTYpe = 1; public static int maxMatchType = 2; public SensitiveWordFilter(SensitiveWordDao sensitiveWordDao){ this.sensitiveWordDao = sensitiveWordDao; // Set<String> keyWordSet = sensitiveWordDao.list(); // addSensitiveWordToHashMap(keyWordSet); } @SuppressWarnings({ "rawtypes", "unchecked" }) private void addSensitiveWordToHashMap(Set<String> keyWordSet) { sensitiveWordMap = new HashMap(keyWordSet.size()); String key = null; Map nowMap = null; Map<String, String> newWorMap = null; Iterator<String> iterator = keyWordSet.iterator(); while(iterator.hasNext()){ key = iterator.next(); nowMap = sensitiveWordMap; for(int i = 0 ; i < key.length() ; i++){ char keyChar = key.charAt(i); Object wordMap = nowMap.get(keyChar); if(wordMap != null){ nowMap = (Map) wordMap; } else{ newWorMap = new HashMap<String,String>(); newWorMap.put("isEnd", "0"); nowMap.put(keyChar, newWorMap); nowMap = newWorMap; } if(i == key.length() - 1){ nowMap.put("isEnd", "1"); } } } } public boolean isContainSensitiveWord(String txt, int matchType){ boolean flag = false; for(int i = 0 ; i < txt.length() ; i++){ int matchFlag = this.CheckSensitiveWord(txt, i, matchType); if(matchFlag > 0){ flag = true; } } return flag; } public Set<String> getSensitiveWord(String txt , int matchType){ Set<String> sensitiveWordList = new HashSet<String>(); for(int i = 0 ; i < txt.length() ; i++){ int length = CheckSensitiveWord(txt, i, matchType); if(length > 0){ sensitiveWordList.add(txt.substring(i, i+length)); i = i + length - 1; } } return sensitiveWordList; } public String replaceSensitiveWord(String txt,int matchType,String replaceChar){ String resultTxt = txt; Set<String> set = getSensitiveWord(txt, matchType); Iterator<String> iterator = set.iterator(); String word = null; String replaceString = null; while (iterator.hasNext()) { word = iterator.next(); replaceString = getReplaceChars(replaceChar, word.length()); resultTxt = resultTxt.replaceAll(word, replaceString); } return resultTxt; } private String getReplaceChars(String replaceChar,int length){ String resultReplace = replaceChar; for(int i = 1 ; i < length ; i++){ resultReplace += replaceChar; } return resultReplace; } @SuppressWarnings({ "rawtypes"}) public int CheckSensitiveWord(String txt,int beginIndex,int matchType){ Set<String> keyWordSet = sensitiveWordDao.list(); addSensitiveWordToHashMap(keyWordSet); boolean flag = false; int matchFlag = 0; char word = 0; Map nowMap = sensitiveWordMap; for(int i = beginIndex; i < txt.length() ; i++){ word = txt.charAt(i); nowMap = (Map) nowMap.get(word); if(nowMap != null){ matchFlag++; if("1".equals(nowMap.get("isEnd"))){ flag = true; if(SensitiveWordFilter.minMatchTYpe == matchType){ break; } } } else{ break; } } if(matchFlag < 2 || !flag){ matchFlag = 0; } return matchFlag; } }

参考:

https://blog.csdn.net/weixin_43378396/article/details/105910145