1. Synchronous HTTP

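// Http_curl sends a form-encoded request and returns the response body, or nil on any error.
func Http_curl(url string, payload_str string, method string) []byte {
	payload := strings.NewReader(payload_str)
	req, err := http.NewRequest(method, url, payload)
	if err != nil {
		return nil
	}
	req.Header.Add("content-type", "application/x-www-form-urlencoded")
	req.Header.Add("cache-control", "no-cache")
	res, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil // res is nil here, so Body must not be touched
	}
	defer res.Body.Close()
	body, err := ioutil.ReadAll(res.Body)
	if err != nil {
		return nil
	}
	//fmt.Println(string(body))
	return body
}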
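Returning nil on failure hides what went wrong. As a rough sketch, an error-returning variant could look like the following. The names `httpCurlE` and `echoForm` are made up for this illustration, and the local `httptest` server only stands in for a real endpoint; `io.ReadAll` assumes Go 1.16 or later.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
	"time"
)

// httpCurlE is a hypothetical variant of Http_curl that reports errors
// to the caller instead of discarding them.
func httpCurlE(client *http.Client, url, payload, method string) ([]byte, error) {
	req, err := http.NewRequest(method, url, strings.NewReader(payload))
	if err != nil {
		return nil, fmt.Errorf("build request: %w", err)
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	req.Header.Set("Cache-Control", "no-cache")

	res, err := client.Do(req)
	if err != nil {
		return nil, fmt.Errorf("send request: %w", err)
	}
	defer res.Body.Close()

	body, err := io.ReadAll(res.Body)
	if err != nil {
		return nil, fmt.Errorf("read body: %w", err)
	}
	if res.StatusCode < 200 || res.StatusCode > 299 {
		return body, fmt.Errorf("unexpected status %s", res.Status)
	}
	return body, nil
}

// echoForm starts a throwaway local server that echoes the "s" form field,
// posts payload to it, and returns the reply.
func echoForm(payload string) (string, error) {
	srv := httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintf(w, "s=%s", r.FormValue("s"))
	}))
	defer srv.Close()

	// A client with a timeout, so a stalled server cannot block forever.
	client := &http.Client{Timeout: 5 * time.Second}
	body, err := httpCurlE(client, srv.URL, payload, "POST")
	return string(body), err
}

func main() {
	fmt.Println(echoForm("s=s01"))
}
```

Passing the client in as a parameter is one possible design choice: it lets the caller set a timeout, which `http.DefaultClient` does not have.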

2. Asynchronous HTTP

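// Http_curl_async runs Http_curl in a new goroutine and returns immediately.
// The response body is discarded.
func Http_curl_async(url string, payload_str string, method string) {
	go func() {
		Http_curl(url, payload_str, method)
	}()
}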
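The fire-and-forget version above throws the response away. If the caller needs the result later, one common approach is to hand back a channel. This is only a sketch: `asyncResult` and `fakeFetch` are hypothetical names, and `fakeFetch` stands in for a real call such as `Http_curl(url, payload_str, method)`.

```go
package main

import "fmt"

// asyncResult starts fetch in its own goroutine and returns a channel
// that receives its result exactly once.
func asyncResult(fetch func() []byte) <-chan []byte {
	out := make(chan []byte, 1) // buffered so the goroutine never blocks on send
	go func() {
		out <- fetch()
	}()
	return out
}

func main() {
	// fakeFetch stands in for a call such as Http_curl(url, payload_str, method).
	fakeFetch := func() []byte { return []byte("response body") }

	ch := asyncResult(fakeFetch) // returns immediately
	// ... other work can happen here ...
	fmt.Println(string(<-ch)) // blocks until the result is ready
}
```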

3. Limiting async concurrency

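var (
	// chSem is a buffered channel used as a counting semaphore with 5 slots.
	chSem = make(chan int, 5)
)

func Http_curl_async(url string, payload_str string, method string) {
	go func() {
		chSem <- 1                 // acquire a slot; blocks while 5 requests are in flight
		defer func() { <-chSem }() // release the slot, even if Http_curl panics
		Http_curl(url, payload_str, method)
	}()
}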
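To check that a buffered channel really caps how many goroutines run the guarded section at once, a small experiment like the one below can help. It is a sketch under assumptions: `peakInFlight` is a hypothetical helper, and the short sleep stands in for the HTTP request.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// peakInFlight starts tasks goroutines gated by a semaphore with limit slots
// and returns the largest number that held a slot at the same moment.
func peakInFlight(tasks, limit int) int {
	sem := make(chan struct{}, limit)
	var (
		wg            sync.WaitGroup
		mu            sync.Mutex
		current, peak int
	)
	for i := 0; i < tasks; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			sem <- struct{}{}        // acquire a slot
			defer func() { <-sem }() // release it on return

			mu.Lock()
			current++
			if current > peak {
				peak = current
			}
			mu.Unlock()

			time.Sleep(5 * time.Millisecond) // stands in for the HTTP request

			mu.Lock()
			current--
			mu.Unlock()
		}()
	}
	wg.Wait()
	return peak
}

func main() {
	fmt.Println(peakInFlight(50, 5) <= 5) // prints "true"
}
```

Note that all 50 goroutines are still created up front; the semaphore only limits how many are doing work at the same time.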

4. Concurrent async implementation

package utils

import (
	"fmt"
	"io/ioutil"
	"net/http"
	"strings"
	"sync"
)

var (
	chSem   = make(chan int, 5) // semaphore: at most 5 requests in flight
	chSemWg sync.WaitGroup      // waits for every goroutine started by the loop
)

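// Http_curl sends a form-encoded request and returns the response body, or nil on any error.
func Http_curl(url string, payload_str string, method string) []byte {
	payload := strings.NewReader(payload_str)
	req, err := http.NewRequest(method, url, payload)
	if err != nil {
		return nil
	}
	req.Header.Add("content-type", "application/x-www-form-urlencoded")
	req.Header.Add("cache-control", "no-cache")
	res, err := http.DefaultClient.Do(req)
	if err != nil {
		return nil // res is nil here, so Body must not be touched
	}
	defer res.Body.Close()
	body, err := ioutil.ReadAll(res.Body)
	if err != nil {
		return nil
	}
	//fmt.Println(string(body))
	return body
}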

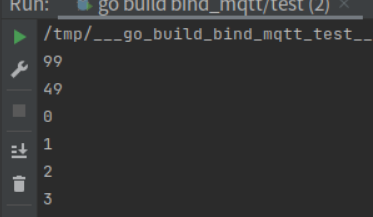

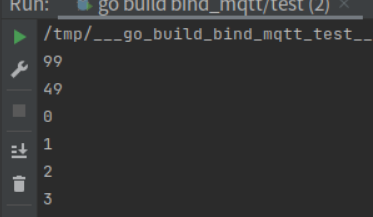

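// Http_curl_async_loop sends the same request looptimes times, at most 5 at once,
// and returns only after every request has finished.
func Http_curl_async_loop(looptimes int, url string, payload_str string, method string) {
	chSemWg.Add(looptimes)
	for i := 0; i < looptimes; i++ {
		cur_i := i // per-iteration copy captured by the closure
		go func() {
			defer chSemWg.Done()
			chSem <- 1                 // acquire a slot; blocks while 5 requests are in flight
			defer func() { <-chSem }() // release the slot
			fmt.Println(cur_i)
			Http_curl(url, payload_str, method)
		}()
	}
	chSemWg.Wait() // block until every goroutine has called Done
}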

// Example call from another package: 100 POST requests, at most 5 in flight.
utils.Http_curl_async_loop(100, "http://www.baidu.com", "s=s01", "POST")

5. Notes

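- Without the WaitGroup (wg), the program can exit before the goroutines started by the loop have finished.

- Concurrency has to be capped; otherwise the number of goroutines grows, and starting and maintaining them costs a noticeable amount of resources.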
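Both notes can be combined in one small sketch. The code in this post acquires the semaphore inside the goroutine, so every goroutine is still created right away. A possible variation, shown here with the hypothetical helpers `runBounded` and `countCompleted`, acquires the slot before `go`, so the number of live goroutines is bounded as well, while the WaitGroup keeps the function from returning early.

```go
package main

import (
	"fmt"
	"sync"
)

// runBounded calls work(0) .. work(n-1), keeping at most limit goroutines
// alive at a time, and returns once all of them have finished.
func runBounded(n, limit int, work func(i int)) {
	sem := make(chan struct{}, limit)
	var wg sync.WaitGroup
	for i := 0; i < n; i++ {
		sem <- struct{}{} // blocks the loop itself until a slot is free
		wg.Add(1)
		go func(i int) {
			defer wg.Done()
			defer func() { <-sem }() // free the slot for the next iteration
			work(i)
		}(i)
	}
	wg.Wait() // without this, the caller could return before the work is done
}

// countCompleted runs n trivial tasks through runBounded and reports
// how many of them finished before runBounded returned.
func countCompleted(n, limit int) int {
	var mu sync.Mutex
	done := 0
	runBounded(n, limit, func(int) {
		mu.Lock()
		done++
		mu.Unlock()
	})
	return done
}

func main() {
	fmt.Println(countCompleted(100, 5)) // prints 100
}
```

The trade-off is that the calling loop now blocks while all slots are taken, which may or may not be acceptable depending on the caller.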