Debugging Chinese Word Cloud Code

Word clouds are a lot of fun.

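Segmentation is done with jieba. The novel's text is stored in "mori.txt" and the stop-word list in "stopword.txt". Stop words matter a great deal for the quality of the result, and the list needs to be tuned and extended over time.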

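```python
from wordcloud import WordCloud
import jieba
import matplotlib.pyplot as plt

# Read the novel text (assumed to be UTF-8 encoded)
with open('mori.txt', 'r', encoding='utf-8') as f:
    text = f.read()

# Load the stop-word list into a set (assumes one word per line) so that
# the check below is an exact word match, not a substring search
with open('stopword.txt', 'r', encoding='utf-8') as f:
    stopwords = set(f.read().split())

final = []
for seg in jieba.cut(text):
    seg = seg.strip()
    if not seg or seg[0].isdigit():  # skip whitespace and tokens starting with a digit
        continue
    if seg not in stopwords:
        final.append(seg)

# A font with Chinese glyphs is required, otherwise the characters render as boxes
font = r"c:/Windows/Fonts/simsun.ttc"
wordcloud = WordCloud(font_path=font, background_color="white",
                      width=1000, height=860, margin=2).generate(" ".join(final))
wordcloud.to_file('test.png')  # save the image

plt.imshow(wordcloud)
plt.axis("off")
plt.show()
```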
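One detail worth checking in the filtering loop: if the stop-word file is kept as a single raw string, `seg not in stopwords` is a substring test, so any token that happens to occur inside the file (for example a single character that is part of a longer stop word) is silently dropped. Below is a minimal sketch of the filtering step as pure functions, assuming the stop-word file holds one word per line; the names `load_stopwords` and `filter_tokens` and the sample tokens are hypothetical.

```python
def load_stopwords(raw_text):
    """Turn the stop-word file's contents (assumed one word per line) into a set."""
    return {line.strip() for line in raw_text.splitlines() if line.strip()}


def filter_tokens(tokens, stopwords):
    """Keep tokens that are non-blank, do not start with a digit,
    and are not an exact match for a stop word."""
    kept = []
    for tok in tokens:
        tok = tok.strip()
        if not tok or tok[0].isdigit():
            continue
        if tok not in stopwords:
            kept.append(tok)
    return kept


raw = "的\n了\n我们\n"  # made-up stop-word file contents
tokens = ["我", "的", "2023", "\n", "森林", "我们"]  # made-up segmentation output

# Substring test against the raw string: "我" is dropped because it occurs inside "我们"
substring_result = [t for t in tokens if t.strip() and not t[0].isdigit() and t not in raw]
print(substring_result)  # → ['森林']

# Exact-match test against a set keeps "我"
set_result = filter_tokens(tokens, load_stopwords(raw))
print(set_result)  # → ['我', '森林']
```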

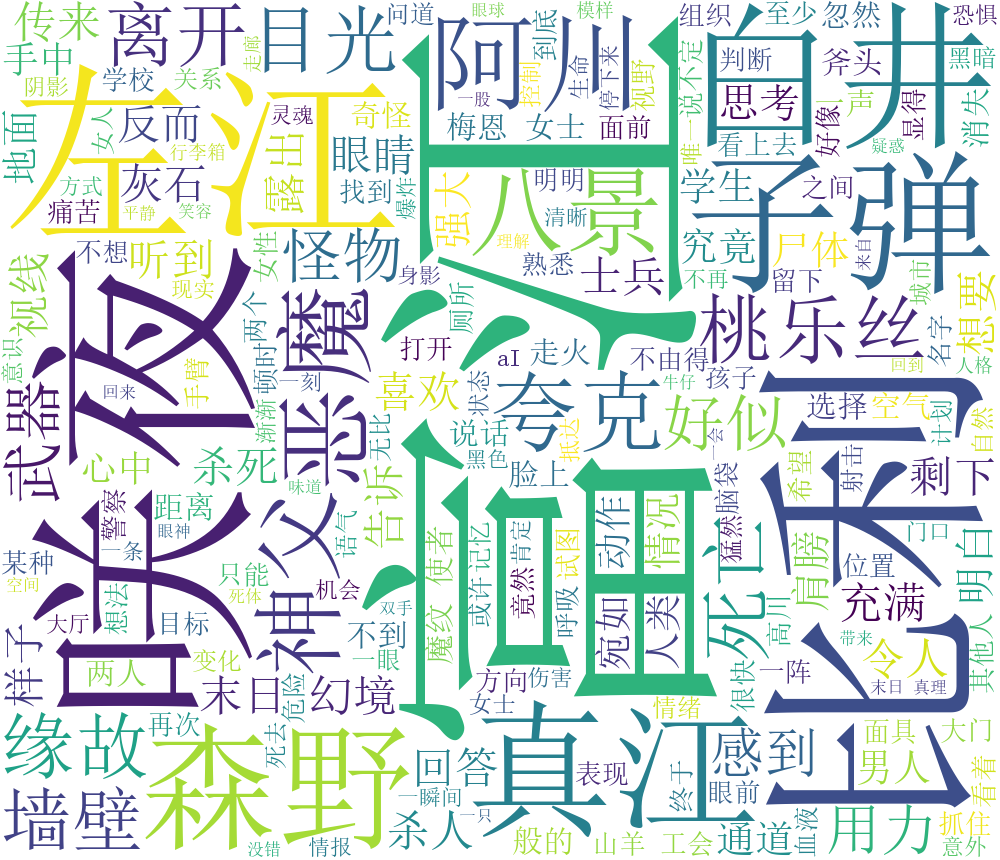

效果图:

Below is the code for counting word frequencies and then sorting the words by frequency and by word length.

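```python
# Count word frequencies (final is the filtered word list from above)
freqD2 = {}
for word2 in final:
    freqD2[word2] = freqD2.get(word2, 0) + 1

# Sort by frequency, highest first, and write to sort.txt
counter_list = sorted(freqD2.items(), key=lambda x: x[1], reverse=True)
# Highest frequency + 1: used as a weight so that word length dominates
# the combined sort key below
_2000 = counter_list[0][1] + 1
print(_2000)
with open('sort.txt', 'w', encoding='utf-8') as fp:
    for word, count in counter_list:
        fp.write(word + ':' + str(count) + '\n')

# Sort by word length first, then by frequency, and write to sortlen.txt
counter_list = sorted(freqD2.items(), key=lambda x: len(x[0]) * _2000 + x[1], reverse=True)
with open('sortlen.txt', 'w', encoding='utf-8') as fp:
    for word, count in counter_list:
        fp.write(word + ':' + str(count) + '\n')
```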
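For reference, the standard library's `collections.Counter` does the same counting as the `freqD2` loop, and its `most_common()` method returns `(word, count)` pairs sorted by count, highest first. A small sketch; the hard-coded list below is made-up sample data standing in for `final`.

```python
from collections import Counter

# Made-up stand-in for the filtered word list `final`
final = ["森林", "少年", "森林", "音乐", "少年", "森林"]

freq = Counter(final)          # word -> count, same content as the freqD2 dict
by_count = freq.most_common()  # list of (word, count), highest count first
print(by_count)  # → [('森林', 3), ('少年', 2), ('音乐', 1)]
```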

The sorting code is convenient and worth borrowing. Python is a great tool: powerful, and its code is easy to reuse.
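Why the length-then-frequency key `len(x[0]) * _2000 + x[1]` works: `_2000` is larger than any frequency, so the frequency term can never add up to a whole unit of length, and words are ordered by length first and by frequency second. A tuple key expresses the same ordering without the extra constant, because Python compares tuples element by element. A small check with made-up counts:

```python
# Made-up word counts
freq = {"书": 9, "森林": 3, "少年": 5, "图书馆": 2, "音乐": 5}

top = max(freq.values()) + 1  # plays the role of _2000 in the code above

by_weight = sorted(freq.items(), key=lambda x: len(x[0]) * top + x[1], reverse=True)
by_tuple = sorted(freq.items(), key=lambda x: (len(x[0]), x[1]), reverse=True)

print(by_weight)  # → [('图书馆', 2), ('少年', 5), ('音乐', 5), ('森林', 3), ('书', 9)]
print(by_weight == by_tuple)  # → True
```

The tuple form also avoids having to compute the maximum frequency first.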