D-Bus Performance Analysis

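- Structured namespace;

- Architecture-independent data format;

- Support for most common data elements in messages;

- Generic remote call interface with exception handling;

- Support for broadcast-style communication.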

References: "Building object-oriented software with the D-Bus messaging system"

"Object-oriented IPC, D-bus and CORBA"

General OO-IPC architecture

Because OO-IPC usually defines a platform-independent abstract interface and wire message protocol, the caller and the callee can be implemented with different object models, in different languages, on different systems and processors. The proxy / adapter or object broker is usually implemented in libraries, so programmers only need to define their own objects and interfaces and then implement them. Each type of OO-IPC usually comes with an interface definition language (IDL), which may be XML-based. The communication topology can be a software bus that supports multicast, or a point-to-point network. This underlying topology is usually transparent to the programmer.
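To make the proxy / adapter split concrete, here is a minimal in-process sketch. It assumes JSON as a stand-in wire protocol (real D-Bus uses its own binary format), and the class names `Proxy`, `Adapter`, `Echo` and the interface name `com.example.Echo` are hypothetical. The point is that the caller only sees an ordinary method call, while encoding, dispatch, and error propagation live in library-style code.

```python
import json
from typing import Any, Callable, Dict, Tuple


# Hypothetical wire format: JSON stands in for a real binary protocol.
# Caller and callee only have to agree on this encoding, not on languages.
def encode_call(interface: str, member: str, args: list) -> bytes:
    return json.dumps({"interface": interface, "member": member, "args": args}).encode()


def decode_call(data: bytes) -> Dict[str, Any]:
    return json.loads(data.decode())


class Adapter:
    """Callee side: decodes messages and dispatches them to exported objects."""

    def __init__(self) -> None:
        self._methods: Dict[Tuple[str, str], Callable[..., Any]] = {}

    def export(self, interface: str, obj: Any) -> None:
        # Register every public method of obj under the given interface name.
        for name in dir(obj):
            if not name.startswith("_") and callable(getattr(obj, name)):
                self._methods[(interface, name)] = getattr(obj, name)

    def handle(self, data: bytes) -> bytes:
        msg = decode_call(data)
        method = self._methods.get((msg["interface"], msg["member"]))
        if method is None:
            # Errors travel back to the caller as a reply, not as a crash.
            return json.dumps({"error": "UnknownMethod"}).encode()
        return json.dumps({"result": method(*msg["args"])}).encode()


class Proxy:
    """Caller side: turns attribute calls into encoded messages."""

    def __init__(self, interface: str, transport: Callable[[bytes], bytes]) -> None:
        self._interface = interface
        self._transport = transport

    def __getattr__(self, member: str) -> Callable[..., Any]:
        def call(*args: Any) -> Any:
            raw = self._transport(encode_call(self._interface, member, list(args)))
            reply = json.loads(raw.decode())
            if "error" in reply:
                raise RuntimeError(reply["error"])
            return reply["result"]
        return call


class Echo:
    """The user-defined object: the only part the programmer writes."""

    def Echo(self, text: str) -> str:
        return text
```

Usage: `adapter = Adapter(); adapter.export("com.example.Echo", Echo()); Proxy("com.example.Echo", adapter.handle).Echo("hello")` returns `"hello"`. Here the transport is a direct function call; in a real system it would be a socket or a bus connection, which is exactly the part the text says is transparent to the programmer.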

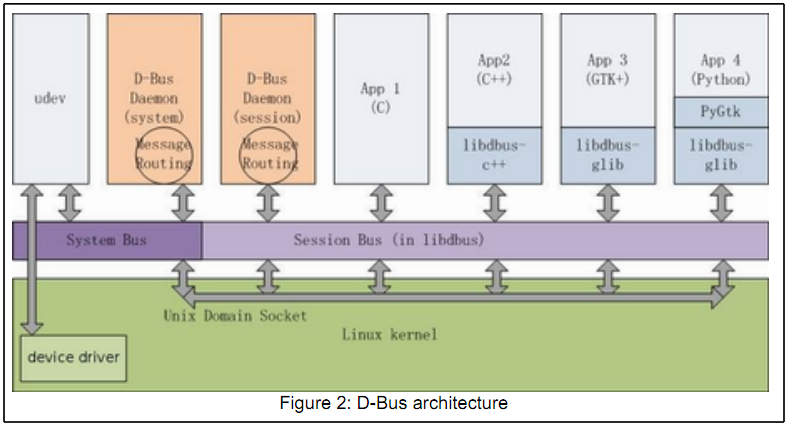

D-Bus architecture

The topology of D-Bus looks like a software bus shared by every process connected to it, so any process can communicate with any other process, or multicast / broadcast an event. This topology is implemented in a hub-and-spoke fashion, i.e. all processes connect to the daemon. Every process on D-Bus has a connection to the bus identified by a unique bus name.
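The hub-and-spoke arrangement can be modeled in a few lines. This is a toy, in-process sketch, not libdbus: the `Daemon`, `Connection`, and `Message` classes are hypothetical, and only the `:1.N` shape of the unique names mirrors what real D-Bus hands out. It also logs which party is woken up for each hop, which makes visible the four wakeups per RPC discussed in the next section.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Message:
    kind: str                # "method_call" or "method_return"
    destination: str = ""
    sender: str = ""         # stamped by the daemon, not trusted from the client
    body: object = None


class Daemon:
    """Toy hub: connections never talk to each other directly."""

    def __init__(self) -> None:
        self._next = 1
        self.connections: Dict[str, "Connection"] = {}
        self.wakeups: List[str] = []   # which party was woken up, in order

    def connect(self, conn: "Connection") -> str:
        # Real D-Bus unique names look like ":1.42"; we mimic that pattern.
        name = f":1.{self._next}"
        self._next += 1
        self.connections[name] = conn
        return name

    def send(self, sender: str, msg: Message) -> None:
        self.wakeups.append("daemon")          # sending wakes the daemon first
        msg.sender = sender
        target = self.connections[msg.destination]
        self.wakeups.append(msg.destination)   # routing then wakes the recipient
        target.deliver(msg)


class Connection:
    def __init__(self, bus: Daemon,
                 handler: Optional[Callable[[object], object]] = None) -> None:
        self.bus = bus
        self.handler = handler
        self.inbox: List[Message] = []
        self.name = bus.connect(self)

    def call(self, destination: str, body: object) -> None:
        self.bus.send(self.name, Message("method_call", destination, body=body))

    def deliver(self, msg: Message) -> None:
        if msg.kind == "method_call" and self.handler is not None:
            reply = Message("method_return", msg.sender, body=self.handler(msg.body))
            self.bus.send(self.name, reply)
        else:
            self.inbox.append(msg)
```

Usage: with `bus = Daemon()`, a server `Connection(bus, handler=lambda s: s)` gets `:1.1` and a client `Connection(bus)` gets `:1.2`; after `client.call(":1.1", "hello")` the reply sits in `client.inbox` and `bus.wakeups` is `["daemon", ":1.1", "daemon", ":1.2"]`.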

This paragraph gets right to the heart of the D-Bus topology: every process must connect to the daemon in order to receive D-Bus messages.

D-Bus supports two basic IPC operations: signals and methods. Signals implement a publisher / subscriber model and are usually used to deliver events to other applications. Methods implement a one-to-one caller / callee model, the same as RPC. All operations eventually generate bus messages, which are transported over Unix domain sockets and routed by the daemon. For method calls, routing is based on source and destination. For signals, routing is based on match rules over message attributes such as message type, bus name, object path, and interface name.
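Signal routing by match rules can be sketched as follows. D-Bus match rules are strings of comma-separated `key='value'` pairs (for example `type='signal',interface='org.freedesktop.DBus',member='NameOwnerChanged'`). The parser below is a simplified sketch: it ignores the escaped-quote syntax and the `argN` / namespace keys of the real specification, and it represents a message as a plain dict, which is an assumption for illustration.

```python
from typing import Dict


def parse_match_rule(rule: str) -> Dict[str, str]:
    """Parse comma-separated key='value' pairs (no escape handling)."""
    result: Dict[str, str] = {}
    i = 0
    while i < len(rule):
        eq = rule.index("=", i)
        key = rule[i:eq].strip()
        if rule[eq + 1:eq + 2] != "'":
            raise ValueError(f"value for {key!r} must be single-quoted")
        end = rule.index("'", eq + 2)      # commas inside quotes are kept
        result[key] = rule[eq + 2:end]
        i = end + 1
        if i < len(rule):
            if rule[i] != ",":
                raise ValueError("expected ',' between pairs")
            i += 1
    return result


def matches(rule: Dict[str, str], message: Dict[str, str]) -> bool:
    # Every key in the rule must equal the message field;
    # keys absent from the rule act as wildcards.
    return all(message.get(key) == value for key, value in rule.items())
```

In a daemon, each subscriber's parsed rules would be checked against every outgoing signal, which is one source of the routing overhead (matching and filtering) described in the next section: the cost grows with the number of registered rules.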

Problems of D-Bus

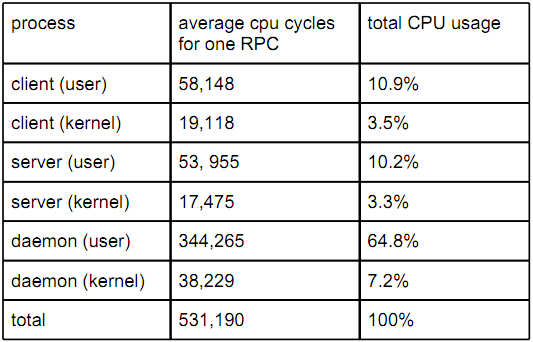

However, some problems have not been addressed. First, many event-driven systems and applications need real-time guarantees, which neither Linux nor D-Bus provides. Second, D-Bus binding infrastructures such as GTK, Qt, and Java are often hard to use, and rarely used, in embedded systems, so new libraries, lightweight language bindings, event-driven middleware, and application frameworks are more desirable. Third, and most importantly, current D-Bus implementations have significant overhead in embedded environments with limited CPU speed and memory.

The overhead is fourfold. The first is abstraction overhead caused by multiple layers of encapsulation in the library (four layers: transport, message protocol, object, and event). The second is security overhead caused by argument assertion and validation, safe string operations, and safe memory allocation and freeing. The third is object model overhead caused by serialization and deserialization (memory copies), introspection (XML parsing), and method name binding (D-Bus interface v-table access and indirect calls). The last is routing overhead in the daemon caused by matching, filtering, policing, and forwarding. One RPC may cause four context switches: the call message first wakes up the daemon for routing and then the callee, and the return message causes two more wakeups.

Performance data for an echo client/server was collected with the Linux perf tools. The test uses plain libdbus (without the overhead of a C++ object layer) and performs 1 million method calls. The call argument is a 5-byte character string and the return value is a 4-byte status and a 4-byte integer, which is typical of a D-Bus method call. In our test, the average latency of one RPC is about 1.22 ms (on a Core 2 Duo E8400 with 4 GB of memory), which is considerably long.
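To see where the serialization (memory copy) cost comes from, the sketch below marshals the echo test's message bodies following the D-Bus wire rules for `STRING` (a 4-byte-aligned `UINT32` length, the UTF-8 bytes, then a NUL terminator) and for `INT32` / `UINT32` (4-byte aligned). It assumes little-endian encoding, assumes the 5-byte argument is `"hello"`, and assumes the reply signature is `"ui"` (status, integer); only the body is built here, not the message header, and only the type codes `s`, `i`, `u` are supported.

```python
import struct
from typing import List


def _pad(buf: bytearray, alignment: int) -> None:
    # Each value starts on its natural boundary; the gap is zero-filled.
    while len(buf) % alignment:
        buf.append(0)


def marshal_body(signature: str, values: List[object]) -> bytes:
    """Little-endian D-Bus body marshaling for type codes 's', 'i', 'u' only."""
    if len(signature) != len(values):
        raise ValueError("signature and values differ in length")
    buf = bytearray()
    for code, value in zip(signature, values):
        if code == "s":
            data = str(value).encode("utf-8")
            _pad(buf, 4)
            buf += struct.pack("<I", len(data))   # length excludes the NUL
            buf += data + b"\x00"
        elif code == "i":
            _pad(buf, 4)
            buf += struct.pack("<i", value)
        elif code == "u":
            _pad(buf, 4)
            buf += struct.pack("<I", value)
        else:
            raise ValueError(f"unsupported type code {code!r}")
    return bytes(buf)
```

For example, `marshal_body("s", ["hello"])` yields the 10 bytes `b"\x05\x00\x00\x00hello\x00"` and `marshal_body("ui", [0, 42])` yields 8 bytes. Every such body is copied into a buffer on send and decoded again on receipt, and in the hub-and-spoke design each message passes through the daemon as well, so these copies multiply with the number of hops.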

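The analysis above is incisive: although D-Bus has long been called a "lightweight" IPC, it still carries "significant" overhead, which is a heavy burden for embedded devices. The authors illustrate this with the four context switches caused by a single RPC. For ordinary graphical desktop devices, of course, this "heavy" overhead is hardly worth mentioning.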

D-Bus usage

Despite these problems, nearly all desktop GUI applications on Linux use D-Bus, simply because these problems do not matter on desktop machines and traffic over D-Bus is really light. These applications include basic desktop environment components such as the GNOME panel and session manager, most desktop management gadgets such as the network manager and display manager, crucial system utilities such as the Nautilus[12] file manager and the screen saver, device applets such as the Bluetooth applet, system controllers such as UPower[13] and UDisks[14], authorization frameworks such as PolicyKit[15], hardware event monitors such as udev, and most GUI user applications such as the Audacious music player and the Brasero disc burner[16].

Another important use of D-Bus is delivering hardware state information to user-space applications. Hardware events are usually generated by device drivers inside the kernel, which use the uevent[17] interface to send these events to udev[5]. The systemd[18] udev daemon (formerly the standalone udevd) interprets this information, translates it into predefined D-Bus signals, and broadcasts them on D-Bus. Any process interested in hardware events can add a match rule to listen for such signals. This publisher / subscriber approach makes the hardware event interface more flexible. Although D-Bus is mainly used as an OO-IPC mechanism in desktop environments, one can try to port or implement it in embedded environments that do not suffer from the problems mentioned before.
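The translation step described above (kernel uevent in, D-Bus signal out) can be sketched like this. A raw uevent payload is a header of the form `ACTION@DEVPATH` followed by NUL-separated `KEY=VALUE` strings such as `ACTION=add` and `SUBSYSTEM=usb`. The object path `/com/example/Hardware`, the interface `com.example.Hardware`, and the member names are hypothetical placeholders, not names used by any real daemon, and the signal is represented as a plain dict rather than a real D-Bus message.

```python
from typing import Dict


def parse_uevent(payload: bytes) -> Dict[str, str]:
    """Split a raw uevent payload into its KEY=VALUE environment."""
    env: Dict[str, str] = {}
    for part in payload.split(b"\x00")[1:]:   # skip the "ACTION@DEVPATH" header
        if b"=" in part:                      # also skips the trailing empty part
            key, _, value = part.partition(b"=")
            env[key.decode()] = value.decode()
    return env


# Hypothetical mapping from uevent actions to signal member names.
_MEMBERS = {"add": "DeviceAdded", "remove": "DeviceRemoved"}


def to_signal(env: Dict[str, str]) -> Dict[str, object]:
    # Placeholder path/interface names; a real daemon defines its own.
    return {
        "type": "signal",
        "path": "/com/example/Hardware",
        "interface": "com.example.Hardware",
        "member": _MEMBERS.get(env.get("ACTION", ""), "DeviceChanged"),
        "args": [env.get("SUBSYSTEM", ""), env.get("DEVPATH", "")],
    }
```

A subscriber would then register a match rule such as `type='signal',interface='com.example.Hardware'` and receive only these hardware signals, without knowing anything about netlink or the kernel interface.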

With additional middleware, one can build event-driven application frameworks for certain embedded systems, especially systems that need interactive communication or use a sensor / actuator model, such as robots. Magnenat and Mondada proposed such a scheme for robots called ASEBA[19]. Sarker and Dahl also designed a D-Bus-based communication system named HEAD[20]. Such systems are implemented on embedded Linux and peripheral MCUs.

from: https://dbus.freedesktop.org/doc/dbus-specification.html


