Single-node Hadoop setup on CentOS

Local machine resources are limited, so this sets up a single-node Hadoop for development and testing.

1. Install Java

mkdir /usr/local/java

Upload jdk-8u181-linux-x64.tar.gz to /usr/local/java, then:

cd /usr/local/java

tar zxvf jdk-8u181-linux-x64.tar.gz

rm -f jdk-8u181-linux-x64.tar.gz

---Set the JDK environment variables (append the lines below to /etc/profile)

vi /etc/profile

JAVA_HOME=/usr/local/java/jdk1.8.0_181

JRE_HOME=/usr/local/java/jdk1.8.0_181/jre

CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

PATH=$JAVA_HOME/bin:$PATH

export PATH JAVA_HOME JRE_HOME CLASSPATH

source /etc/profile

java -version
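As an alternative to editing /etc/profile by hand, the same variables can live in a drop-in script under /etc/profile.d/, which CentOS's /etc/profile sources at login. The sketch below assumes the JDK path from this post; `write_java_profile` is a hypothetical helper name, and it omits CLASSPATH, which running Hadoop on JDK 8 generally does not need.

```shell
#!/usr/bin/env bash
# Sketch: write the JDK variables to a profile drop-in instead of editing /etc/profile.
# Hypothetical helper; the JDK path defaults to the one used in this post.
write_java_profile() {
  local target="$1"                                   # e.g. /etc/profile.d/java.sh (needs root)
  local jdk_dir="${2:-/usr/local/java/jdk1.8.0_181}"
  # ${jdk_dir} expands now; the escaped \${...} references stay literal in the file.
  cat > "$target" <<EOF
export JAVA_HOME=${jdk_dir}
export JRE_HOME=\${JAVA_HOME}/jre
export PATH=\${JAVA_HOME}/bin:\${PATH}
EOF
  chmod 644 "$target"
}
```

As root: `write_java_profile /etc/profile.d/java.sh`, then `source /etc/profile` and `java -version`.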

2. Add a user

useradd hadoop

Configure passwordless SSH login (the SSH service must be running):

yum install -y openssh-server

yum install -y openssh-clients

systemctl start sshd.service

su - hadoop

ssh-keygen -t rsa -P ''

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

chmod 700 ~/.ssh

chmod 600 ~/.ssh/authorized_keys
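The key-authorization steps above can be wrapped so they are safe to re-run: the public key is appended only if it is not already present, and the permissions are tightened every time. This is a sketch assuming `ssh-keygen -t rsa -P ''` has already created ~/.ssh/id_rsa.pub; `authorize_own_key` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: authorize the user's own RSA public key for passwordless SSH (idempotent).
# Hypothetical helper; assumes ssh-keygen has already produced ~/.ssh/id_rsa.pub.
authorize_own_key() {
  local home_dir="${1:-$HOME}"
  local ssh_dir="$home_dir/.ssh"
  local pub="$ssh_dir/id_rsa.pub"
  local auth="$ssh_dir/authorized_keys"

  [ -f "$pub" ] || { echo "missing $pub; run ssh-keygen first" >&2; return 1; }
  touch "$auth"
  # Append (>>) only when the exact key line is not already in authorized_keys.
  grep -qxF -e "$(cat "$pub")" "$auth" || cat "$pub" >> "$auth"
  # sshd's StrictModes check refuses keys if these are writable by group or others.
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

Run it as the hadoop user with `authorize_own_key`, then confirm with `ssh localhost true`, which should not ask for a password.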

3. Create directories and extract Hadoop

As root (run exit first if you are still logged in as hadoop from step 2):

mkdir -p /opt/hadoop

Upload hadoop-2.8.5.tar.gz to /opt/hadoop, then give the directory to the hadoop user:

chown -R hadoop:hadoop /opt/hadoop

mkdir -p /u01/hadoop

mkdir /u01/hadoop/tmp

mkdir /u01/hadoop/var

mkdir /u01/hadoop/dfs

mkdir /u01/hadoop/dfs/name

mkdir /u01/hadoop/dfs/data

chown -R hadoop:hadoop /u01/hadoop

su - hadoop

cd /opt/hadoop

tar -xzvf hadoop-2.8.5.tar.gz

rm -f hadoop-2.8.5.tar.gz
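The five mkdir calls above can be collapsed into one with bash brace expansion. A minimal sketch, assuming the /u01/hadoop layout from this post; `make_hadoop_dirs` is a hypothetical helper, and the ownership change is optional because chown needs root.

```shell
#!/usr/bin/env bash
# Sketch: create the local Hadoop data directories in one command.
# Hypothetical helper; base defaults to /u01/hadoop as used in this post.
make_hadoop_dirs() {
  local base="${1:-/u01/hadoop}"
  local owner="${2:-}"                     # e.g. hadoop:hadoop; chown requires root
  # Brace expansion yields tmp, var, dfs/name and dfs/data under $base.
  mkdir -p "$base"/{tmp,var,dfs/name,dfs/data}
  if [ -n "$owner" ]; then
    chown -R "$owner" "$base"
  fi
}
```

As root: `make_hadoop_dirs /u01/hadoop hadoop:hadoop`.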

4. Configure environment variables (as the hadoop user)

vi ~/.bashrc

export HADOOP_HOME=/opt/hadoop/hadoop-2.8.5

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib:$HADOOP_COMMON_LIB_NATIVE_DIR"

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

source ~/.bashrc
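After `source ~/.bashrc`, a quick check can confirm that HADOOP_HOME points at a real directory and that its bin and sbin directories made it onto PATH. This is a sketch; `check_hadoop_env` is a hypothetical helper that only inspects the current shell's variables.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check the ~/.bashrc Hadoop settings in the current shell.
# Hypothetical helper; prints each problem and returns non-zero if any is found.
check_hadoop_env() {
  local ok=0 d
  if [ -z "${HADOOP_HOME:-}" ] || [ ! -d "$HADOOP_HOME" ]; then
    echo "HADOOP_HOME is unset or not a directory: '${HADOOP_HOME:-}'" >&2
    return 1
  fi
  for d in "$HADOOP_HOME/bin" "$HADOOP_HOME/sbin"; do
    case ":$PATH:" in
      *":$d:"*) ;;                                   # already on PATH
      *) echo "not on PATH: $d" >&2; ok=1 ;;
    esac
  done
  return "$ok"
}
```

Usage: `check_hadoop_env && hadoop version`.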

5. Edit the configuration files

Path: /opt/hadoop/hadoop-2.8.5/etc/hadoop
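The configuration files refer to this node as hadoop01 (core-site.xml, mapred-site.xml, slaves), so that name must resolve to the machine. A common way is an /etc/hosts entry. The sketch below adds one only if the name is not already mapped; it assumes 172.18.12.1 (the address used for the web UI in step 6) is this host's IP, and `ensure_host_entry` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: make sure a host name is mapped in a hosts file, without adding duplicates.
# Hypothetical helper; the IP/name pair is an assumption taken from this post.
ensure_host_entry() {
  local hosts_file="$1" ip="$2" name="$3"
  # Succeeds if any non-comment line already lists the name as one of its host fields.
  if awk -v n="$name" '!/^[ \t]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s\t%s\n' "$ip" "$name" >> "$hosts_file"
}
```

As root: `ensure_host_entry /etc/hosts 172.18.12.1 hadoop01`, then `getent hosts hadoop01` should print the mapping.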

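- hadoop-env.sh (set JAVA_HOME explicitly; the start scripts launch daemons over SSH, where /etc/profile is not necessarily read)

export JAVA_HOME=/usr/local/java/jdk1.8.0_181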

- core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/u01/hadoop/tmp</value>

<description>A base for other temporary directories.</description>

</property>

</configuration>

- hdfs-site.xml

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/u01/hadoop/dfs/name</value>

<description>Path on the local filesystem where the NameNode stores the namespace and transaction logs persistently.</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/u01/hadoop/dfs/data</value>

<description>Comma-separated list of paths on the local filesystem of a DataNode where it should store its blocks.</description>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

<description>Disable HDFS permission checking (acceptable for a development/test node only)</description>

</property>

</configuration>

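- mapred-site.xml (Hadoop 2.x ships only mapred-site.xml.template; create the file first with cp mapred-site.xml.template mapred-site.xml)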

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>hadoop01:49001</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>/u01/hadoop/var</value>

</property>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

- yarn-site.xml

- slaves

hadoop01

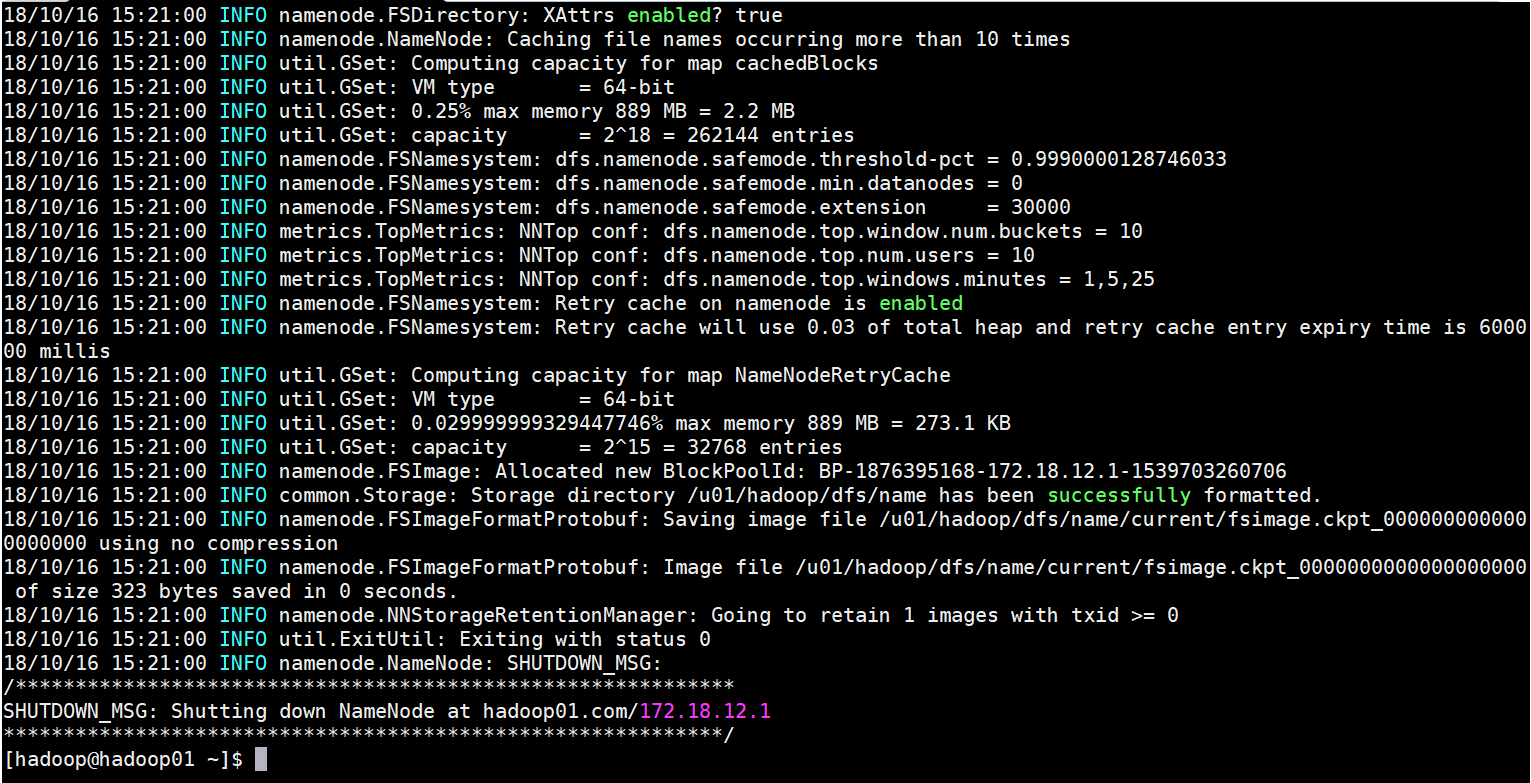
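The yarn-site.xml item above lists no properties. A frequently used minimum for running MapReduce on YARN on one node is to name the ResourceManager host and enable the MapReduce shuffle service; the sketch below writes exactly that. These two properties are an assumption, not taken from this post, and `write_yarn_site` is a hypothetical helper that overwrites any existing yarn-site.xml in the target directory.

```shell
#!/usr/bin/env bash
# Sketch: write a minimal single-node yarn-site.xml (overwrites the existing file).
# Hypothetical helper; the property choice is an assumption, not from the original post.
write_yarn_site() {
  local conf_dir="${1:-/opt/hadoop/hadoop-2.8.5/etc/hadoop}"
  local rm_host="${2:-hadoop01}"
  cat > "$conf_dir/yarn-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property>
    <!-- Host running the ResourceManager (same name as in core-site.xml) -->
    <name>yarn.resourcemanager.hostname</name>
    <value>${rm_host}</value>
  </property>
  <property>
    <!-- Shuffle service that MapReduce jobs need on each NodeManager -->
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>
EOF
}
```

As the hadoop user: `write_yarn_site` (defaults to this post's config directory and hadoop01).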

6. Start the services

---Format the HDFS filesystem (first start only; formatting again erases the existing HDFS metadata)

hdfs namenode -format
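Because formatting wipes the NameNode metadata, a small guard can refuse to format unless the name directory (dfs.namenode.name.dir, /u01/hadoop/dfs/name in this post) is still empty. A minimal sketch; `name_dir_is_empty` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: only allow "hdfs namenode -format" when the NameNode directory is empty or absent.
# Hypothetical helper; the default path matches hdfs-site.xml in this post.
name_dir_is_empty() {
  local dir="${1:-/u01/hadoop/dfs/name}"
  # ls -A lists hidden entries too, so any leftover metadata counts as "not empty".
  [ ! -d "$dir" ] || [ -z "$(ls -A "$dir")" ]
}
```

Usage: `name_dir_is_empty && hdfs namenode -format`.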

---Start the Hadoop services

start-dfs.sh # start HDFS

start-yarn.sh # start YARN

jps # list the running Java processes

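As the screenshots show, five services should be running: NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager (jps also lists itself).

If startup fails, the HDFS daemons can be started individually: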

hadoop-daemon.sh start namenode

hadoop-daemon.sh start datanode
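Instead of reading the jps output by eye, it can be compared against the five daemons expected on this node. The sketch reads jps-style lines (`<pid> <name>`) on stdin and prints every expected daemon that is missing; `missing_daemons` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: report which of the five single-node daemons are absent from jps output.
# Hypothetical helper; reads "<pid> <name>" lines on stdin.
missing_daemons() {
  local running expected
  running="$(awk '{print $2}')"
  for expected in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode.
    if ! printf '%s\n' "$running" | grep -qx "$expected"; then
      echo "$expected"
    fi
  done
}
```

Usage: `jps | missing_daemons`. Empty output means all five are up; for anything listed, the daemon logs under $HADOOP_HOME/logs are the first place to look.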

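Verify in a browser (this is the YARN ResourceManager UI; the Hadoop 2.x NameNode UI listens on port 50070):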

http://172.18.12.1:8088/cluster

Try creating directories and uploading a file to HDFS:

hdfs dfs -mkdir /user

hdfs dfs -mkdir /user/hadoop

hdfs dfs -put $HADOOP_HOME/sbin/start-dfs.sh /user/hadoop
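The browser check can also be scripted against the ResourceManager's REST endpoint /ws/v1/cluster/info, whose JSON response includes the cluster state. This sketch only parses the response from stdin; `rm_started` is a hypothetical helper, and 172.18.12.1 is the address used in this post.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a ResourceManager cluster-info JSON response reports state STARTED.
# Hypothetical helper; feed it the output of the /ws/v1/cluster/info endpoint.
rm_started() {
  grep -Eq '"state"[[:space:]]*:[[:space:]]*"STARTED"'
}
```

Usage: `curl -s http://172.18.12.1:8088/ws/v1/cluster/info | rm_started && echo "YARN is up"`. On the HDFS side, `hdfs dfs -test -e /user/hadoop/start-dfs.sh` returns 0 once the upload above has succeeded.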

![clipboard[1]](https://img2018.cnblogs.com/blog/1511013/201810/1511013-20181016233320192-782837134.png)

![clipboard[2]](https://img2018.cnblogs.com/blog/1511013/201810/1511013-20181016233321642-2048650473.png)

![clipboard[3]](https://img2018.cnblogs.com/blog/1511013/201810/1511013-20181016233323338-1034520837.png)

![clipboard[4]](https://img2018.cnblogs.com/blog/1511013/201810/1511013-20181016233324918-233490188.png)