Airflow problem: Task received SIGTERM signal

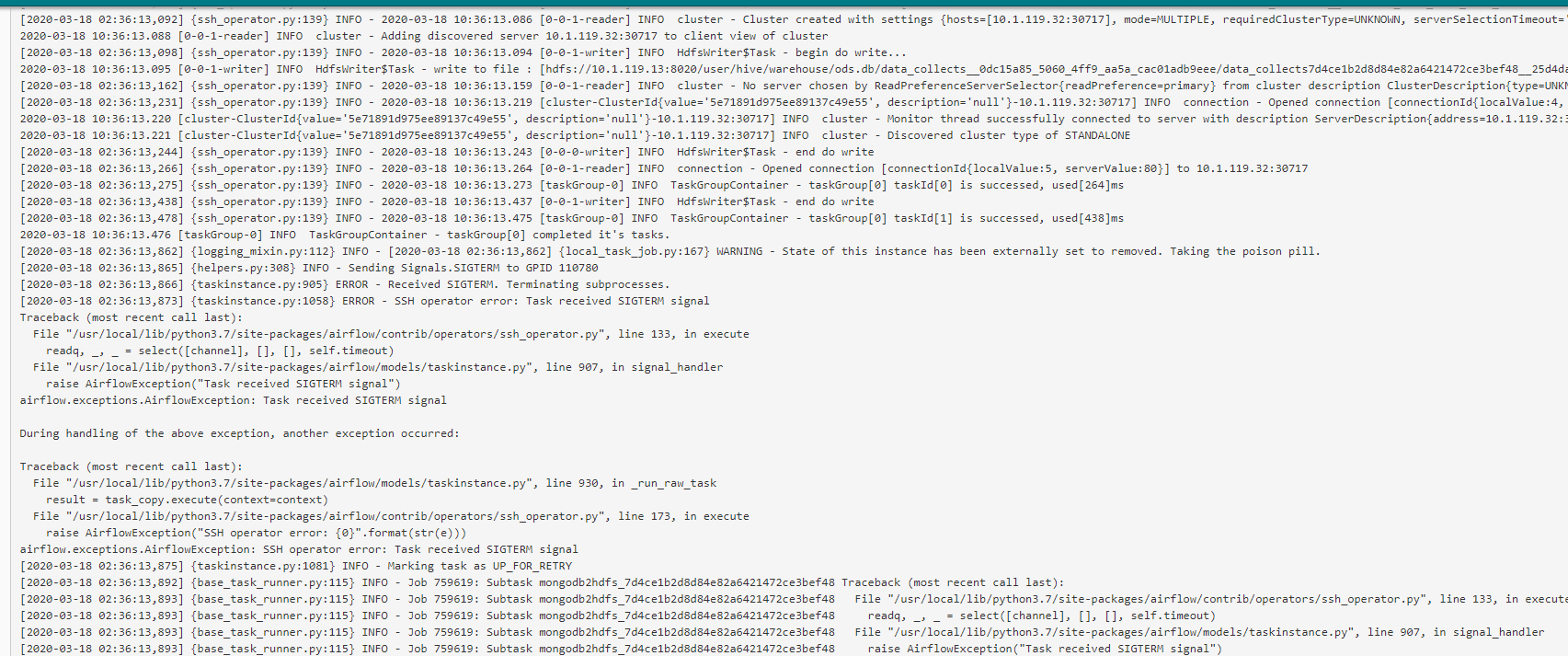

While using the Airflow scheduler, we ran into the following problem:

```
*** Reading local file: /usr/local/airflow/logs/dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48/mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48/2020-03-18T02:36:05.955649+00:00/1.log
[2020-03-18 02:36:08,865] {taskinstance.py:630} INFO - Dependencies all met for <TaskInstance: dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48.mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 2020-03-18T02:36:05.955649+00:00 [queued]>
[2020-03-18 02:36:08,881] {taskinstance.py:630} INFO - Dependencies all met for <TaskInstance: dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48.mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 2020-03-18T02:36:05.955649+00:00 [queued]>
[2020-03-18 02:36:08,881] {taskinstance.py:841} INFO - --------------------------------------------------------------------------------
[2020-03-18 02:36:08,881] {taskinstance.py:842} INFO - Starting attempt 1 of 4
[2020-03-18 02:36:08,881] {taskinstance.py:843} INFO - --------------------------------------------------------------------------------
[2020-03-18 02:36:08,893] {taskinstance.py:862} INFO - Executing <Task(SSHOperator): mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48> on 2020-03-18T02:36:05.955649+00:00
[2020-03-18 02:36:08,893] {base_task_runner.py:133} INFO - Running: ['airflow', 'run', 'dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48', 'mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48', '2020-03-18T02:36:05.955649+00:00', '--job_id', '759619', '--pool', 'default_pool', '--raw', '-sd', 'DAGS_FOLDER/dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48.py', '--cfg_path', '/tmp/tmp6jf69h_y']
[2020-03-18 02:36:09,494] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/configuration.py:226: FutureWarning: The task_runner setting in [core] has the old default value of 'BashTaskRunner'. This value has been changed to 'StandardTaskRunner' in the running config, but please update your config before Apache Airflow 2.0.
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 FutureWarning
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/configuration.py:606: DeprecationWarning: Specifying both AIRFLOW_HOME environment variable and airflow_home in the config file is deprecated. Please use only the AIRFLOW_HOME environment variable and remove the config file entry.
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 warnings.warn(msg, category=DeprecationWarning)
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/config_templates/airflow_local_settings.py:65: DeprecationWarning: The elasticsearch_host option in [elasticsearch] has been renamed to host - the old setting has been used, but please update your config.
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 ELASTICSEARCH_HOST = conf.get('elasticsearch', 'HOST')
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/config_templates/airflow_local_settings.py:67: DeprecationWarning: The elasticsearch_log_id_template option in [elasticsearch] has been renamed to log_id_template - the old setting has been used, but please update your config.
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 ELASTICSEARCH_LOG_ID_TEMPLATE = conf.get('elasticsearch', 'LOG_ID_TEMPLATE')
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/config_templates/airflow_local_settings.py:69: DeprecationWarning: The elasticsearch_end_of_log_mark option in [elasticsearch] has been renamed to end_of_log_mark - the old setting has been used, but please update your config.
[2020-03-18 02:36:09,495] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 ELASTICSEARCH_END_OF_LOG_MARK = conf.get('elasticsearch', 'END_OF_LOG_MARK')
[2020-03-18 02:36:09,624] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:09,624] {settings.py:252} INFO - settings.configure_orm(): Using pool settings. pool_size=5, max_overflow=10, pool_recycle=1800, pid=110780
[2020-03-18 02:36:10,159] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,158] {configuration.py:299} WARNING - section/key [rest_api_plugin/log_loading] not found in config
[2020-03-18 02:36:10,159] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,159] {rest_api_plugin.py:60} WARNING - Initializing [rest_api_plugin/LOG_LOADING] with default value = False
[2020-03-18 02:36:10,159] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,159] {configuration.py:299} WARNING - section/key [rest_api_plugin/filter_loading_messages_in_cli_response] not found in config
[2020-03-18 02:36:10,159] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,159] {rest_api_plugin.py:60} WARNING - Initializing [rest_api_plugin/FILTER_LOADING_MESSAGES_IN_CLI_RESPONSE] with default value = True
[2020-03-18 02:36:10,160] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,159] {configuration.py:299} WARNING - section/key [rest_api_plugin/rest_api_plugin_http_token_header_name] not found in config
[2020-03-18 02:36:10,160] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,160] {rest_api_plugin.py:50} WARNING - Initializing [rest_api_plugin/REST_API_PLUGIN_HTTP_TOKEN_HEADER_NAME] with default value = rest_api_plugin_http_token
[2020-03-18 02:36:10,160] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,160] {configuration.py:299} WARNING - section/key [rest_api_plugin/rest_api_plugin_expected_http_token] not found in config
[2020-03-18 02:36:10,160] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,160] {rest_api_plugin.py:50} WARNING - Initializing [rest_api_plugin/REST_API_PLUGIN_EXPECTED_HTTP_TOKEN] with default value = None
[2020-03-18 02:36:10,301] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,300] {__init__.py:51} INFO - Using executor SequentialExecutor
[2020-03-18 02:36:10,301] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,301] {dagbag.py:92} INFO - Filling up the DagBag from /usr/local/airflow/dags/dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48.py
[2020-03-18 02:36:10,333] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/utils/helpers.py:428: DeprecationWarning: Importing 'ExternalTaskSensor' directly from 'airflow.operators' has been deprecated. Please import from 'airflow.operators.[operator_module]' instead. Support for direct imports will be dropped entirely in Airflow 2.0.
[2020-03-18 02:36:10,334] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 DeprecationWarning)
[2020-03-18 02:36:10,334] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 /usr/local/lib/python3.7/site-packages/airflow/utils/helpers.py:428: DeprecationWarning: Importing 'EmailOperator' directly from 'airflow.operators' has been deprecated. Please import from 'airflow.operators.[operator_module]' instead. Support for direct imports will be dropped entirely in Airflow 2.0.
[2020-03-18 02:36:10,334] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 DeprecationWarning)
[2020-03-18 02:36:10,385] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 [2020-03-18 02:36:10,385] {cli.py:545} INFO - Running <TaskInstance: dagabcfcdeebc7d438db4ec7ef944d19b5a_7d4ce1b2d8d84e82a6421472ce3bef48.mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 2020-03-18T02:36:05.955649+00:00 [running]> on host airflow-5d68dbf958-pcvw5
[2020-03-18 02:36:10,405] {logging_mixin.py:112} INFO - [2020-03-18 02:36:10,405] {ssh_hook.py:166} WARNING - Remote Identification Change is not verified. This wont protect against Man-In-The-Middle attacks
[2020-03-18 02:36:10,405] {logging_mixin.py:112} INFO - [2020-03-18 02:36:10,405] {ssh_hook.py:170} WARNING - No Host Key Verification. This wont protect against Man-In-The-Middle attacks
[2020-03-18 02:36:10,416] {logging_mixin.py:112} INFO - [2020-03-18 02:36:10,416] {transport.py:1819} INFO - Connected (version 2.0, client OpenSSH_8.0)
[2020-03-18 02:36:10,493] {logging_mixin.py:112} INFO - [2020-03-18 02:36:10,492] {transport.py:1819} INFO - Authentication (password) successful!
[2020-03-18 02:36:10,606] {ssh_operator.py:139} INFO - DataX (DATAX-OPENSOURCE-3.0), From Alibaba ! Copyright (C) 2010-2017, Alibaba Group. All Rights Reserved.
[2020-03-18 02:36:11,206] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.202 [main] INFO VMInfo - VMInfo# operatingSystem class => sun.management.OperatingSystemImpl
[2020-03-18 02:36:11,214] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.213 [main] INFO Engine - the machine info =>
    osInfo: Oracle Corporation 1.8 25.102-b14
    jvmInfo: Linux amd64 3.10.0-862.14.4.el7.x86_64
    cpu num: 4
    totalPhysicalMemory: -0.00G
    freePhysicalMemory: -0.00G
    maxFileDescriptorCount: -1
    currentOpenFileDescriptorCount: -1
    GC Names [PS MarkSweep, PS Scavenge]
    MEMORY_NAME            | allocation_size | init_size
    PS Eden Space          | 256.00MB        | 256.00MB
    Code Cache             | 240.00MB        | 2.44MB
    Compressed Class Space | 1,024.00MB      | 0.00MB
    PS Survivor Space      | 42.50MB         | 42.50MB
    PS Old Gen             | 683.00MB        | 683.00MB
    Metaspace              | -0.00MB         | 0.00MB
[2020-03-18 02:36:11,241] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.240 [main] INFO Engine - { "content":[ { "reader":{ "name":"mongodbreader", "parameter":{ "address":[ "10.1.119.32:30717" ], "collectionName":"data_collects", "column":[ { "name":"id", "type":"string" }, { "name":"title", "type":"string" }, { "name":"description", "type":"string" }, { "name":"bys", "type":"string" }, { "name":"url", "type":"string" }, { "name":"tags", "type":"Array" }, { "name":"likes", "type":"double" } ], "dbName":"xa_dmp", "userName":"root", "userPassword":"*********" } }, "writer":{ "name":"hdfswriter", "parameter":{ "column":[ { "name":"id", "type":"STRING" }, { "name":"title", "type":"STRING" }, { "name":"description", "type":"STRING" }, { "name":"bys", "type":"STRING" }, { "name":"url", "type":"STRING" }, { "name":"tags", "type":"STRING" }, { "name":"likes", "type":"STRING" } ], "compress":"", "defaultFS":"hdfs://10.1.119.13:8020", "fieldDelimiter":"\u0001", "fileName":"data_collects7d4ce1b2d8d84e82a6421472ce3bef48", "fileType":"text", "path":"/user/hive/warehouse/ods.db/data_collects", "writeMode":"append" } } } ], "setting":{ "speed":{ "channel":2 } } }
[2020-03-18 02:36:11,260] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.259 [main] WARN Engine - prioriy set to 0, because NumberFormatException, the value is: null
[2020-03-18 02:36:11,262] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.261 [main] INFO PerfTrace - PerfTrace traceId=job_-1, isEnable=false, priority=0
2020-03-18 10:36:11.262 [main] INFO JobContainer - DataX jobContainer starts job.
[2020-03-18 02:36:11,264] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.264 [main] INFO JobContainer - Set jobId = 0
[2020-03-18 02:36:11,395] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.394 [job-0] INFO cluster - Cluster created with settings {hosts=[10.1.119.32:30717], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}
[2020-03-18 02:36:11,396] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.394 [job-0] INFO cluster - Adding discovered server 10.1.119.32:30717 to client view of cluster
[2020-03-18 02:36:11,663] {ssh_operator.py:139} INFO - 2020-03-18 10:36:11.656 [cluster-ClusterId{value='5e71891b975ee89137c49e53', description='null'}-10.1.119.32:30717] INFO connection - Opened connection [connectionId{localValue:1, serverValue:76}] to 10.1.119.32:30717
2020-03-18 10:36:11.658 [cluster-ClusterId{value='5e71891b975ee89137c49e53', description='null'}-10.1.119.32:30717] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=10.1.119.32:30717, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[4, 1, 6]}, minWireVersion=0, maxWireVersion=8, maxDocumentSize=16777216, roundTripTimeNanos=654449}
2020-03-18 10:36:11.659 [cluster-ClusterId{value='5e71891b975ee89137c49e53', description='null'}-10.1.119.32:30717] INFO cluster - Discovered cluster type of STANDALONE
[2020-03-18 02:36:12,044] {ssh_operator.py:144} WARNING - 三月 18, 2020 10:36:12 上午 org.apache.hadoop.util.NativeCodeLoader <clinit>
警告: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
[2020-03-18 02:36:12,734] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.733 [job-0] INFO JobContainer - jobContainer starts to do prepare ...
[2020-03-18 02:36:12,735] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.734 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] do prepare work .
[2020-03-18 02:36:12,736] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.735 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] do prepare work .
[2020-03-18 02:36:12,854] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.852 [job-0] INFO HdfsWriter$Job - 由于您配置了writeMode append, 写入前不做清理工作, [/user/hive/warehouse/ods.db/data_collects] 目录下写入相应文件名前缀 [data_collects7d4ce1b2d8d84e82a6421472ce3bef48] 的文件
2020-03-18 10:36:12.852 [job-0] INFO JobContainer - jobContainer starts to do split ...
2020-03-18 10:36:12.852 [job-0] INFO JobContainer - Job set Channel-Number to 2 channels.
[2020-03-18 02:36:12,896] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.894 [job-0] INFO connection - Opened connection [connectionId{localValue:2, serverValue:77}] to 10.1.119.32:30717
[2020-03-18 02:36:12,910] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.907 [job-0] INFO JobContainer - DataX Reader.Job [mongodbreader] splits to [2] tasks.
2020-03-18 10:36:12.908 [job-0] INFO HdfsWriter$Job - begin do split...
[2020-03-18 02:36:12,923] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.917 [job-0] INFO HdfsWriter$Job - splited write file name:[hdfs://10.1.119.13:8020/user/hive/warehouse/ods.db/data_collects__0dc15a85_5060_4ff9_aa5a_cac01adb9eee/data_collects7d4ce1b2d8d84e82a6421472ce3bef48__78d34f42_b4bb_4c9b_88e8_65e6d32e6879]
2020-03-18 10:36:12.918 [job-0] INFO HdfsWriter$Job - splited write file name:[hdfs://10.1.119.13:8020/user/hive/warehouse/ods.db/data_collects__0dc15a85_5060_4ff9_aa5a_cac01adb9eee/data_collects7d4ce1b2d8d84e82a6421472ce3bef48__25d4da52_f1c9_478d_b0ad_13ec3dcadc2f]
2020-03-18 10:36:12.918 [job-0] INFO HdfsWriter$Job - end do split.
2020-03-18 10:36:12.918 [job-0] INFO JobContainer - DataX Writer.Job [hdfswriter] splits to [2] tasks.
[2020-03-18 02:36:12,947] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.944 [job-0] INFO JobContainer - jobContainer starts to do schedule ...
[2020-03-18 02:36:12,963] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.953 [job-0] INFO JobContainer - Scheduler starts [1] taskGroups.
2020-03-18 10:36:12.956 [job-0] INFO JobContainer - Running by standalone Mode.
[2020-03-18 02:36:12,991] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.988 [taskGroup-0] INFO TaskGroupContainer - taskGroupId=[0] start [2] channels for [2] tasks.
[2020-03-18 02:36:13,003] {ssh_operator.py:139} INFO - 2020-03-18 10:36:12.997 [taskGroup-0] INFO Channel - Channel set byte_speed_limit to -1, No bps activated.
2020-03-18 10:36:12.998 [taskGroup-0] INFO Channel - Channel set record_speed_limit to -1, No tps activated.
[2020-03-18 02:36:13,036] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.027 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] attemptCount[1] is started
2020-03-18 10:36:13.030 [0-0-0-reader] INFO cluster - Cluster created with settings {hosts=[10.1.119.32:30717], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}
2020-03-18 10:36:13.031 [0-0-0-reader] INFO cluster - Adding discovered server 10.1.119.32:30717 to client view of cluster
[2020-03-18 02:36:13,068] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.064 [cluster-ClusterId{value='5e71891d975ee89137c49e54', description='null'}-10.1.119.32:30717] INFO connection - Opened connection [connectionId{localValue:3, serverValue:78}] to 10.1.119.32:30717
2020-03-18 10:36:13.065 [cluster-ClusterId{value='5e71891d975ee89137c49e54', description='null'}-10.1.119.32:30717] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=10.1.119.32:30717, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[4, 1, 6]}, minWireVersion=0, maxWireVersion=8, maxDocumentSize=16777216, roundTripTimeNanos=644490}
2020-03-18 10:36:13.066 [cluster-ClusterId{value='5e71891d975ee89137c49e54', description='null'}-10.1.119.32:30717] INFO cluster - Discovered cluster type of STANDALONE
[2020-03-18 02:36:13,069] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.069 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] attemptCount[1] is started
[2020-03-18 02:36:13,071] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.071 [0-0-0-writer] INFO HdfsWriter$Task - begin do write...
[2020-03-18 02:36:13,072] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.071 [0-0-0-writer] INFO HdfsWriter$Task - write to file : [hdfs://10.1.119.13:8020/user/hive/warehouse/ods.db/data_collects__0dc15a85_5060_4ff9_aa5a_cac01adb9eee/data_collects7d4ce1b2d8d84e82a6421472ce3bef48__78d34f42_b4bb_4c9b_88e8_65e6d32e6879]
[2020-03-18 02:36:13,092] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.086 [0-0-1-reader] INFO cluster - Cluster created with settings {hosts=[10.1.119.32:30717], mode=MULTIPLE, requiredClusterType=UNKNOWN, serverSelectionTimeout='30000 ms', maxWaitQueueSize=500}
2020-03-18 10:36:13.088 [0-0-1-reader] INFO cluster - Adding discovered server 10.1.119.32:30717 to client view of cluster
[2020-03-18 02:36:13,098] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.094 [0-0-1-writer] INFO HdfsWriter$Task - begin do write...
2020-03-18 10:36:13.095 [0-0-1-writer] INFO HdfsWriter$Task - write to file : [hdfs://10.1.119.13:8020/user/hive/warehouse/ods.db/data_collects__0dc15a85_5060_4ff9_aa5a_cac01adb9eee/data_collects7d4ce1b2d8d84e82a6421472ce3bef48__25d4da52_f1c9_478d_b0ad_13ec3dcadc2f]
[2020-03-18 02:36:13,162] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.159 [0-0-1-reader] INFO cluster - No server chosen by ReadPreferenceServerSelector{readPreference=primary} from cluster description ClusterDescription{type=UNKNOWN, connectionMode=MULTIPLE, all=[ServerDescription{address=10.1.119.32:30717, type=UNKNOWN, state=CONNECTING}]}. Waiting for 30000 ms before timing out
[2020-03-18 02:36:13,231] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.219 [cluster-ClusterId{value='5e71891d975ee89137c49e55', description='null'}-10.1.119.32:30717] INFO connection - Opened connection [connectionId{localValue:4, serverValue:79}] to 10.1.119.32:30717
2020-03-18 10:36:13.220 [cluster-ClusterId{value='5e71891d975ee89137c49e55', description='null'}-10.1.119.32:30717] INFO cluster - Monitor thread successfully connected to server with description ServerDescription{address=10.1.119.32:30717, type=STANDALONE, state=CONNECTED, ok=true, version=ServerVersion{versionList=[4, 1, 6]}, minWireVersion=0, maxWireVersion=8, maxDocumentSize=16777216, roundTripTimeNanos=658846}
2020-03-18 10:36:13.221 [cluster-ClusterId{value='5e71891d975ee89137c49e55', description='null'}-10.1.119.32:30717] INFO cluster - Discovered cluster type of STANDALONE
[2020-03-18 02:36:13,244] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.243 [0-0-0-writer] INFO HdfsWriter$Task - end do write
[2020-03-18 02:36:13,266] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.264 [0-0-1-reader] INFO connection - Opened connection [connectionId{localValue:5, serverValue:80}] to 10.1.119.32:30717
[2020-03-18 02:36:13,275] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.273 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[0] is successed, used[264]ms
[2020-03-18 02:36:13,438] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.437 [0-0-1-writer] INFO HdfsWriter$Task - end do write
[2020-03-18 02:36:13,478] {ssh_operator.py:139} INFO - 2020-03-18 10:36:13.475 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] taskId[1] is successed, used[438]ms
2020-03-18 10:36:13.476 [taskGroup-0] INFO TaskGroupContainer - taskGroup[0] completed it's tasks.
[2020-03-18 02:36:13,862] {logging_mixin.py:112} INFO - [2020-03-18 02:36:13,862] {local_task_job.py:167} WARNING - State of this instance has been externally set to removed. Taking the poison pill.
[2020-03-18 02:36:13,865] {helpers.py:308} INFO - Sending Signals.SIGTERM to GPID 110780
[2020-03-18 02:36:13,866] {taskinstance.py:905} ERROR - Received SIGTERM. Terminating subprocesses.
[2020-03-18 02:36:13,873] {taskinstance.py:1058} ERROR - SSH operator error: Task received SIGTERM signal
Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/airflow/contrib/operators/ssh_operator.py", line 133, in execute
    readq, _, _ = select([channel], [], [], self.timeout)
  File "/usr/local/lib/python3.7/site-packages/airflow/models/taskinstance.py", line 907, in signal_handler
    raise AirflowException("Task received SIGTERM signal")
airflow.exceptions.AirflowException: Task received SIGTERM signal

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/lib/python3.7/site-packages/airflow/models/taskinstance.py", line 930, in _run_raw_task
    result = task_copy.execute(context=context)
  File "/usr/local/lib/python3.7/site-packages/airflow/contrib/operators/ssh_operator.py", line 173, in execute
    raise AirflowException("SSH operator error: {0}".format(str(e)))
airflow.exceptions.AirflowException: SSH operator error: Task received SIGTERM signal
[2020-03-18 02:36:13,875] {taskinstance.py:1081} INFO - Marking task as UP_FOR_RETRY
[2020-03-18 02:36:13,892] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 Traceback (most recent call last):
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/contrib/operators/ssh_operator.py", line 133, in execute
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 readq, _, _ = select([channel], [], [], self.timeout)
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/models/taskinstance.py", line 907, in signal_handler
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 raise AirflowException("Task received SIGTERM signal")
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 airflow.exceptions.AirflowException: Task received SIGTERM signal
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 During handling of the above exception, another exception occurred:
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 Traceback (most recent call last):
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/bin/airflow", line 37, in <module>
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 args.func(args)
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/utils/cli.py", line 74, in wrapper
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 return f(*args, **kwargs)
[2020-03-18 02:36:13,893] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/bin/cli.py", line 551, in run
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 _run(args, dag, ti)
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/bin/cli.py", line 469, in _run
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 pool=args.pool,
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/utils/db.py", line 74, in wrapper
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 return func(*args, **kwargs)
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/models/taskinstance.py", line 930, in _run_raw_task
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 result = task_copy.execute(context=context)
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 File "/usr/local/lib/python3.7/site-packages/airflow/contrib/operators/ssh_operator.py", line 173, in execute
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 raise AirflowException("SSH operator error: {0}".format(str(e)))
[2020-03-18 02:36:13,894] {base_task_runner.py:115} INFO - Job 759619: Subtask mongodb2hdfs_7d4ce1b2d8d84e82a6421472ce3bef48 airflow.exceptions.AirflowException: SSH operator error: Task received SIGTERM signal
[2020-03-18 02:36:14,279] {helpers.py:286} INFO - Process psutil.Process(pid=110780, status='terminated') (110780) terminated with exit code 1
[2020-03-18 02:36:14,280] {logging_mixin.py:112} INFO - [2020-03-18 02:36:14,280] {local_task_job.py:124} WARNING - Time since last heartbeat(0.43 s) < heartrate(5.0 s), sleeping for 4.5726189999999995 s
[2020-03-18 02:36:18,858] {logging_mixin.py:112} INFO - [2020-03-18 02:36:18,857] {local_task_job.py:103} INFO - Task exited with return code 0
```
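A log this long is easier to read once you pull out the lines around the kill. The sketch below is minimal and assumes one log entry per line. The helper names `kill_reason` and `sigterm_lines` are hypothetical. The sketch looks for the `State of this instance has been externally set to ... Taking the poison pill.` warning, which in this log appears immediately before Airflow sends SIGTERM, and returns the state that warning names. In the log above, that state is `removed`, and both DataX tasks had already reported success before the warning.

```python
import re

# Matches the local_task_job warning that precedes the SIGTERM in the log above.
POISON_PILL = re.compile(
    r"State of this instance has been externally set to (\w+)\. Taking the poison pill"
)


def kill_reason(log_text):
    """Return the state the task instance was externally set to, or None if absent."""
    match = POISON_PILL.search(log_text)
    return match.group(1) if match else None


def sigterm_lines(log_text):
    """Return every log line that mentions SIGTERM, in order."""
    return [line for line in log_text.splitlines() if "SIGTERM" in line]


if __name__ == "__main__":
    # Three entries copied from the log above.
    sample = "\n".join([
        "[2020-03-18 02:36:13,862] {logging_mixin.py:112} INFO - [2020-03-18 02:36:13,862] "
        "{local_task_job.py:167} WARNING - State of this instance has been externally set "
        "to removed. Taking the poison pill.",
        "[2020-03-18 02:36:13,865] {helpers.py:308} INFO - Sending Signals.SIGTERM to GPID 110780",
        "[2020-03-18 02:36:13,866] {taskinstance.py:905} ERROR - Received SIGTERM. Terminating subprocesses.",
    ])
    print(kill_reason(sample))          # prints removed
    print(len(sigterm_lines(sample)))   # prints 2
```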

Some posts online say the fix is to join the threads, so that Airflow waits until all threads have finished before it sends SIGTERM:

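Cleaned up, with a hypothetical `work` function standing in for the original `Thread(...)` arguments:

```python
from threading import Thread

def work(n):
    # Hypothetical placeholder for the real work each thread does
    print("thread", n, "finished")

threads = []  # list of threads
for n in range(3):
    t = Thread(target=work, args=(n,))
    threads.append(t)  # add all threads
# Start all threads
for x in threads:
    x.start()
# Wait for all of them to finish
for x in threads:
    x.join()
```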
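The key property of that pattern is that `join()` blocks until its thread has ended. Nothing after the second loop runs while work is still in flight. Below is a minimal sketch of the same idea that also collects each thread's result. The helper `run_all` is hypothetical and not part of Airflow.

```python
from threading import Thread


def run_all(jobs):
    """Run each zero-argument callable in its own thread; return results after all finish."""
    results = [None] * len(jobs)

    def worker(index, job):
        results[index] = job()  # each thread writes only its own slot

    threads = [Thread(target=worker, args=(i, job)) for i, job in enumerate(jobs)]
    for t in threads:
        t.start()
    # join() blocks until that thread ends, so after this loop every slot is filled
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    print(run_all([lambda: 1 + 1, lambda: "done", lambda: sum(range(5))]))
    # prints [2, 'done', 10]
```

Joining only makes the task's own code wait for its threads. It does not change anything outside that process.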

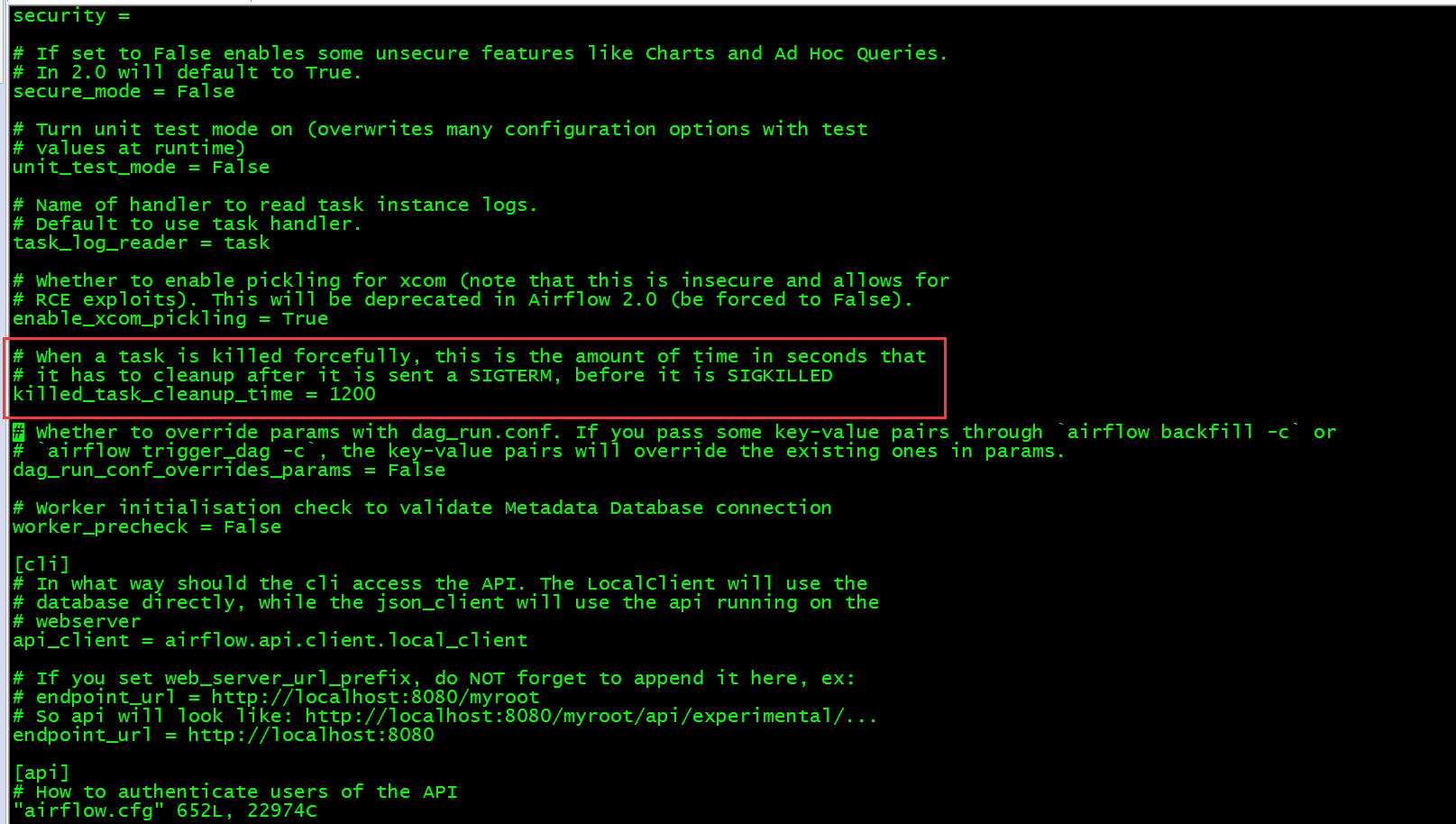

I don't fully understand that solution, so I solved the problem another, more down-to-earth way: by changing a parameter in airflow.cfg.

First, open the airflow.cfg file of your Airflow installation.
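If you are not sure which airflow.cfg your installation reads, the sketch below follows the usual lookup order. It is minimal and assumes Airflow 1.10-style behaviour: use `AIRFLOW_CONFIG` if it is set, otherwise `$AIRFLOW_HOME/airflow.cfg`, with `AIRFLOW_HOME` defaulting to `~/airflow`. The paths under `/usr/local/airflow` in the log above suggest that this is `AIRFLOW_HOME` in this deployment. `airflow_cfg_path` is a hypothetical helper.

```python
import os


def airflow_cfg_path(environ=None):
    """Return the airflow.cfg path Airflow would read, following its usual lookup order."""
    env = os.environ if environ is None else environ

    def expand(path):
        # Expand ~ and $VARS the way shell-style paths usually are
        return os.path.expandvars(os.path.expanduser(path))

    # An explicit AIRFLOW_CONFIG wins outright.
    if env.get("AIRFLOW_CONFIG"):
        return expand(env["AIRFLOW_CONFIG"])
    # Otherwise the file lives in AIRFLOW_HOME, which defaults to ~/airflow.
    home = expand(env.get("AIRFLOW_HOME", "~/airflow"))
    return os.path.join(home, "airflow.cfg")


if __name__ == "__main__":
    print(airflow_cfg_path())
```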

Find this configuration item:

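Increase this timeout. The main cause is that the SIGTERM signal is sent before the task has finished running. Then start the scheduled task again. This time it takes a little longer to run, but in the end it succeeded!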
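The option being raised is not named in the text, so `some_timeout` below is a hypothetical placeholder. The sketch below is minimal. It reads the current value from airflow.cfg and builds the `AIRFLOW__{SECTION}__{KEY}` environment variable as an alternative to editing the file, since Airflow reads that variable in preference to airflow.cfg. Either way, the scheduler and workers generally need a restart to pick up the change.

```python
import configparser


def current_value(cfg_text, section, key):
    """Read [section] key from airflow.cfg text; None if the section or key is missing."""
    # interpolation=None so '%' sequences in Airflow's log format options are left alone
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    return parser.get(section, key, fallback=None)


def env_override(section, key, value):
    """Build the (name, value) pair for the AIRFLOW__{SECTION}__{KEY} override."""
    return "AIRFLOW__{}__{}".format(section.upper(), key.upper()), str(value)


if __name__ == "__main__":
    # "some_timeout" is a hypothetical placeholder: substitute the option you actually raise.
    sample_cfg = "[core]\nsome_timeout = 30\n"
    print(current_value(sample_cfg, "core", "some_timeout"))  # prints 30
    print(env_override("core", "some_timeout", 120))
    # prints ('AIRFLOW__CORE__SOME_TIMEOUT', '120')
```

The sketch only reads the file. Writing it back through `configparser` would drop the comments that fill a stock airflow.cfg.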
