Manually Adding a Ceph MDS

Prerequisites

A Ceph cluster (Luminous release) is already deployed.

1. Create the MDS (a single node only)

[root@cluster9 ceph-cluster]# ceph-deploy mds create cluster9

2. Create the data pool and the metadata pool

[root@cluster9 ceph-cluster]# ceph osd pool create cephfs_data 128 128
pool 'cephfs_data' created
[root@cluster9 ceph-cluster]# ceph osd pool create cephfs_metadata 128 128
pool 'cephfs_metadata' created
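
The two `128` arguments are `pg_num` and `pgp_num`. As a rough sanity check on that choice, a commonly cited rule of thumb from the Ceph documentation is about 100 PGs per OSD across all pools: (OSD count × 100) ÷ replica size, rounded up to a power of two. The sketch below assumes a replica size of 3, which this article does not state, and `suggested_total_pgs` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: rule-of-thumb total PG count for the whole cluster.
# usage: suggested_total_pgs OSD_COUNT REPLICA_SIZE
suggested_total_pgs() {
    osds=$1
    size=$2
    # Aim for roughly 100 PGs per OSD, shared by all pools.
    target=$(( osds * 100 / size ))
    # Round up to the next power of two.
    pgs=1
    while [ "$pgs" -lt "$target" ]; do
        pgs=$(( pgs * 2 ))
    done
    echo "$pgs"
}

# 3 OSDs, replica size 3 (assumed): target 100, rounded up to 128.
suggested_total_pgs 3 3
```

Under these assumptions the result is a budget for all pools together, so several pools of 128 PGs each are on the high side for a 3-OSD cluster.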

3. Create the CephFS file system

[root@cluster9 ceph-cluster]# ceph fs new cephfs cephfs_metadata cephfs_data
new fs with metadata pool 5 and data pool 4

4. Check the cluster status

[root@cluster9 ceph-cluster]# ceph -s
  cluster:
    id:     d81b3ce4-bcbc-4b43-870e-430950652315
    health: HEALTH_WARN
            too many PGs per OSD (384 > max 250)
  services:
    mon: 1 daemons, quorum cluster9
    mgr: cluster9(active)
    mds: cephfs-1/1/1 up {0=cluster9=up:active}
    osd: 3 osds: 3 up, 3 in
  data:
    pools:   3 pools, 384 pgs
    objects: 26 objects, 2.22KiB
    usage:   3.07GiB used, 10.9TiB / 10.9TiB avail
    pgs:     384 active+clean

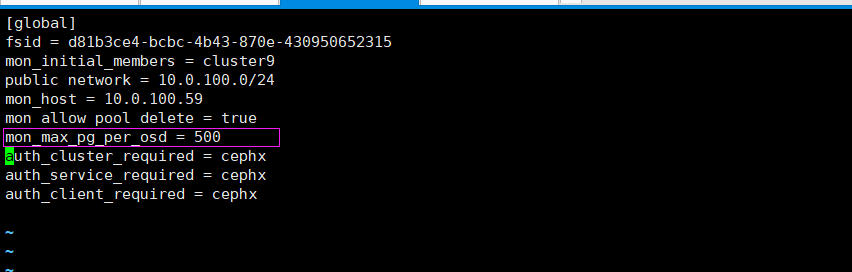
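
The number in the HEALTH_WARN message can be checked by hand: the PG load per OSD is roughly total PGs × replica size ÷ OSD count. The sketch below takes the 384 PGs and 3 OSDs from the status output and assumes a replica size of 3 for every pool, which the output does not show. `pgs_per_osd` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: estimate PG replicas hosted by each OSD.
# usage: pgs_per_osd TOTAL_PGS REPLICA_SIZE OSD_COUNT
pgs_per_osd() {
    total_pgs=$1
    replica_size=$2
    osd_count=$3
    # Integer arithmetic is enough for a rough estimate.
    echo $(( total_pgs * replica_size / osd_count ))
}

# 384 PGs, replica size 3 (assumed), 3 OSDs.
pgs_per_osd 384 3 3
```

With these inputs the estimate is 384, which matches the reported value and exceeds the limit of 250.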

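To clear the warning above, go to the ceph-deploy working directory and edit ceph.conf.

Raise the mon_max_pg_per_osd value shown above (the check further down shows it set to 500).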
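
If you prefer to script the edit, the following is a minimal sketch. It assumes the option belongs in the `[global]` section, that the file already contains a `[global]` header, and that the key is written with underscores; `set_global_option` is a hypothetical helper, not a Ceph tool. The demo runs against a temporary file, so nothing on the cluster is touched.

```shell
#!/bin/sh
# Hypothetical helper: set KEY = VALUE in the [global] section of a conf file.
# Assumes the file has a [global] header; an existing line for KEY in any
# section is dropped so the key is not duplicated.
# usage: set_global_option FILE KEY VALUE
set_global_option() {
    conf=$1
    key=$2
    value=$3
    tmp=$(mktemp) || return 1
    awk -v key="$key" -v value="$value" '
        # Skip any existing assignment of this key.
        $0 ~ ("^[ \t]*" key "[ \t]*=") { next }
        { print }
        # Add the key right after the [global] header.
        /^\[global\]/ { print key " = " value }
    ' "$conf" > "$tmp" && cat "$tmp" > "$conf"
    rm -f "$tmp"
}

# Demo on a throwaway file (contents are made up for illustration).
demo=$(mktemp)
printf '[global]\nmon_max_pg_per_osd = 250\n' > "$demo"
set_global_option "$demo" mon_max_pg_per_osd 500
cat "$demo"
rm -f "$demo"
```

On a real deployment the first argument would be the ceph.conf in the ceph-deploy directory, followed by the push and restart steps described in this article.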

Then run the following commands:

ceph-deploy --overwrite-conf config push cluster9

systemctl restart ceph-mgr.target

Check that the change has taken effect:

[root@cluster9 ceph-cluster]# ceph --show-config | grep "mon_max_pg_per_osd"
mon_max_pg_per_osd = 500
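
Because `grep` matches substrings, it would also print any other option whose name contains the search string. A small sketch of an exact-key lookup follows. It assumes the `key = value` line format shown above, and `config_value` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print the value of an exact KEY from
# "key = value" lines read on stdin.
# usage: ... | config_value KEY
config_value() {
    awk -F' = ' -v key="$1" '$1 == key { print $2 }'
}

# Example using the line shown above; on a live cluster,
# pipe in the output of `ceph --show-config` instead.
echo 'mon_max_pg_per_osd = 500' | config_value mon_max_pg_per_osd
```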

[root@cluster9 ceph-cluster]# ceph -s
  cluster:
    id:     d81b3ce4-bcbc-4b43-870e-430950652315
    health: HEALTH_OK
  services:
    mon: 1 daemons, quorum cluster9
    mgr: cluster9(active)
    mds: cephfs-1/1/1 up {0=cluster9=up:active}
    osd: 3 osds: 3 up, 3 in
  data:
    pools:   3 pools, 384 pgs
    objects: 26 objects, 2.22KiB
    usage:   3.07GiB used, 10.9TiB / 10.9TiB avail
    pgs:     384 active+clean

The CephFS deployment is now complete.