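The BeautifulSoup Library

(1) Introduction

BeautifulSoup is a flexible, convenient library for parsing web pages. It is efficient, supports several parsers, and lets you extract information from a page without writing regular expressions.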

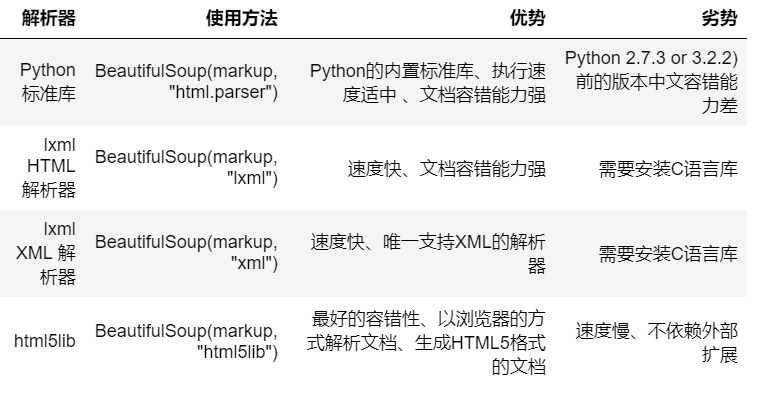

Several commonly used parsers can be plugged into BeautifulSoup.
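As a minimal sketch of how a parser is chosen: the parser name is passed as the second argument to `BeautifulSoup`. The helper `make_soup` below is hypothetical (it is not part of bs4). It assumes `lxml` may or may not be installed and falls back to Python's built-in `html.parser` if it is missing.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(markup, preferred="lxml"):
    """Hypothetical helper: try the preferred parser, else fall back."""
    try:
        return BeautifulSoup(markup, preferred)
    except FeatureNotFound:
        # bs4 raises FeatureNotFound when the requested parser is not installed.
        return BeautifulSoup(markup, "html.parser")


soup = make_soup("<p>Hello <b>world")  # closing tags deliberately omitted
print(soup.b.string)
print(soup.p.get_text())
```

Either parser closes the unclosed tags here, so the rest of the code does not need to know which one was used.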

(2) Usage in detail

1. Basic usage

```python
from bs4 import BeautifulSoup

html = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title" name="dromouse"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""
soup = BeautifulSoup(html, 'lxml')
print(soup.prettify())    # pretty-print the parsed tree, one node per line, indented
print(soup.title.string)  # text inside the <title> tag
```

The output shows that the parser automatically completed the missing closing tags (`</body>` and `</html>`).

```
<html>
 <head>
  <title>
   The Dormouse's story
  </title>
 </head>
 <body>
  <p class="title" name="dromouse">
   <b>
    The Dormouse's story
   </b>
  </p>
  <p class="story">
   Once upon a time there were three little sisters; and their names were
   <a class="sister" href="http://example.com/elsie" id="link1">
    <!-- Elsie -->
   </a>
   ,
   <a class="sister" href="http://example.com/lacie" id="link2">
    Lacie
   </a>
   and
   <a class="sister" href="http://example.com/tillie" id="link3">
    Tillie
   </a>
   ;
and they lived at the bottom of a well.
  </p>
  <p class="story">
   ...
  </p>
 </body>
</html>
The Dormouse's story
```

2. Tag selectors

The previous example located the title tag in passing. This section looks at tag selection in more detail.

```python
html = """
<html><head><title>The Dormouse's story</title></head>
<body>
<p class="title" name="dromouse"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time there were three little sisters; and their names were
<a href="http://example.com/elsie" class="sister" id="link1"><!-- Elsie --></a>,
<a href="http://example.com/lacie" class="sister" id="link2">Lacie</a> and
<a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>;
and they lived at the bottom of a well.</p>
<p class="story">...</p>
"""
```

- Selecting elements

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.title, type(soup.title))  # the <title> tag and its type
print(soup.head)                     # the <head> tag
print(soup.p)                        # the first <p> tag
```

Output:

```
<title>The Dormouse's story</title> <class 'bs4.element.Tag'>
<head><title>The Dormouse's story</title></head>
<p class="title" name="dromouse"><b>The Dormouse's story</b></p>
```

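The results are what you would expect. Note from the `p` result that attribute-style access returns only the first matching tag: the document contains three `<p>` tags, but `soup.p` returns just the first one.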
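To make the first-match behaviour concrete, here is a small sketch on a shortened document. The markup and variable names are illustrative, and `html.parser` is used only so the example needs nothing beyond bs4.

```python
from bs4 import BeautifulSoup

html = """
<p class="title"><b>The Dormouse's story</b></p>
<p class="story">Once upon a time...</p>
<p class="story">...</p>
"""
soup = BeautifulSoup(html, "html.parser")

first = soup.p              # attribute access: only the first <p>
all_p = soup.find_all("p")  # find_all: every <p> in the document

print(first["class"])
print(len(all_p))
```

When every match is needed, `find_all` (covered under the standard selectors) is the tool to reach for.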

- Getting a tag's name

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.title.name)
```

Output:

```
title
```

- Getting attributes

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.p.attrs['name'])  # two equivalent ways to read an attribute
print(soup.p['name'])
```

Output:

```
dromouse
dromouse
```
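Two details of attribute access are worth a quick sketch: subscripting a missing attribute raises `KeyError`, while `Tag.get()` returns `None`; and `class` is treated as a multi-valued attribute, so it comes back as a list. The one-line markup below is made up for illustration.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<p class="title big" name="dromouse">text</p>', "html.parser")
p = soup.p

name_value = p.get("name")  # same value as p["name"]
missing = p.get("id")       # None; p["id"] would raise KeyError instead
classes = p["class"]        # multi-valued attribute, returned as a list

print(name_value, missing, classes)
```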

- Getting content

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.p.string)
```

Output:

```
The Dormouse's story
```
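One caveat, sketched below on an abbreviated version of the sample document: `.string` only yields a value when a tag has a single child (possibly nested). For a tag with several children it is `None`, and `get_text()` is the usual alternative. The markup here is shortened for illustration.

```python
from bs4 import BeautifulSoup

html = (
    "<p class=\"title\"><b>The Dormouse's story</b></p>"
    '<p class="story">Once <a>Elsie</a> and <a>Lacie</a></p>'
)
soup = BeautifulSoup(html, "html.parser")
title_p, story_p = soup.find_all("p")

single = title_p.string       # <p> -> <b> -> text: one child at each level
mixed = story_p.string        # several children, so .string is None
joined = story_p.get_text()   # concatenates all text under the tag

print(single, mixed, joined, sep=" | ")
```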

- Nested selection

Selections can be chained to drill down one level at a time.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.head.title.string)
```

Output:

```
The Dormouse's story
```

- Children and descendants

```python
html = """
<html>
    <head>
        <title>The Dormouse's story</title>
    </head>
    <body>
        <p class="story">
            Once upon a time there were three little sisters; and their names were
            <a href="http://example.com/elsie" class="sister" id="link1">
                <span>Elsie</span>
            </a>
            <a href="http://example.com/lacie" class="sister" id="link2">Lacie</a>
            and
            <a href="http://example.com/tillie" class="sister" id="link3">Tillie</a>
            and they lived at the bottom of a well.
        </p>
        <p class="story">...</p>
"""
```

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# .contents returns the tag's direct children (tags and text nodes) as a list
print(soup.p.contents)
```

Output:

```
['\n Once upon a time there were three little sisters; and their names were\n ', <a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>, '\n', <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>, ' \n and\n ', <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>, '\n and they lived at the bottom of a well.\n ']
```

Alternatively, use `.children`:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# .children yields the same direct children as .contents
print(soup.p.children)
# the result is an iterator, so loop over it to see the nodes
for i, child in enumerate(soup.p.children):
    print(i, child)
```

Output:

```
<list_iterator object at 0x1064f7dd8>
0
Once upon a time there were three little sisters; and their names were

1 <a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
2

3 <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
4
and

5 <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
6
and they lived at the bottom of a well.
```

We can also visit the descendants: every node nested under the tag at any depth, not just its direct children.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.p.descendants)
for i, child in enumerate(soup.p.descendants):
    print(i, child)
```

Output:

```
<generator object descendants at 0x10650e678>
0
Once upon a time there were three little sisters; and their names were

1 <a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
2

3 <span>Elsie</span>
4 Elsie
5

6

7 <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
8 Lacie
9
and

10 <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
11 Tillie
12
and they lived at the bottom of a well.

```
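The difference between the two traversals is easier to see on a compact document without whitespace noise. The sketch below uses made-up one-line markup and counts what each traversal visits.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

html = "<p>Once <a><span>Elsie</span></a> and <a>Lacie</a></p>"
soup = BeautifulSoup(html, "html.parser")
p = soup.p

children = list(p.children)        # direct children only: text, <a>, text, <a>
descendants = list(p.descendants)  # everything below <p>, in document order

# Keep only Tag objects to see which elements each traversal reached.
child_tags = [node.name for node in children if isinstance(node, Tag)]
descendant_tags = [node.name for node in descendants if isinstance(node, Tag)]

print(len(children), child_tags)
print(len(descendants), descendant_tags)
```

The `<span>` shows up only among the descendants because it sits one level deeper, inside the first `<a>`.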

- Parent and ancestors

Accessing the parent:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.a.parent)
```

Output:

```
<p class="story">
Once upon a time there were three little sisters; and their names were
<a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
and
<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
```

Accessing the ancestors:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(list(enumerate(soup.a.parents)))
```

```
[(0, <p class="story">
Once upon a time there were three little sisters; and their names were
<a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
and
<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>), (1, <body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
and
<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
</body>), (2, <html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
and
<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
</body></html>), (3, <html>
<head>
<title>The Dormouse's story</title>
</head>
<body>
<p class="story">
Once upon a time there were three little sisters; and their names were
<a class="sister" href="http://example.com/elsie" id="link1">
<span>Elsie</span>
</a>
<a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>
and
<a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>
and they lived at the bottom of a well.
</p>
<p class="story">...</p>
</body></html>)]
```
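The last two entries of that listing print identically, which can be confusing. Printing only the name of each ancestor, as in the sketch below (tiny made-up markup), suggests why: the final ancestor is the `BeautifulSoup` object itself, whose name is `[document]` and which renders the same as the `<html>` tag it wraps.

```python
from bs4 import BeautifulSoup

html = "<html><body><p><a href='#'>link</a></p></body></html>"
soup = BeautifulSoup(html, "html.parser")

# Walk upward from the first <a>, keeping only each ancestor's name.
names = [parent.name for parent in soup.a.parents]
print(names)

# The outermost ancestor is the soup object, not an ordinary tag.
outermost = list(soup.a.parents)[-1]
print(outermost is soup)
```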

- Siblings

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')

print(list(enumerate(soup.a.next_siblings)))      # siblings after the first <a>
print(list(enumerate(soup.a.previous_siblings)))  # siblings before the first <a>
```

Output:

```
[(0, '\n'), (1, <a class="sister" href="http://example.com/lacie" id="link2">Lacie</a>), (2, ' \n and\n '), (3, <a class="sister" href="http://example.com/tillie" id="link3">Tillie</a>), (4, '\n and they lived at the bottom of a well.\n ')]
[(0, '\n Once upon a time there were three little sisters; and their names were\n ')]
```
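As the output shows, siblings include whitespace and other text nodes, not just tags. One way to keep only the tags is an `isinstance` check against `Tag`, sketched below on shortened markup with illustrative variable names.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

html = """<p>
<a id="link1">Elsie</a>
<a id="link2">Lacie</a> and
<a id="link3">Tillie</a>
</p>"""
soup = BeautifulSoup(html, "html.parser")
first_link = soup.a

# next_siblings yields text nodes too; keep only real tags.
tag_siblings = [node for node in first_link.next_siblings if isinstance(node, Tag)]
sibling_ids = [tag["id"] for tag in tag_siblings]

print(sibling_ids)
```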

Tag selection alone cannot cover every extraction task, so BeautifulSoup also provides the more convenient standard selectors described next.

3. Standard selectors

find_all( name , attrs , recursive , text , **kwargs )

Searches the document by tag name, attributes, or text content.

- name:

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''
```

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# find every <ul> tag; the result is a list-like collection
print(soup.find_all('ul'))
# each element of the result is a Tag, so it can be searched further
print(type(soup.find_all('ul')[0]))
```

Output:

```
[<ul class="list" id="list-1">
<li class="element">Foo</li>
<li class="element">Bar</li>
<li class="element">Jay</li>
</ul>, <ul class="list list-small" id="list-2">
<li class="element">Foo</li>
<li class="element">Bar</li>
</ul>]
<class 'bs4.element.Tag'>
```

The result contains two elements, and each one is a Tag object, so we can keep searching inside each of them:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# find every <ul> tag
for ul in soup.find_all('ul'):
    # then search again inside each <ul>
    print(ul.find_all('li'))
```

Output:

```
[<li class="element">Foo</li>, <li class="element">Bar</li>, <li class="element">Jay</li>]
[<li class="element">Foo</li>, <li class="element">Bar</li>]
```

- attrs:

It finds tags whose attributes match the given key/value pairs, for example:

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1" name="elements">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''
```

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# find tags whose id attribute is 'list-1'
print(soup.find_all(attrs={'id': 'list-1'}))
# find tags whose name attribute is 'elements'
print(soup.find_all(attrs={'name': 'elements'}))
```

Output:

```
[<ul class="list" id="list-1" name="elements">
<li class="element">Foo</li>
<li class="element">Bar</li>
<li class="element">Jay</li>
</ul>]
[<ul class="list" id="list-1" name="elements">
<li class="element">Foo</li>
<li class="element">Bar</li>
<li class="element">Jay</li>
</ul>]
```

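We can also pass the attribute straight to find_all as a keyword argument, with the same effect. Because `class` is a reserved word in Python, the keyword for it is spelled `class_`: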

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# find every tag with id='list-1'
print(soup.find_all(id='list-1'))
# find every tag whose class includes 'element'
print(soup.find_all(class_='element'))
```

Output:

```
[<ul class="list" id="list-1">
<li class="element">Foo</li>
<li class="element">Bar</li>
<li class="element">Jay</li>
</ul>]
[<li class="element">Foo</li>, <li class="element">Bar</li>, <li class="element">Jay</li>, <li class="element">Foo</li>, <li class="element">Bar</li>]
```
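A short sketch of two related behaviours: `class_` matches a tag if any one of its classes equals the value, and `limit` caps the number of results. The markup is a condensed version of the sample document, and the variable names are illustrative.

```python
from bs4 import BeautifulSoup

html = """
<ul class="list" id="list-1"><li class="element">Foo</li><li class="element">Bar</li></ul>
<ul class="list list-small" id="list-2"><li class="element">Foo</li></ul>
"""
soup = BeautifulSoup(html, "html.parser")

# 'list' is one of the classes on both <ul> tags, so both match.
any_list = [ul["id"] for ul in soup.find_all("ul", class_="list")]
# Only the second <ul> carries the 'list-small' class.
small_only = [ul["id"] for ul in soup.find_all("ul", class_="list-small")]
# limit stops the search after the given number of matches.
first_two = soup.find_all("li", limit=2)

print(any_list, small_only, len(first_two))
```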

- text:

For content matching, use `text`: it matches the text content directly rather than tags, and returns the matching strings:

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''
```

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# match text nodes equal to 'Foo'
print(soup.find_all(text='Foo'))
```

Output:

```
['Foo', 'Foo']
```
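In newer Beautiful Soup 4 releases this argument is also available under the name `string`, with `text` kept as the older spelling. The sketch below uses `string` on shortened markup and shows two variations: a compiled regular expression as the value, and combining a tag name with `string` to get tags back instead of text nodes.

```python
import re

from bs4 import BeautifulSoup

html = (
    '<ul><li class="element">Foo</li>'
    '<li class="element">Bar</li>'
    '<li class="element">Jay</li></ul>'
)
soup = BeautifulSoup(html, "html.parser")

exact = soup.find_all(string="Foo")                   # text nodes equal to 'Foo'
by_pattern = soup.find_all(string=re.compile("^Ba"))  # text nodes matching a regex
tags = soup.find_all("li", string="Jay")              # <li> tags whose text is 'Jay'

print(exact, by_pattern, tags)
```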

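find( name , attrs , recursive , text , **kwargs )

find returns a single element (the first match, or None if nothing matches), whereas find_all returns every match. Its arguments work the same way as those of find_all.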

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''
```

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
print(soup.find('ul'))        # the first <ul> only
print(type(soup.find('ul')))
print(soup.find('page'))      # no such tag in the document
```

Output:

```
<ul class="list" id="list-1">
<li class="element">Foo</li>
<li class="element">Bar</li>
<li class="element">Jay</li>
</ul>
<class 'bs4.element.Tag'>
None
```
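Because `find` returns `None` for a missing tag, chaining straight onto its result can raise `AttributeError`. A minimal sketch of one way to guard against that follows; `text_of` is a hypothetical helper written for this example, not a bs4 function.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup("<div><h4>Hello</h4></div>", "html.parser")


def text_of(soup, name, default=""):
    """Hypothetical helper: text of the first <name> tag, or a default."""
    tag = soup.find(name)
    return tag.get_text() if tag is not None else default


heading = text_of(soup, "h4")
missing = text_of(soup, "page", default="(not found)")

print(heading, missing)
```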

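find_parents() find_parent()

find_parents() returns all ancestors; find_parent() returns the direct parent.

find_next_siblings() find_next_sibling()

find_next_siblings() returns all following siblings; find_next_sibling() returns the first following sibling.

find_previous_siblings() find_previous_sibling()

find_previous_siblings() returns all preceding siblings; find_previous_sibling() returns the first preceding sibling.

find_all_next() find_next()

find_all_next() returns all matching nodes after the current node; find_next() returns the first matching node after it.

find_all_previous() find_previous()

find_all_previous() returns all matching nodes before the current node; find_previous() returns the first matching node before it.

All of these are used the same way as find_all; consult them as needed.

Here is a simple example: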

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
ul = soup.find('ul')
# the next sibling tag of the first <ul>
print(ul.find_next_sibling())
# all ancestors of the first <ul>
print(ul.find_parents())
```
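The sketch below exercises several of these methods from a single starting node, using condensed markup and illustrative variable names. It also shows the difference between a sibling search, which stays inside the same parent, and `find_next`, which follows document order and can cross into another list.

```python
from bs4 import BeautifulSoup

html = """
<div class="panel-body">
<ul id="list-1"><li>Foo</li><li>Bar</li></ul>
<ul id="list-2"><li>Jay</li></ul>
</div>
"""
soup = BeautifulSoup(html, "html.parser")
bar = soup.find("li", string="Bar")

prev_li = bar.find_previous_sibling("li")      # <li>Foo</li>, same parent
no_next_sibling = bar.find_next_sibling("li")  # None: Bar is the last <li> in its list
next_li = bar.find_next("li")                  # <li>Jay</li>, found in the second list
parent_ul = bar.find_parent("ul")              # nearest enclosing <ul>
next_ul = parent_ul.find_next_sibling("ul")    # the second list
ancestor_names = [tag.name for tag in bar.find_parents(["ul", "div"])]

print(prev_li.string, no_next_sibling, next_li.string)
print(parent_ul["id"], next_ul["id"], ancestor_names)
```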

4. CSS selectors

Pass a CSS selector directly to select() to make a selection. In a selector, `.` denotes a class and `#` denotes an id.

For example:

```python
html = '''
<div class="panel">
    <div class="panel-heading">
        <h4>Hello</h4>
    </div>
    <div class="panel-body">
        <ul class="list" id="list-1">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
            <li class="element">Jay</li>
        </ul>
        <ul class="list list-small" id="list-2">
            <li class="element">Foo</li>
            <li class="element">Bar</li>
        </ul>
    </div>
</div>
'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
# tags with class=panel-heading nested under class=panel
print(soup.select('.panel .panel-heading'))
# <li> tags nested under <ul>
print(soup.select('ul li'))
# tags with class=element nested under id=list-2
print(soup.select('#list-2 .element'))
# each element of the result is still a Tag object
print(type(soup.select('ul')[0]))
```

```
[<div class="panel-heading">
<h4>Hello</h4>
</div>]
[<li class="element">Foo</li>, <li class="element">Bar</li>, <li class="element">Jay</li>, <li class="element">Foo</li>, <li class="element">Bar</li>]
[<li class="element">Foo</li>, <li class="element">Bar</li>]
<class 'bs4.element.Tag'>
```
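A few more selector forms, sketched on condensed markup: `select_one` returns just the first match (or `None`), `>` restricts the match to direct children, and `[attr="value"]` matches on an attribute. Variable names are illustrative.

```python
from bs4 import BeautifulSoup

html = """
<div class="panel-body">
<ul class="list" id="list-1"><li class="element">Foo</li><li class="element">Bar</li></ul>
<ul class="list list-small" id="list-2"><li class="element">Jay</li></ul>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

first_ul = soup.select_one("ul")  # first match only, like find()
small_items = [li.get_text() for li in soup.select("ul.list-small > li")]
by_attr = [li.get_text() for li in soup.select('ul[id="list-1"] li')]
nothing = soup.select_one("table")  # no match gives None

print(first_ul["id"], small_items, by_attr, nothing)
```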

Once we have a tag, its attributes can be read like this:

```python
from bs4 import BeautifulSoup

# subscript the tag directly, or go through .attrs
soup = BeautifulSoup(html, 'lxml')
for ul in soup.select('ul'):
    print(ul['id'])
    print(ul.attrs['id'])
```

Output:

```
list-1
list-1
list-2
list-2
```

Once we have a tag, its text content can be read like this:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'lxml')
for li in soup.select('li'):
    print(li.get_text())
```

Output:

```
Foo
Bar
Jay
Foo
Bar
```

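Summary

- Prefer the lxml parser; fall back to html.parser when necessary

- Tag selection (soup.tag) offers weak filtering but is fast

- Use find() to match a single result and find_all() to match multiple results

- If you are familiar with CSS selectors, use select()

- Remember the common ways to get attribute values and text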
