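The previous articles set up the Windows environment, which uses Hadoop 2.6.0 throughout. If anything is unclear, you can reach me on QQ at 1565189664. This article looks at how to run a MapReduce job locally on Windows.

Maven is used as the project management tool, with JDK 1.8.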

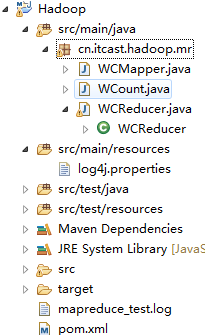

项目结构如下

The code for WCount.java is as follows:

    package cn.itcast.hadoop.mr;

    import java.util.Arrays;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class WCount {

        public static void main(String[] args) {
            // Print the arguments; args.toString() would only print the array's identity hash
            System.out.println(Arrays.toString(args));

            Configuration conf = new Configuration();
            conf.setInt("mapreduce.client.submit.file.replication", 20);
            /*
            conf.set("mapreduce.framework.name", "yarn");
            conf.set("mapred.job.tracker", "namenode:9001");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            conf.addResource("core-site.xml");
            conf.addResource("hdfs-site.xml");
            conf.addResource("mapred-site.xml");
            conf.addResource("yarn-site.xml");
            */
            // conf.set("mapred.jar", "D:\\workspace\\Hadoop\\target\\Hadoop.jar");

            Job job = null;
            try {
                job = Job.getInstance(conf);
                // Tell Hadoop which jar contains the job classes
                job.setJarByClass(WCount.class);

                // Mapper settings
                job.setMapperClass(WCMapper.class);
                job.setMapOutputKeyClass(Text.class);
                job.setMapOutputValueClass(LongWritable.class);
                FileInputFormat.setInputPaths(job, new Path("hdfs://192.168.1.2:9000/lzh/word.txt"));

                // Reducer settings
                job.setReducerClass(WCReducer.class);
                job.setOutputKeyClass(Text.class);
                job.setOutputValueClass(LongWritable.class);
                FileOutputFormat.setOutputPath(job, new Path("hdfs://192.168.1.2:9000/lzh/wcut"));

                // Submit the job and wait for it to finish, printing progress
                job.waitForCompletion(true);
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }

The code for WCMapper.java is as follows:

    package cn.itcast.hadoop.mr;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    public class WCMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Read one line of input
            String line = value.toString();
            // Split the line into words on whitespace
            String[] words = line.split("\\s+");
            // Emit (word, 1) for every non-empty word
            for (String w : words) {
                if (w.isEmpty()) {
                    continue; // blank lines and leading whitespace yield an empty token
                }
                context.write(new Text(w), new LongWritable(1));
            }
        }
    }
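How each line is tokenized decides which keys the mapper emits. The standalone sketch below (plain Java, no Hadoop; the class name `SplitSketch` and its helper methods are made up for illustration) shows how `String.split` behaves on blank lines and repeated spaces, which is why filtering out empty tokens is worth considering.

```java
import java.util.ArrayList;
import java.util.List;

class SplitSketch {

    // Naive tokenization: split on a single space character.
    static String[] splitOnSpace(String line) {
        return line.split(" ");
    }

    // More defensive tokenization: split on runs of whitespace and drop empty tokens.
    static List<String> tokens(String line) {
        List<String> out = new ArrayList<>();
        for (String t : line.split("\\s+")) {
            if (!t.isEmpty()) {
                out.add(t);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        System.out.println(splitOnSpace("").length);     // 1: a blank line yields one empty token
        System.out.println(splitOnSpace("a  b").length); // 3: a double space yields an empty token in the middle
        System.out.println(tokens(""));                  // []
        System.out.println(tokens("a  b"));              // [a, b]
    }
}
```

With the naive split, a blank line in the input would be counted as one occurrence of the empty word.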

The code for WCReducer.java is as follows:

    package cn.itcast.hadoop.mr;

    import java.io.IOException;

    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    public class WCReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            // Running total for this word
            long counter = 0;
            // Sum every count emitted for the word
            for (LongWritable l : values) {
                counter += l.get();
            }
            // Write (word, total)
            context.write(key, new LongWritable(counter));
        }
    }

pom.xml:

    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>
      <groupId>HadoopJar</groupId>
      <artifactId>Hadoop</artifactId>
      <version>0.0.1-SNAPSHOT</version>
      <name>Hadoop</name>

      <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-common -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>2.7.2</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-core -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-mapreduce-client-core</artifactId>
          <version>2.7.2</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-hdfs -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>2.7.2</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-common -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-mapreduce-client-common</artifactId>
          <version>2.7.2</version>
        </dependency>
        <!-- https://mvnrepository.com/artifact/org.apache.hadoop/hadoop-mapreduce-client-jobclient -->
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-mapreduce-client-jobclient</artifactId>
          <version>2.7.2</version>
        </dependency>
        <dependency>
          <groupId>jdk.tools</groupId>
          <artifactId>jdk.tools</artifactId>
          <version>1.8</version>
          <scope>system</scope>
          <systemPath>${JAVA_HOME}/lib/tools.jar</systemPath>
        </dependency>
      </dependencies>

      <build>
        <finalName>Hadoop</finalName>
        <plugins>
          <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <configuration>
              <source>1.8</source>
              <target>1.8</target>
              <encoding>UTF-8</encoding>
            </configuration>
          </plugin>
          <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-resources-plugin</artifactId>
            <configuration>
              <encoding>UTF-8</encoding>
            </configuration>
          </plugin>
        </plugins>
      </build>
    </project>

The hadoop-mapreduce-client-jobclient jar is not required at this point; the job runs fine without it. It becomes useful when submitting jobs remotely.

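log.properties (log4j 1.x only auto-loads a classpath file named log4j.properties, so the file may need that name for these settings to take effect):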

    log4j.rootLogger=DEBUG,stdout,R
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n
    log4j.appender.R=org.apache.log4j.RollingFileAppender
    log4j.appender.R.File=mapreduce_test.log
    log4j.appender.R.MaxFileSize=1MB
    log4j.appender.R.MaxBackupIndex=1
    log4j.appender.R.layout=org.apache.log4j.PatternLayout
    log4j.appender.R.layout.ConversionPattern=%p %t %c - %m%n
    log4j.logger.com.codefutures=INFO

The code above runs a word count over word.txt (in this sample every word is a single letter). The file looks like this:

w e r t t t y y u

d g h j k k l d f
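To make the expected result concrete, here is a minimal sketch that mimics the map, group, and reduce steps in plain Java on the sample lines above. It does not use Hadoop at all; the class name `WordCountSketch` and its `count` method are invented for this illustration, and the real job's output file may differ in details.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class WordCountSketch {

    // Mimics the job: emit (word, 1) per token, group by word, then sum the ones.
    // A TreeMap keeps keys sorted, similar to how reducer input arrives ordered by key.
    static Map<String, Long> count(List<String> lines) {
        Map<String, Long> counts = new TreeMap<>();
        for (String line : lines) {
            for (String w : line.split("\\s+")) {
                if (w.isEmpty()) {
                    continue; // skip the empty token produced by a blank line
                }
                counts.merge(w, 1L, Long::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("w e r t t t y y u", "", "d g h j k k l d f");
        // Print one "word<TAB>count" pair per line
        count(lines).forEach((word, n) -> System.out.println(word + "\t" + n));
    }
}
```

For this input the sketch reports 13 distinct words, with `t` counted 3 times and `d`, `k`, and `y` counted twice each.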

That completes a simple local run of MapReduce. Give it a try!