Using SMOTEBoost(过采样) and RUSBoost(使用聚类+集成学习) to deal with class imbalance

Using SMOTEBoost and RUSBoost to deal with class imbalance

from:https://aitopics.org/doc/news:1B9F7A99/

Binary classification with strong class imbalance can be found in many real-world classification problems. From trying to predict events such as network intrusion and bank fraud to a patient's medical diagnosis, the goal in these cases is to be able to identify instances of the minority class -- that is, the class that is underrepresented in the dataset. This, of course, presents a big challenge as most predictive models tend to ignore the more critical minority class while deceptively giving high accuracy results by favoring the majority class. Several techniques have been used to get around the problem of class imbalance, including different sampling methods and modeling algorithms. Examples of sampling methods include adding data samples to the minority class by either duplicating the data or generating synthetic minority samples (oversampling), or randomly removing majority class data to produce a more balanced data distribution (undersampling).

kaggle上建议:摘自:https://www.kaggle.com/general/7793

One approach that I have used in the past was to use a clustering algorithm for the majority class, the negative cases in your example, to create a smaller, representative set of negative cases with which to replace the actual negative cases in the training data.

More specifically, I used K-means algorithm to cluster the negative cases into the x clusters where x is the number of positive examples in my training data. Then I used the cluster centroids as the negative cases and the actual positive cases as the positives. This gave me a 50 / 50 balanced data set for the training. (Note that I only did this for the training data. My cross validation and test sets I kept unclustered).

There are downsides to this approach:

By clustering you lose some accuracy in the negative cases, but that is the price you pay with this approach.

Also, K-means algorithm can converge on a different set of centroids each time you run it as it starts at random starting positions. In optimising the model, I regenerated the negative centroids several times to get different data for the training.

其实rusboost还是很先进的,见下文:

CUSBoost:基于聚类的提升下采样的非平衡数据分类

原论文地址:CUSBoost: Cluster-based Under-sampling with Boosting for Imbalanced Classification

Abstract

普通的机器学习方法,对于非平衡数据分类,总是倾向于最大化占比多的类别的分类准确率,而把占比少的类别分类错误,但是,现实应用中,我们研究的问题,对于少数的类别却更加感兴趣。最近,处理非平衡数据分类问题的方法有:采样方法,成本敏感的学习方法,以及集成学习的方法。这篇文章中,提出了一种新的基于聚类的欠采样boosting方法,CUSBoost,它能够有效地处理非平衡数据分类问题。RUSBoost(random under-sampling with AdaBoost) 和SMOTEBoost (synthetic minority over-sampling with AdaBoost) 算法,在我们提出的算法中作为可选项。经过实验,我们发现CUSBoost算法在处理非平衡数据上能够达到state-of-art的表现,其表现优于一般的集成学习方法。

Introduction

处理非平衡类别问题的方法一般被分为两类:外部(对非平衡数据进行处理得到平衡的数据)、内部(通过降低非平衡类别数据的灵敏度来改变已有的学习算法)的方法。

而CUSBoost的处理方法是:首先把数据分开为少数类别实例和多数类别实例,然后使用K-means算法对多数类别实例进行聚类处理,并且从每个聚类中选择部分数据来组成平衡的数据。聚类的方法帮助我们在多数类别数据中选择了差异性更大的数据(同一个聚类里面的数据则选择的相对较少),比起那些随机采样的方法(随机丢弃多数类别的数据)。CUSBoost combines the sampling and boosting methods to form an efficient and effective algorithm for class imbalance learning.

Related work

Sun等人提出的处理非平衡的二分类问题的方法,首先将大多数类别数据随机分组,每个组内的数据数量和少数类别的数据数量相近。然后由多数类每个组的数据加少数类数据进而组成平衡的数据样本。

Chawla 等人提出一种过采样方法SMOTE,对少数类别数据进行over-sample,不同之处在于,采样的数据是由少数类别数据合成而来。它通过操作特征空间而不是数据空间来合成少数类别的数据(使用KNN)。

Seiffert等人给出的结合Adaboost的随机欠采样方法RUSBoost,RUS减少多数类别的数据组成平衡数据(结合Adaboost)。

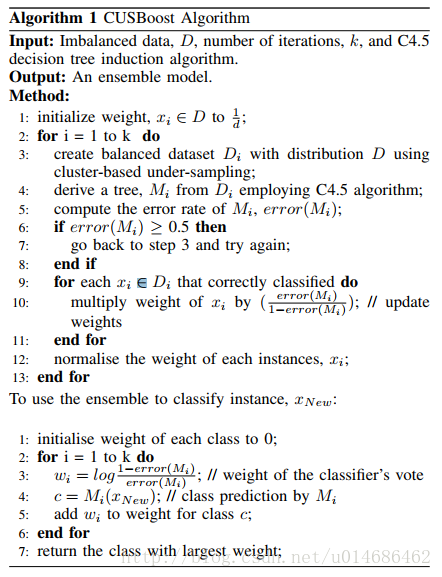

CUSBoost Algorithm

CUSBoost是聚类采样和Adaboost方法的结合:

聚类采样:把多数类数据和少数类数据分开,在多数类数据中,使用K-means算法将其分为K

个聚类(K

采用超参数优化决定)。然后在每个聚类中,使用随机地选择50%的数据(这里可以视具体问题进行调整)。使用选择出来的数据和少数类数据一起组成新的平衡数据。

算法伪代码:

解释一下:步骤三是每一次都实行一次欠采样(对事先已经利用K-means方法得到的K个聚类),组成平衡的数据。

My views

本文提出的算法关键点在于基于聚类的欠采样方法(K-means),然后就是结合Adaboost算法来得到最后的模型。文章后面也给出了实验测试结果,有兴趣的可以看一下,可以得到一下几个结论:

- 总体相比

RUSBoost和SMOTEBoost来说,该方法分类性能具有显著优越的效果;- 正是基于聚类的采样方法,导致了如果数据的特征空间非常适合聚类的时候,该方法将表现地较好。否则,可能需要尝试其他方法了;

- 该算法结合了

Adaboost,其实用其他算法模型来代替也不是不可以的,比如当前很火的Xgboost。

浙公网安备 33010602011771号

浙公网安备 33010602011771号