android NDK 神经网络API——是给tensorflow lite调用的底层API,应用开发者使用tensorflow lite即可

eural Networks API

The Android Neural Networks API (NNAPI) is an Android C API designed for running computationally intensive operations for machine learning on mobile devices. NNAPI is designed to provide a base layer of functionality for higher-level machine learning frameworks (such as TensorFlow Lite, Caffe2, or others) that build and train neural networks. The API is available on all devices running Android 8.1 (API level 27) or higher.

NNAPI supports inferencing by applying data from Android devices to previously trained, developer-defined models. Examples of inferencing include classifying images, predicting user behavior, and selecting appropriate responses to a search query.

On-device inferencing has many benefits:

- Latency: You don’t need to send a request over a network connection and wait for a response. This can be critical for video applications that process successive frames coming from a camera.

- Availability: The application runs even when outside of network coverage.

- Speed: New hardware specific to neural networks processing provide significantly faster computation than with general-use CPU alone.

- Privacy: The data does not leave the device.

- Cost: No server farm is needed when all the computations are performed on the device.

There are also trade-offs that a developer should keep in mind:

- System utilization: Evaluating neural networks involve a lot of computation, which could increase battery power usage. You should consider monitoring the battery health if this is a concern for your app, especially for long-running computations.

- Application size: Pay attention to the size of your models. Models may take up multiple megabytes of space. If bundling large models in your APK would unduly impact your users, you may want to consider downloading the models after app installation, using smaller models, or running your computations in the cloud. NNAPI does not provide functionality for running models in the cloud.

Understanding the Neural Networks API runtime

NNAPI is meant to be called by machine learning libraries, frameworks, and tools that let developers train their models off-device and deploy them on Android devices. Apps typically would not use NNAPI directly, but would instead directly use higher-level machine learning frameworks. These frameworks in turn could use NNAPI to perform hardware-accelerated inference operations on supported devices.

Based on the app’s requirements and the hardware capabilities on a device, Android’s neural networks runtime can efficiently distribute the computation workload across available on-device processors, including dedicated neural network hardware, graphics processing units (GPUs), and digital signal processors (DSPs).

For devices that lack a specialized vendor driver, the NNAPI runtime relies on optimized code to execute requests on the CPU.

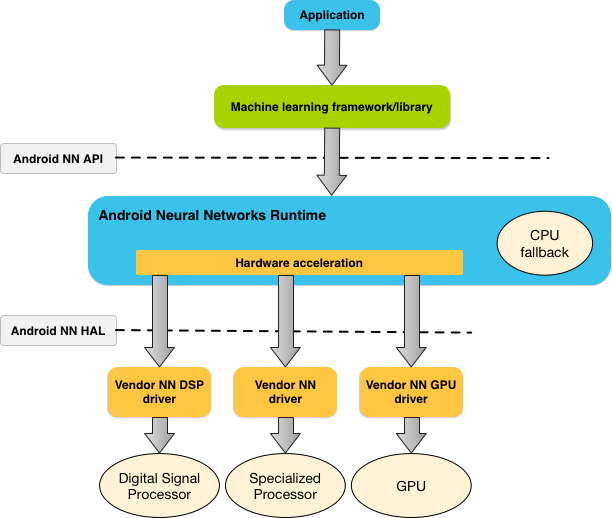

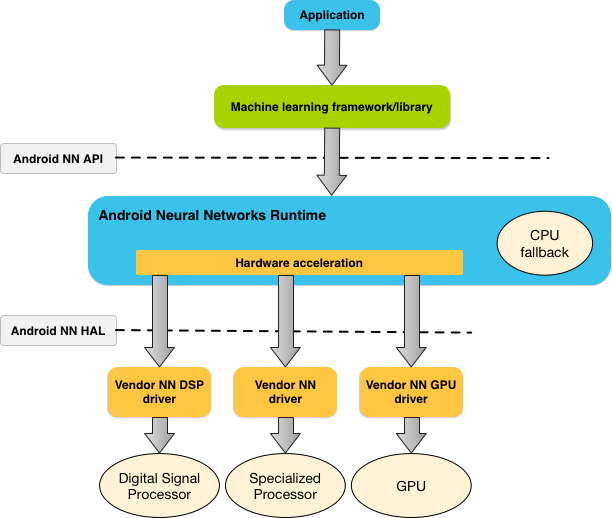

The diagram below shows a high-level system architecture for NNAPI.

Figure 1. System architecture for Android Neural Networks API

Figure 1. System architecture for Android Neural Networks API

eural Networks API

The Android Neural Networks API (NNAPI) is an Android C API designed for running computationally intensive operations for machine learning on mobile devices. NNAPI is designed to provide a base layer of functionality for higher-level machine learning frameworks (such as TensorFlow Lite, Caffe2, or others) that build and train neural networks. The API is available on all devices running Android 8.1 (API level 27) or higher.

NNAPI supports inferencing by applying data from Android devices to previously trained, developer-defined models. Examples of inferencing include classifying images, predicting user behavior, and selecting appropriate responses to a search query.

On-device inferencing has many benefits:

- Latency: You don’t need to send a request over a network connection and wait for a response. This can be critical for video applications that process successive frames coming from a camera.

- Availability: The application runs even when outside of network coverage.

- Speed: New hardware specific to neural networks processing provide significantly faster computation than with general-use CPU alone.

- Privacy: The data does not leave the device.

- Cost: No server farm is needed when all the computations are performed on the device.

There are also trade-offs that a developer should keep in mind:

- System utilization: Evaluating neural networks involve a lot of computation, which could increase battery power usage. You should consider monitoring the battery health if this is a concern for your app, especially for long-running computations.

- Application size: Pay attention to the size of your models. Models may take up multiple megabytes of space. If bundling large models in your APK would unduly impact your users, you may want to consider downloading the models after app installation, using smaller models, or running your computations in the cloud. NNAPI does not provide functionality for running models in the cloud.

Understanding the Neural Networks API runtime

NNAPI is meant to be called by machine learning libraries, frameworks, and tools that let developers train their models off-device and deploy them on Android devices. Apps typically would not use NNAPI directly, but would instead directly use higher-level machine learning frameworks. These frameworks in turn could use NNAPI to perform hardware-accelerated inference operations on supported devices.

Based on the app’s requirements and the hardware capabilities on a device, Android’s neural networks runtime can efficiently distribute the computation workload across available on-device processors, including dedicated neural network hardware, graphics processing units (GPUs), and digital signal processors (DSPs).

For devices that lack a specialized vendor driver, the NNAPI runtime relies on optimized code to execute requests on the CPU.

The diagram below shows a high-level system architecture for NNAPI.

Figure 1. System architecture for Android Neural Networks API

Figure 1. System architecture for Android Neural Networks API参考:https://developer.android.com/ndk/guides/neuralnetworks/index.html

浙公网安备 33010602011771号

浙公网安备 33010602011771号