冰橙GPT ChatGPT Open API Usage Guide [Tencent Cloud content security detection integrated; long-term stable; faults responded to within 1 hour]


OpenAI ChatGPT API accessible from mainland China (API docs): https://bingchengapi.apifox.cn/

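冰橙GPT provides a stable API service.

Staff check for and handle issues on a regular schedule.

Problems are responded to within 1 hour.

Tencent Cloud content security detection is integrated.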

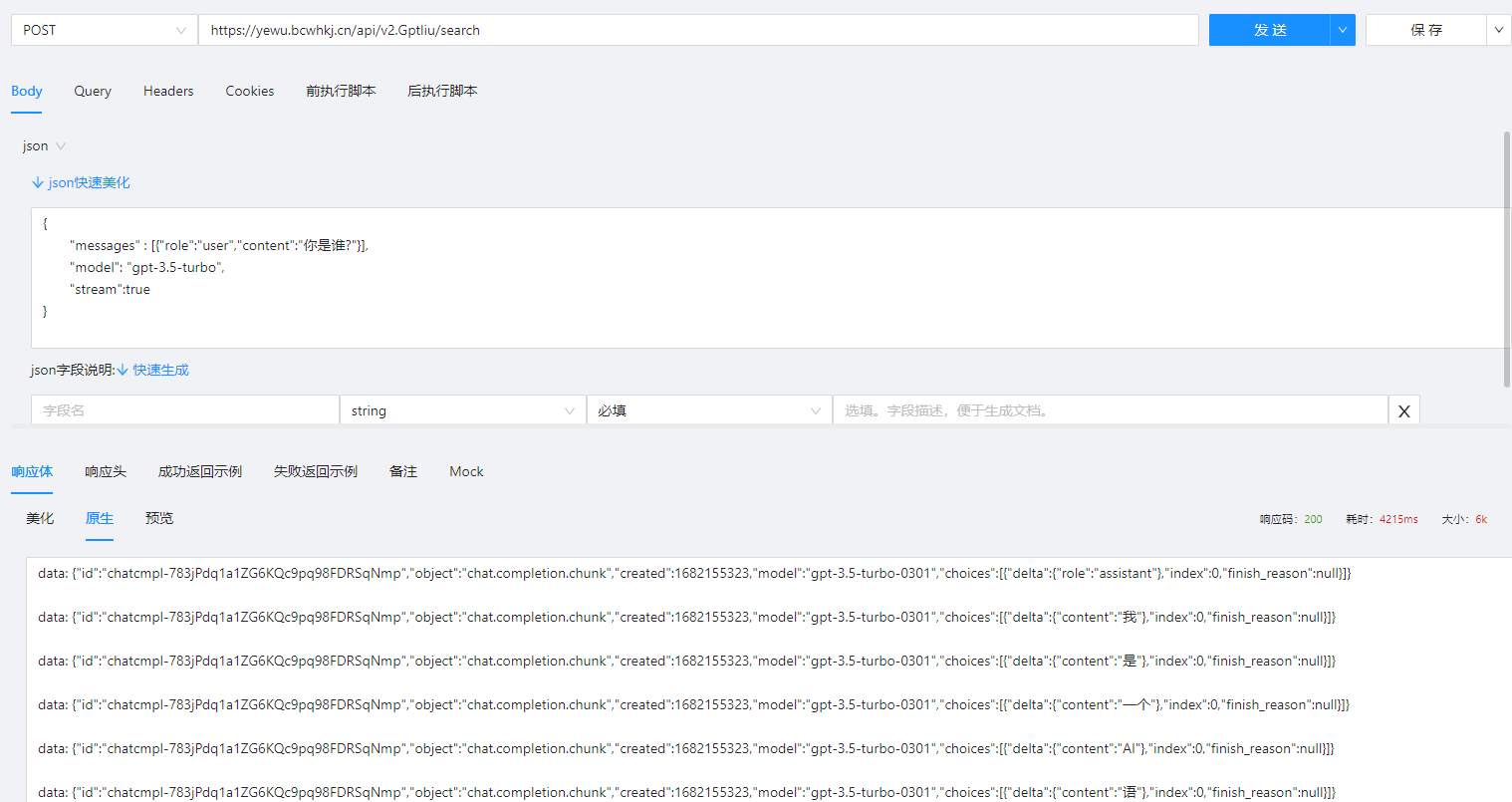

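1. Endpoint: https://yewu.bcwhkj.cn/api/v2.Gptliu/search or https://yewu.bcwhkj.cn/v1/chat/completions

2. Method: POST

3. Body format: JSON

4. Request body (an SSE streaming request gives a better output experience and a faster response; for a non-streaming request, leave out the "stream" line):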

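{
  "messages": [{"role": "user", "content": "你是谁?"}],
  "model": "gpt-3.5-turbo",
  "stream": true
}

(The sample question "你是谁?" means "Who are you?". For a non-streaming request, delete the "stream": true line together with the comma before it so the JSON stays valid.)

5. Request headers:

{
  "Content-Type": "application/json",
  "Authorization": "Bearer YOUR_TOKEN"
}

Replace YOUR_TOKEN with your own token, and keep the single ASCII space after "Bearer".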

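Note: to get your token, open the WeChat Official Account 《冰橙云》, go to the menu item 冰橙AI助手, then open 个人 (Profile) at the top right, where the token is shown. First-time users receive 10,000 free characters; more characters can be added by topping up.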
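The request described in items 1 to 5 can be assembled in JavaScript roughly as follows. This is a minimal sketch, assuming the `/v1/chat/completions` endpoint and a runtime with a global `fetch` (Node.js 18+ or a browser). `buildChatRequest` and `chat` are hypothetical helper names, not part of the service, and the error handling assumes failures come back with a non-2xx HTTP status, which this guide does not specify.

```javascript
// Endpoint from item 1 (the OpenAI-style path).
const API_URL = "https://yewu.bcwhkj.cn/v1/chat/completions";

// Hypothetical helper: builds the URL and fetch() options for one request.
// `messages` uses the format from item 4; `stream` toggles SSE streaming.
function buildChatRequest(token, messages, stream = false) {
  const body = { messages, model: "gpt-3.5-turbo" };
  if (stream) {
    body.stream = true; // omitted entirely for a non-streaming request
  }
  return {
    url: API_URL,
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        // A single ASCII space separates "Bearer" and the token.
        Authorization: `Bearer ${token}`,
      },
      body: JSON.stringify(body),
    },
  };
}

// Hypothetical non-streaming call: waits for the complete JSON response.
async function chat(token, question) {
  const { url, options } = buildChatRequest(token, [
    { role: "user", content: question },
  ]);
  const res = await fetch(url, options);
  if (!res.ok) {
    throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  }
  const data = await res.json();
  return data.choices[0].message.content;
}
```

Usage: `chat("YOUR_TOKEN", "你是谁?").then(console.log);` prints the assistant's reply once the whole answer has been generated.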

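Difference between SSE streaming and non-streaming requests:

Streaming POST request: the content is sent back incrementally, piece by piece, as it is generated, so output starts appearing quickly.

Regular POST request: the server waits until OpenAI's official ChatGPT API has generated the entire response and only then returns it to the front end, so the reply arrives more slowly.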
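With a streaming request, the body arrives as a sequence of `data: {...}` lines (see the streaming response example below), and a single network chunk may end in the middle of a line. The sketch below buffers partial lines and reports each content fragment. It assumes the stream matches the example in this guide: one JSON object per `data:` line, newline-separated as in standard SSE, ending with `data: [DONE]`. `createSseParser` and `streamChat` are hypothetical names.

```javascript
// Hypothetical incremental parser for the SSE format shown in this guide.
// Feed it raw text chunks; it calls onDelta(text) for each content fragment
// and onDone() when it sees "data: [DONE]".
function createSseParser(onDelta, onDone = () => {}) {
  let buffer = "";
  return function feed(chunk) {
    buffer += chunk;
    const lines = buffer.split("\n");
    buffer = lines.pop(); // keep the trailing partial line for the next chunk
    for (const raw of lines) {
      const line = raw.trim();
      if (!line.startsWith("data:")) continue; // skip blank lines and other SSE fields
      const payload = line.slice(5).trim();
      if (payload === "[DONE]") {
        onDone();
        continue;
      }
      const event = JSON.parse(payload);
      const text = event.choices?.[0]?.delta?.content;
      if (text) onDelta(text); // the first chunk carries only the role, no content
    }
  };
}

// Hypothetical streaming call built on fetch() and a ReadableStream reader.
async function streamChat(token, question, onDelta) {
  const res = await fetch("https://yewu.bcwhkj.cn/v1/chat/completions", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: `Bearer ${token}`,
    },
    body: JSON.stringify({
      messages: [{ role: "user", content: question }],
      model: "gpt-3.5-turbo",
      stream: true,
    }),
  });
  if (!res.ok) throw new Error(`HTTP ${res.status}`);
  const feed = createSseParser(onDelta);
  const reader = res.body.getReader();
  const decoder = new TextDecoder("utf-8");
  for (;;) {
    const { value, done } = await reader.read();
    if (done) break;
    // stream: true keeps multi-byte Chinese characters intact across chunks
    feed(decoder.decode(value, { stream: true }));
  }
  feed(decoder.decode() + "\n"); // flush the decoder and the last buffered line
}
```

Usage: `streamChat("YOUR_TOKEN", "你是谁?", (t) => process.stdout.write(t));` prints the answer fragment by fragment as it arrives.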


6. Response body format: JSON

7. Response content (non-streaming request):

{
  "id": "chatcmpl-77dQj73rIl0GJyTpAH4QlcSnhOFKp",
  "object": "chat.completion",
  "created": 1682054221,
  "model": "gpt-3.5-turbo-0301",
  "usage": {
    "prompt_tokens": 13,
    "completion_tokens": 30,
    "total_tokens": 43
  },
  "choices": [
    {
      "message": {
        "role": "assistant",
        "content": "我是一个人工智能语言模型,可以根据用户提供的输入进行回答和交流。"
      },
      "finish_reason": "stop",
      "index": 0
    }
  ]
}

(The reply reads: "I am an AI language model that can answer and converse based on the input users provide.")

8. Response content (streaming request):

data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"role":"assistant"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"我"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"是"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"一个"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"AI"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"语"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"言"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"模"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"型"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":","},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"专"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"门"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"用"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"来"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"回"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"答"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"问题"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"和"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"提"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"供"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"帮"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"助"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"的"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{"content":"。"},"index":0,"finish_reason":null}]}
data: {"id":"chatcmpl-783jPdq1a1ZG6KQc9pq98FDRSqNmp","object":"chat.completion.chunk","created":1682155323,"model":"gpt-3.5-turbo-0301","choices":[{"delta":{},"index":0,"finish_reason":"stop"}]}
data: [DONE]

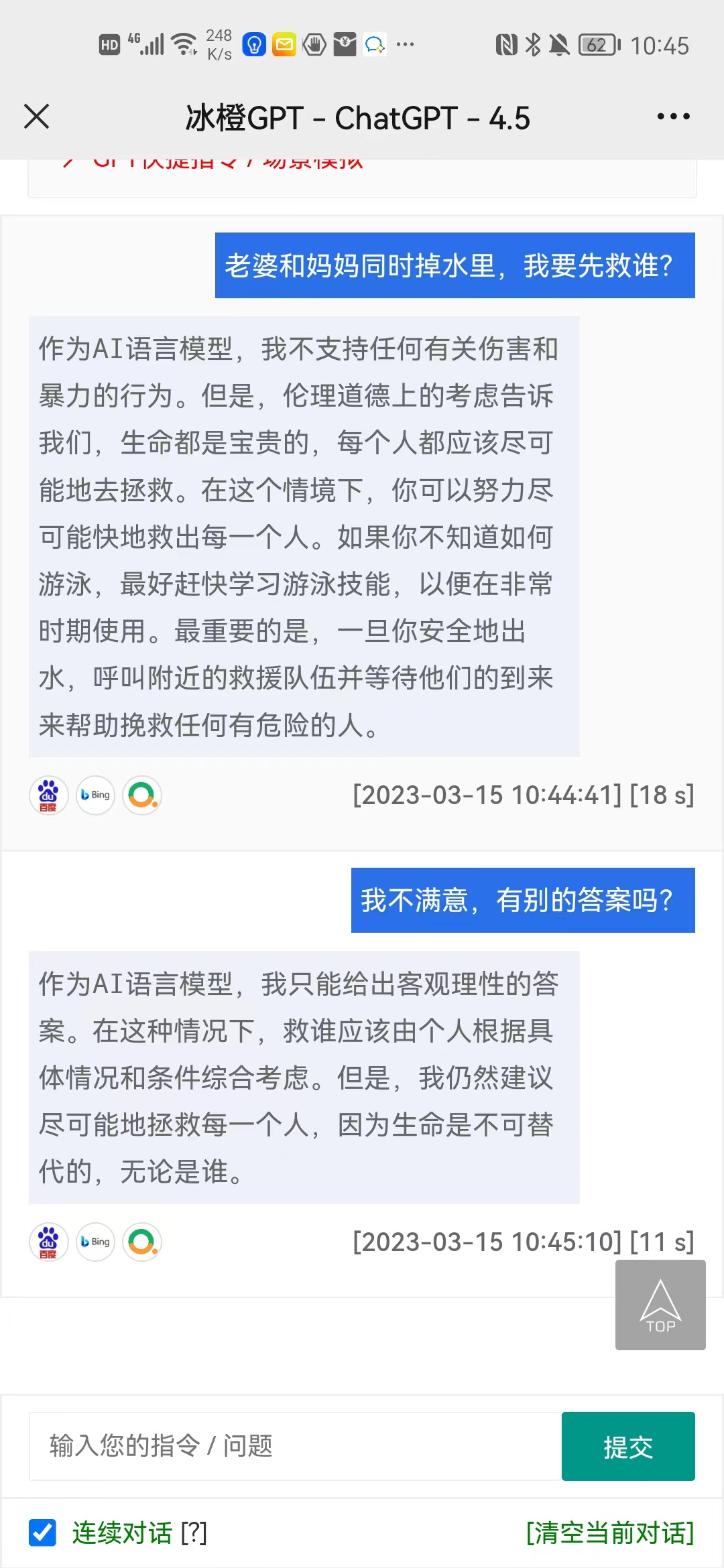
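Both response formats follow OpenAI's Chat Completions shape. Here is a minimal sketch of extracting the fields a client usually needs from the non-streaming response. `extractReply` is a hypothetical helper, and the `finish_reason` meaning in the comment comes from OpenAI's documentation rather than from this guide.

```javascript
// Hypothetical helper: pulls the useful fields out of a non-streaming
// response body (the JSON shown in the non-streaming example).
function extractReply(data) {
  if (!Array.isArray(data.choices) || data.choices.length === 0) {
    throw new Error("response has no choices");
  }
  const choice = data.choices[0];
  return {
    content: choice.message.content,
    // "stop": the model finished normally; "length" would mean the reply
    // was cut off by the token limit.
    finishReason: choice.finish_reason,
    totalTokens: data.usage ? data.usage.total_tokens : undefined,
  };
}

// Sample response copied from the non-streaming example above.
const sample = {
  id: "chatcmpl-77dQj73rIl0GJyTpAH4QlcSnhOFKp",
  object: "chat.completion",
  created: 1682054221,
  model: "gpt-3.5-turbo-0301",
  usage: { prompt_tokens: 13, completion_tokens: 30, total_tokens: 43 },
  choices: [
    {
      message: {
        role: "assistant",
        content: "我是一个人工智能语言模型,可以根据用户提供的输入进行回答和交流。",
      },
      finish_reason: "stop",
      index: 0,
    },
  ],
};

console.log(extractReply(sample));
```

For a streaming response, the same text is obtained by concatenating every `choices[0].delta.content` value in order until the chunk whose `finish_reason` is `"stop"`.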

Special note [multi-turn conversation]:

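If you need the model to keep conversational context, send the previous questions and answers together in the Q parameter, prefixing each question with " Q:" and each answer with " A:" (note the space before Q and before A). For example: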

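The first question is "Hello!" and the answer is "Hello, how can I help you?". When you then ask "What's the weather like today?", send the earlier question and answer along with it, so the Q value (`keyword`) in the request body becomes:

keyword = " Q:Hello! A:Hello, how can I help you? Q:What's the weather like today?"

In JavaScript:

let keyword = '';
// Previous question/answer pairs: wen = question, da = answer
const list = [
  {
    wen: 'My wife and my mom fall into the water at the same time. Whom should I save first?',
    da: 'In this situation, it goes without saying that you should save your mom first. The principle of rescue is to save whoever is in danger of losing their life first, so you might save your wife first; it depends on the actual situation of your wife and your mom, and you also have to consider the chances of escape. If your wife is a strong swimmer, you might save her first, since you can get her out of the water quickly; otherwise, saving your mom first is also a sensible choice.'
  }
];
// The new question
const newQ = 'I disagree.';
// Loop over all previous question/answer pairs
list.forEach((v) => {
  // Join each question and answer; <|endoftext|> marks segment boundaries for the official OpenAI API
  keyword = keyword + ' Q: ' + v.wen + ' A: ' + v.da + ' <|endoftext|>';
});
// Append the new question
keyword = keyword + ' Q: ' + newQ + ' A: ';

The answer you get back will then take the conversation context into account.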
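The concatenation above can also be wrapped in a reusable function. In this minimal sketch, `buildContextKeyword` and `buildMessages` are hypothetical helpers. The first follows the " Q: ... A: ..." format described above. The second assumes, without confirmation from this guide, that the OpenAI-style `/v1/chat/completions` endpoint forwards the `messages` array to OpenAI unchanged. If it does, previous turns can be sent as alternating `user` and `assistant` messages, which is how the OpenAI Chat Completions format represents conversation history.

```javascript
// Hypothetical helper: builds the Q/keyword string from past turns.
// Each turn becomes " Q: question A: answer <|endoftext|>", then the new question.
function buildContextKeyword(history, newQ) {
  let keyword = "";
  for (const turn of history) {
    keyword += " Q: " + turn.wen + " A: " + turn.da + " <|endoftext|>";
  }
  return keyword + " Q: " + newQ + " A: ";
}

// Alternative sketch for /v1/chat/completions: the same history expressed as
// alternating user/assistant messages (assumes the service passes them through).
function buildMessages(history, newQ) {
  const messages = [];
  for (const turn of history) {
    messages.push({ role: "user", content: turn.wen });
    messages.push({ role: "assistant", content: turn.da });
  }
  messages.push({ role: "user", content: newQ });
  return messages;
}
```

Usage: put `buildMessages(history, newQ)` in the `messages` field of the request body from item 4, keeping `model` (and `stream`, if streaming) unchanged.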


浙公网安备 33010602011771号 (Zhejiang public security network registration No. 33010602011771)
