Kylin: java.lang.NoClassDefFoundError: org/apache/hive/hcatalog/mapreduce/HCatInputFormat when building a Cube

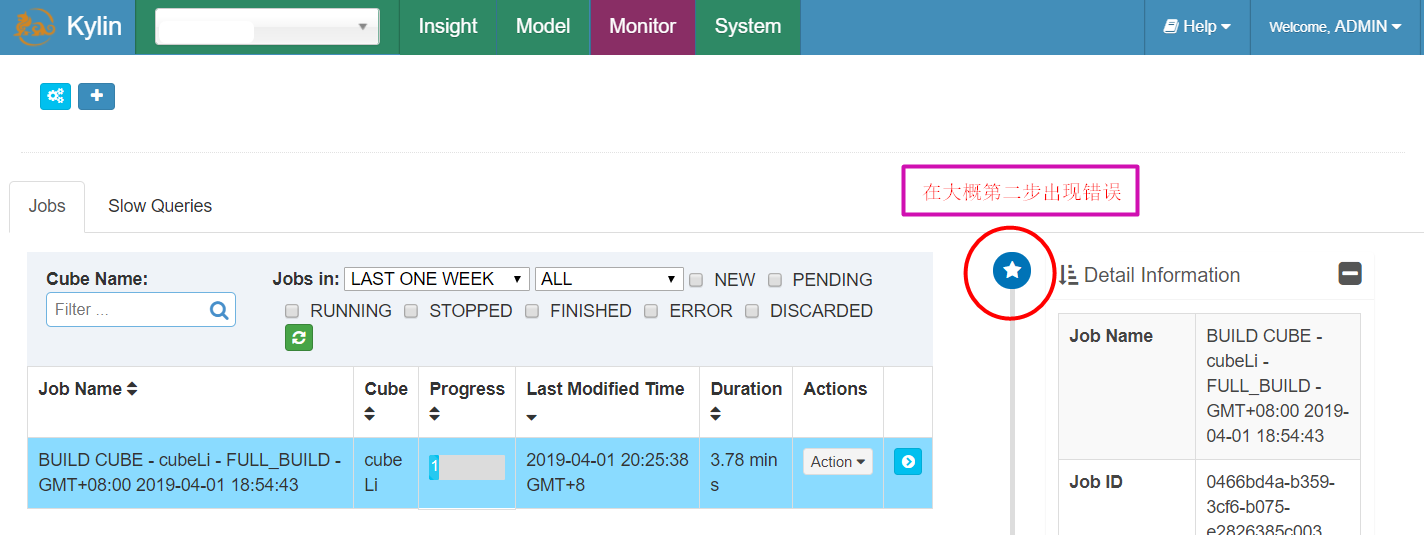

While building a Cube, at around the second step, the following exception was thrown:

    java.lang.NoClassDefFoundError: org/apache/hive/hcatalog/mapreduce/HCatInputFormat
        at org.apache.kylin.source.hive.HiveMRInput$HiveTableInputFormat.configureJob(HiveMRInput.java:94)
        at org.apache.kylin.engine.mr.steps.FactDistinctColumnsJob.setupMapper(FactDistinctColumnsJob.java:122)
        at org.apache.kylin.engine.mr.steps.FactDistinctColumnsJob.run(FactDistinctColumnsJob.java:100)
        at org.apache.kylin.engine.mr.common.MapReduceExecutable.doWork(MapReduceExecutable.java:131)
        at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:164)
        at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:70)
        at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:164)
        at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:113)
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
        at java.lang.Thread.run(Thread.java:745)

The solution is described here:

https://issues.apache.org/jira/browse/KYLIN-1021

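The gist of the reply there: regarding the question about JAR files on local disk rather than in HDFS, yes, the Hadoop/Hive/HBase JARs should exist on the local disk of every machine in the Hadoop cluster, at the same location. Kylin will not upload these JARs, so check and ensure that the Hadoop cluster is consistent.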
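A quick way to test this on a single node is to look for the JAR that normally provides the missing class. `org.apache.hive.hcatalog.mapreduce.HCatInputFormat` ships in Hive's `hive-hcatalog-core` JAR; the sketch below only checks whether a file with that name prefix exists under a directory you pass in. The function name `has_hcatalog_jar` is hypothetical and is not part of Kylin, Hive, or the JIRA issue.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (exit status 0) if a hive-hcatalog-core JAR
# is found anywhere under the given directory, fails otherwise.
has_hcatalog_jar() {
  local dir="$1"
  # A missing directory counts as "JAR not present".
  [ -d "$dir" ] || return 1
  # find prints matching paths; a non-empty result means the JAR exists.
  [ -n "$(find "$dir" -name 'hive-hcatalog-core*.jar' -print 2>/dev/null | head -n 1)" ]
}

# Example usage (the directory is an assumption; use your own install path):
#   has_hcatalog_jar "$HBASE_HOME/lib" && echo "found" || echo "missing"
```

Running the same check with the same directory on every node shows which machines lack the JAR.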

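In other words, the other hosts in the cluster could not find certain JARs under `lib` in their HBase directory; every machine in the cluster must have these JARs at the same location. So I distributed the JARs with a cluster distribution command: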

[user@node-name hbase-1.3.1]$ bash xsync lib
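`xsync` is not a standard tool; it is typically a small user-written wrapper around `rsync`. The post does not show the script, so the following is only a minimal sketch of what such a script might do, assuming passwordless SSH, `rsync` installed on every node, and the hypothetical host names `node2` and `node3`. It defaults to a dry run that prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Hypothetical host list; replace with the other nodes of your cluster.
HOSTS="${HOSTS:-node2 node3}"

# Copy a file or directory to the same absolute path on every host.
# With DRY_RUN=1 (the default here) the rsync commands are only printed.
sync_dir() {
  local src="$1" src_abs parent host
  # Resolve the source to an absolute path so the remote location matches.
  src_abs="$(cd "$(dirname "$src")" && pwd -P)/$(basename "$src")"
  parent="$(dirname "$src_abs")"
  for host in $HOSTS; do
    if [ "${DRY_RUN:-1}" = "1" ]; then
      echo "rsync -av $src_abs $host:$parent/"
    else
      # No trailing slash on the source: the directory itself is copied
      # into the parent directory on the remote host.
      rsync -av "$src_abs" "$host:$parent/"
    fi
  done
}

# Example usage, run from the HBase install directory:
#   DRY_RUN=0 sync_dir lib
```

Because the remote target is derived from the local absolute path, this only keeps the cluster consistent if HBase is installed at the same path on every node.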
