Setting up a Hadoop cluster with docker-compose on CentOS 7

1. Install Docker and docker-compose.

2. On the Linux server, create a folder named hadoop, and inside it create a docker-compose.yml file.

The contents of docker-compose.yml are as follows:

```yaml
version: '3'

services:
  namenode:
    image: bde2020/hadoop-namenode:2.0.0-hadoop3.2.1-java8
    container_name: namenode
    hostname: namenode
    restart: always
    ports:
      - "9870:9870"
      - "9000:9000"
    volumes:
      - ./hadoop/data/hadoop_namenode:/hadoop/dfs/name
    environment:
      - CLUSTER_NAME=test
    env_file:
      - ./hadoop.env
    networks:
      - hadoop_net

  resourcemanager:
    image: bde2020/hadoop-resourcemanager:2.0.0-hadoop3.2.1-java8
    container_name: resourcemanager
    hostname: resourcemanager
    restart: always
    ports:
      - "5888:5888"
    depends_on:
      - namenode
      - datanode1
      - datanode2
      - datanode3
    environment:
      - YARN_CONF_yarn_resourcemanager_webapp_address=0.0.0.0:5888
    env_file:
      - ./hadoop.env
    networks:
      - hadoop_net

  historyserver:
    image: bde2020/hadoop-historyserver:2.0.0-hadoop3.2.1-java8
    container_name: historyserver
    restart: always
    hostname: historyserver
    ports:
      - "8188:8188"
    depends_on:
      - namenode
      - datanode1
      - datanode2
      - datanode3
    volumes:
      - ./hadoop/data/hadoop_historyserver:/hadoop/yarn/timeline
    env_file:
      - ./hadoop.env
    networks:
      - hadoop_net

  nodemanager1:
    image: bde2020/hadoop-nodemanager:2.0.0-hadoop3.2.1-java8
    restart: always
    container_name: nodemanager1
    hostname: nodemanager1
    depends_on:
      - namenode
      - datanode1
      - datanode2
      - datanode3
    env_file:
      - ./hadoop.env
    ports:
      - "8042:8042"
    networks:
      - hadoop_net

  datanode1:
    image: bde2020/hadoop-datanode:2.0.0-hadoop3.2.1-java8
    restart: always
    container_name: datanode1
    hostname: datanode1
    depends_on:
      - namenode
    ports:
      - "5642:5642"
    volumes:
      - ./hadoop/data/hadoop_datanode1:/hadoop/dfs/data
    env_file:
      - ./hadoop.env
    environment:
      - HDFS_CONF_dfs_datanode_address=0.0.0.0:5640
      - HDFS_CONF_dfs_datanode_ipc_address=0.0.0.0:5641
      - HDFS_CONF_dfs_datanode_http_address=0.0.0.0:5642
    networks:
      - hadoop_net

  datanode2:
    image: bde2020/hadoop-datanode:2.0.0-hadoop3.2.1-java8
    restart: always
    container_name: datanode2
    hostname: datanode2
    depends_on:
      - namenode
    ports:
      - "5645:5645"
    volumes:
      - ./hadoop/data/hadoop_datanode2:/hadoop/dfs/data
    env_file:
      - ./hadoop.env
    environment:
      - HDFS_CONF_dfs_datanode_address=0.0.0.0:5643
      - HDFS_CONF_dfs_datanode_ipc_address=0.0.0.0:5644
      - HDFS_CONF_dfs_datanode_http_address=0.0.0.0:5645
    networks:
      - hadoop_net

  datanode3:
    image: bde2020/hadoop-datanode:2.0.0-hadoop3.2.1-java8
    restart: always
    container_name: datanode3
    hostname: datanode3
    depends_on:
      - namenode
    ports:
      - "5648:5648"
    volumes:
      - ./hadoop/data/hadoop_datanode3:/hadoop/dfs/data
    env_file:
      - ./hadoop.env
    environment:
      - HDFS_CONF_dfs_datanode_address=0.0.0.0:5646
      - HDFS_CONF_dfs_datanode_ipc_address=0.0.0.0:5647
      - HDFS_CONF_dfs_datanode_http_address=0.0.0.0:5648
    networks:
      - hadoop_net

networks:
  hadoop_net:
    driver: bridge
```
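In this file each datanode is given its own block of three consecutive ports (data transfer, IPC, HTTP): datanode1 uses 5640 to 5642, datanode2 uses 5643 to 5645 and datanode3 uses 5646 to 5648, and only the HTTP (web UI) port is published to the host. If you add more datanodes, the pattern can be generated rather than typed by hand. The Python sketch below is a hypothetical helper (datanode_ports and datanode_entries are illustrative names, not part of any tool) that assumes the base port 5640 and the stride of 3 used above.

```python
from typing import Dict, List, Tuple

BASE_PORT = 5640  # data-transfer port of datanode1 in docker-compose.yml
STRIDE = 3        # each datanode gets three consecutive ports


def datanode_ports(index: int) -> Tuple[int, int, int]:
    """Return the (data transfer, IPC, HTTP) ports for datanode<index>, 1-based."""
    if index < 1:
        raise ValueError("datanode index starts at 1")
    start = BASE_PORT + STRIDE * (index - 1)
    return start, start + 1, start + 2


def datanode_entries(index: int) -> Dict[str, List[str]]:
    """Build the ports and environment lists for one datanode service."""
    data, ipc, http = datanode_ports(index)
    return {
        # only the HTTP (web UI) port is published to the host
        "ports": [f"{http}:{http}"],
        "environment": [
            f"HDFS_CONF_dfs_datanode_address=0.0.0.0:{data}",
            f"HDFS_CONF_dfs_datanode_ipc_address=0.0.0.0:{ipc}",
            f"HDFS_CONF_dfs_datanode_http_address=0.0.0.0:{http}",
        ],
    }
```

For example, datanode_entries(4) would give a published port of 5651:5651 and addresses on 5649, 5650 and 5651, which can be pasted into a new datanode4 service.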

3. In the hadoop folder, create the Hadoop environment file hadoop.env with the following contents:

```
CORE_CONF_fs_defaultFS=hdfs://namenode:8020
CORE_CONF_hadoop_http_staticuser_user=root
CORE_CONF_hadoop_proxyuser_hue_hosts=*
CORE_CONF_hadoop_proxyuser_hue_groups=*
HDFS_CONF_dfs_webhdfs_enabled=true
HDFS_CONF_dfs_permissions_enabled=false
YARN_CONF_yarn_log___aggregation___enable=true
YARN_CONF_yarn_resourcemanager_recovery_enabled=true
YARN_CONF_yarn_resourcemanager_store_class=org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore
YARN_CONF_yarn_resourcemanager_fs_state___store_uri=/rmstate
YARN_CONF_yarn_nodemanager_remote___app___log___dir=/app-logs
YARN_CONF_yarn_log_server_url=http://historyserver:8188/applicationhistory/logs/
YARN_CONF_yarn_timeline___service_enabled=true
YARN_CONF_yarn_timeline___service_generic___application___history_enabled=true
YARN_CONF_yarn_resourcemanager_system___metrics___publisher_enabled=true
YARN_CONF_yarn_resourcemanager_hostname=resourcemanager
YARN_CONF_yarn_timeline___service_hostname=historyserver
YARN_CONF_yarn_resourcemanager_address=resourcemanager:8032
YARN_CONF_yarn_resourcemanager_scheduler_address=resourcemanager:8030
YARN_CONF_yarn_resourcemanager_resource___tracker_address=resourcemanager:8031
```
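Each of these variables is turned into a property in one of Hadoop's XML configuration files when the bde2020 containers start. The prefix selects the file (CORE_CONF for core-site.xml, HDFS_CONF for hdfs-site.xml, YARN_CONF for yarn-site.xml), and in the rest of the name a triple underscore stands for a hyphen, a double underscore for a literal underscore, and a single underscore for a dot. The Python sketch below reproduces that naming rule so you can check which property a variable sets; it assumes the rules just described and is not code taken from the images' entrypoint script. The function name env_to_property is hypothetical.

```python
from typing import Tuple

# Prefix -> Hadoop config file (assumed mapping for the prefixes used in hadoop.env)
PREFIX_TO_FILE = {
    "CORE_CONF": "core-site.xml",
    "HDFS_CONF": "hdfs-site.xml",
    "YARN_CONF": "yarn-site.xml",
}


def env_to_property(env_name: str) -> Tuple[str, str]:
    """Translate an env var name into (config file, Hadoop property name)."""
    for prefix, filename in PREFIX_TO_FILE.items():
        if env_name.startswith(prefix + "_"):
            key = env_name[len(prefix) + 1:]
            break
    else:
        raise ValueError(f"unknown prefix in {env_name!r}")
    # Order matters: '___' -> '-' first, then '__' -> '_' via a placeholder,
    # so the remaining single '_' can safely become '.'
    key = (
        key.replace("___", "-")
        .replace("__", "@")
        .replace("_", ".")
        .replace("@", "_")
    )
    return filename, key
```

For instance, YARN_CONF_yarn_log___aggregation___enable maps to yarn.log-aggregation-enable in yarn-site.xml, and HDFS_CONF_dfs_webhdfs_enabled maps to dfs.webhdfs.enabled in hdfs-site.xml.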

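4. Inside the hadoop folder, create hadoop/data, then under hadoop/hadoop/data create the directories hadoop_namenode, hadoop_historyserver, hadoop_datanode1, hadoop_datanode2 and hadoop_datanode3. These are the persistent mount paths referenced in the volumes sections of docker-compose.yml.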
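The five directories can be created in one go. The Python sketch below does so with pathlib, creating missing parent directories and skipping ones that already exist; the function name create_mount_dirs is hypothetical, and the directory names are copied from the volumes entries in docker-compose.yml.

```python
from pathlib import Path
from typing import List

# Directory names taken from the volumes sections of docker-compose.yml
MOUNT_DIRS = [
    "hadoop_namenode",
    "hadoop_historyserver",
    "hadoop_datanode1",
    "hadoop_datanode2",
    "hadoop_datanode3",
]


def create_mount_dirs(project_dir: str) -> List[Path]:
    """Create <project_dir>/hadoop/data/<name> for every mount directory."""
    data_dir = Path(project_dir) / "hadoop" / "data"
    created = []
    for name in MOUNT_DIRS:
        path = data_dir / name
        path.mkdir(parents=True, exist_ok=True)  # behaves like `mkdir -p`
        created.append(path)
    return created
```

Call create_mount_dirs(".") from the hadoop folder that contains docker-compose.yml. The same result can be had in bash with mkdir -p hadoop/data/{hadoop_namenode,hadoop_historyserver,hadoop_datanode1,hadoop_datanode2,hadoop_datanode3}.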

5. Run docker-compose up -d to pull the images and start the containers.

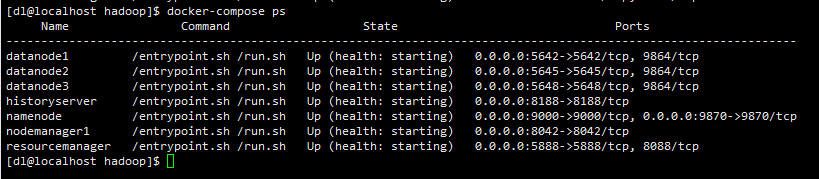

If docker-compose ps shows output like the screenshot, the cluster has been set up successfully.

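6. The Hadoop web UIs can then be opened at the server's IP address plus the published port, for example port 9870 for the NameNode and port 5888 for the ResourceManager.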
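To avoid looking up the ports each time, the Python sketch below builds the web UI address of every service from the host ports published in docker-compose.yml and can probe whether each one answers. The server IP is a value you supply, and web_ui_urls and check_reachable are hypothetical helper names; only the standard library's urllib is used.

```python
from typing import Dict
from urllib.error import HTTPError
from urllib.request import urlopen

# Host ports published in docker-compose.yml
WEB_UI_PORTS: Dict[str, int] = {
    "namenode": 9870,
    "resourcemanager": 5888,
    "historyserver": 8188,
    "nodemanager1": 8042,
    "datanode1": 5642,
    "datanode2": 5645,
    "datanode3": 5648,
}


def web_ui_urls(host_ip: str) -> Dict[str, str]:
    """Map each service name to http://<host_ip>:<published port>/."""
    return {name: f"http://{host_ip}:{port}/" for name, port in WEB_UI_PORTS.items()}


def check_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if the URL answers with any HTTP response within the timeout."""
    try:
        with urlopen(url, timeout=timeout):
            return True
    except HTTPError:
        return True  # the server answered, just not with a 2xx status
    except OSError:  # URLError, refused connections and timeouts
        return False
```

Usage: for name, url in web_ui_urls("<server IP>").items(): print(name, url, check_reachable(url)).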