On ke01, start a listener: nc -lk 8888

Map: visits each element of the data stream and produces exactly one new element for it

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        // map always emits one element per input. There is no else branch here,
        // so a line containing "a" is mapped to Unit and printed as ().
        val streamValue = stream.map(x => {
          if (!x.contains("a")) {
            x
          }
        })
        streamValue.print()
        env.execute()
      }
    }

[root@ke01 bigdata]# nc -lk 8888

b

c

b

a

a

Result:

    11> b
    12> c
    1> b
    2> ()
    3> ()
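The `()` entries in the result come from the one-in, one-out contract of map. A minimal sketch on a plain Scala `List` (not a Flink stream) may make this easier to see; the object and method names are hypothetical, and the implicit `else ()` of the Scala 2 lambda is written out explicitly.

```scala
object MapAlwaysEmits {
  // Same shape as the lambda in the Flink example, with the implicit `else ()` spelled out.
  def keepIfNoA(x: String): Any = if (!x.contains("a")) x else ()

  // map produces exactly one output per input, so the length never changes.
  def run(lines: List[String]): List[Any] = lines.map(keepIfNoA)
}
```

Dropping elements therefore needs filter or flatMap rather than map.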

flatMap: visits each element of the data stream and produces N elements, where N = 0, 1, 2, ...

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        // Each input line yields one output element per comma-separated token
        val value = stream.flatMap(x => x.split(","))
        value.print()
        env.execute()
      }
    }

Input:

    a,c
    a,d,e

Result:

    3> a
    3> c
    4> a
    4> d
    4> e

Using flatMap in place of filter

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import scala.collection.mutable.ListBuffer

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        val value = stream.flatMap(x => {
          // Emit the line only if it does not contain "a"; otherwise emit nothing
          val rest = new ListBuffer[String]
          if (!x.contains("a")) {
            rest += x
          }
          rest.iterator
        })
        value.print()
        env.execute()
      }
    }

Input:

    abc
    qwe

Result:

    4> qwe
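The equivalence between a zero-or-one flatMap and filter can be checked on ordinary Scala collections. The following is only a sketch under that assumption (plain `List`, no Flink); the object and method names are hypothetical.

```scala
object FlatMapAsFilter {
  // Emit zero elements for a rejected line and one element for an accepted line.
  def viaFlatMap(lines: List[String]): List[String] =
    lines.flatMap(x => if (!x.contains("a")) List(x) else Nil)

  // The same selection written with filter.
  def viaFilter(lines: List[String]): List[String] =
    lines.filter(x => !x.contains("a"))

  // flatMap can also emit several elements per input, which filter cannot do.
  def splitOnComma(lines: List[String]): List[String] =
    lines.flatMap(_.split(",").toList)
}
```

For example, `FlatMapAsFilter.viaFlatMap(List("abc", "qwe"))` keeps only `"qwe"`, matching the result shown for the Flink job.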

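keyBy: partitions the data stream by a specified field. Elements with the same value in that field are guaranteed to land in the same partition. Internally the partitioning is hash-based (HashPartitioner)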

There are three ways to specify the partitioning field:

1. By index

2. By passing an anonymous function

3. By implementing the KeySelector interface

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)

        // Option 1: specify the key field by index
        //   .keyBy(0)
        // Option 2: specify the key field with an anonymous function
        //   .keyBy(x => x._1)
        // Option 3: specify the key field by implementing the KeySelector interface
        stream.flatMap(_.split(" ")).map((_, 1)).keyBy(0).print()
        env.execute()
      }
    }

Result:

    8> (a,1)
    8> (a,1)
    3> (b,1)
    3> (b,1)
    6> (c,1)
    8> (a,1)
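In the result, every `(a,1)` carries the same subtask prefix because the key alone decides the partition. The sketch below illustrates only that idea with a simplified hash-modulo rule on plain Scala collections. It is an assumption for illustration, not Flink's actual assignment logic, and the names `partitionFor` and `route` are hypothetical.

```scala
object KeyPartitionSketch {
  // Simplified rule: non-negative hash of the key modulo the number of partitions.
  def partitionFor(key: String, parallelism: Int): Int =
    Math.floorMod(key.hashCode, parallelism)

  // Group (word, 1) pairs by the partition their key maps to.
  def route(pairs: List[(String, Int)], parallelism: Int): Map[Int, List[(String, Int)]] =
    pairs.groupBy { case (word, _) => partitionFor(word, parallelism) }
}
```

Because the partition is a pure function of the key, two records with the same key can never end up in different partitions.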

keyBy with a KeySelector

    package com.text.transformation

    import org.apache.flink.api.java.functions.KeySelector
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        stream.flatMap(_.split(" ")).map((_, 1)).keyBy(new KeySelector[(String, Int), String] {
          // Use the word (first tuple field) as the key
          override def getKey(value: (String, Int)): String = {
            value._1
          }
        }).print()
        env.execute()
      }
    }

Result:

    8> (a,1)
    3> (b,1)
    8> (a,1)
    3> (b,1)

reduce: usually used together with keyBy

    package com.text.transformation

    import org.apache.flink.api.java.functions.KeySelector
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        stream.flatMap(_.split(" ")).map((_, 1)).keyBy(new KeySelector[(String, Int), String] {
          override def getKey(value: (String, Int)): String = {
            value._1
          }
        })
          // x is the previous result for this key, y is the new element
          .reduce((x, y) => (x._1, x._2 + y._2))
          .print()
        env.execute()
      }
    }

Result:

    8> (a,1)
    8> (a,2)
    8> (a,3)
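The result shows one output per input, each being the running total for that key. A minimal sketch of this rolling behaviour on a plain Scala `List`, assuming a per-key state map (the names are hypothetical and no Flink API is involved):

```scala
import scala.collection.mutable

object RollingReduceSketch {
  // For each incoming pair, combine it with the previous result for the same key
  // and emit the updated result, as a keyed reduce does.
  def run(pairs: List[(String, Int)]): List[(String, Int)] = {
    val state = mutable.Map.empty[String, (String, Int)]
    pairs.map { current =>
      val updated = state.get(current._1) match {
        case Some(previous) => (previous._1, previous._2 + current._2)
        case None           => current
      }
      state(current._1) = updated
      updated
    }
  }
}
```

Feeding it `a a b a` (each paired with 1) yields `(a,1) (a,2) (b,1) (a,3)`: keys are aggregated independently.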

split: splits one stream into two or more streams according to a condition

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        // Even numbers go to one stream ("first"), odd numbers to another ("second")
        val stream = env.generateSequence(1, 100)
        val splitStream = stream.split(info => {
          info % 2 match {
            case 0 => List("first")
            case 1 => List("second")
          }
        })
        // select picks one or more of the named streams out of the SplitStream
        splitStream.select("first").print()
        env.execute()
      }
    }

Result:

10> 10

6> 6

12> 12

6> 18

8> 8

6> 30

4> 4

2> 2

4> 16
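As a rough analogy on a finite Scala collection (not a Flink stream), tagging each element and then selecting by tag looks like the sketch below. The names `tagOf` and `select` are hypothetical.

```scala
object SplitSketch {
  // Tag each number the same way the split function in the example does.
  def tagOf(n: Long): String = if (n % 2 == 0) "first" else "second"

  // Rough equivalent of split followed by select(tag) on a finite collection.
  def select(numbers: Seq[Long], tag: String): Seq[Long] =
    numbers.filter(n => tagOf(n) == tag)
}
```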

Exercise: read data from Kafka and count, in real time, the traffic flow at each checkpoint

-- Kafka producer: reads the checkpoint data and publishes it to Kafka

    package com.text.source

    import java.util.Properties
    import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}
    import org.apache.kafka.common.serialization.StringSerializer
    import scala.io.Source

    object FlinkKafkaProduct {
      def main(args: Array[String]): Unit = {
        val prop = new Properties()
        prop.setProperty("bootstrap.servers", "ke02:9092,ke03:9092,ke04:9092")
        prop.setProperty("key.serializer", classOf[StringSerializer].getName)
        prop.setProperty("value.serializer", classOf[StringSerializer].getName)
        // Create a Kafka producer
        val producer = new KafkaProducer[String, String](prop)
        // Materialize the lines: a bare Iterator would be exhausted after the first pass of the outer loop
        val lines = Source.fromFile("D:\\code\\scala\\test\\test07\\data\\carFlow_all_column_test.txt").getLines().toList
        for (i <- 1 to 100) {
          for (elem <- lines) {
            val splits = elem.split(",")
            val monitorId = splits(0).replace("'", "")
            val carId = splits(2).replace("'", "")
            val timestamp = splits(4).replace("'", "")
            val speed = splits(6)
            // Record layout: monitorId \t carId \t timestamp \t speed
            val info = Seq(monitorId, carId, timestamp, speed).mkString("\t")
            producer.send(new ProducerRecord[String, String]("flink-kafka", i + "", info))
            Thread.sleep(500)
          }
        }
        producer.close()
      }
    }

-- Streaming count of the traffic flow at each checkpoint

    package com.text.transformation

    import java.util.Properties
    import org.apache.flink.api.common.serialization.SimpleStringSchema
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer
    import org.apache.kafka.common.serialization.StringDeserializer

    object Demo1 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val properties = new Properties()
        properties.setProperty("bootstrap.servers", "ke02:9092,ke03:9092,ke04:9092")
        properties.setProperty("group.id", "flink-kafka-001")
        // Deserializer properties must name a Deserializer class, not StringSerializer
        properties.setProperty("key.deserializer", classOf[StringDeserializer].getName)
        properties.setProperty("value.deserializer", classOf[StringDeserializer].getName)
        val stream = env.addSource(new FlinkKafkaConsumer[String]("flink-kafka", new SimpleStringSchema(), properties))
        stream.map(data => {
          val splits = data.split("\t")
          // (monitorId, 1): one car seen at this checkpoint
          (splits(0), 1)
        }).keyBy(_._1).sum(1).print()
        env.execute()
      }
    }

Aggregations are a family of aggregation operators

    keyedStream.sum(0)
    keyedStream.sum("key")
    keyedStream.min(0)
    keyedStream.min("key")
    keyedStream.max(0)
    keyedStream.max("key")
    keyedStream.minBy(0)
    keyedStream.minBy("key")
    keyedStream.maxBy(0)
    keyedStream.maxBy("key")
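The difference between min and minBy is easy to miss. Flink's documentation describes min as returning the minimum value and minBy as returning the element that has the minimum value in that field. The sketch below models this on plain Scala tuples for a single key; it assumes that with min the non-aggregated field stays as in the first record, which is worth verifying against the Flink version in use. All names are hypothetical, and the input list must be non-empty.

```scala
object MinVsMinBy {
  // (carId, speed) records for a single key, in arrival order.
  type Record = (String, Int)

  // min-style: only the compared field is updated; the other field stays from the first record.
  def rollingMin(records: List[Record]): List[Record] =
    records.tail.scanLeft(records.head) { (acc, r) => (acc._1, math.min(acc._2, r._2)) }

  // minBy-style: the whole record holding the smallest value is kept.
  def rollingMinBy(records: List[Record]): List[Record] =
    records.tail.scanLeft(records.head) { (acc, r) => if (r._2 < acc._2) r else acc }
}
```

If the other fields of the winning record matter (for example, which car was the slowest), the minBy style is the one that keeps them together.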

demo02: report, in real time, the information of the first car to pass each checkpoint

    package com.text.transformation

    import java.text.SimpleDateFormat
    import java.util.Properties
    import org.apache.flink.api.common.serialization.SimpleStringSchema
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer
    import org.apache.kafka.common.serialization.StringDeserializer

    object Demo1 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val properties = new Properties()
        properties.setProperty("bootstrap.servers", "ke02:9092,ke03:9092,ke04:9092")
        properties.setProperty("group.id", "flink-kafka-001")
        // Deserializer properties must name a Deserializer class, not StringSerializer
        properties.setProperty("key.deserializer", classOf[StringDeserializer].getName)
        properties.setProperty("value.deserializer", classOf[StringDeserializer].getName)
        val stream = env.addSource(new FlinkKafkaConsumer[String]("flink-kafka", new SimpleStringSchema(), properties))
        stream.map(data => {
          val splits = data.split("\t")
          val eventTime = splits(2)
          // Convert the event time string to epoch milliseconds
          val format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss")
          val date = format.parse(eventTime)
          (splits(0), date.getTime)
        }).keyBy(_._1).min(1).print()
        env.execute()
      }
    }

Result:

    11> (310999021105,1408514970000)
    7> (310999012504,1408514970000)
    2> (310999008906,1408514970000)
    1> (310999008805,1408514973000)
    10> (310999007204,1408514970000)
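`SimpleDateFormat` parses in the JVM's default time zone unless told otherwise, so the epoch values depend on where the job runs. A small sketch with an explicit zone follows; the helper name and the choice of `GMT+08:00` are assumptions for illustration, since the source does not state the zone. Under that zone, the value 1408514970000 corresponds to 2014-08-20 14:09:30.

```scala
import java.text.SimpleDateFormat
import java.util.TimeZone

object EventTimeParsing {
  // Parse "yyyy-MM-dd HH:mm:ss" in an explicit zone instead of the JVM default.
  def toEpochMillis(text: String, zoneId: String): Long = {
    // A new formatter per call: SimpleDateFormat instances are not thread-safe.
    val format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss")
    format.setTimeZone(TimeZone.getTimeZone(zoneId))
    format.parse(text).getTime
  }
}
```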

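union: merges two or more data streams into a new data stream that contains all elements of the merged streams

Note: the streams being merged must have the same element type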

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object MapOperator {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream1 = env.fromCollection(List(("a", 1), ("b", 2)))
        val stream2 = env.fromCollection(List(("a", 3), ("d", 4)))
        val value = stream1.union(stream2)
        value.print()
        env.execute()
      }
    }

Result:

11> (b,2)

8> (a,3)

10> (a,1)

9> (d,4)

Connect (pseudo-merge): combines two data streams while keeping each stream's own element type, and lets the two streams share state

    package com.text.transformation

    import org.apache.flink.streaming.api.functions.co.CoMapFunction
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object Demo1 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val ds1 = env.socketTextStream("ke01", 8888)
        val ds2 = env.socketTextStream("ke01", 9999)
        val wcStream1 = ds1.flatMap(_.split(" ")).map((_, 1)).keyBy(0).sum(1)
        val wcStream2 = ds2.flatMap(_.split(" ")).map((_, 1)).keyBy(0).sum(1)
        // Connect in this order so that map1 handles port 8888 and map2 handles port 9999,
        // which is what the output below shows
        val restStream: ConnectedStreams[(String, Int), (String, Int)] = wcStream1.connect(wcStream2)

        // ConnectedStreams has no print method, so convert it first with map or flatMap,
        // passing a CoMapFunction or a CoFlatMapFunction
        restStream.map(new CoMapFunction[(String, Int), (String, Int), (String, Int)] {
          override def map1(value: (String, Int)): (String, Int) = {
            (value._1 + ": first", value._2 + 100)
          }

          // Note: the label here is built from value._2 (the running count), which is why
          // the output below shows numbers before ":second"; use value._1 to label with the word
          override def map2(value: (String, Int)): (String, Int) = {
            (value._2 + ":second", value._2 * 100)
          }
        }).print()
        env.execute()
      }
    }

Result:

Input on port 8888: ke ke ke

7> (ke: first,101)

7> (ke: first,102)

7> (ke: first,103)

Input on port 9999: ke ke ke

7> (9:second,100)

7> (10:second,200)

7> (11:second,300)

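CoMap and CoFlatMap are not the names of concrete operators; they are names for kinds of operation.

Any map over a ConnectedStreams data stream is called a CoMap.

Any flatMap over a ConnectedStreams data stream is called a CoFlatMap.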
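One way to picture a CoMap is as a single function over a tagged union of the two inputs. The sketch below models the connected stream as a `List[Either[A, B]]` on plain Scala collections. This is an illustrative assumption, not how Flink represents it, and `coMap` is a hypothetical helper.

```scala
object CoMapSketch {
  // An element of the connected stream comes from the first input (Left) or the second (Right).
  // Each side keeps its own type and gets its own function; both produce the same result type.
  def coMap[A, B, R](events: List[Either[A, B]])(map1: A => R, map2: B => R): List[R] =
    events.map {
      case Left(a)  => map1(a)
      case Right(b) => map2(b)
    }
}
```

Unlike union, the two inputs do not need to share a type; only the outputs of `map1` and `map2` do.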

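    // CoMap, first implementation: pass a CoMapFunction
    restStream.map(new CoMapFunction[(String, Int), (String, Int), (String, Int)] {
      // Applied to elements of the first stream
      override def map1(value: (String, Int)): (String, Int) = {
        (value._1 + ":first", value._2 + 100)
      }

      // Applied to elements of the second stream
      override def map2(value: (String, Int)): (String, Int) = {
        (value._1 + ":second", value._2 * 100)
      }
    }).print()

    // CoMap, second implementation: pass two anonymous functions
    restStream.map(
      // Applied to elements of the first stream
      x => (x._1 + ":first", x._2 + 100),
      // Applied to elements of the second stream
      y => (y._1 + ":second", y._2 * 100)
    ).print()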

CoFlatMap, first implementation:

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.util.Collector

    object Demo1 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val ds1 = env.socketTextStream("ke01", 8888)
        val ds2 = env.socketTextStream("ke01", 9999)
        val wcStream1 = ds1.flatMap(_.split(" "))
        val wcStream2 = ds2.flatMap(_.split(" "))
        val restStream: ConnectedStreams[String, String] = wcStream2.connect(wcStream1)
        restStream.flatMap(
          // Applied to elements of the first stream
          (x: String, c: Collector[String]) => {
            x.split(" ").foreach(w => c.collect(w))
          },
          // Applied to elements of the second stream
          (y: String, c: Collector[String]) => {
            y.split(" ").foreach(d => c.collect(d))
          }
        ).print()
        env.execute()
      }
    }

CoFlatMap, second implementation:

    ds1.connect(ds2).flatMap(
      // Applied to elements of the first stream
      x => x.split(" "),
      // Applied to elements of the second stream
      y => y.split(" ")
    ).print()

CoFlatMap, third implementation:

    // Requires: import org.apache.flink.streaming.api.functions.co.CoFlatMapFunction
    ds1.connect(ds2).flatMap(new CoFlatMapFunction[String, String, (String, Int)] {
      // Applied to elements of the first stream
      override def flatMap1(value: String, out: Collector[(String, Int)]): Unit = {
        val words = value.split(" ")
        words.foreach(x => out.collect((x, 1)))
      }

      // Applied to elements of the second stream
      override def flatMap2(value: String, out: Collector[(String, Int)]): Unit = {
        val words = value.split(" ")
        words.foreach(x => out.collect((x, 1)))
      }
    }).print()

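Demo03: a configuration file stores license plate numbers together with the owners' real names. Use the plate number in the data stream to look up the owner's name in real time (note: the configuration file may change at any time)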

    package com.text.transformation

    import org.apache.flink.api.java.io.TextInputFormat
    import org.apache.flink.core.fs.Path
    import org.apache.flink.streaming.api.functions.co.CoMapFunction
    import org.apache.flink.streaming.api.functions.source.FileProcessingMode
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import scala.collection.mutable

    object Demo2 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        // Parallelism 1 so that a single function instance sees both streams
        env.setParallelism(1)
        val filePath = "D:\\code\\scala\\test\\test07\\data\\text.txt"
        // PROCESS_CONTINUOUSLY re-scans the file periodically, so edits are picked up
        val textStream = env.readFile(new TextInputFormat(new Path(filePath)), filePath, FileProcessingMode.PROCESS_CONTINUOUSLY, 10)
        val dataStream = env.socketTextStream("ke01", 8888)
        dataStream.connect(textStream).map(new CoMapFunction[String, String, String] {
          // Lookup table shared by map1 and map2
          private val hashMap = new mutable.HashMap[String, String]()

          // Data stream: look up the name for the incoming key
          override def map1(value: String): String = {
            hashMap.getOrElse(value, "not found name")
          }

          // Config stream: each line is "key name"; store it in the lookup table
          override def map2(value: String): String = {
            val splits = value.split(" ")
            hashMap.put(splits(0), splits(1))
            value + " loaded....."
          }
        }).print()
        env.execute()
      }
    }

Output while the configuration file is being loaded:

    110 警察 loaded.....
    120 救护车 loaded.....
    119 火警 loaded.....

Result:

    input aa -> not found name
    input 110 -> 警察
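The shared lookup table is what makes this demo work: one side updates it, the other side reads it. A minimal sketch of that interaction on a plain Scala `List`, modelling the two inputs as `Either` (Left for lookups, Right for config lines). The names and sample values are hypothetical and no Flink API is used.

```scala
import scala.collection.mutable

object ConfigLookupSketch {
  // Left = a plate number to look up; Right = a "plate name" line from the config file.
  def run(events: List[Either[String, String]]): List[String] = {
    val owners = mutable.HashMap.empty[String, String]
    events.map {
      case Left(plate) => owners.getOrElse(plate, "not found name")
      case Right(line) =>
        val parts = line.split(" ")
        owners.put(parts(0), parts(1))
        line + " loaded"
    }
  }
}
```

The order of events matters: a lookup that arrives before its config line has been loaded misses, and a later config line for the same plate overrides the earlier one.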

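Side output: during stream processing you may need to split a data stream by different conditions. Splitting with filter causes unnecessary copies of the data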

    package com.text.transformation

    import org.apache.flink.streaming.api.functions.ProcessFunction
    import org.apache.flink.streaming.api.scala.{OutputTag, StreamExecutionEnvironment}
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.util.Collector

    object Demo3 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("ke01", 8888)
        // Tag for the side output; despite its id "gt", it receives the values that are NOT greater than 100
        val gtTag = new OutputTag[String]("gt")
        val processStream = stream.process(new ProcessFunction[String, String] {
          override def processElement(value: String, ctx: ProcessFunction[String, String]#Context, out: Collector[String]): Unit = {
            try {
              val longVar = value.toLong
              if (longVar > 100) {
                // Main output
                out.collect(value)
              } else {
                // Side output
                ctx.output(gtTag, value)
              }
            } catch {
              // Lines that are not numbers also go to the side output
              case _: NumberFormatException => ctx.output(gtTag, value)
            }
          }
        })
        val sideStream = processStream.getSideOutput(gtTag)
        sideStream.print("sideStream")
        processStream.print("mainStream")
        env.execute()
      }
    }

Result:

    sideStream:4> 50
    sideStream:5> 100
    mainStream:6> 120
    mainStream:7> 130
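The point of a side output is that each element is inspected once and routed to exactly one place. A minimal sketch of the same routing rule on a plain Scala `List` (hypothetical names, no Flink API):

```scala
import scala.util.Try

object SideOutputSketch {
  // One pass over the input: values above 100 go to the main output,
  // everything else (including lines that are not numbers) goes to the side output.
  def route(lines: List[String]): (List[String], List[String]) =
    lines.partition(line => Try(line.toLong).toOption.exists(_ > 100))
}
```

Doing the same split with two separate filters would evaluate every element twice, once per condition.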

Iterate

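The iterate operator provides support for iterating over a data stream.

An iteration consists of two parts: the iteration body and the termination condition.

Elements that do not satisfy the termination condition are fed back into the stream for another iteration.

Elements that satisfy the termination condition continue downstream.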

    package com.text.transformation

    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._

    object Demo4 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val initStream = env.socketTextStream("ke01", 8888)
        val stream = initStream.map(_.toLong)
        stream.iterate(info => {
          // Iteration body: decrement positive values
          val infoIterate = info.map(x => {
            println(x)
            if (x > 0) x - 1 else x
          })
          // Values > 0 are fed back into the stream; values <= 0 continue downstream
          (infoIterate.filter(_ > 0), infoIterate.filter(_ <= 0))
        }).print()
        env.execute()
      }
    }
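The feedback loop can be traced by hand with a queue standing in for the stream. The sketch below is a plain Scala simulation under that assumption (hypothetical names, no Flink API), following the rule stated in the example's comment: values greater than 0 are fed back, values less than or equal to 0 are emitted.

```scala
import scala.collection.mutable

object IterateSketch {
  // Returns (emitted values, number of passes through the iteration body).
  def run(inputs: List[Long]): (List[Long], Int) = {
    val pending = mutable.Queue.empty[Long]
    pending ++= inputs
    val emitted = mutable.ListBuffer.empty[Long]
    var passes = 0
    while (pending.nonEmpty) {
      val x = pending.dequeue()
      passes += 1
      val next = if (x > 0) x - 1 else x    // iteration body
      if (next > 0) pending.enqueue(next)   // not finished: feed back
      else emitted += next                  // finished: send downstream
    }
    (emitted.toList, passes)
  }
}
```

An input of 3 goes through the body three times (3, 2, 1) before 0 is emitted.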

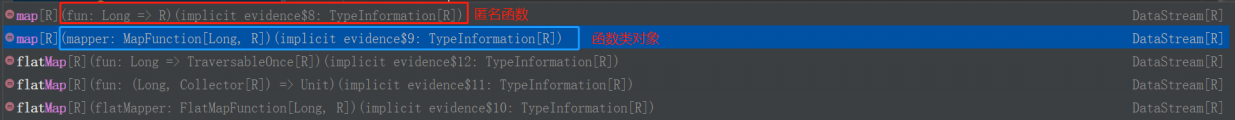

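Function classes and rich function classes

When using a Flink operator, you can pass either an anonymous function or an instance of a function class, such as the ones listed in the table below.

Compared with ordinary function classes, rich function classes can access the runtime context, have lifecycle methods, and can manage state, so they can implement more complex logic.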

| Ordinary function class | Rich function class |
| --- | --- |
| MapFunction | RichMapFunction |
| FlatMapFunction | RichFlatMapFunction |
| FilterFunction | RichFilterFunction |
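The lifecycle methods are the main practical difference. The sketch below imitates them with a hypothetical trait `RichLikeMap` in plain Scala. It is not Flink's `RichMapFunction`, only an illustration of the open, map, close order for one parallel instance.

```scala
import scala.collection.mutable.ListBuffer

object RichFunctionSketch {
  // Hypothetical stand-in for a rich function: open once, map per element, close once.
  trait RichLikeMap[A, B] {
    def open(): Unit
    def map(value: A): B
    def close(): Unit
  }

  // Drives the lifecycle the way a single parallel instance would.
  def runSubtask[A, B](fn: RichLikeMap[A, B], input: List[A]): List[B] = {
    fn.open()
    try input.map(v => fn.map(v))
    finally fn.close()
  }

  // Example function: records its lifecycle calls and uses a table that only exists after open().
  class LookupFn(log: ListBuffer[String]) extends RichLikeMap[String, String] {
    private var table: Map[String, String] = Map.empty
    def open(): Unit = { log += "open"; table = Map("plate-1" -> "owner-1") }
    def map(value: String): String = { log += "map"; table.getOrElse(value, "not found name") }
    def close(): Unit = { log += "close"; table = Map.empty }
  }
}
```

Expensive resources such as connections belong in `open` and `close`, so they are set up once per instance rather than once per element.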

- Use an ordinary function class to filter out the records of vehicles whose speed is above 100

    package com.text.transformation

    import org.apache.flink.api.common.functions.FilterFunction
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

    object Demo5 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.readTextFile("D:\\code\\scala\\test\\test07\\data\\carFlow_all_column_test.txt")
        stream.filter(new FilterFunction[String] {
          override def filter(value: String): Boolean = {
            if (value != null && !"".equals(value)) {
              // Field 6 is the speed; keep the record only if the speed is at most 100
              val speed = value.split(",")(6).replace("'", "").toLong
              speed <= 100
            } else {
              // Drop null or empty lines
              false
            }
          }
        }).print()
        env.execute()
      }
    }

Result:

    '310999003001', '3109990030010220140820141230292','00000000','','2014-08-20 14:09:35','0',255,'SN', 0.00,'4','','310999','310999003001','02','','','2','','','2014-08-20 14:12:30','2014-08-20 14:16:13',0,0,'2014-08-21 18:50:05','','',' '
    '310999003102', '3109990031020220140820141230266','粤BT96V3','','2014-08-20 14:09:35','0',21,'NS', 0.00,'2','','310999','310999003102','02','','','2','','','2014-08-20 14:12:30','2014-08-20 14:16:13',0,0,'2014-08-21 18:50:05','','',' '

- Use a rich function class to convert a license plate number into the owner's real name; the mapping table is stored in Redis

    <dependency>
      <groupId>redis.clients</groupId>
      <artifactId>jedis</artifactId>
      <version>${redis.version}</version>
    </dependency>

    package com.text.transformation

    import org.apache.flink.api.common.functions.RichMapFunction
    import org.apache.flink.configuration.Configuration
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import redis.clients.jedis.Jedis

    object Demo6 {
      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("192.168.75.91", 8888)
        stream.map(new RichMapFunction[String, String] {
          private var jedis: Jedis = _

          // Initialization hook: called once per parallel subtask, before any element is processed.
          // This is the place to open the Redis connection.
          override def open(parameters: Configuration): Unit = {
            // getRuntimeContext (provided by AbstractRichFunction) exposes the Flink runtime context
            val taskName = getRuntimeContext.getTaskName
            val subTasks = getRuntimeContext.getTaskNameWithSubtasks
            println("=========open======" + "taskName:" + taskName + "subTasks:" + subTasks)
            jedis = new Jedis("192.168.75.91", 6390)
            jedis.auth("aa123456")
            jedis.select(3)
          }

          // Called once for every element
          override def map(value: String): String = {
            val name = jedis.get(value)
            if (name == null) {
              "not found name"
            } else {
              name
            }
          }

          // Called after all elements have been processed; close the Redis connection here
          override def close(): Unit = {
            if (jedis != null) jedis.close()
          }
        }).setParallelism(2).print()
        env.execute()
      }
    }

Result:

    =========open======taskName:MapsubTasks:Map (2/2)
    =========open======taskName:MapsubTasks:Map (1/2)
    5> not found name
    10> 1 ; 6> 1

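Low-level API (ProcessFunction API)

-- Operators such as map, filter, and flatMap are higher-level wrappers built on top of this layer

-- The lower the API level, the more powerful it is and the more information the user can access, for example element state, event time, and timers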

    package com.text.transformation

    import org.apache.flink.streaming.api.functions.KeyedProcessFunction
    import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment
    import org.apache.flink.streaming.api.scala._
    import org.apache.flink.util.Collector

    object Demo7 {
      case class CarInfo(carId: String, speed: Long)

      def main(args: Array[String]): Unit = {
        val env = StreamExecutionEnvironment.getExecutionEnvironment
        val stream = env.socketTextStream("192.168.75.91", 8888)
        stream.map(data => {
          val split = data.split(" ")
          val carId = split(0)
          val speed = split(1).toLong
          CarInfo(carId, speed)
        }).keyBy(_.carId)
          // process on a KeyedStream takes a KeyedProcessFunction;
          // process on a DataStream takes a ProcessFunction
          .process(new KeyedProcessFunction[String, CarInfo, String] {
            override def processElement(value: CarInfo, ctx: KeyedProcessFunction[String, CarInfo, String]#Context, out: Collector[String]): Unit = {
              val currentTime = ctx.timerService().currentProcessingTime()
              if (value.speed > 100) {
                // Fire a warning 2 seconds from now. The timer is based on processing time,
                // so it must be registered as a processing-time timer, not an event-time timer.
                val timerTime = currentTime + 2 * 1000
                ctx.timerService().registerProcessingTimeTimer(timerTime)
              }
            }

            // Called when a registered timer fires
            override def onTimer(timestamp: Long, ctx: KeyedProcessFunction[String, CarInfo, String]#OnTimerContext, out: Collector[String]): Unit = {
              val warnMsg = "warn... time:" + timestamp + "carID:" + ctx.getCurrentKey
              out.collect(warnMsg)
            }
          }).print()
        env.execute()
      }
    }
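The timer logic can be followed without a cluster by simulating the clock. The sketch below is a plain Scala model under simplifying assumptions: timestamps are supplied with each event, timers fire when a later event or the end time passes them, and all names are hypothetical. It is not Flink's timer service.

```scala
import scala.collection.mutable

object TimerSketch {
  final case class CarInfo(carId: String, speed: Long)

  // events: (time in ms, record), in time order. Returns warnings in firing order.
  def run(events: List[(Long, CarInfo)], endTime: Long): List[String] = {
    // Pending timers as (fire time, key); a sorted set also removes duplicates.
    val timers = mutable.TreeSet.empty[(Long, String)]
    val out = mutable.ListBuffer.empty[String]

    // Fire every timer whose time has been reached.
    def fireUpTo(now: Long): Unit =
      while (timers.nonEmpty && timers.head._1 <= now) {
        val (ts, key) = timers.head
        timers -= timers.head
        out += s"warn... time:$ts carID:$key"
      }

    for ((now, car) <- events) {
      fireUpTo(now)
      // Speeding car: schedule a warning 2 seconds later for its key.
      if (car.speed > 100) timers += ((now + 2000, car.carId))
    }
    fireUpTo(endTime)
    out.toList
  }
}
```

A car seen at time 1000 with speed 120 produces a warning stamped 3000, provided the clock actually advances that far.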