导入

- 本地文件导入到Hive表CREATE TABLE testA (

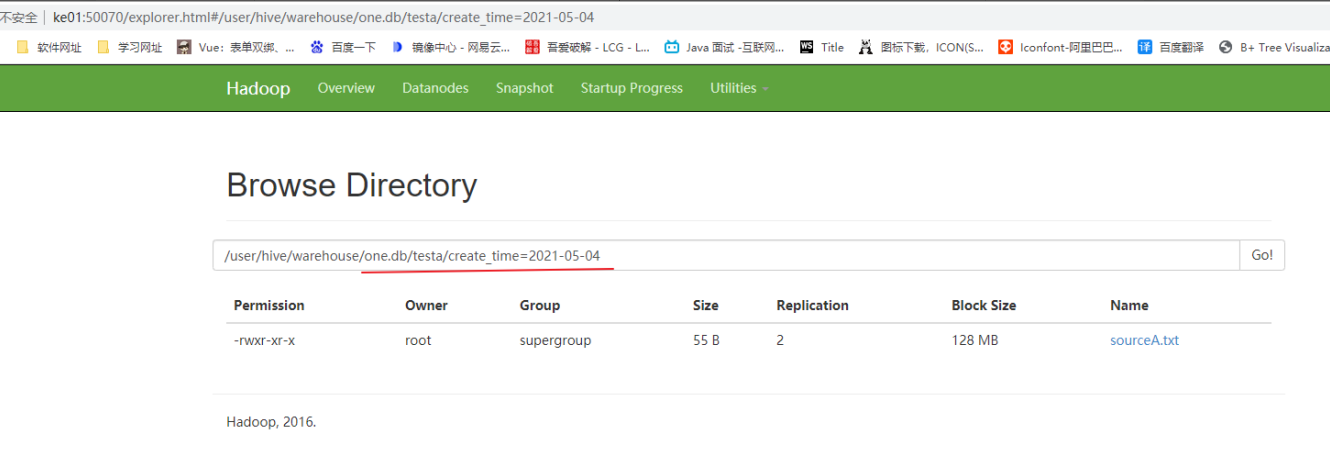

id INT, name string, area string ) PARTITIONED BY (create_time string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE; CREATE TABLE testB ( id INT, name string, area string, code string ) PARTITIONED BY (create_time string) ROW FORMAT DELIMITED FIELDS TERMINATED BY ',' STORED AS TEXTFILE; // 数据文件(sourceA.txt): 1,fish1,SZ 2,fish2,SH 3,fish3,HZ 4,fish4,QD 5,fish5,SR // 数据文件(sourceB.txt): 1,zy1,SZ,1001 2,zy2,SH,1002 3,zy3,HZ,1003 4,zy4,QD,1004 5,zy5,SR,1005 // 方式一:load进数据 hive> load data local inpath '/tmp/20210504/sourceA.txt' into table testA partition(create_time='2021-05-04'); Loading data to table one.testa partition (create_time=2021-05-04) OK Time taken: 1.552 seconds hive> load data local inpath '/tmp/20210504/sourceB.txt' into table testB partition(create_time='2021-05-04'); Loading data to table one.testb partition (create_time=2021-05-04) OK Time taken: 0.789 seconds hive> select * from testA; OK 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04 Time taken: 2.227 seconds, Fetched: 5 row(s) hive> select * from testB; OK 1 zy1 SZ 1001 2021-05-04 2 zy2 SH 1002 2021-05-04 3 zy3 HZ 1003 2021-05-04 4 zy4 QD 1004 2021-05-04 5 zy5 SR 1005 2021-05-04 Time taken: 0.175 seconds, Fetched: 5 row(s) // 方式二 insert 删除表数据 hive> truncate table testA; OK Time taken: 0.326 seconds 删除分区: hive> alter table testB drop partition(create_time = '2021-05-04'); Dropped the partition create_time=2021-05-04 OK Time taken: 0.27 seconds hive不能删除行 如果想删除部分数据,就创建临时表 ,把留下的数据查出来 插入进去

- Hive表导入到Hive表

insert into table testA partition(create_time='2021-05-06') select id, name, area from testB where id = 1; 有MR Time taken: 173.64 seconds hive> SELECT * FROM testA; OK 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04 1 zy1 SZ 2021-05-06 1 zy1 SZ 2021-05-06 Time taken: 0.246 seconds, Fetched: 7 row(s) INSERT INTO TABLE testA PARTITION(create_time) select id, name, area, code from testB where id = 2; hive> select * from testA; OK 2 zy2 SH 1002 2 zy2 SH 1002 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04 1 zy1 SZ 2021-05-06 1 zy1 SZ 2021-05-06 Time taken: 0.212 seconds, Fetched: 9 row(s) 1,将testB中id=1的行,导入到testA,分区为2021-05-06 2,将testB中id=2的行,导入到testA,分区create_time为id=2行的code值。 - HDFS文件导入到Hive表

HDFS文件导入到Hive表 hdfs dfs -put /tmp/20210504/sourceA.txt /xiaoke003/sourceA hive> load data inpath '/xiaoke002/sourceA' into table testA partition(create_time= '2021-05-04'); Loading data to table one.testa partition (create_time=2021-05-04) OK Time taken: 0.54 seconds hive> SELECT * FROM testA; OK 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04 Time taken: 0.122 seconds, Fetched: 5 row(s)

- 创建表的过程中从其他表导入

create table testC as select name, code from testB;

- 通过sqoop将mysql库导入到Hive表

导出

- Hive表导出到本地文件系统

insert overwrite local directory '/tmp/20210504/output/one' row format delimited fields terminated by ',' select * from testA; 通过INSERT OVERWRITE LOCAL DIRECTORY将hive表testA数据导入到/tmp/20210504/output/one/000000_0,众所周知,HQL会启动Mapreduce完成, /tmp/20210504/output/one就是Mapreduce输出路径,产生的结果存放在文件名为:000000_0。

- Hive表导出到HDFS

insert overwrite directory '/tmp/xiaoke002/one' row format delimited fields terminated by ',' select * from testA; [root@ke03 output]# hdfs dfs -cat /xiaoke002/one/000000_0 1fish1SZ2021-05-04 2fish2SH2021-05-04 3fish3HZ2021-05-04 4fish4QD2021-05-04 5fish5SR2021-05-04

- 使用-e -f 参数来导出数据

-e 的使用方式,后面接SQL语句。>>后面为输出文件路径 hive -e "use one;select * from testA" >> ./two -f 的使用方式,后面接存放sql语句的文件。>>后面为输出文件路径 [root@ke03 output]# cat sql.sql use one; select * from testA; hive -f sql.sql >> three.txt [root@ke03 output]# cat two 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04 [root@ke03 output]# cat three.txt 1 fish1 SZ 2021-05-04 2 fish2 SH 2021-05-04 3 fish3 HZ 2021-05-04 4 fish4 QD 2021-05-04 5 fish5 SR 2021-05-04

- 从hdfs中直接导出

hdfs dfs -get /xiaoke002/one/000000_0 ./four vi four 1^Afish1^ASZ^A2021-05-04 2^Afish2^ASH^A2021-05-04 3^Afish3^AHZ^A2021-05-04 4^Afish4^AQD^A2021-05-04 5^Afish5^ASR^A2021-05-04 - 通过sqoop将Hive表导出到mysql库