Configuring Spark

Directory: /opt/bigdata/spark-2.3.4-bin-hadoop2.6/conf

[root@ke03 conf]# vi spark-env.sh

Setting: export HADOOP_CONF_DIR=/opt/bigdata/hadoop-2.6.5/etc/hadoop
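Since `--master yarn` relies on HADOOP_CONF_DIR pointing at a usable Hadoop client configuration, a quick sanity check before starting Spark can save a confusing startup failure. The sketch below is only an illustration: the function name `check_hadoop_conf_dir` is hypothetical, and the choice of `core-site.xml` and `yarn-site.xml` as the files to look for is an assumption about a typical Hadoop 2.x layout.

```shell
# Sketch: verify that a directory looks like a Hadoop client configuration directory.
# Assumption: core-site.xml and yarn-site.xml are the files worth checking for.
check_hadoop_conf_dir() {
  local dir="$1" f missing=0
  for f in core-site.xml yarn-site.xml; do
    if [ ! -f "${dir}/${f}" ]; then
      echo "missing: ${dir}/${f}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example (not executed here):
#   check_hadoop_conf_dir /opt/bigdata/hadoop-2.6.5/etc/hadoop && echo "conf dir looks OK"
```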

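Copy Hive's configuration file into the Spark conf directory:

cp /opt/bigdata/hive-2.3.4/conf/hive-site.xml ./

The hive-site.xml in the Spark conf directory needs the address of the Hive metastore: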

    <configuration>
      <property>
        <name>hive.metastore.uris</name>
        <value>thrift://ke03:9083</value>
      </property>
    </configuration>
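If you would rather generate this file than edit it by hand, the configuration above can be written with a here-document. This is a minimal sketch, not the only way to do it: the function name `write_hive_site` is hypothetical, and it overwrites any existing hive-site.xml in the target directory.

```shell
# Sketch: write a hive-site.xml that only tells Spark where the Hive metastore is.
# $1 = Spark conf directory, $2 = metastore URI (both supplied by the caller).
write_hive_site() {
  local conf_dir="$1" uri="$2"
  cat > "${conf_dir}/hive-site.xml" <<EOF
<configuration>
  <property>
    <name>hive.metastore.uris</name>
    <value>${uri}</value>
  </property>
</configuration>
EOF
}

# Example (not executed here), using the values from this walkthrough:
#   write_hive_site /opt/bigdata/spark-2.3.4-bin-hadoop2.6/conf thrift://ke03:9083
```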

Directory: /opt/bigdata/spark-2.3.4-bin-hadoop2.6/bin

Start:

[root@ke03 bin]# ./spark-shell --master yarn

Check the YARN web UI: http://ke03:8088/cluster. The Spark shell application keeps running there; as long as spark-shell does not exit, it stays connected.

Log: Spark session available as 'spark'.

(Screenshot: startup log)

Test:

    scala> spark.sql("show tables").show
    +--------+---------+-----------+
    |database|tableName|isTemporary|
    +--------+---------+-----------+
    | default|   test01|      false|
    | default|   test02|      false|
    | default|   test03|      false|
    +--------+---------+-----------+

Method 2:

[root@ke03 bin]# ./spark-sql --master yarn

    spark-sql> show tables;
    2021-02-16 04:33:02 INFO CodeGenerator:54 - Code generated in 367.646292 ms
    default test01 false
    default test02 false
    default test03 false

No jobs show up on 8080 (not for inserts, deletes, or queries).

---------------------------------------------------------------- Using Spark in a HiveServer2-like way -----------------------------------------------------------------

Directory: /opt/bigdata/spark-2.3.4-bin-hadoop2.6/sbin

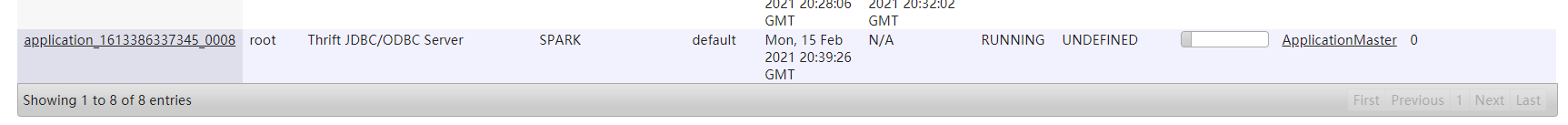

./start-thriftserver.sh --master yarn

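jps: one additional process appears, SparkSubmit (running in the background)

On 8080, an application named Thrift JDBC/ODBC Server has started. It does not exit and stays connected.

Directory: /opt/bigdata/spark-2.3.4-bin-hadoop2.6/bin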
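Because start-thriftserver.sh launches the server in the background, the JDBC port may not accept connections right away. The following is a rough sketch of a wait loop, assuming bash (it uses bash's `/dev/tcp` pseudo-device, which plain sh does not provide). The function name `wait_for_port` and the timeout value are hypothetical; port 10000 is the one used for the connection later in these notes.

```shell
#!/usr/bin/env bash
# Sketch: poll a TCP port until it accepts connections or a timeout (seconds) expires.
# $1 = host, $2 = port, $3 = timeout in seconds (defaults to 60).
wait_for_port() {
  local host="$1" port="$2" timeout_s="${3:-60}" waited=0
  # The subshell opens a connection and closes it on exit; failure means nothing is listening yet.
  until (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    if [ "$waited" -ge "$timeout_s" ]; then
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# Example (not executed here):
#   wait_for_port ke03 10000 120 && echo "Thrift server is accepting connections"
```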

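./beeline // any machine that can reach ke03 over the network can connect directly

!connect jdbc:hive2://ke03:10000 // the hive2 scheme is used because the Spark Thrift server mimics HiveServer2

Verify: Spark sees the same data as Hive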

    0: jdbc:hive2://ke03:10000> show tables;
    +-----------+------------+--------------+--+
    | database  | tableName  | isTemporary  |
    +-----------+------------+--------------+--+
    | default   | test01     | false        |
    | default   | test02     | false        |
    | default   | test03     | false        |
    +-----------+------------+--------------+--+
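The same check can be run without an interactive session by passing the JDBC URL with beeline's `-u` option and the statement with `-e`. The sketch below is an illustration under assumptions: the helper names `thrift_jdbc_url` and `run_sql` and the variables BEELINE, THRIFT_HOST, and THRIFT_PORT are hypothetical, with defaults taken from the host and port used above.

```shell
# Sketch: run a single SQL statement against the Spark Thrift server via beeline.
# BEELINE, THRIFT_HOST and THRIFT_PORT are illustrative variables, not Spark settings.

# Build the JDBC URL; the port defaults to 10000 as in the connection above.
thrift_jdbc_url() {
  printf 'jdbc:hive2://%s:%s' "$1" "${2:-10000}"
}

# $1 = SQL statement to execute.
run_sql() {
  "${BEELINE:-./beeline}" \
    -u "$(thrift_jdbc_url "${THRIFT_HOST:-ke03}" "${THRIFT_PORT:-10000}")" \
    -e "$1"
}

# Example (not executed here), run from the Spark bin directory:
#   run_sql "show tables;"
```

Keeping the URL construction in its own function makes it easy to point the same script at another host by setting THRIFT_HOST.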