Bidirectional LSTM

1. Theory

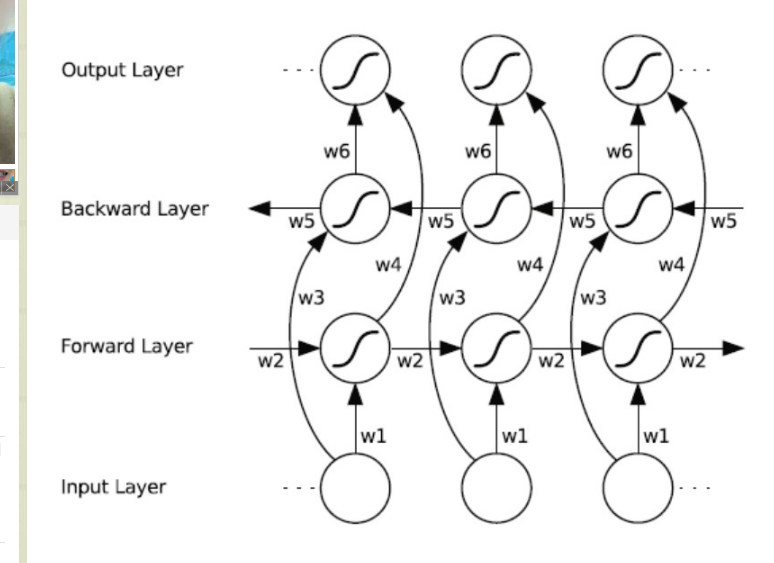

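The basic idea of a bidirectional recurrent neural network (BRNN) is to present each training sequence forwards and backwards to two separate recurrent neural networks (RNNs), both of which are connected to the same output layer.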

This structure gives the output layer complete past and future context for every point in the input sequence.

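Six distinct weights are reused at every time step. They correspond to the connections from the input to the forward and backward hidden layers (w1, w3), from each hidden layer to itself (w2, w5), and from the forward and backward hidden layers to the output layer (w4, w6).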

Note that no information flows between the forward and backward hidden layers, which guarantees that the unrolled graph is acyclic.
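The structure described above can be sketched in NumPy. This is a minimal illustration, not the code used later in this post: it assumes a plain tanh RNN cell instead of an LSTM, no bias terms, and a linear output layer, and the function name `brnn_forward` is hypothetical. The weights w1 to w6 follow the mapping listed above.

```python
import numpy as np


def brnn_forward(x, w1, w2, w3, w4, w5, w6):
    """Forward pass of a minimal tanh bidirectional RNN (hypothetical helper, no biases).

    x: [T, n_in] input sequence; returns [T, n_out] outputs.
    w1: input -> forward hidden      w2: forward hidden -> itself
    w3: input -> backward hidden     w5: backward hidden -> itself
    w4: forward hidden -> output     w6: backward hidden -> output
    """
    T, n_h = x.shape[0], w2.shape[0]
    h_fw = np.zeros((T, n_h))
    h_bw = np.zeros((T, n_h))

    h = np.zeros(n_h)
    for t in range(T):                  # forward layer runs t = 0 .. T-1
        h = np.tanh(x[t] @ w1 + h @ w2)
        h_fw[t] = h

    h = np.zeros(n_h)
    for t in reversed(range(T)):        # backward layer runs t = T-1 .. 0
        h = np.tanh(x[t] @ w3 + h @ w5)
        h_bw[t] = h

    # The two hidden layers never feed into each other; they only meet at the output.
    return h_fw @ w4 + h_bw @ w6


rng = np.random.default_rng(0)
n_in, n_h, n_out, T = 3, 4, 2, 5
w1, w3 = rng.normal(scale=0.5, size=(n_in, n_h)), rng.normal(scale=0.5, size=(n_in, n_h))
w2, w5 = rng.normal(scale=0.5, size=(n_h, n_h)), rng.normal(scale=0.5, size=(n_h, n_h))
w4, w6 = rng.normal(scale=0.5, size=(n_h, n_out)), rng.normal(scale=0.5, size=(n_h, n_out))

x = rng.normal(size=(T, n_in))
y = brnn_forward(x, w1, w2, w3, w4, w5, w6)
print(y.shape)  # (5, 2)

# Perturb only the LAST input: the output at t = 0 changes as well,
# because the backward layer carries future context back to earlier steps.
x2 = x.copy()
x2[-1] += 1.0
y2 = brnn_forward(x2, w1, w2, w3, w4, w5, w6)
```

Zeroing w6 removes the backward path; the output at t = 0 then no longer reacts to the last input, which is exactly the future context the backward layer supplies.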

2. Code

```python
#!/usr/bin/env python3
# encoding: utf-8
'''
@author: bigcome
@desc:
@time: 2018/12/5 9:04
'''
# Requires TensorFlow 1.x: tf.contrib and tensorflow.examples.tutorials
# were removed in TensorFlow 2.x.
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# Prepare the dataset
mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# Design the model: set the parameters
learning_rate = 0.001

# Network parameters
# n_steps * n_input is the image itself: each of the 28 rows
# (n_input pixels) is fed in at one of the n_steps time steps.
n_input = 28
n_steps = 28
n_hidden = 512      # hidden layer size (per direction)
n_classes = 10
batch_size = 100    # number of samples per training step
n_batch = mnist.train.num_examples // batch_size  # steps in one epoch
display_step = 10

# tf Graph input
# In [None, n_steps, n_input], None means the size of this dimension is not fixed
x = tf.placeholder(tf.float32, [None, n_steps, n_input])
y = tf.placeholder(tf.float32, [None, n_classes])

# Define weights. Note the dimension: forward and backward outputs are
# concatenated, so the output layer takes 2 * n_hidden inputs.
weights = tf.get_variable("weights", [2 * n_hidden, n_classes], dtype=tf.float32,
                          initializer=tf.random_normal_initializer(mean=0, stddev=1))
biases = tf.get_variable("biases", [n_classes], dtype=tf.float32,
                         initializer=tf.random_normal_initializer(mean=0, stddev=1))


def BiRNN(x, weights, biases):
    # x: [batch_size, n_steps, n_input]
    # after transpose: [n_steps, batch_size, n_input]
    x = tf.transpose(x, [1, 0, 2])
    # reshape to [n_steps * batch_size, n_input]
    x = tf.reshape(x, [-1, n_input])
    # split into a list of n_steps tensors, each [batch_size, n_input]
    x = tf.split(x, n_steps)

    lstm_fw_cell = tf.contrib.rnn.BasicLSTMCell(n_hidden, forget_bias=0.8)
    lstm_bw_cell = tf.contrib.rnn.BasicLSTMCell(n_hidden, forget_bias=0.8)
    # output: list of n_steps tensors, each [batch_size, 2 * n_hidden]
    # (forward and backward outputs concatenated along the last axis)
    output, _, _ = tf.contrib.rnn.static_bidirectional_rnn(
        lstm_fw_cell, lstm_bw_cell, x, dtype=tf.float32)
    # Classify from the last time step. The backward half of output[-1]
    # has only processed the last row of the image.
    return tf.matmul(output[-1], weights) + biases


# Alternative bi-LSTM built with bidirectional_dynamic_rnn
def Bilstm(x, weights, biases):
    lstm_fw_cell = tf.nn.rnn_cell.BasicLSTMCell(n_hidden, forget_bias=1.0)
    lstm_bw_cell = tf.nn.rnn_cell.BasicLSTMCell(n_hidden, forget_bias=1.0)
    # Passing dtype instead of zero_state(batch_size, ...) keeps the batch size
    # flexible, so the 10000-image test set can also be fed in one run.
    outputs, _ = tf.nn.bidirectional_dynamic_rnn(lstm_fw_cell, lstm_bw_cell, x,
                                                 dtype=tf.float32)
    # outputs is a tuple (output_fw, output_bw), each [batch_size, n_steps, n_hidden].
    # Concatenate the forward and backward outputs: [batch_size, n_steps, 2 * n_hidden]
    outputs = tf.concat(outputs, 2)
    # -> [n_steps, batch_size, 2 * n_hidden], so outputs[-1] is the last time step
    outputs = tf.transpose(outputs, [1, 0, 2])
    output = tf.add(tf.matmul(outputs[-1], weights), biases)  # note the dimensions
    return output


prediction = BiRNN(x, weights, biases)
# prediction = Bilstm(x, weights, biases)

cross_entropy = tf.reduce_mean(
    tf.nn.softmax_cross_entropy_with_logits(logits=prediction, labels=y))
optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cross_entropy)
correct_prediction = tf.equal(tf.argmax(prediction, 1), tf.argmax(y, 1))
accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

init = tf.global_variables_initializer()

with tf.Session() as sess:
    sess.run(init)
    step = 0
    # n_batch steps = one pass (epoch) over the training set
    while step < n_batch:
        batch_x, batch_y = mnist.train.next_batch(batch_size)
        # batch_x must have the same shape as the placeholder x
        batch_x = batch_x.reshape((batch_size, n_steps, n_input))
        sess.run(optimizer, feed_dict={x: batch_x, y: batch_y})
        if step % display_step == 0:
            loss, acc = sess.run([cross_entropy, accuracy],
                                 feed_dict={x: batch_x, y: batch_y})
            # "Iter" counts training samples seen so far
            print("Iter " + str(step * batch_size) + ", Minibatch Loss= " +
                  "{:.6f}".format(loss) + ", Training Accuracy= " +
                  "{:.5f}".format(acc))
        step += 1
    print("Optimization Finished!")

    test_len = 10000
    test_data = mnist.test.images[:test_len].reshape((-1, n_steps, n_input))
    test_label = mnist.test.labels[:test_len]
    print("Testing Accuracy:", sess.run(accuracy, feed_dict={x: test_data, y: test_label}))
```
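The tensor reshaping in the code above is the easiest part to get wrong. The following NumPy sketch (NumPy standing in for TensorFlow, with a hypothetical batch of 4 images and random arrays in place of real LSTM outputs) repeats the transpose/reshape/split steps from `BiRNN` and the concat/transpose/last-step steps from `Bilstm`, printing the shapes along the way.

```python
import numpy as np

batch_size, n_steps, n_input, n_hidden = 4, 28, 28, 8  # small hypothetical sizes

# MNIST images arrive as flat 784-vectors; reshape to [batch, rows, cols]
images = np.arange(batch_size * 784, dtype=np.float32).reshape(batch_size, 784)
x = images.reshape((batch_size, n_steps, n_input))

# Same steps as BiRNN: transpose -> reshape -> split
seq = np.transpose(x, (1, 0, 2))    # [n_steps, batch, n_input]
seq = seq.reshape(-1, n_input)      # [n_steps * batch, n_input]
seq = np.split(seq, n_steps)        # n_steps arrays, each [batch, n_input]
print(len(seq), seq[0].shape)       # 28 (4, 28)

# Time step t holds row t of every image in the batch
assert all(np.array_equal(seq[t], x[:, t, :]) for t in range(n_steps))

# Output side of Bilstm; random arrays stand in for the real LSTM outputs
rng = np.random.default_rng(0)
out_fw = rng.random((batch_size, n_steps, n_hidden))
out_bw = rng.random((batch_size, n_steps, n_hidden))
outputs = np.concatenate((out_fw, out_bw), axis=2)  # [batch, n_steps, 2 * n_hidden]
last = np.transpose(outputs, (1, 0, 2))[-1]         # [batch, 2 * n_hidden]
print(last.shape)                                   # (4, 16)
```

The last shape, `2 * n_hidden` columns, is why `weights` is declared as `[2 * n_hidden, n_classes]`.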
