A Hands-On Guide to Building a Real-Time Log Analysis Platform

Background

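This guide builds a real-time log analysis platform on the ELK stack (Elasticsearch, Logstash, Kibana), with Filebeat collecting the logs and Kafka buffering them.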

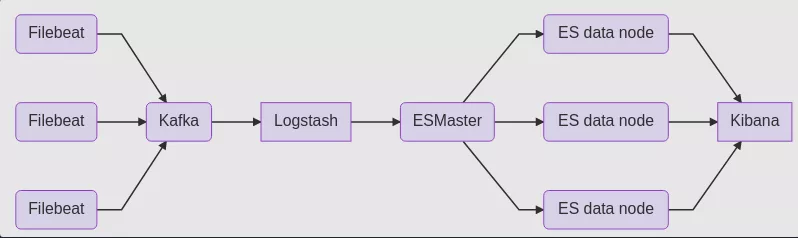

Architecture

Download

- filebeat: https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.13.1-linux-x86_64.tar.gz

- kafka: https://downloads.apache.org/kafka/2.8.0/kafka_2.12-2.8.0.tgz

- elasticsearch: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.13.2-linux-x86_64.tar.gz

- logstash: https://artifacts.elastic.co/downloads/logstash/logstash-7.13.2-linux-x86_64.tar.gz

- kibana: https://artifacts.elastic.co/downloads/kibana/kibana-7.13.2-linux-x86_64.tar.gz

# Download the archives

wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.13.1-linux-x86_64.tar.gz

wget https://downloads.apache.org/kafka/2.8.0/kafka_2.12-2.8.0.tgz

wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.13.2-linux-x86_64.tar.gz

wget https://artifacts.elastic.co/downloads/logstash/logstash-7.13.2-linux-x86_64.tar.gz

wget https://artifacts.elastic.co/downloads/kibana/kibana-7.13.2-linux-x86_64.tar.gz

# Extract every archive (the Kafka package ends in .tgz, so match both suffixes)

ls *.tar.gz *.tgz | xargs -n1 tar xzvf
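The Kafka archive ends in .tgz rather than .tar.gz, so a glob on *.tar.gz alone would skip it. Below is a minimal sketch of an extraction helper that covers both suffixes and stops on the first failure. The function name `extract_all` is made up for illustration and is not part of any of the tools above.

```shell
#!/bin/sh
# Extract every .tar.gz and .tgz archive found in a directory (default: current one).
# extract_all is a hypothetical helper name used only for this sketch.
extract_all() {
    dir="${1:-.}"
    for archive in "$dir"/*.tar.gz "$dir"/*.tgz; do
        # An unmatched glob stays literal, so skip anything that is not a regular file.
        [ -f "$archive" ] || continue
        echo "extracting $archive"
        # Unpack next to the archive; abort on the first archive that fails.
        tar xzf "$archive" -C "$dir" || return 1
    done
}
```

Calling `extract_all /home/mikey/Downloads/ELK` would unpack all five packages in that directory, assuming that is where they were downloaded.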

# Change the owner of the filebeat directory to root

sudo chown -hR root /home/mikey/Downloads/ELK/filebeat-7.13.1-linux-x86_64

Installation

Kafka

nohup ./bin/zookeeper-server-start.sh config/zookeeper.properties &

nohup ./bin/kafka-server-start.sh config/server.properties &
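Both commands return immediately because they run in the background, yet Kafka needs ZooKeeper to be up before it can start. One way to handle this is to poll the log output until a startup message appears. The sketch below is an assumption-laden helper, not part of Kafka: the name `wait_for_log` is invented, and the exact log wording you wait for should be checked against your own nohup.out.

```shell
#!/bin/sh
# Poll a log file until a fixed string appears or the timeout (in seconds) expires.
# wait_for_log is a hypothetical helper; the patterns passed to it are assumptions
# about what the service prints, so verify them against your own log output.
wait_for_log() {
    log_file="$1"
    pattern="$2"
    timeout="${3:-60}"
    elapsed=0
    while :; do
        # -F treats the pattern as a literal string, -q suppresses output.
        if [ -f "$log_file" ] && grep -qF -- "$pattern" "$log_file"; then
            return 0
        fi
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep 1
        elapsed=$((elapsed + 1))
    done
}
```

For example, `wait_for_log nohup.out "started (kafka.server.KafkaServer)" 60` would block until the broker reports that it has started, assuming that is the line your Kafka version writes.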

Elasticsearch

./bin/elasticsearch -d

Kibana

./bin/kibana &

Filebeat

1. List the available modules

./filebeat modules list

2. Enable the modules you want to collect

./filebeat modules enable system nginx mysql

3. Set the log file paths by editing the filebeat.yml configuration file

# Configure output to Kafka

output.kafka:

  # initial brokers for reading cluster metadata

  hosts: ["kafka1:9092", "kafka2:9092", "kafka3:9092"]

  # message topic selection + partitioning

  topic: collect_log_topic

  partition.round_robin:

    reachable_only: false

  required_acks: 1

  compression: gzip

  max_message_bytes: 1000000
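Filebeat accepts only one active output, and the stock filebeat.yml ships with output.elasticsearch enabled, so that section has to be commented out once output.kafka is added. The sketch below counts the active top-level output sections in a config file. The function names are invented for illustration, and the check is deliberately simple: it looks only at uncommented `output.*:` lines and ignores any `enabled: false` setting inside a section.

```shell
#!/bin/sh
# Print the top-level output.* sections that are not commented out in a Filebeat config.
# enabled_outputs and check_single_output are hypothetical helper names.
enabled_outputs() {
    # Match lines such as "output.kafka:" that start in column 1, then drop the colon.
    grep -E '^output\.[a-z_]+:' "$1" | sed 's/:.*$//'
}

# Succeed only if exactly one output section is active.
check_single_output() {
    count=$(enabled_outputs "$1" | wc -l | tr -d ' ')
    [ "$count" -eq 1 ]
}
```

Running `check_single_output filebeat.yml || enabled_outputs filebeat.yml` prints the conflicting sections when more than one is active. Filebeat's own `./filebeat test config` command is a more thorough check of the whole file.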

4. Fix file ownership and start Filebeat

sudo chown root filebeat.yml

sudo chown root modules.d/system.yml

sudo ./filebeat -e

5. Load the Kibana dashboards

./filebeat setup --dashboards

Logstash

1. Configuration file

input {

  kafka {

    type => "ad"

    bootstrap_servers => "127.0.0.1:9092,114.118.13.66:9093,114.118.13.66:9094"

    client_id => "es_ad"

    group_id => "es_ad"

    auto_offset_reset => "latest" # start consuming from the latest offset

    consumer_threads => 5

    decorate_events => true # also attach Kafka metadata (topic, offset, group, partition) to the event

    topics => ["collect_log_topic"] # array; more than one topic can be listed

    tags => ["nginx"]

  }

}

output {

  elasticsearch {

    hosts => ["114.118.10.253:9200"]

    index => "log-%{+YYYY-MM-dd}"

    document_type => "access_log" # deprecated in the 7.x elasticsearch output

    timeout => 300

  }

}

2. Create the data directory

mkdir logs_data_dir

3. Start Logstash

nohup bin/logstash -f config/kafka-logstash-es.conf --path.data=./logs_data_dir 1>/dev/null 2>&1 &
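Because the command discards all output, a quick way to confirm that events are arriving is to ask Elasticsearch how many documents today's index holds. The sketch below rebuilds the index name produced by the pattern log-%{+YYYY-MM-dd}, on the assumption that Logstash formats the date from the event timestamp in UTC. The helper names `today_index` and `count_url` are invented for illustration.

```shell
#!/bin/sh
# Print the index name that the pattern log-%{+YYYY-MM-dd} yields for today.
# Assumption: the date is taken in UTC, hence date -u.
today_index() {
    date -u +log-%Y-%m-%d
}

# Build the _count URL for today's index on a given Elasticsearch host:port.
count_url() {
    printf 'http://%s/%s/_count\n' "$1" "$(today_index)"
}
```

With curl installed, `curl -s "$(count_url 114.118.10.253:9200)"` returns a small JSON document whose count field should grow as Filebeat ships new log lines.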

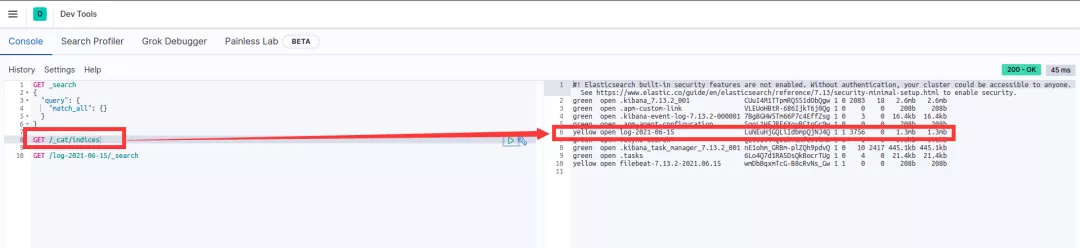

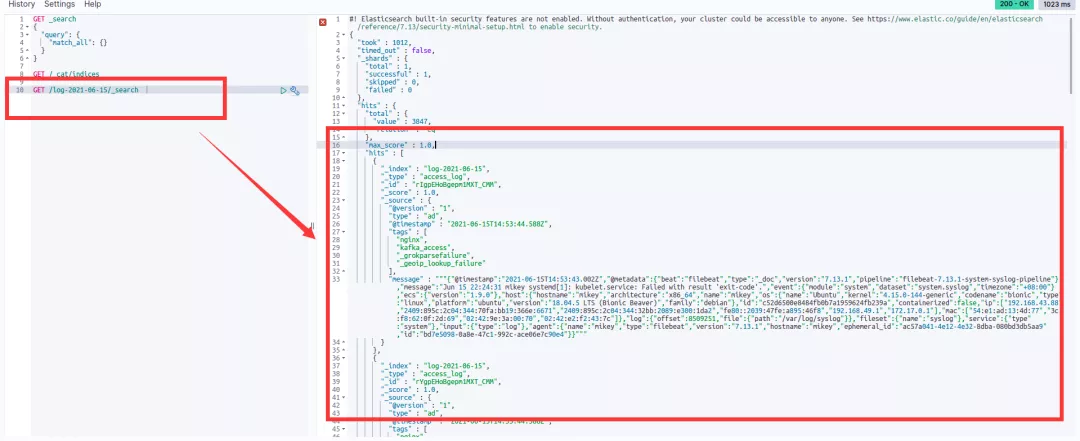

Result

References

Related post: Understanding Filebeat (ELK) in One Article

Official Filebeat documentation: Filebeat Reference

Filebeat output to Kafka: https://www.elastic.co/guide/en/beats/filebeat/current/kafka-output.html
