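Generating an INT8 Calibration File with TensorRT

Required resources

- The model

- 500 to 1000 of the images used to train the model

The model used here is an ONNX model.

Preprocessing

This article follows the sampleSSD project from the TensorRT samples.

Preprocessing converts the 500 images mentioned above into the data format that is read when the calibration file is generated.

First, install ImageMagick, which provides the convert command used by the preprocessing script: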

apt-get install imagemagick
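Because the preprocessing script shells out to ImageMagick, it can help to confirm the tool is on PATH before processing hundreds of images. The following is a minimal sketch, not part of the original script. The helper name `find_imagemagick` is hypothetical, and checking for `magick` as a fallback is an assumption about newer ImageMagick installs.

```python
import shutil


def find_imagemagick():
    """Return the path of the first ImageMagick CLI found on PATH, or None.

    Hypothetical helper: "convert" is the command the preprocessing script
    calls; "magick" is checked as a fallback (an assumption for newer installs).
    """
    for name in ("convert", "magick"):
        path = shutil.which(name)
        if path is not None:
            return path
    return None


if __name__ == "__main__":
    tool = find_imagemagick()
    if tool:
        print("ImageMagick found at:", tool)
    else:
        print("ImageMagick not found; install it before running the script")
```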

The preprocessing script is as follows:

    #
    # Copyright (c) 2020, NVIDIA CORPORATION. All rights reserved.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.
    #

    import numpy as np
    import sys
    import os
    import glob
    import shutil
    import struct
    from random import shuffle

    try:
        from PIL import Image
    except ImportError as err:
        raise ImportError("""ERROR: Failed to import module ({})
    Please make sure you have Pillow installed.
    For installation instructions, see:
    http://pillow.readthedocs.io/en/stable/installation.html""".format(err))

    # Must match the model's input height and width
    height = 640
    width = 640

    NUM_BATCHES = 0
    NUM_PER_BATCH = 1
    NUM_CALIBRATION_IMAGES = 500

    HOME_DIR = '/home/cmhu/data/500images'
    CALIBRATION_DATASET_LOC = HOME_DIR + '/images/*.jpg'

    # images to test
    imgs = []
    print("Location of dataset = " + CALIBRATION_DATASET_LOC)
    imgs = glob.glob(CALIBRATION_DATASET_LOC)
    shuffle(imgs)
    imgs = imgs[:NUM_CALIBRATION_IMAGES]
    NUM_BATCHES = NUM_CALIBRATION_IMAGES // NUM_PER_BATCH + (NUM_CALIBRATION_IMAGES % NUM_PER_BATCH > 0)

    print("Total number of images = " + str(len(imgs)))
    print("NUM_PER_BATCH = " + str(NUM_PER_BATCH))
    print("NUM_BATCHES = " + str(NUM_BATCHES))

    # output
    outDir = HOME_DIR + "/batches"

    if os.path.exists(outDir):
        os.system("rm " + outDir + "/*")

    # prepare output
    if not os.path.exists(outDir):
        os.makedirs(outDir)

    # Convert each image to a PPM of the model's input size.
    # ImageMagick's -resize geometry is WIDTHxHEIGHT; "!" ignores the aspect ratio.
    for i in range(NUM_CALIBRATION_IMAGES):
        os.system("convert " + imgs[i] + " -resize " + str(width) + "x" + str(height) + "! " + outDir + "/" + str(i) + ".ppm")

    CALIBRATION_DATASET_LOC = outDir + '/*.ppm'
    imgs = glob.glob(CALIBRATION_DATASET_LOC)

    # load image, switch to BGR, subtract mean, and make dims C x H x W for Caffe
    img = 0
    for i in range(NUM_BATCHES):
        batchfile = outDir + "/batch_calibration" + str(i) + ".batch"
        batchlistfile = outDir + "/batch_calibration" + str(i) + ".list"
        batchlist = open(batchlistfile, 'a')
        batch = np.zeros(shape=(NUM_PER_BATCH, 3, height, width), dtype=np.float32)
        for j in range(NUM_PER_BATCH):
            batchlist.write(os.path.basename(imgs[img]) + '\n')
            im = Image.open(imgs[img]).resize((width, height), Image.NEAREST)
            in_ = np.array(im, dtype=np.float32, order='C')
            in_ = in_[:, :, ::-1]
            in_ -= np.array((104.0, 117.0, 123.0))
            in_ = in_.transpose((2, 0, 1))
            batch[j] = in_
            img += 1

        # save
        batch.tofile(batchfile)
        batchlist.close()

        # Prepend batch shape information
        ba = bytearray(struct.pack("4i", batch.shape[0], batch.shape[1], batch.shape[2], batch.shape[3]))

        with open(batchfile, 'rb+') as f:
            content = f.read()
            f.seek(0, 0)
            f.write(ba)
            f.write(content)

    # os.system("rm " + outDir + "/*.ppm")

Here height and width must match the input height and width of the model.
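To make the shape requirement concrete, the per-image transform from the script (RGB to BGR, mean subtraction, H x W x C to C x H x W) can be tried on a synthetic image. This is a minimal sketch. The function name `to_chw_bgr` and the all-red test image are illustrative assumptions, while the mean values are the ones used in the script.

```python
import numpy as np

# Per-channel means in BGR order, as used in the preprocessing script
MEAN_BGR = np.array((104.0, 117.0, 123.0), dtype=np.float32)


def to_chw_bgr(image_hwc_rgb):
    """Convert an H x W x 3 RGB image to a mean-subtracted 3 x H x W BGR array."""
    arr = np.asarray(image_hwc_rgb, dtype=np.float32)
    arr = arr[:, :, ::-1]            # RGB -> BGR
    arr = arr - MEAN_BGR             # subtract the per-channel mean
    return np.ascontiguousarray(arr.transpose(2, 0, 1))  # HWC -> CHW


height, width = 640, 640             # must equal the model's input size
dummy = np.zeros((height, width, 3), dtype=np.uint8)
dummy[..., 0] = 255                  # synthetic pure-red image (RGB order)

out = to_chw_bgr(dummy)
print(out.shape)                     # (3, 640, 640)
```

If the printed shape differs from the model's input shape without the batch dimension, the batch files will not match what the engine builder expects.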

Also adjust CALIBRATION_DATASET_LOC and outDir (both derived from HOME_DIR in the script) to match your own paths.
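Before moving on, it may be worth checking that a generated .batch file has the layout the script writes: four int32 values (N, C, H, W) followed by the float32 data. The following is a minimal sketch. The helper name `read_batch_file` and the tiny synthetic batch are assumptions for illustration; in practice the path would point at a file under outDir.

```python
import os
import struct
import tempfile

import numpy as np

HEADER_BYTES = 16  # four int32 values: N, C, H, W


def read_batch_file(path):
    """Read one .batch file: a 4 x int32 shape header followed by float32 data."""
    with open(path, "rb") as f:
        n, c, h, w = struct.unpack("4i", f.read(HEADER_BYTES))
        payload = f.read()
    expected = n * c * h * w
    if len(payload) < expected * 4:
        raise ValueError("%s: expected %d floats, found %d"
                         % (path, expected, len(payload) // 4))
    data = np.frombuffer(payload, dtype=np.float32, count=expected)
    return data.reshape(n, c, h, w)


# Round-trip demo on a tiny synthetic batch written the same way as the script
demo = np.arange(1 * 3 * 4 * 4, dtype=np.float32).reshape(1, 3, 4, 4)
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "batch_calibration0.batch")
    with open(demo_path, "wb") as f:
        f.write(struct.pack("4i", *demo.shape))
        f.write(demo.tobytes())
    loaded = read_batch_file(demo_path)

print(loaded.shape)
```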

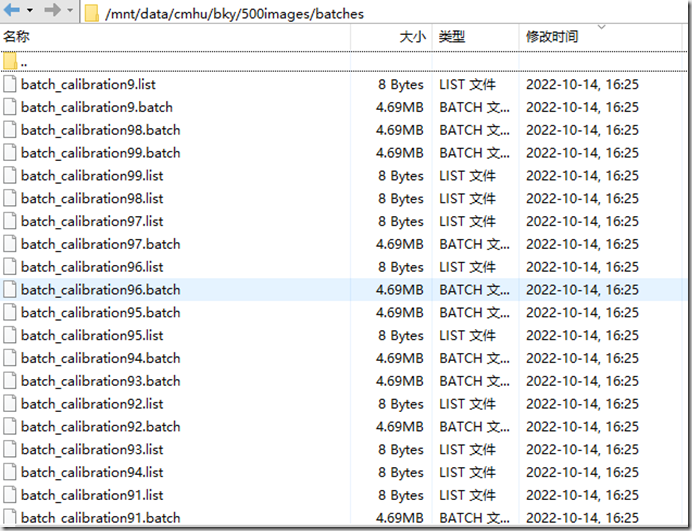

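An example of the preprocessing output:

Generating the calibration file

Generating the calibration file only requires buildEngine to succeed; no inference is needed.

Below is sample code for generating the INT8 calibration file, adapted from a complete ONNX inference program, followed by notes on the code: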

    #include "buffers.h"
    #include "common.h"
    #include "logger.h"
    #include "parserOnnxConfig.h"

    #include "NvInfer.h"

    #include <algorithm>
    #include <cmath>
    #include <cuda_runtime_api.h>
    #include <fstream>
    #include <iostream>
    #include <memory>
    #include <sstream>
    #include <string>
    #include <assert.h>
    #include <stdio.h>
    #include <vector>
    #include <dirent.h>

    #include "EntropyCalibrator.h"

    using namespace std;

    class VPTBatchStream : public IBatchStream
    {
    public:
        VPTBatchStream(
            int batchSize, int maxBatches, std::string prefix, std::string suffix, std::vector<std::string> directories)
            : mBatchSize(batchSize)
            , mMaxBatches(maxBatches)
            , mPrefix(prefix)
            , mSuffix(suffix)
            , mDataDir(directories)
        {
            string filepath = locateFile(mPrefix + std::string("0") + mSuffix, mDataDir);
            cout << filepath << endl;
            FILE* file = fopen(filepath.c_str(), "rb");
            assert(file != nullptr);
            int d[4];
            size_t readSize = fread(d, sizeof(int), 4, file);
            assert(readSize == 4);
            mDims.nbDims = 4;  // The number of dimensions.
            mDims.d[0] = d[0]; // Batch Size
            mDims.d[1] = d[1]; // Channels
            mDims.d[2] = d[2]; // Height
            mDims.d[3] = d[3]; // Width
            assert(mDims.d[0] > 0 && mDims.d[1] > 0 && mDims.d[2] > 0 && mDims.d[3] > 0);
            fclose(file);

            mImageSize = mDims.d[1] * mDims.d[2] * mDims.d[3];
            mBatch.resize(mBatchSize * mImageSize, 0);
            mLabels.resize(mBatchSize, 0);
            mFileBatch.resize(mDims.d[0] * mImageSize, 0);
            mFileLabels.resize(mDims.d[0], 0);
            reset(0);

            cout << mDims.d[1] << " " << mDims.d[2] << " " << mDims.d[3] << endl;
        }

        VPTBatchStream(int batchSize, int maxBatches, std::string prefix, std::vector<std::string> directories)
            : VPTBatchStream(batchSize, maxBatches, prefix, ".batch", directories)
        {
        }

        VPTBatchStream(
            int batchSize, int maxBatches, nvinfer1::Dims dims, std::string listFile, std::vector<std::string> directories)
            : mBatchSize(batchSize)
            , mMaxBatches(maxBatches)
            , mDims(dims)
            , mListFile(listFile)
            , mDataDir(directories)
        {
            mImageSize = mDims.d[1] * mDims.d[2] * mDims.d[3];
            mBatch.resize(mBatchSize * mImageSize, 0);
            mLabels.resize(mBatchSize, 0);
            mFileBatch.resize(mDims.d[0] * mImageSize, 0);
            mFileLabels.resize(mDims.d[0], 0);
            reset(0);
        }

        // Resets data members
        void reset(int firstBatch) override
        {
            mBatchCount = 0;
            mFileCount = 0;
            mFileBatchPos = mDims.d[0];
            skip(firstBatch);
        }

        // Advance to next batch and return true, or return false if there is no batch left.
        bool next() override
        {
            if (mBatchCount == mMaxBatches)
            {
                return false;
            }

            for (int csize = 1, batchPos = 0; batchPos < mBatchSize; batchPos += csize, mFileBatchPos += csize)
            {
                assert(mFileBatchPos > 0 && mFileBatchPos <= mDims.d[0]);
                if (mFileBatchPos == mDims.d[0] && !update())
                {
                    return false;
                }

                // copy the smaller of: elements left to fulfill the request, or elements left in the file buffer.
                csize = std::min(mBatchSize - batchPos, mDims.d[0] - mFileBatchPos);
                std::copy_n(
                    getFileBatch() + mFileBatchPos * mImageSize, csize * mImageSize, getBatch() + batchPos * mImageSize);
                std::copy_n(getFileLabels() + mFileBatchPos, csize, getLabels() + batchPos);
            }
            mBatchCount++;
            return true;
        }

        // Skips the batches
        void skip(int skipCount) override
        {
            if (mBatchSize >= mDims.d[0] && mBatchSize % mDims.d[0] == 0 && mFileBatchPos == mDims.d[0])
            {
                mFileCount += skipCount * mBatchSize / mDims.d[0];
                return;
            }

            int x = mBatchCount;
            for (int i = 0; i < skipCount; i++)
            {
                next();
            }
            mBatchCount = x;
        }

        float* getBatch() override
        {
            return mBatch.data();
        }

        float* getLabels() override
        {
            return mLabels.data();
        }

        int getBatchesRead() const override
        {
            return mBatchCount;
        }

        int getBatchSize() const override
        {
            return mBatchSize;
        }

        nvinfer1::Dims getDims() const override
        {
            return mDims;
        }

    private:
        float* getFileBatch()
        {
            return mFileBatch.data();
        }

        float* getFileLabels()
        {
            return mFileLabels.data();
        }

        bool update()
        {
            if (mListFile.empty())
            {
                // Read the next pre-built .batch file: 4 x int header, then float data
                std::string inputFileName = locateFile(mPrefix + std::to_string(mFileCount++) + mSuffix, mDataDir);
                FILE* file = fopen(inputFileName.c_str(), "rb");
                if (!file)
                {
                    return false;
                }

                int d[4];
                size_t readSize = fread(d, sizeof(int), 4, file);
                assert(readSize == 4);
                assert(mDims.d[0] == d[0] && mDims.d[1] == d[1] && mDims.d[2] == d[2] && mDims.d[3] == d[3]);
                size_t readInputCount = fread(getFileBatch(), sizeof(float), mDims.d[0] * mImageSize, file);
                assert(readInputCount == size_t(mDims.d[0] * mImageSize));
                size_t readLabelCount = fread(getFileLabels(), sizeof(float), mDims.d[0], file);
                assert(readLabelCount == 0 || readLabelCount == size_t(mDims.d[0]));

                fclose(file);
            }
            else
            {
                // Read PPM images named in the list file and normalize them
                std::vector<std::string> fNames;
                std::ifstream file(locateFile(mListFile, mDataDir), std::ios::binary);
                if (!file)
                {
                    return false;
                }

                sample::gLogInfo << "Batch #" << mFileCount << std::endl;
                file.seekg(((mBatchCount * mBatchSize)) * 7);

                for (int i = 1; i <= mBatchSize; i++)
                {
                    std::string sName;
                    std::getline(file, sName);
                    sName = sName + ".ppm";
                    sample::gLogInfo << "Calibrating with file " << sName << std::endl;
                    fNames.emplace_back(sName);
                }

                mFileCount++;

                const int imageC = 3;
                const int imageH = 640;
                const int imageW = 640;
                std::vector<samplesCommon::PPM<imageC, imageH, imageW>> ppms(fNames.size());
                for (uint32_t i = 0; i < fNames.size(); ++i)
                {
                    readPPMFile(locateFile(fNames[i], mDataDir), ppms[i]);
                }

                std::vector<float> data(samplesCommon::volume(mDims));
                const float scale = 2.0 / 255.0;
                const float bias = 1.0;
                long int volChl = mDims.d[2] * mDims.d[3];

                // Normalize input data
                for (int i = 0, volImg = mDims.d[1] * mDims.d[2] * mDims.d[3]; i < mBatchSize; ++i)
                {
                    for (int c = 0; c < mDims.d[1]; ++c)
                    {
                        for (int j = 0; j < volChl; ++j)
                        {
                            data[i * volImg + c * volChl + j] = scale * float(ppms[i].buffer[j * mDims.d[1] + c]) - bias;
                        }
                    }
                }

                std::copy_n(data.data(), mDims.d[0] * mImageSize, getFileBatch());
            }

            mFileBatchPos = 0;
            return true;
        }

        int mBatchSize{0};
        int mMaxBatches{0};
        int mBatchCount{0};
        int mFileCount{0};
        int mFileBatchPos{0};
        int mImageSize{0};
        std::vector<float> mBatch;         //!< Data for the batch
        std::vector<float> mLabels;        //!< Labels for the batch
        std::vector<float> mFileBatch;     //!< List of image files
        std::vector<float> mFileLabels;    //!< List of label files
        std::string mPrefix;               //!< Batch file name prefix
        std::string mSuffix;               //!< Batch file name suffix
        nvinfer1::Dims mDims;              //!< Input dimensions
        std::string mListFile;             //!< File name of the list of image names
        std::vector<std::string> mDataDir; //!< Directories where the files can be found
    };

    struct InferDeleter
    {
        template <typename T>
        void operator()(T* obj) const
        {
            if (obj)
            {
                obj->destroy();
            }
        }
    };

    template <typename T>
    using SampleUniquePtr = std::unique_ptr<T, samplesCommon::InferDeleter>;

    static auto StreamDeleter = [](cudaStream_t* pStream)
    {
        if (pStream)
        {
            cudaStreamDestroy(*pStream);
            delete pStream;
        }
    };

    inline std::unique_ptr<cudaStream_t, decltype(StreamDeleter)> makeCudaStream()
    {
        std::unique_ptr<cudaStream_t, decltype(StreamDeleter)> pStream(new cudaStream_t, StreamDeleter);
        if (cudaStreamCreateWithFlags(pStream.get(), cudaStreamNonBlocking) != cudaSuccess)
        {
            pStream.reset(nullptr);
        }

        return pStream;
    }

    struct CalibratorParams
    {
        int32_t batchSize{1}; //!< Number of inputs in a batch
        bool int8{false};     //!< Allow running the network in Int8 mode.
        bool fp16{false};     //!< Allow running the network in FP16 mode.
        std::vector<std::string> inputTensorNames;
        std::vector<std::string> outputTensorNames;
        std::string onnxFileName;
        std::string batchDatDir;
        // Tag for the generated calibration file: if networkName is "best",
        // the generated calibration file is named CalibrationTablebest
        std::string networkName;
    };

    bool buildEngine(CalibratorParams mParams)
    {
        // build
        auto builder = SampleUniquePtr<nvinfer1::IBuilder>(nvinfer1::createInferBuilder(sample::gLogger.getTRTLogger()));
        if (!builder)
        {
            return false;
        }

        const auto explicitBatch = 1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH);
        auto network = SampleUniquePtr<nvinfer1::INetworkDefinition>(builder->createNetworkV2(explicitBatch));
        if (!network)
        {
            return false;
        }

        auto config = SampleUniquePtr<nvinfer1::IBuilderConfig>(builder->createBuilderConfig());
        if (!config)
        {
            return false;
        }

        auto parser = SampleUniquePtr<nvonnxparser::IParser>(nvonnxparser::createParser(*network, sample::gLogger.getTRTLogger()));
        if (!parser)
        {
            return false;
        }

        /************** constructNetwork **************/
        auto parsed = parser->parseFromFile(mParams.onnxFileName.c_str(), static_cast<int>(sample::gLogger.getReportableSeverity()));
        if (!parsed)
        {
            return false;
        }

        int32_t nb_inputs = network->getNbInputs();
        if (nb_inputs > 1)
        {
            cout << "warning: nb_inputs > 1" << endl;
        }
        else if (nb_inputs <= 0)
        {
            cout << "error: nb_inputs <= 0" << endl;
            return false;
        }
        Dims dim = network->getInput(0)->getDimensions();

        // Add an IOptimizationProfile that specifies the range of input dimensions.
        // This resolves: Error Code 4: Internal Error (Network has dynamic or shape inputs,
        // but no optimization profile has been defined.)
        IOptimizationProfile* profile = builder->createOptimizationProfile();
        profile->setDimensions(mParams.inputTensorNames[0].c_str(), OptProfileSelector::kMIN, Dims4(1, dim.d[1], dim.d[2], dim.d[3]));
        profile->setDimensions(mParams.inputTensorNames[0].c_str(), OptProfileSelector::kOPT, Dims4(4, dim.d[1], dim.d[2], dim.d[3]));
        profile->setDimensions(mParams.inputTensorNames[0].c_str(), OptProfileSelector::kMAX, Dims4(16, dim.d[1], dim.d[2], dim.d[3]));
        int32_t index = config->addOptimizationProfile(profile);
        if (index < 0)
        {
            cout << "addOptimizationProfile failed !" << endl;
            return false;
        }
        cout << "profile index : " << index << endl;

        if (mParams.fp16)
        {
            config->setFlag(BuilderFlag::kFP16);
        }

        // Declared outside the if-block so it stays alive until the engine is built
        std::unique_ptr<IInt8Calibrator> calibrator;
        if (mParams.int8)
        {
            config->setFlag(BuilderFlag::kINT8);

            std::vector<std::string> dataDirs;
            dataDirs.push_back(mParams.batchDatDir);
            VPTBatchStream calibrationStream(1, 50, "/batch_calibration", dataDirs);
            calibrator.reset(new Int8EntropyCalibrator2<VPTBatchStream>(calibrationStream, 0, mParams.networkName.c_str(), mParams.inputTensorNames[0].c_str()));
            config->setInt8Calibrator(calibrator.get());
        }
        /***************************/

        // CUDA stream used for profiling by the builder.
        auto profileStream = makeCudaStream();
        if (!profileStream)
        {
            return false;
        }
        config->setProfileStream(*profileStream);

        auto mEngine = SampleUniquePtr<nvinfer1::ICudaEngine>(builder->buildEngineWithConfig(*network, *config));
        if (!mEngine)
        {
            return false;
        }

        return true;
    }

    int main()
    {
        cudaSetDevice(3);

        CalibratorParams mParams;
        mParams.onnxFileName = "/mnt/data/cmhu/bky/pytorch_10cls_part_newdata_20210715_yolov5m_hw640/weights/best.onnx";
        mParams.batchDatDir = "/mnt/data/cmhu/bky/500images/batches/";
        mParams.inputTensorNames.push_back("images");
        mParams.outputTensorNames.push_back("output");
        mParams.networkName = "best";
        mParams.int8 = true;
        mParams.fp16 = false;

        if (!buildEngine(mParams))
        {
            cout << "build engine failed !!!" << endl;
            return -1;
        }

        cout << "build engine succeeded !!" << endl;

        return 0;
    }

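The code has two main parts: VPTBatchStream and buildEngine. VPTBatchStream reads the batch data through the required interface, and buildEngine builds the inference engine. The INT8 calibration file is generated while the engine is being built; the core code is: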

    std::unique_ptr<IInt8Calibrator> calibrator;
    if (mParams.int8)
    {
        config->setFlag(BuilderFlag::kINT8);

        std::vector<std::string> dataDirs;
        dataDirs.push_back(mParams.batchDatDir);
        VPTBatchStream calibrationStream(1, 50, "/batch_calibration", dataDirs);
        calibrator.reset(new Int8EntropyCalibrator2<VPTBatchStream>(calibrationStream, 0, mParams.networkName.c_str(), mParams.inputTensorNames[0].c_str()));
        config->setInt8Calibrator(calibrator.get());
    }

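Note that calibrator must not be released early. The config only holds the raw pointer passed to setInt8Calibrator, so the calibrator has to stay alive until buildEngineWithConfig returns; otherwise building the engine fails with an error and no calibration file is generated.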

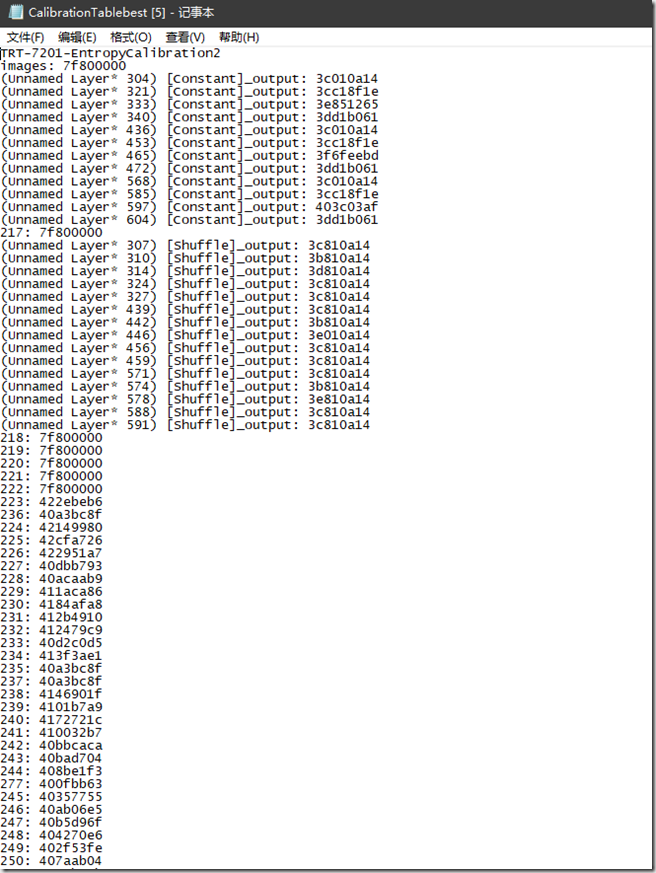
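To make the batch-reading half of the listing more concrete, here is a simplified Python analogue of how the stream hands out batches. It is a sketch under stated assumptions, not a port of VPTBatchStream: the class name `BatchFileStream` and the helper functions are hypothetical, and it assumes one .batch file per requested batch (which matches NUM_PER_BATCH = 1 and a batch size of 1 in the article). It illustrates the two stop conditions: reaching max_batches, or running out of files.

```python
import os
import struct
import tempfile

import numpy as np


class BatchFileStream:
    """Hypothetical, simplified analogue of a batch stream: one file per next()."""

    def __init__(self, directory, max_batches,
                 prefix="batch_calibration", suffix=".batch"):
        self.directory = directory
        self.max_batches = max_batches
        self.prefix = prefix
        self.suffix = suffix
        self.batch_count = 0

    def next(self):
        """Return the next batch as an (N, C, H, W) array, or None when done."""
        if self.batch_count == self.max_batches:
            return None                      # cap reached
        name = self.prefix + str(self.batch_count) + self.suffix
        path = os.path.join(self.directory, name)
        if not os.path.exists(path):
            return None                      # no more batch files
        with open(path, "rb") as f:
            shape = struct.unpack("4i", f.read(16))
            count = int(np.prod(shape))
            data = np.frombuffer(f.read(count * 4), dtype=np.float32)
        self.batch_count += 1
        return data.reshape(shape)


def count_batches(directory, max_batches):
    """Drain a stream and report how many batches it served."""
    stream = BatchFileStream(directory, max_batches)
    served = 0
    while stream.next() is not None:
        served += 1
    return served


def write_demo_batches(directory, how_many):
    """Write tiny synthetic batch files in the script's on-disk layout."""
    for i in range(how_many):
        batch = np.full((1, 3, 2, 2), float(i), dtype=np.float32)
        path = os.path.join(directory, "batch_calibration%d.batch" % i)
        with open(path, "wb") as f:
            f.write(struct.pack("4i", *batch.shape))
            f.write(batch.tobytes())


with tempfile.TemporaryDirectory() as tmp:
    write_demo_batches(tmp, 3)
    capped = count_batches(tmp, 2)       # stops at max_batches
    exhausted = count_batches(tmp, 50)   # stops when the files run out

print(capped, exhausted)                 # 2 3
```

Read this way, the call VPTBatchStream(1, 50, ...) in the listing appears to cap calibration at the first 50 batch files even though 500 were generated; raising the second argument would be the way to use more of them.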

The name of the generated calibration file depends on the networkName parameter; see the comment on networkName in the code.

An example of the generated calibration file:
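For inspecting such a file, the sketch below parses a calibration table into per-tensor scales. It rests on assumptions that should be checked against a real file: that the table is plain text with a header line followed by `tensor_name: hex` lines, and that each hex value is the big-endian bit pattern of a float32 scale. The header string, tensor names, and values in `SAMPLE_TABLE` are made up for illustration, and `parse_calibration_table` is a hypothetical helper.

```python
import struct

# Made-up sample content; a real table is produced by the engine build
SAMPLE_TABLE = """TRT-8001-EntropyCalibration2
images: 3f800000
output: 3e000000
"""


def parse_calibration_table(text):
    """Split a calibration table into its header line and a name -> scale dict.

    Assumes each entry is "name: hex", where hex encodes a big-endian float32.
    """
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    header, entries = lines[0], lines[1:]
    scales = {}
    for ln in entries:
        # rpartition keeps tensor names that themselves contain ":"
        name, _, hex_value = ln.rpartition(":")
        raw = bytes.fromhex(hex_value.strip())
        scales[name.strip()] = struct.unpack("!f", raw)[0]
    return header, scales


header, scales = parse_calibration_table(SAMPLE_TABLE)
print(header)
for tensor_name, scale in scales.items():
    print(tensor_name, scale)
```

With the file name convention described above, the input for a network named best would be read from CalibrationTablebest.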
