Graduation project: implementing a highly available LVS + Keepalived load balancer

Environment

CentOS 6.9, minimal installation

|

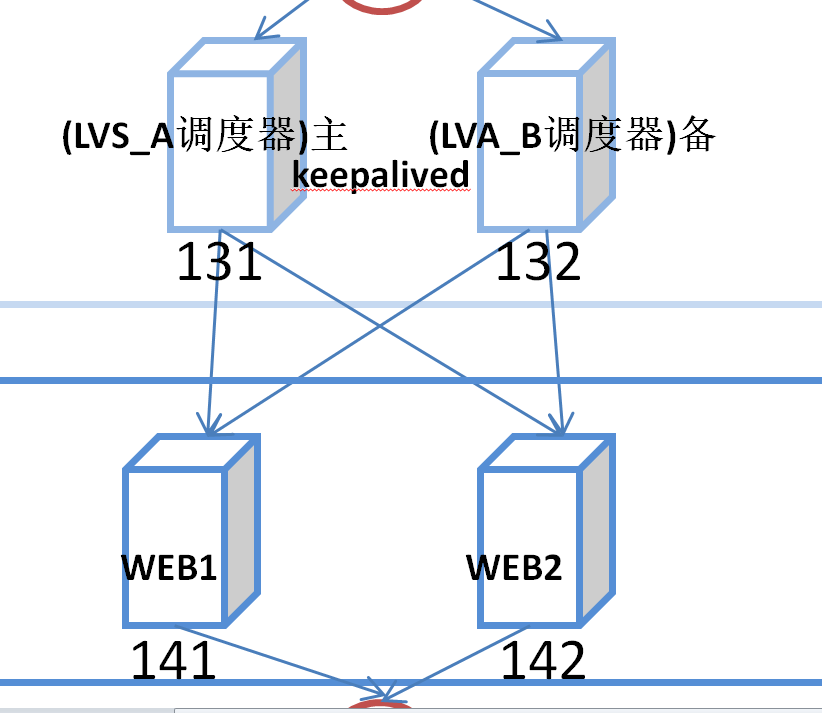

主机名 |

IPADDR |

|

lvsA.load.quan.bbs |

192.168.111.131 |

|

lvsB.load.quan.bbs |

192.168.111.132 |

|

webone.quan.bbs |

192.168.111.141 |

|

webtwo.quan.bbs |

192.168.111.142 |

Steps performed on both lvsA.load.quan.bbs and lvsB.load.quan.bbs:

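[apps@lvsA.load.quan.bbs ~]$yum install -y keepalived ipvsadm
[apps@lvsB.load.quan.bbs ~]$yum install -y keepalived ipvsadm
# keepalived has a built-in IPVS module, so the LVS rules can be defined directly in its configuration file; there is no need to configure them separately with ipvsadm.
# ipvsadm is installed here only to check whether keepalived set up IPVS successfully and what the resulting rules look like.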

Note: the configuration files on A and B are not identical.

Configuration file on LVS_A:

[root@lvsA.load.quan.bbs ~]$vim /etc/keepalived/keepalived.conf

The configuration file in detail:

! Configuration File for keepalived
# Global settings
global_defs {
#   notification_email {
#     acassen@firewall.loc
#     failover@firewall.loc
#     sysadmin@firewall.loc
#   }
#   notification_email_from Alexandre.Cassen@firewall.loc
#   smtp_server 192.168.200.1
#   smtp_connect_timeout 30
    router_id LVS_DEVEL      # identifier of the machine running keepalived; use different values in different clusters
}
# VRRP instance
vrrp_instance VI_1 {
    state MASTER             # initial state of the instance
    interface eth0           # NIC the instance binds to
    virtual_router_id 31     # VRID (1-255); must be identical on master and backup, and must not be reused by another cluster
    priority 100             # the node with the higher priority is elected MASTER; keep it above the BACKUP's 50
    advert_int 1             # interval in seconds between VRRP multicast advertisements
    # authentication
    authentication {
        auth_type PASS       # VRRP authentication type: PASS or AH; the keepalived documentation suggests PASS
        auth_pass 2004       # VRRP password
    }
    virtual_ipaddress {
        192.168.111.100      # the VIP; several may be listed, they are bound when the node takes over
#       192.168.200.17
#       192.168.200.18
#       for more VIPs, add one per line
    }
}

virtual_server 192.168.111.100 80 {
    delay_loop 1             # health-check interval for the real servers: every 1 second
    lb_algo wrr              # LVS scheduler: rr|wrr|lc|wlc|lblc|sh|dh
    lb_kind DR               # forwarding method: NAT|DR|TUN (DR = direct routing)
#   persistence_timeout 50   # session persistence: within this time, requests from the same client IP go to the same real server
    protocol TCP             # protocol of the virtual service
    real_server 192.168.111.141 80 {
        weight 1             # default 1; 0 disables the server
        TCP_CHECK {
            connect_timeout 3      # connection timeout
            nb_get_retry 3         # number of retries
            delay_before_retry 3   # delay between retries
            connect_port 80        # port used for the health check
        }
    }
    real_server 192.168.111.142 80 {
        weight 1
        TCP_CHECK {
            connect_timeout 3
            nb_get_retry 3
            delay_before_retry 3
            connect_port 80
        }
    }
}
# (the stock file's commented-out SSL_GET example and sample virtual_server blocks for 10.10.10.2 and 10.10.10.3 port 1358 follow here; they are inactive and not reproduced)
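With `lb_algo wrr` and both weights set to 1, the two real servers are simply alternated. Unequal weights change that order. The bash sketch below reproduces the classic weighted round-robin loop described in the LVS scheduling documentation so you can see the effect. It is illustrative only: the kernel's ip_vs_wrr module does the real scheduling, and `gcd` and `wrr_sequence` are hypothetical helpers, not part of keepalived or ipvsadm.

```bash
#!/usr/bin/env bash
# Illustrative sketch of LVS-style weighted round-robin (wrr) selection.
# gcd and wrr_sequence are hypothetical helpers, not keepalived/ipvsadm code.

# gcd A B: greatest common divisor of two non-negative integers
gcd() {
    local a=$1 b=$2 t
    while (( b != 0 )); do
        t=$(( a % b ))
        a=$b
        b=$t
    done
    echo "$a"
}

# wrr_sequence N NAME:WEIGHT [NAME:WEIGHT ...]
# Prints the first N picks, space-separated. A weight of 0 is never picked,
# like keepalived's "weight 0 disables the real server".
wrr_sequence() {
    local n=$1
    shift
    local -a names=() weights=()
    local pair w max=0 g=0
    for pair in "$@"; do
        names+=("${pair%:*}")      # everything before the last ':'
        w=${pair##*:}              # the weight after the last ':'
        weights+=("$w")
        if (( w > max )); then max=$w; fi
        g=$(gcd "$g" "$w")
    done
    local count=${#names[@]}
    # nothing to schedule: no servers, or every weight is 0
    if (( count == 0 || max == 0 )); then
        return 1
    fi
    local i=-1 cw=0
    local -a picks=()
    while (( ${#picks[@]} < n )); do
        i=$(( (i + 1) % count ))
        if (( i == 0 )); then
            # one full pass done: lower the current weight by gcd, wrap to max
            cw=$(( cw - g ))
            if (( cw <= 0 )); then cw=$max; fi
        fi
        if (( weights[i] >= cw )); then
            picks+=("${names[i]}")
        fi
    done
    echo "${picks[*]}"
}
```

For example, `wrr_sequence 8 web1:3 web2:1` prints `web1 web1 web1 web2 web1 web1 web1 web2`. With weights 3:1, web1 receives three requests for every one sent to web2.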

Verification:

[root@lvsA.load.quan.bbs ~]$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:2a:c9:ec brd ff:ff:ff:ff:ff:ff
    inet 192.168.111.131/24 brd 192.168.111.255 scope global eth0
    inet 192.168.111.100/32 scope global eth0
    inet6 fe80::20c:29ff:fe2a:c9ec/64 scope link
       valid_lft forever preferred_lft forever
[root@lvsA.load.quan.bbs ~]$ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.111.100:80 wrr
  -> 192.168.111.141:80           Route   1      0          0
  -> 192.168.111.142:80           Route   1      0          0
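The two checks above (the VIP bound on eth0, and the IPVS rules present) can be scripted. This is a minimal sketch that assumes the `ip addr` and `ipvsadm -Ln` output formats shown here. `has_vip` and `ipvs_has_service` are hypothetical helper names.

```bash
#!/usr/bin/env bash
# has_vip VIP: reads `ip addr` output on stdin and succeeds if VIP is
# configured on one of the listed interfaces (hypothetical helper).
has_vip() {
    # fixed-string match on "inet <addr>/" so that 192.168.111.10
    # does not accidentally match 192.168.111.100
    grep -Fq "inet $1/"
}

# ipvs_has_service VIP:PORT: reads `ipvsadm -Ln` output on stdin and
# succeeds if a TCP virtual service for VIP:PORT is listed (hypothetical helper).
ipvs_has_service() {
    awk -v vs="$1" '$1 == "TCP" && $2 == vs { found = 1 } END { exit !found }'
}
```

Typical use on a director: `ip addr show dev eth0 | has_vip 192.168.111.100 && echo "this node holds the VIP"` and `ipvsadm -Ln | ipvs_has_service 192.168.111.100:80`.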

On LVS_B the configuration file differs only slightly. The differences are:

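vrrp_instance VI_1 {
    state BACKUP             # different initial state
    interface eth0           # check which NIC to bind; it may differ from the one used on the MASTER
    virtual_router_id 31     # must be the same as on A
    priority 50              # priority, lower than the MASTER's 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 2004
    }
    # everything else is identical to A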
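These values determine which director becomes MASTER. The sketch below models the VRRP election rule as RFC 3768 defines it: the highest priority wins, and when priorities are equal, the router with the higher primary IP address wins. `ip_to_int` and `vrrp_winner` are hypothetical helpers. The sketch ignores keepalived extras such as `nopreempt`.

```bash
#!/usr/bin/env bash
# Sketch of the VRRP master election rule (RFC 3768): highest priority wins;
# on a tie, the higher primary IP address wins. Hypothetical helpers.

# ip_to_int A.B.C.D: dotted quad -> 32-bit integer
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# vrrp_winner NAME:PRIORITY:IP [NAME:PRIORITY:IP ...]: prints the winner's NAME
vrrp_winner() {
    local entry name prio addr n best="" best_prio=-1 best_addr=-1
    for entry in "$@"; do
        IFS=: read -r name prio addr <<< "$entry"
        n=$(ip_to_int "$addr")
        if (( prio > best_prio || (prio == best_prio && n > best_addr) )); then
            best=$name
            best_prio=$prio
            best_addr=$n
        fi
    done
    echo "$best"
}
```

With the values above, `vrrp_winner lvsA:100:192.168.111.131 lvsB:50:192.168.111.132` prints `lvsA`. Only if both priorities were equal would lvsB, with the higher address, win.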

The VIP does not appear on B yet, because the master has not gone down:


[root@lvsB.load.quan.bbs ~]$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:25:d4:bb brd ff:ff:ff:ff:ff:ff
    inet 192.168.111.132/24 brd 192.168.111.255 scope global eth0
    inet6 fe80::250:56ff:fe25:d4bb/64 scope link
       valid_lft forever preferred_lft forever

Its load-balancing (IPVS) rules, however, are already in place:

[root@lvsB.load.quan.bbs ~]$ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.111.100:80 wrr
  -> 192.168.111.141:80           Route   1      0          0
  -> 192.168.111.142:80           Route   1      0          0

Test by accessing the VIP 192.168.111.100.

The master shows real-time connection counts:

[root@lvsA.load.quan.bbs ~]$ipvsadm -Ln
IP Virtual Server version 1.2.1 (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  192.168.111.100:80 wrr
  -> 192.168.111.141:80           Route   1      2          1
  -> 192.168.111.142:80           Route   1      3          0
ActiveConn: number of active connections
InActConn: number of inactive connections
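The sketch below summarises these counters per real server. It assumes the column layout shown above: on each `->` line, ActiveConn is the 5th field and InActConn the 6th. `conn_summary` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# conn_summary: reads `ipvsadm -Ln` output on stdin and prints one line per
# real server plus a total line (hypothetical helper).
# Real-server lines look like:  -> 192.168.111.141:80  Route  1  2  1
#   $2 = address:port, $5 = ActiveConn, $6 = InActConn
conn_summary() {
    awk '
        $1 == "->" && $2 != "RemoteAddress:Port" {
            printf "%s active=%d inactive=%d\n", $2, $5, $6
            act += $5
            inact += $6
        }
        END { printf "total active=%d inactive=%d\n", act, inact }
    '
}
```

Run `ipvsadm -Ln | conn_summary` on the master. For the output above, it prints one line per real server followed by `total active=5 inactive=1`.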

Simulate a master failure:

[root@lvsA.load.quan.bbs ~]$service keepalived stop

Stopping keepalived: [ OK ]

[root@lvsB.load.quan.bbs ~]$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:25:d4:bb brd ff:ff:ff:ff:ff:ff
    inet 192.168.111.132/24 brd 192.168.111.255 scope global eth0
    inet 192.168.111.100/32 scope global eth0
    inet6 fe80::250:56ff:fe25:d4bb/64 scope link
       valid_lft forever preferred_lft forever
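The VIP 192.168.111.100 now sits on lvsB. To measure roughly how long the takeover takes, you can run a small polling loop on lvsB right after stopping keepalived on lvsA. This is a minimal sketch: `wait_until` is a hypothetical helper with one-second resolution.

```bash
#!/usr/bin/env bash
# wait_until TIMEOUT_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND once per second until it succeeds, then prints the number
# of seconds waited; returns 1 if it still fails after TIMEOUT_SECONDS.
# (hypothetical helper)
wait_until() {
    local timeout=$1 waited=0
    shift
    until "$@"; do
        if (( waited >= timeout )); then
            return 1
        fi
        sleep 1
        waited=$(( waited + 1 ))
    done
    echo "$waited"
}
```

For example, `wait_until 30 sh -c 'ip -4 addr show dev eth0 | grep -q "inet 192.168.111.100/"'` prints how many seconds passed before the VIP appeared on eth0. It gives up after 30 seconds.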

You can also check the log:

vim /var/log/messages

Feb 16 22:16:32 nginx Keepalived[3695]: Stopping Keepalived v1.2.13 (03/19,2015)
Feb 16 22:16:32 nginx Keepalived_vrrp[3698]: VRRP_Instance(VI_1) sending 0 priority
Feb 16 22:16:32 nginx Keepalived_vrrp[3698]: VRRP_Instance(VI_1) removing protocol VIPs.
Feb 16 22:16:32 nginx Keepalived_healthcheckers[3697]: Netlink reflector reports IP 192.168.111.100 removed
Feb 16 22:16:32 nginx Keepalived_healthcheckers[3697]: Removing service [192.168.111.141]:80 from VS [192.168.111.100]:80
Feb 16 22:16:32 nginx Keepalived_healthcheckers[3697]: Removing service [192.168.111.142]:80 from VS [192.168.111.100]:80
Feb 16 22:16:32 nginx kernel: IPVS: __ip_vs_del_service: enter
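The `sending 0 priority` line explains why the switch is quick. On a clean shutdown the master advertises priority 0. Per RFC 3768, a backup that receives this waits only Skew_Time before taking over. If advertisements simply stop (for example, on power loss), it waits the full Master_Down_Interval instead. The sketch below does that arithmetic in integer milliseconds. It assumes a whole-second advert_int, and keepalived's exact timing may differ between versions. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# VRRPv2 timer arithmetic from RFC 3768, in integer milliseconds.
# advert_int is assumed to be a whole number of seconds. Hypothetical helpers.

# vrrp_skew_ms PRIORITY: Skew_Time = (256 - Priority) / 256 seconds
vrrp_skew_ms() {
    echo $(( (256 - $1) * 1000 / 256 ))
}

# vrrp_down_interval_ms ADVERT_INT PRIORITY:
# Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time
vrrp_down_interval_ms() {
    local advert=$1 prio=$2
    echo $(( 3 * advert * 1000 + $(vrrp_skew_ms "$prio") ))
}
```

For lvsB (priority 50, advert_int 1), `vrrp_skew_ms 50` gives 804 and `vrrp_down_interval_ms 1 50` gives 3804. That is about 0.8 s after a clean stop, versus about 3.8 s after a silent failure.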

After the master is restarted:

[root@lvsA.load.quan.bbs ~]$service keepalived start
Starting keepalived: [ OK ]
[root@lvsA.load.quan.bbs ~]$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:2a:c9:ec brd ff:ff:ff:ff:ff:ff
    inet 192.168.111.131/24 brd 192.168.111.255 scope global eth0
    inet 192.168.111.100/32 scope global eth0
    inet6 fe80::20c:29ff:fe2a:c9ec/64 scope link
       valid_lft forever preferred_lft forever

The log makes this even clearer:

Feb 16 22:20:03 nginx Keepalived[3766]: Starting Keepalived v1.2.13 (03/19,2015)
Feb 16 22:20:03 nginx Keepalived[3767]: Starting Healthcheck child process, pid=3769
Feb 16 22:20:03 nginx Keepalived[3767]: Starting VRRP child process, pid=3770
Feb 16 22:20:03 nginx Keepalived_vrrp[3770]: Netlink reflector reports IP 192.168.111.131 added
Feb 16 22:20:03 nginx Keepalived_vrrp[3770]: Netlink reflector reports IP fe80::20c:29ff:fe2a:c9ec added
Feb 16 22:20:03 nginx Keepalived_vrrp[3770]: Registering Kernel netlink reflector
Feb 16 22:20:03 nginx Keepalived_vrrp[3770]: Registering Kernel netlink command channel
Feb 16 22:20:03 nginx Keepalived_vrrp[3770]: Registering gratuitous ARP shared channel
Feb 16 22:20:03 nginx Keepalived_healthcheckers[3769]: Netlink reflector reports IP 192.168.111.131 added
Feb 16 22:20:03 nginx Keepalived_healthcheckers[3769]: Netlink reflector reports IP fe80::20c:29ff:fe2a:c9ec added
Feb 16 22:20:03 nginx Keepalived_healthcheckers[3769]: Registering Kernel netlink reflector
Feb 16 22:20:03 nginx Keepalived_healthcheckers[3769]: Registering Kernel netlink command channel
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: Opening file '/etc/keepalived/keepalived.conf'.
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: Configuration is using : 61855 Bytes
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: Using LinkWatch kernel netlink reflector...
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: VRRP sockpool: [ifindex(2), proto(112), unicast(0), fd(10,11)]
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) Transition to MASTER STATE
Feb 16 22:20:13 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) Received lower prio advert, forcing new election
Feb 16 22:20:14 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) Entering MASTER STATE
Feb 16 22:20:14 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) setting protocol VIPs.
Feb 16 22:20:14 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.111.100
Feb 16 22:20:19 nginx Keepalived_vrrp[3770]: VRRP_Instance(VI_1) Sending gratuitous ARPs on eth0 for 192.168.111.100
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Opening file '/etc/keepalived/keepalived.conf'.
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Configuration is using : 13318 Bytes
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Using LinkWatch kernel netlink reflector...
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Activating healthchecker for service [192.168.111.141]:80
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Activating healthchecker for service [192.168.111.142]:80
Feb 16 22:20:23 nginx Keepalived_healthcheckers[3769]: Netlink reflector reports IP 192.168.111.100 added
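The lines that matter most here are the VRRP state changes. The sketch below filters them out of syslog. It assumes the CentOS 6 `/var/log/messages` line format shown above. `vrrp_transitions` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# vrrp_transitions: reads syslog lines on stdin and keeps only keepalived
# VRRP state changes, printed as "<timestamp> <instance> <event>".
# Assumes the classic "Mon DD HH:MM:SS host prog[pid]: message" format.
# (hypothetical helper)
vrrp_transitions() {
    grep -E 'Keepalived_vrrp\[[0-9]+\]: VRRP_Instance\([^)]+\) (Transition to|Entering) [A-Z]+ STATE' |
        sed -E 's/^([A-Z][a-z]{2} +[0-9]+ [0-9:]{8}) .*VRRP_Instance\(([^)]+)\) (.*)$/\1 \2 \3/'
}
```

Run `vrrp_transitions < /var/log/messages` as root. For the log above, it keeps only `Feb 16 22:20:13 VI_1 Transition to MASTER STATE` and `Feb 16 22:20:14 VI_1 Entering MASTER STATE`.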

Step one: done!

The back-end (real) servers also need to be configured:

[root@webone.quan.bbs ~]$vim /etc/init.d/vip

[root@webone.quan.bbs ~]$chmod +x /etc/init.d/vip

# In DR mode, the gateway of the two real servers (RS) does not need to be set to the director's IP.

#!/bin/bash
# chkconfig: 2345 80 90
# description: vip
# The chkconfig line enables boot-time startup in runlevels 2-5: 80 is the start priority, 90 the stop priority.
VIP=192.168.111.100
case "$1" in
start)
    /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
    /sbin/route add -host $VIP dev lo:0
    echo "1" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "1" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "2" >/proc/sys/net/ipv4/conf/all/arp_announce
    ;;
stop)
    /sbin/ifconfig lo:0 down
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/lo/arp_announce
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_ignore
    echo "0" >/proc/sys/net/ipv4/conf/all/arp_announce
    ;;
*)
    echo "Usage: $0 {start|stop}"
    exit 1
esac
exit 0
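After `service vip start`, you can verify the four ARP settings. This is a minimal sketch: `check_arp_sysctls` is a hypothetical helper. Its optional argument (the sysctl directory, default /proc/sys/net/ipv4/conf) exists only so it can be tried against a scratch directory.

```bash
#!/usr/bin/env bash
# check_arp_sysctls [CONF_DIR]: verify that lo and all have arp_ignore=1 and
# arp_announce=2, as the vip script sets them on "start".
# Prints one line per mismatch and returns 1 if any value is wrong.
# CONF_DIR defaults to /proc/sys/net/ipv4/conf. (hypothetical helper)
check_arp_sysctls() {
    local dir=${1:-/proc/sys/net/ipv4/conf}
    local dev pair key want got rc=0
    for dev in lo all; do
        for pair in arp_ignore=1 arp_announce=2; do
            key=${pair%=*}
            want=${pair#*=}
            got=$(cat "$dir/$dev/$key" 2>/dev/null || true)
            if [ "$got" != "$want" ]; then
                echo "$dev/$key = ${got:-<missing>} (expected $want)"
                rc=1
            fi
        done
    done
    return "$rc"
}
```

On a configured real server, `check_arp_sysctls` prints nothing and returns 0. After `service vip stop`, it reports all four values as 0.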

Kernel parameters explained

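arp_ignore:

0 - (default) reply to ARP requests for any local IP address, received on any interface. For example, with eth0=192.168.0.1/24 and eth1=10.1.1.1/24, eth0 still gives a correct answer if it receives an ARP query from 10.1.1.2 for 10.1.1.1. That request should really have arrived on eth1 and been answered by eth1.
1 - reply only if the target IP address is configured on the interface the request arrived on. With eth0=192.168.0.1/24 and eth1=10.1.1.1/24, a query arriving on eth0 from 10.1.1.2 for 192.168.0.1 is answered, but a query for 10.1.1.1 is not.
2 - reply only if the target IP address is configured on the incoming interface and the sender's IP is in the same subnet as that interface address. With eth0=192.168.0.1/24 and eth1=10.1.1.1/24, a query arriving on eth1 from 10.1.1.2 for 192.168.0.1 is not answered. A query from 192.168.0.2 for 192.168.0.1 (arriving on eth0) is answered.
3 - do not reply for local addresses configured with scope host; only resolutions for global and link addresses are replied.
4-7 - reserved, unused.
8 - do not reply for any local address.

Setting arp_ignore to 1 means that when an ARP request arrives for an IP that is not configured on the receiving interface, the host does not respond. With the default 0, the host responds with its MAC address as long as any of its interfaces holds that IP. On the real servers the VIP lives on lo:0, so with arp_ignore=1 they no longer answer ARP requests for the VIP on eth0. Only the director answers them.

arp_announce:

0 - (default) use any local address, configured on any interface (eth0, eth1, lo), as the source of ARP requests. If the source IP of the outgoing IP packet differs from the address of the interface sending the ARP request, but still belongs to another interface of this host, the ARP request carries the packet's source IP rather than the sending interface's IP.
1 - try to avoid using a source IP that is not in the sending interface's subnet. This helps when the host receiving the ARP request requires the request's source IP to be in its own subnet. The kernel checks whether the packet's source IP lies in the subnet of one of the interfaces, and if so uses that interface for the ARP request. If the source IP is not in any interface's subnet, level 2 is applied.
2 - always use the best local address for the target as the source of the ARP request. The packet's source IP is ignored, and the kernel tries to pick a local address that can reach the target, preferring an address whose subnet contains the target IP. If no suitable address exists, it uses the current interface, or another interface likely to receive the ARP reply, and sets the ARP request's source IP to that interface's address.

With arp_announce=2, the real servers never use the VIP (bound to lo) as the source of their ARP requests. As a result, neighbours never learn a VIP-to-real-server MAC mapping.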
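The arp_ignore modes 0-2 can be modelled in a few lines. That lets you replay the examples above, plus the LVS-DR case (VIP on lo, ARP request arriving on eth0). This is a simplified sketch: it only compares addresses and subnets, and it does not model modes 3 and 8. `ip_to_int`, `in_subnet` and `arp_ignore_reply` are hypothetical helpers, not kernel code.

```bash
#!/usr/bin/env bash
# Simplified model of net.ipv4.conf.*.arp_ignore modes 0-2
# (hypothetical helpers for illustration only).

# ip_to_int A.B.C.D -> 32-bit integer
ip_to_int() {
    local IFS=.
    local -a o
    read -r -a o <<< "$1"
    echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# in_subnet ADDR NET/LEN: succeeds if ADDR lies inside NET/LEN
in_subnet() {
    local addr=$1 net=${2%/*} len=${2#*/}
    local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$addr") & mask) == ($(ip_to_int "$net") & mask) ))
}

# arp_ignore_reply MODE TARGET SENDER IFACE_ADDR/LEN [OTHER_LOCAL_ADDR...]
# IFACE_ADDR/LEN is the interface the request arrived on; OTHER_LOCAL_ADDR
# are addresses on the host's other interfaces (e.g. a VIP on lo).
# Succeeds if the host would answer the ARP request.
arp_ignore_reply() {
    local mode=$1 target=$2 sender=$3 iface=$4
    shift 4
    local iface_addr=${iface%/*} other
    case $mode in
        0)  # any local address, whichever interface holds it
            [ "$target" = "$iface_addr" ] && return 0
            for other in "$@"; do
                [ "$target" = "$other" ] && return 0
            done
            return 1 ;;
        1)  # only addresses of the incoming interface
            [ "$target" = "$iface_addr" ] ;;
        2)  # as 1, and the sender must be in that interface's subnet
            [ "$target" = "$iface_addr" ] && in_subnet "$sender" "$iface" ;;
        *)
            echo "mode $mode is not modelled in this sketch" >&2
            return 2 ;;
    esac
}
```

Consider a real server with eth0 = 192.168.111.141/24 and the VIP on lo, receiving a request from a hypothetical sender 192.168.111.1. `arp_ignore_reply 0 192.168.111.100 192.168.111.1 192.168.111.141/24 192.168.111.100` succeeds, meaning the server would answer for the VIP. The same call with mode 1 fails, which is why the vip script sets arp_ignore=1.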

Verification:

[root@webone.quan.bbs ~]$service vip start
[root@webone.quan.bbs ~]$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
    inet 192.168.111.100/32 brd 192.168.111.100 scope global lo:0
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:50:56:28:34:fd brd ff:ff:ff:ff:ff:ff
    inet 192.168.111.141/24 brd 192.168.111.255 scope global eth0
    inet6 fe80::250:56ff:fe28:34fd/64 scope link
       valid_lft forever preferred_lft forever

Enable at boot:

[root@webone.quan.bbs ~]$chkconfig --add vip

[root@webone.quan.bbs ~]$chkconfig vip on

Configure webtwo in exactly the same way.

Note: a detailed explanation of the keepalived configuration file is given in the last part of the following post:

https://www.cnblogs.com/betterquan/p/11921021.html