Spark统计Hive表主键

数据开发很多时候由于文档缺失等原因导致不记得一个表哪个字段或者某几个字段构成的主键,不清楚主键很麻烦,我们就不清楚怎么去和其他的表进行关联,或者有些公司各种不规范,需求过来连产品自己都不清楚的情况,那么这个时候就只有开发自己去梳理了(苦逼的数据开发),本文主要是写了一个小工具用于根据数据检测出主键。

一.工具思路

工具的大致思路就是传入一个表名,使用desc命令查出所有字段,然后把这些字段拼接到一起,使用rollup命令把数据按照各个字段组合求取条数,最后筛选出条数为1并且分组id最大的就可以了,最后判断每个字段的值是否为空,如果不为空,那么就是主键字段之一,为空就不是。

优化建议:

-

其实像时间戳这些字段是可以去掉的,去掉后组合就会减少,有助于效率提升。

-

字段大部分值为null的也可以进行去除。

二.实现例子

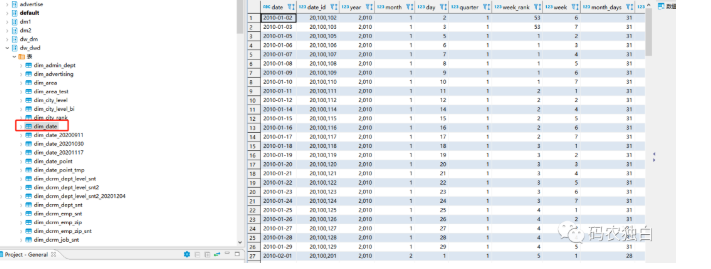

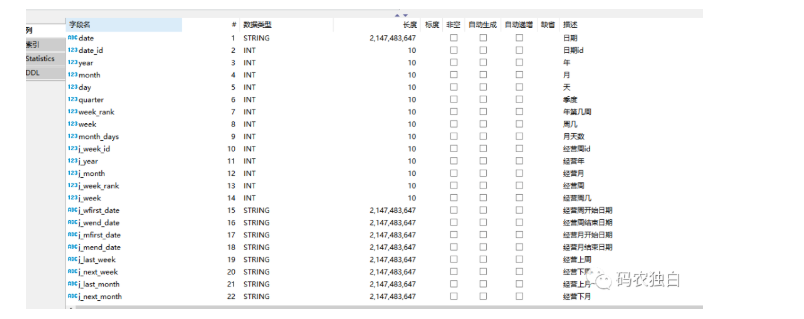

下面以dw_dwd.dim_date日期维度表来进行测试,该表有24个字段,主键是date。

执行spark拼凑出以下sql,首先是找到每组条数为1的sql语句:

select t4.* from(select date,date_id,year,month,day,quarter,week_rank,week,month_days,j_week_id,j_year,j_month,j_week_rank,j_week,j_wfirst_date,j_wend_date,j_mfirst_date,j_mend_date,j_last_week,j_next_week,j_last_month,j_next_month,work_date_code,etl_time,count(*) AS num,GROUPING__ID as test_group_id from dw_dwd.dim_date group by date,date_id,year,month,day,quarter,week_rank,week,month_days,j_week_id,j_year,j_month,j_week_rank,j_week,j_wfirst_date,j_wend_date,j_mfirst_date,j_mend_date,j_last_week,j_next_week,j_last_month,j_next_month,work_date_code,etl_time with ROLLUP) t4 where t4.test_group_id in (select t2.GROUPING__ID from (select max(t1.num) as num,t1.GROUPING__ID from (select date,date_id,year,month,day,quarter,week_rank,week,month_days,j_week_id,j_year,j_month,j_week_rank,j_week,j_wfirst_date,j_wend_date,j_mfirst_date,j_mend_date,j_last_week,j_next_week,j_last_month,j_next_month,work_date_code,etl_time,count(*) AS num,GROUPING__ID from dw_dwd.dim_date group by date,date_id,year,month,day,quarter,week_rank,week,month_days,j_week_id,j_year,j_month,j_week_rank,j_week,j_wfirst_date,j_wend_date,j_mfirst_date,j_mend_date,j_last_week,j_next_week,j_last_month,j_next_month,work_date_code,etl_time with ROLLUP order by GROUPING__ID asc)t1 group by t1.GROUPING__ID)t2 where t2.num=1)

然后是拼凑出分组最大的以及对字段判空的sql:

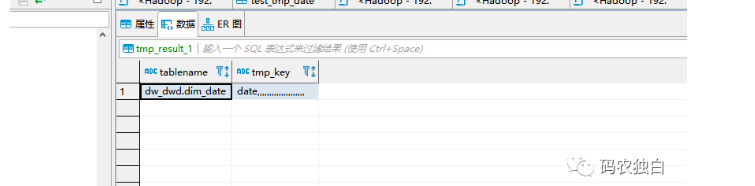

select 'dw_dwd.dim_date' as tableName,concat(if(length(max(date))>0,'date',''),',',if(length(max(date_id))>0,'date_id',''),',',if(length(max(year))>0,'year',''),',',if(length(max(month))>0,'month',''),',',if(length(max(day))>0,'day',''),',',if(length(max(quarter))>0,'quarter',''),',',if(length(max(week_rank))>0,'week_rank',''),',',if(length(max(week))>0,'week',''),',',if(length(max(month_days))>0,'month_days',''),',',if(length(max(j_week_id))>0,'j_week_id',''),',',if(length(max(j_year))>0,'j_year',''),',',if(length(max(j_month))>0,'j_month',''),',',if(length(max(j_week_rank))>0,'j_week_rank',''),',',if(length(max(j_week))>0,'j_week',''),',',if(length(max(j_wfirst_date))>0,'j_wfirst_date',''),',',if(length(max(j_wend_date))>0,'j_wend_date',''),',',if(length(max(j_mfirst_date))>0,'j_mfirst_date',''),',',if(length(max(j_mend_date))>0,'j_mend_date',''),',',if(length(max(j_last_week))>0,'j_last_week',''),',',if(length(max(j_next_week))>0,'j_next_week',''),',',if(length(max(j_last_month))>0,'j_last_month',''),',',if(length(max(j_next_month))>0,'j_next_month',''),',',if(length(max(work_date_code))>0,'work_date_code',''),',',if(length(max(etl_time))>0,'etl_time',''),',') as tmp_key from global_temp.temp_key_dataframe where `test_group_id` in (select max(`test_group_id`) as id from global_temp.temp_key_dataframe) group by `test_group_id`

最后查看结果,只有date字段:

spark源码可关注公众号《码农独白》搜索Spark统计Hive表主键获取:

浙公网安备 33010602011771号

浙公网安备 33010602011771号