CS231n assignment3 Q2 Image Captioning with LSTMs

跟作业1很类似,区别只是在于每个单元的公式不一样

前向过程

def lstm_step_forward(x, prev_h, prev_c, Wx, Wh, b):

"""

Forward pass for a single timestep of an LSTM.

The input data has dimension D, the hidden state has dimension H, and we use

a minibatch size of N.

Note that a sigmoid() function has already been provided for you in this file.

Inputs:

- x: Input data, of shape (N, D)

- prev_h: Previous hidden state, of shape (N, H)

- prev_c: previous cell state, of shape (N, H)

- Wx: Input-to-hidden weights, of shape (D, 4H)

- Wh: Hidden-to-hidden weights, of shape (H, 4H)

- b: Biases, of shape (4H,)

Returns a tuple of:

- next_h: Next hidden state, of shape (N, H)

- next_c: Next cell state, of shape (N, H)

- cache: Tuple of values needed for backward pass.

"""

next_h, next_c, cache = None, None, None

#############################################################################

# TODO: Implement the forward pass for a single timestep of an LSTM. #

# You may want to use the numerically stable sigmoid implementation above. #

#############################################################################

#i = sigmoid(ai),f = sigmoid(af),o = sigmoid(ao),g = tanh(ag)

#c = f * prev_c + i * g

#h = o * tanh(c)

N,H = prev_h.shape

A = x.dot(Wx) + prev_h.dot(Wh) + b #A是拼成的大矩阵的计算下来的结果

ai = A[:,0:H]

af = A[:,H: 2*H]

ao = A[:,2*H : 3*H]

ag = A[:,3*H : 4*H]

i = sigmoid(ai)

f = sigmoid(af)

o = sigmoid(ao)

g = np.tanh(ag)

next_c = np.multiply(f,prev_c) + np.multiply(i,g)

next_h = np.multiply(o,np.tanh(next_c))

cache = (x,prev_h,prev_c,i,f,o,g,Wx,Wh,next_c,A)

##############################################################################

# END OF YOUR CODE #

##############################################################################

return next_h, next_c, cache

next_h error: 5.7054131967097955e-09

next_c error: 5.8143123088804145e-09

后向过程

def lstm_step_backward(dnext_h, dnext_c, cache):

"""

Backward pass for a single timestep of an LSTM.

Inputs:

- dnext_h: Gradients of next hidden state, of shape (N, H)

- dnext_c: Gradients of next cell state, of shape (N, H)

- cache: Values from the forward pass

Returns a tuple of:

- dx: Gradient of input data, of shape (N, D)

- dprev_h: Gradient of previous hidden state, of shape (N, H)

- dprev_c: Gradient of previous cell state, of shape (N, H)

- dWx: Gradient of input-to-hidden weights, of shape (D, 4H)

- dWh: Gradient of hidden-to-hidden weights, of shape (H, 4H)

- db: Gradient of biases, of shape (4H,)

"""

dx, dprev_h, dprev_c, dWx, dWh, db = None, None, None, None, None, None

#############################################################################

# TODO: Implement the backward pass for a single timestep of an LSTM. #

# #

# HINT: For sigmoid and tanh you can compute local derivatives in terms of #

# the output value from the nonlinearity. #

#############################################################################

#利用前边的公式计算即可

N,H = dnext_h.shape

prev_x,prev_h,prev_c,i,f,o,g,Wx,Wh,nc,A = cache

ai = A[:,0:H]

af = A[:,H: 2*H]

ao = A[:,2*H : 3*H]

ag = A[:,3*H : 4*H]

#计算到c_t-1的梯度

dc_c = np.multiply(dnext_c,f)

dc_h_temp = np.multiply(dnext_h,o)

temp = np.ones_like(nc) - np.square(np.tanh(nc))

temp2 = np.multiply(temp,f)

dprev_c = np.multiply(temp2,dc_h_temp) + dc_c

#计算(dE/dh)(dh/dc)

dc_from_h = np.multiply(dc_h_temp,temp)

dtotal_c = dc_from_h + dnext_c

#计算到o,f,i,g的梯度

tempo = np.multiply(np.tanh(nc),dnext_h)

tempf = np.multiply(dtotal_c,prev_c)

tempi = np.multiply(dtotal_c,g)

tempg = np.multiply(dtotal_c,i)

#计算到ao,ai,af,ag的梯度

tempao = np.multiply(tempo,np.multiply(o,np.ones_like(o) - o))

tempai = np.multiply(tempi,np.multiply(i,np.ones_like(i) - i))

tempaf = np.multiply(tempf,np.multiply(f,np.ones_like(f) - f))

dtanhg = np.ones_like(ag) - np.square(np.tanh(ag))

tempag = np.multiply(tempg,dtanhg)

#计算各参数的梯度

Temp = np.concatenate((tempai,tempaf,tempao,tempag),axis = 1)

dx = Temp.dot(Wx.T)

dprev_h = Temp.dot(Wh.T)

xt = prev_x.T

dWx = xt.dot(Temp)

ht = prev_h.T

dWh = ht.dot(Temp)

db = np.sum(Temp,axis = 0).T

##############################################################################

# END OF YOUR CODE #

##############################################################################

return dx, dprev_h, dprev_c, dWx, dWh, db

dx error: 6.335163002532046e-10

dh error: 3.3963774090592634e-10

dc error: 1.5221747946070454e-10

dWx error: 2.1010960934639614e-09

dWh error: 9.712296109943072e-08

db error: 2.491522041931035e-10

前向传播

def lstm_forward(x, h0, Wx, Wh, b):

"""

Forward pass for an LSTM over an entire sequence of data. We assume an input

sequence composed of T vectors, each of dimension D. The LSTM uses a hidden

size of H, and we work over a minibatch containing N sequences. After running

the LSTM forward, we return the hidden states for all timesteps.

Note that the initial cell state is passed as input, but the initial cell

state is set to zero. Also note that the cell state is not returned; it is

an internal variable to the LSTM and is not accessed from outside.

Inputs:

- x: Input data of shape (N, T, D)

- h0: Initial hidden state of shape (N, H)

- Wx: Weights for input-to-hidden connections, of shape (D, 4H)

- Wh: Weights for hidden-to-hidden connections, of shape (H, 4H)

- b: Biases of shape (4H,)

Returns a tuple of:

- h: Hidden states for all timesteps of all sequences, of shape (N, T, H)

- cache: Values needed for the backward pass.

"""

h, cache = None, None

#############################################################################

# TODO: Implement the forward pass for an LSTM over an entire timeseries. #

# You should use the lstm_step_forward function that you just defined. #

#############################################################################

N,T,D = x.shape

N,H = h0.shape

prev_h = h0

#for backward

h3 = np.empty([N,T,H])

h4 = np.empty([N,T,H])

I = np.empty([N,T,H])

F = np.empty([N,T,H])

O = np.empty([N,T,H])

G = np.empty([N,T,H])

NC = np.empty([N,T,H])

AT = np.empty([N,T,4 * H])

h2 = np.empty([N,T,H])

prev_c = np.zeros_like(prev_h)

for i in range(T):

h3[:,i,:] = prev_h

h4[:,i,:] = prev_c

next_h,next_c,cache_temp = lstm_step_forward(x[:,i,:],prev_h,prev_c,Wx,Wh,b)

prev_h = next_h

prev_c = next_c #更新隐藏层状态

h2[:,i,:] = prev_h #用来记录状态

I[:,i,:] = cache_temp[3]

F[:,i,:] = cache_temp[4]

O[:,i,:] = cache_temp[5]

G[:,i,:] = cache_temp[6]

NC[:,i,:] = cache_temp[9]

AT[:,i,:] = cache_temp[10]

cache = (x,h3,h4,I,F,O,G,Wx,Wh,NC,AT)

##############################################################################

# END OF YOUR CODE #

##############################################################################

return h2, cache

h error: 8.610537452106624e-08

后向传播

def lstm_backward(dh, cache):

"""

Backward pass for an LSTM over an entire sequence of data.]

Inputs:

- dh: Upstream gradients of hidden states, of shape (N, T, H)

- cache: Values from the forward pass

Returns a tuple of:

- dx: Gradient of input data of shape (N, T, D)

- dh0: Gradient of initial hidden state of shape (N, H)

- dWx: Gradient of input-to-hidden weight matrix of shape (D, 4H)

- dWh: Gradient of hidden-to-hidden weight matrix of shape (H, 4H)

- db: Gradient of biases, of shape (4H,)

"""

dx, dh0, dWx, dWh, db = None, None, None, None, None

#############################################################################

# TODO: Implement the backward pass for an LSTM over an entire timeseries. #

# You should use the lstm_step_backward function that you just defined. #

#############################################################################

x = cache[0]

N,T,D = x.shape

N,T,H = dh.shape

dWx = np.zeros((D,4*H))

dWh = np.zeros((H,4*H))

db = np.zeros(4*H)

dout = dh

dx = np.empty([N,T,D])

hnow = np.zeros([N,H])

cnow = np.zeros([N,H])

for k in range(T):

i = T-1-k

hnow = hnow + dout[:,i,:]

cacheT = (cache[0][:,i,:],cache[1][:,i,:],cache[2][:,i,:],cache[3][:,i,:],

cache[4][:,i,:],cache[5][:,i,:],cache[6][:,i,:],cache[7],cache[8],cache[9][:,i,:],cache[10][:,i,:])

#对应的从之前的cache中取出当前用的那一个

dx_temp,dprev_h,dprev_c,dWx_temp,dWh_temp,db_temp = lstm_step_backward(hnow,cnow,cacheT)

hnow = dprev_h

cnow = dprev_c

dx[:,i,:] = dx_temp

dWx = dWx + dWx_temp

dWh = dWh + dWh_temp

db = db + db_temp #将当前的梯度加上之前累积的

dh0 = hnow

##############################################################################

# END OF YOUR CODE #

##############################################################################

return dx, dh0, dWx, dWh, db

dx error: 6.993891410911015e-09

dh0 error: 1.5042757150604852e-09

dWx error: 3.226295800444722e-09

dWh error: 2.6984653332291804e-06

db error: 8.236637741088407e-10

计算loss

def loss(self, features, captions):

"""

计算训练时RNN/LSTM的损失函数。我们输入图像特征和正确的图片注释,使用RNN/LSTM计算损失函数和所有参数的梯度

输入:

- features: 输入图像特征,维度 (N, D)

- captions: 正确的图像注释; 维度为(N, T)的整数列

输出一个tuple:

- loss: 标量损失函数值

- grads: 所有参数的梯度

"""

#这里将captions分成了两个部分,captions_in是除了最后一个词外的所有词,是输入到RNN/LSTM的输入;

#captions_out是除了第一个词外的所有词,是RNN/LSTM期望得到的输出。

captions_in = captions[:, :-1]

captions_out = captions[:, 1:]

# You'll need this

mask = (captions_out != self._null)

# 从图像特征到初始隐藏状态的权值矩阵和偏差值

W_proj, b_proj = self.params['W_proj'], self.params['b_proj']

# 词嵌入矩阵

W_embed = self.params['W_embed']

# RNN/LSTM参数

Wx, Wh, b = self.params['Wx'], self.params['Wh'], self.params['b']

# 每一隐藏层到输出的权值矩阵和偏差

W_vocab, b_vocab = self.params['W_vocab'], self.params['b_vocab']

loss, grads = 0.0, {}

############################################################################

# TODO: Implement the forward and backward passes for the CaptioningRNN. #

# In the forward pass you will need to do the following: #

# (1) Use an affine transformation to compute the initial hidden state #

# from the image features. This should produce an array of shape (N, H)#

# (2) Use a word embedding layer to transform the words in captions_in #

# from indices to vectors, giving an array of shape (N, T, W). #

# (3) Use either a vanilla RNN or LSTM (depending on self.cell_type) to #

# process the sequence of input word vectors and produce hidden state #

# vectors for all timesteps, producing an array of shape (N, T, H). #

# (4) Use a (temporal) affine transformation to compute scores over the #

# vocabulary at every timestep using the hidden states, giving an #

# array of shape (N, T, V). #

# (5) Use (temporal) softmax to compute loss using captions_out, ignoring #

# the points where the output word is <NULL> using the mask above. #

# #

# In the backward pass you will need to compute the gradient of the loss #

# with respect to all model parameters. Use the loss and grads variables #

# defined above to store loss and gradients; grads[k] should give the #

# gradients for self.params[k]. #

# #

# Note also that you are allowed to make use of functions from layers.py #

# in your implementation, if needed. #

############################################################################

N,D = features.shape

#(1) 用线性变换从图像特征值得到初始隐藏状态,将产生维度为(N,H)的数列

out,cache_affine = temporal_affine_forward(features.reshape(N,1,D),W_proj,b_proj)

N,T,H = out.shape

h0 = out.reshape(N,H)

#(2) 用词嵌入层将captions_in中词的索引转换成词向量,得到一个维度为(N, T, W)的数列

word_out,cache_word = word_embedding_forward(captions_in,W_embed)

#(3) 用RNN/LSTM处理输入的词向量,产生每一层的隐藏状态,维度为(N,T,H)

if self.cell_type == 'rnn':

hidden,cache_hidden = rnn_forward(word_out,h0,Wx,Wh,b)

else:

hidden,cache_hidden = lstm_forward(word_out,h0,Wx,Wh,b)

#(4) 用线性变换计算每一步隐藏层对应的输出(得分),维度(N, T, V)

out_vo,cache_vo = temporal_affine_forward(hidden,W_vocab,b_vocab)

#(5) 用softmax函数计算损失,真实值为captions_out, 用mask忽视所有向量中<NULL>词汇

loss,dx = temporal_softmax_loss(out_vo[:,:,:],captions_out,mask,verbose = False)

#反向传播,得到对应参数

dx_affine,dW_vocab,db_vocab = temporal_affine_backward(dx,cache_vo)

grads['W_vocab'] = dW_vocab

grads['b_vocab'] = db_vocab

if self.cell_type == 'rnn':

dx_hidden,dh0,dWx,dWh,db = rnn_backward(dx_affine,cache_hidden)

else:

dx_hidden,dh0,dWx,dWh,db = lstm_backward(dx_affine,cache_hidden)

grads['Wx'] = dWx

grads['Wh'] = dWh

grads['b'] = db

dW_embed = word_embedding_backward(dx_hidden,cache_word)

grads['W_embed'] = dW_embed

dx_initial,dW_proj,db_proj = temporal_affine_backward(dh0.reshape(N,T,H),cache_affine)

grads['W_proj'] = dW_proj

grads['b_proj'] = db_proj

############################################################################

# END OF YOUR CODE #

############################################################################

return loss, grads

loss: 9.824459354432264

expected loss: 9.82445935443

difference: 2.2648549702353193e-12

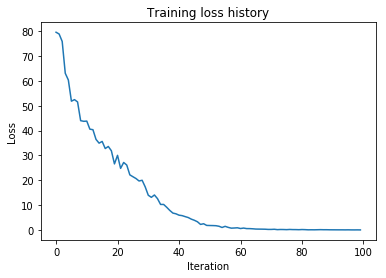

过拟合一个小数据集

(Iteration 1 / 100) loss: 79.551150

(Iteration 11 / 100) loss: 43.829102

(Iteration 21 / 100) loss: 30.062629

(Iteration 31 / 100) loss: 14.020168

(Iteration 41 / 100) loss: 6.004460

(Iteration 51 / 100) loss: 1.850130

(Iteration 61 / 100) loss: 0.640367

(Iteration 71 / 100) loss: 0.280877

(Iteration 81 / 100) loss: 0.231307

(Iteration 91 / 100) loss: 0.120624

sample

def sample(self, features, max_length=30):

"""

Run a test-time forward pass for the model, sampling captions for input

feature vectors.

At each timestep, we embed the current word, pass it and the previous hidden

state to the RNN to get the next hidden state, use the hidden state to get

scores for all vocab words, and choose the word with the highest score as

the next word. The initial hidden state is computed by applying an affine

transform to the input image features, and the initial word is the <START>

token.

For LSTMs you will also have to keep track of the cell state; in that case

the initial cell state should be zero.

输入:

- captions: 输入图像特征,维度 (N, D)

- max_length: 生成的注释的最长长度

输出:

- captions: 采样得到的注释,维度(N, max_length), 每个元素是词汇的索引

"""

N = features.shape[0]

captions = self._null * np.ones((N, max_length), dtype=np.int32)

# Unpack parameters

W_proj, b_proj = self.params['W_proj'], self.params['b_proj']

W_embed = self.params['W_embed']

Wx, Wh, b = self.params['Wx'], self.params['Wh'], self.params['b']

W_vocab, b_vocab = self.params['W_vocab'], self.params['b_vocab']

###########################################################################

# TODO: Implement test-time sampling for the model. You will need to #

# initialize the hidden state of the RNN by applying the learned affine #

# transform to the input image features. The first word that you feed to #

# the RNN should be the <START> token; its value is stored in the #

# variable self._start. At each timestep you will need to do to: #

# (1) Embed the previous word using the learned word embeddings #

# (2) Make an RNN step using the previous hidden state and the embedded #

# current word to get the next hidden state. #

# (3) Apply the learned affine transformation to the next hidden state to #

# get scores for all words in the vocabulary #

# (4) Select the word with the highest score as the next word, writing it #

# (the word index) to the appropriate slot in the captions variable #

# #

# For simplicity, you do not need to stop generating after an <END> token #

# is sampled, but you can if you want to. #

# #

# HINT: You will not be able to use the rnn_forward or lstm_forward #

# functions; you'll need to call rnn_step_forward or lstm_step_forward in #

# a loop. #

# #

# NOTE: we are still working over minibatches in this function. Also if #

# you are using an LSTM, initialize the first cell state to zeros. #

###########################################################################

# (1)用线性变换从图像特征值得到初始隐藏状态,将产生维度为(N,H)的数列

N,D = features.shape

out,cache_affine = temporal_affine_forward(features.reshape(N,1,D),W_proj,b_proj)

N,T,H = out.shape

h0 = out.reshape(N,H)

h = h0

#初始输入

x0 = W_embed[[1,1],:]

x_input = x0

captions[:,0] = [1,1]

prev_c = np.zeros_like(h) # only for lstm

#(2) 执行rnn/lstm步骤

for i in range(max_length - 1):

if self.cell_type == 'rnn':

next_h,_ = rnn_step_forward(x_input,h,Wx,Wh,b)

else:

next_h,next_c,cache = lstm_step_forward(x_input,h,prev_c,Wx,Wh,b)

prev_c = next_c

#(3) 计算每一层的输出

out_vo,cache_vo = temporal_affine_forward(next_h.reshape(N,1,H),W_vocab,b_vocab)

#(4) 找到输出最大值的项作为下一时刻的输入

index = np.argmax(out_vo,axis = 2)

x_input = np.squeeze(W_embed[index,:])

h = next_h

captions[:,i+1] = np.squeeze(index)#记录其索引

############################################################################

# END OF YOUR CODE #

############################################################################

return captions