hive学习(五) 应用案例

1.实现struct数据结构例子

1.1创建student表

create table student( id int, info struct<name:string,age:int> ) row format delimited fields terminated by ',' collection items terminated by ':';

1.2向这个student表中插入数

上传文件到hdfs,并加载hdfs上的文件

上传前 根目录下的目录

1.3上传文件到hdfs根目录

[root@node04 ~]# hdfs dfs -put /root/data2 /

刷新网页

1.4客户端执行上传

hive> load data inpath '/data2' into table student; Loading data to table default.student OK Time taken: 5.294 seconds

根目录下的data2目录移动到远程目录下,改成copy后缀的名称

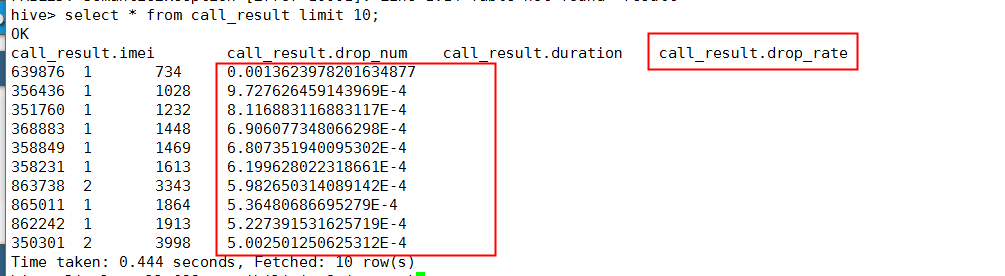

2.基站掉话率,找出掉线率最高的前10名

2.1排名需求

record time:通话时间

imei:基站编号

cell:手机编号

drop_num:掉话的描述

duration:通话持续总秒数

2.2建表call_monitor

create table call_monitor ( record_time string, imei string, cell string, ph_num string, call_num string, drop_num int, duration int, drop_rate double, net_type string, erl string ) row format delimited fields terminated by ','

2.3加载实验数据;

123.txt 实验数据 (有将近10w行数据)

[root@node04 ~]# head -10 123.csv record_time,imei,cell,ph_num,call_num,drop_num,duration,drop_rate,net_type,erl 2011-07-13 00:00:00+08,356966,29448-37062,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,352024,29448-51331,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,353736,29448-51331,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,353736,29448-51333,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,351545,29448-51333,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,353736,29448-51343,1,0,0,8,0,G,0 2011-07-13 00:00:00+08,359681,29448-51462,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,354707,29448-51462,0,0,0,0,0,G,0 2011-07-13 00:00:00+08,356137,29448-51470,0,0,0,0,0,G,0 插入数据到数据库

hive> load data local inpath '/root/123.csv' into table cell_monitor;

由于数据量比较多选择5条查询

hive> select * from call_monitor limit 5; OK record_time imei cell ph_num call_num NULL NULL NULL net_type erl 2011-07-13 00:00:00+08 356966 29448-37062 0 0 0 0 0.0 G 0 2011-07-13 00:00:00+08 352024 29448-51331 0 0 0 0 0.0 G 0 2011-07-13 00:00:00+08 353736 29448-51331 0 0 0 0 0.0 G 0 2011-07-13 00:00:00+08 353736 29448-51333 0 0 0 0 0.0 G 0 Time taken: 5.268 seconds, Fetched: 5 row(s)

三个显示null是因为,类型为两个int和一个double,而字段属性名都是string类型所以识别不了显示null

2.4创建结果表call_result

create table call_result( imei string, drop_num int, duration int, drop_rate double ) row format delimited fields terminated by ',';

从原始表中获取数据插入结果表

from call_monitor cm insert into call_result select cm.imei,sum(cm.drop_num) sdrop,sum(cm.duration) sdura,sum(cm.drop_num)/sum(cm.duration) s_rate group by cm.imei order by s_rate desc;

执行报错:

Starting Job = job_1547478544687_0001, Tracking URL = http://node04:8088/proxy/application_1547478544687_0001/

Kill Command = /opt/hadoop-3.1.1/bin/mapred job -kill job_1547478544687_0001

Hadoop job information for Stage-1: number of mappers: 0; number of reducers: 0

2019-01-14 23:17:47,747 Stage-1 map = 0%, reduce = 0%

java.io.IOException: java.net.ConnectException: Call From node04/10.10.0.14 to node01:10020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefuse

jobhistory的主要功能是记录MapReduce的作业信息,默认情况下是没有启动的,需要配置完后手工启动服务。

./mr-jobhistory-daemon.sh start historyserver [root@node01 sbin]# netstat -lntup|grep 10020 tcp 0 0 10.10.0.11:10020 0.0.0.0:* LISTEN 7129/java

hive> from call_monitor cm insert into call_result select cm.imei,sum(cm.drop_num) sdrop,sum(cm.duration) sdura,sum(cm.drop_num)/sum(cm.duration) s_rate group by cm.imei order by s_rate desc; Query ID = root_20190117012907_30f64bed-26aa-4fa9-89d6-f270f7d5c78d Total jobs = 2 Launching Job 1 out of 2 Number of reduce tasks not specified. Estimated from input data size: 1 In order to change the average load for a reducer (in bytes): set hive.exec.reducers.bytes.per.reducer=<number> In order to limit the maximum number of reducers: set hive.exec.reducers.max=<number> In order to set a constant number of reducers: set mapreduce.job.reduces=<number> Starting Job = job_1547641212223_0010, Tracking URL = http://node03:8088/proxy/application_1547641212223_0010/ Kill Command = /opt/hadoop-3.1.1/bin/mapred job -kill job_1547641212223_0010 Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 1 2019-01-17 01:29:29,142 Stage-1 map = 0%, reduce = 0% 2019-01-17 01:29:49,591 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.96 sec 2019-01-17 01:30:49,868 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 3.96 sec 2019-01-17 01:31:00,064 Stage-1 map = 100%, reduce = 67%, Cumulative CPU 5.73 sec 2019-01-17 01:31:29,873 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 8.47 sec MapReduce Total cumulative CPU time: 8 seconds 470 msec Ended Job = job_1547641212223_0010 Launching Job 2 out of 2 Number of reduce tasks determined at compile time: 1 In order to change the average load for a reducer (in bytes): set hive.exec.reducers.bytes.per.reducer=<number> In order to limit the maximum number of reducers: set hive.exec.reducers.max=<number> In order to set a constant number of reducers: set mapreduce.job.reduces=<number> Starting Job = job_1547641212223_0011, Tracking URL = http://node03:8088/proxy/application_1547641212223_0011/ Kill Command = /opt/hadoop-3.1.1/bin/mapred job -kill job_1547641212223_0011 Hadoop job information for Stage-2: number of mappers: 1; number of reducers: 1 2019-01-17 01:31:44,214 Stage-2 map = 0%, reduce = 0% 2019-01-17 01:31:58,527 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 1.58 sec 2019-01-17 01:32:08,732 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 4.63 sec MapReduce Total cumulative CPU time: 4 seconds 630 msec Ended Job = job_1547641212223_0011 Loading data to table default.call_result MapReduce Jobs Launched: Stage-Stage-1: Map: 1 Reduce: 1 Cumulative CPU: 8.47 sec HDFS Read: 57416114 HDFS Write: 415000 SUCCESS Stage-Stage-2: Map: 1 Reduce: 1 Cumulative CPU: 4.63 sec HDFS Read: 428553 HDFS Write: 264283 SUCCESS Total MapReduce CPU Time Spent: 13 seconds 100 msec OK _col0 _col1 _col2 _col3 Time taken: 188.336 seconds

3.使用hive实现wordcount

3.1.实验wc文件

hello world hive

hadoop hello hive

hello

world

3,2创建wc表

create table wc( word string );

3.3加载数据

load data local inpath '/root/wc' into table wc;

3.4切分数据

select split(word,' ') from wc; select explode(split(word,' ')) from wc;

hive> select split(word,' ') from wc; OK ["hello","world","hive"] ["hadoop","hello","hive"] ["hello"] ["world"] Time taken: 18.56 seconds, Fetched: 4 row(s)

hive> select explode(split(word,' ')) from wc; OK hello world hive hadoop hello hive hello world Time taken: 4.82 seconds, Fetched: 8 row(s)

3.5创建结果表

create table wc_result( word string, ct int ); from (select explode(split(word,' ')) word from wc) t1 insert into wc_result select t1.word,count(t1.word) group by t1.word;

浙公网安备 33010602011771号

浙公网安备 33010602011771号