Mariadb之MHA配置

工作拓扑

一、MHA简明:

MHA(Master HA)是一款开源的MySQL的高可用程序,它为MySQL主从复制架构提供了

automating master failover 功能。MHA在监控到master节点故障时,会提升其中

拥有最新数据的slave节点来成为新的master节点。在此期间,MHA会通过于其它从节点

获取额外信息来避免一致性方面的问题。MHA还提供了master节点的在线切换功能,即按

需切换master/slave节点。

MHA服务有两咱角色。MHA Mangager(管理节点)和MHA Node(数据节点):

MHA Manager:通常单独部署在一台独立机器上管理多个master/slave集群,每个

master/slave集群称作一个application:

MHA node:运行在每台MySQL服务器之上(master/slave/manager),它通过监控

具务解析和清理logs功能来脚本来加快故障转移。

二、MHA 组件

MHA会提供诸多工具程序,其常见的如下所示。

Manager节点:

masterha_check_ssh:MHA依赖的SSH环境检测工具;

masterha_check_repl:MySQL复制环境检测工具;

masterha_mamager:MHA服务主程序;

masterha_check_status:MHA运行状态探测工具;

masterha_master_monitor:MySQL master节点可用性监测工具;

masterha_master_swith:master节点切换工具;

masterha_conf_host:添加或删除配置的节点;

masterha_stop:斗闭MHA服务的工具 ;

Node 节点:

save_binary_logs:保存和复制Master的二进制日志 ;

apply_diff_replay_logs:识别差异的中继日志事件并应用于其它slave;

filter_mysqlbinlog:去除不必要的ROLLBACK事件(MHA已不再使用这个工具);

purge_replay_logs:清除中继日志(不会阻塞SQL线程);

自定义扩展:

secondary_check_script:通过多条网络路由检测master的可用性;

master_ip_failover_scipt:更新application使用的masterip;

shutdown_script:强制关闭master节点;

report_scipt:发送报告;

init_conf_load_script:加载初始配置参数;

master_ip_online_change_scipt:更新master节点ip地址;

三、准备MySQL Replicatin环境

MHA对MySQL复制环境有特殊要求,例如各节点都要开启二进制日志及中继日志,各从节点

必须启用其read-only skip_name_resolve innodb_file_per_table=ON 属性,并关闭relay_log_purge

功能等,还有体集事物所必需的同步;同步主机:

#for i in {0..3} ; do ssh node$i ntpdate 172.16.0.1 ; done

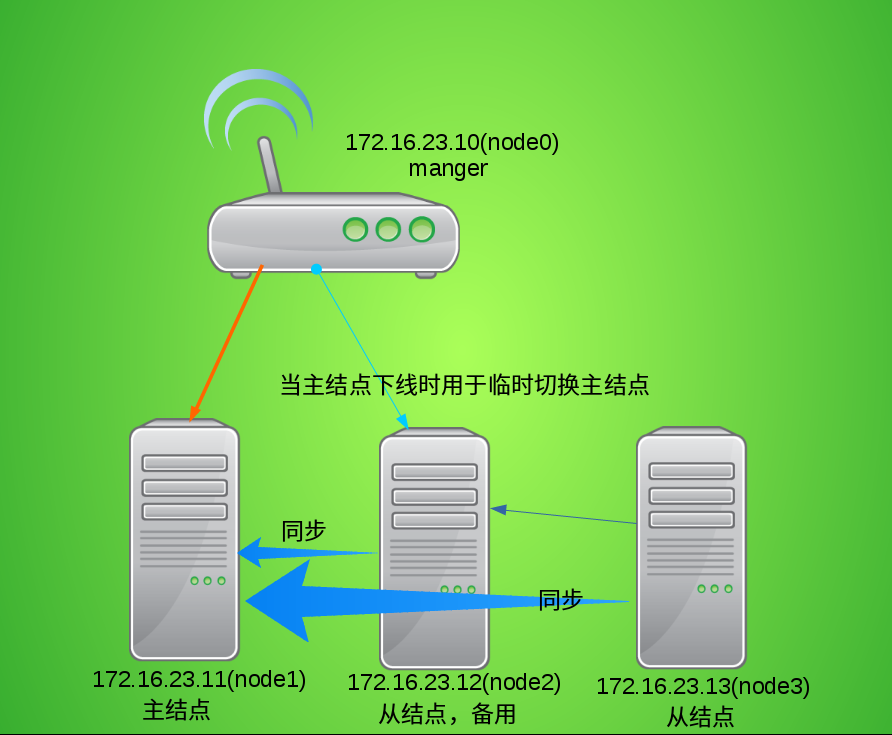

本实验境共有四个节点,其中角色分配如下;

node0 :MHA Manager(172.16.23.10); node1 :MariaDB master node2 :MariaDB slave node3 :MariaDB slave

各节点的/etc/hosts文件配置内容添加如下内容;

172.16.23.10 node0 node0.rj.com 172.16.23.11 node1 node1.rj.com 172.16.23.12 node2 node2.rj.com 172.16.23.13 node3 node3.rj.com

初始主节点的master配置;

node1 # vim /etc/my.cnf

innodb_file_per_table=ON 此项内容与下面一项内容一般在服务器启动时便加上

skip_name_resolve=ON

server_id=1

relay_log=relay-bin

log-bin=log-bin

symbolic-links=0

所有slave节点依赖的配置;

node(2,3)# vim /etc/my.cnf

innodb_file_per_table=ON

skip_name_resolve=ON

server_id=2 #注 此处的结点到了别的节点中一定要改,各节点的id必需唯一;

relay-log=relay-bin

log-bin=master-bin

relay-log-purge=OFF

read-only=ON

按上述要求分别配置好主从节点之后,按MySQL复制配置架构的配置方式将其配置完成并吂动

master节点和各slave节点,以及各slave节点启动其IO和SQL线程,确保主从复制运行无误

node1 mysql> GRANT REPLICATION SLAVE,REPLICATION CLIENT ON *.* TO 'rj'@'172.16.%.%' IDENTIFIED BY 'centos.123' ;

node(2,3) mysql> CHANGE MASTER TO MASTER_HOST='172.16.23.11',MASTER_USER='rj',MASTER_PASSWORD='centos.123',MASTER_LOG_FILE='log-bin.000001',MASTER_LOG_POS=492;

mysql> START SLAVE;

mysql> SHOW SLAVE STATUS;

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

以下两项是否为yes

同时,也可以在主结点上创建个库或表,查看其是否可台实现主从同步;

而后,在所有MySQL节点授权拥有管理权限的用户可在本地网络中有其它节点上远程方问。

当然,此时仅需要且只能在master节点运行类似如下SQL语句即可。

mysql> GRANT ALL ON *.* to 'rjyy'@'172.16.%.%' IDENTIFIED BY 'centos.123';

三、安装配置MHA

准备基于ssh互信通信环境

MHA集群中的各节点彼此之间均需基于ssh互信通信,以实现远程控制及数据管理功能。简单起见,可在Manager

节点生成密钥对儿,并设置其可远程连接本地主机后,将私钥文件及authorized_keys文件复制给余下的所有结点即可。

下面的的操作在manager(node0)节点操作完成。

# for i in {0..3} ; do scp -p .ssh/id_rsa .ssh/authorized_keys node$i:/root/.ssh/; done

安装MHA

node0 # yum install mha4mysql-manager-0.56-0.el6.noarch.rpm mha4mysql-node-0.56-0.el6.noarch.rpm

# for i in {1..3}; do scp mha4mysql-node-0.56-0.el6.noarch.rpm node$i:/root/ ; done

node(1,2,3) # yum -y install mha4mysql-node-0.56-0.el6.noarch.rpm

[root@node0 ~]# rpm -ql mha4mysql-manager

/usr/bin/masterha_check_repl

/usr/bin/masterha_check_ssh

/usr/bin/masterha_check_status

/usr/bin/masterha_conf_host

/usr/bin/masterha_manager

/usr/bin/masterha_master_monitor

/usr/bin/masterha_master_switch

/usr/bin/masterha_secondary_check

/usr/bin/masterha_stop 以上为可执行文件,就是上面所列出的命令

/usr/share/man/man1/masterha_check_repl.1.gz

/usr/share/man/man1/masterha_check_ssh.1.gz

/usr/share/man/man1/masterha_check_status.1.gz

/usr/share/man/man1/masterha_conf_host.1.gz

/usr/share/man/man1/masterha_manager.1.gz

/usr/share/man/man1/masterha_master_monitor.1.gz

/usr/share/man/man1/masterha_master_switch.1.gz

/usr/share/man/man1/masterha_secondary_check.1.gz

/usr/share/man/man1/masterha_stop.1.gz

/usr/share/perl5/vendor_perl/MHA/Config.pm

/usr/share/perl5/vendor_perl/MHA/DBHelper.pm

/usr/share/perl5/vendor_perl/MHA/FileStatus.pm

/usr/share/perl5/vendor_perl/MHA/HealthCheck.pm

/usr/share/perl5/vendor_perl/MHA/ManagerAdmin.pm

/usr/share/perl5/vendor_perl/MHA/ManagerAdminWrapper.pm

/usr/share/perl5/vendor_perl/MHA/ManagerConst.pm

/usr/share/perl5/vendor_perl/MHA/ManagerUtil.pm

/usr/share/perl5/vendor_perl/MHA/MasterFailover.pm

/usr/share/perl5/vendor_perl/MHA/MasterMonitor.pm

/usr/share/perl5/vendor_perl/MHA/MasterRotate.pm

/usr/share/perl5/vendor_perl/MHA/SSHCheck.pm

/usr/share/perl5/vendor_perl/MHA/Server.pm

/usr/share/perl5/vendor_perl/MHA/ServerManager.pm

Manager节点需要为每个监控的master/slave集群提供一个专用的配置文件,而所有的master/salve集群也可共享全局

配置。全局配置文件默认为/etc/masterha_default.cnf,其为可先配置。如果仅监控一组 master/slave集群,也可

直接通过application的配置提供各服务器的默认配置信息。而每个application的配置文件路径为自定义,本示例将使用

/etc/masterha/appl.cnf

# vim /etc/masterha/appl.cnf

[server default]

user=rjrj

password=centos.123

manager_workdir=/data/masterha/app1

manager_log=/data/masterha/app1/manager.log

remote_workdir=/data/masterha/app1

ssh_user=root

repl_user=rjrj

repl_password=centos.123

ping_interval=1

[server1]

hostname=172.16.23.11

candidate_master=1

[server2]

hostname=172.16.23.12

candidate_master=1

[server3]

hostname=172.16.23.13

[root@node0 ~]# masterha_check_ssh --conf=/etc/masterha/app1.cnf

Tue Feb 21 23:13:48 2017 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Tue Feb 21 23:13:48 2017 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Tue Feb 21 23:13:48 2017 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Tue Feb 21 23:13:48 2017 - [info] Starting SSH connection tests..

Tue Feb 21 23:13:49 2017 - [debug]

Tue Feb 21 23:13:48 2017 - [debug] Connecting via SSH from root@172.16.23.11(172.16.23.11:22) to root@172.16.23.12(172.16.23.12:22)..

Tue Feb 21 23:13:48 2017 - [debug] ok.

Tue Feb 21 23:13:48 2017 - [debug] Connecting via SSH from root@172.16.23.11(172.16.23.11:22) to root@172.16.23.13(172.16.23.13:22)..

Tue Feb 21 23:13:48 2017 - [debug] ok.

Tue Feb 21 23:13:49 2017 - [debug]

Tue Feb 21 23:13:48 2017 - [debug] Connecting via SSH from root@172.16.23.12(172.16.23.12:22) to root@172.16.23.11(172.16.23.11:22)..

Tue Feb 21 23:13:49 2017 - [debug] ok.

Tue Feb 21 23:13:49 2017 - [debug] Connecting via SSH from root@172.16.23.12(172.16.23.12:22) to root@172.16.23.13(172.16.23.13:22)..

Tue Feb 21 23:13:49 2017 - [debug] ok.

Tue Feb 21 23:13:50 2017 - [debug]

Tue Feb 21 23:13:49 2017 - [debug] Connecting via SSH from root@172.16.23.13(172.16.23.13:22) to root@172.16.23.11(172.16.23.11:22)..

Tue Feb 21 23:13:49 2017 - [debug] ok.

Tue Feb 21 23:13:49 2017 - [debug] Connecting via SSH from root@172.16.23.13(172.16.23.13:22) to root@172.16.23.12(172.16.23.12:22)..

Tue Feb 21 23:13:50 2017 - [debug] ok.

Tue Feb 21 23:13:50 2017 - [info] All SSH connection tests passed successfully.

最后显示为successfully表示已经成功了

[root@node0 ~]# masterha_check_repl --conf=/etc/masterha/app1.cnf

Wed Feb 22 10:18:40 2017 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Feb 22 10:18:40 2017 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Feb 22 10:18:40 2017 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Feb 22 10:18:40 2017 - [info] MHA::MasterMonitor version 0.56.

Wed Feb 22 10:18:41 2017 - [info] GTID failover mode = 0

Wed Feb 22 10:18:41 2017 - [info] Dead Servers:

Wed Feb 22 10:18:41 2017 - [info] Alive Servers:

Wed Feb 22 10:18:41 2017 - [info] 172.16.23.11(172.16.23.11:3306)

Wed Feb 22 10:18:41 2017 - [info] 172.16.23.12(172.16.23.12:3306)

Wed Feb 22 10:18:41 2017 - [info] 172.16.23.13(172.16.23.13:3306)

Wed Feb 22 10:18:41 2017 - [info] Alive Slaves:

Wed Feb 22 10:18:41 2017 - [info] 172.16.23.12(172.16.23.12:3306) Version=5.5.44-MariaDB-log (oldest major version between slaves) log-bin:enabled

Wed Feb 22 10:18:41 2017 - [info] Replicating from 172.16.23.11(172.16.23.11:3306)

Wed Feb 22 10:18:41 2017 - [info] Primary candidate for the new Master (candidate_master is set)

Wed Feb 22 10:18:41 2017 - [info] 172.16.23.13(172.16.23.13:3306) Version=5.5.44-MariaDB-log (oldest major version between slaves) log-bin:enabled

Wed Feb 22 10:18:41 2017 - [info] Replicating from 172.16.23.11(172.16.23.11:3306)

Wed Feb 22 10:18:41 2017 - [info] Current Alive Master: 172.16.23.11(172.16.23.11:3306)

Wed Feb 22 10:18:41 2017 - [info] Checking slave configurations..

Wed Feb 22 10:18:41 2017 - [info] Checking replication filtering settings..

Wed Feb 22 10:18:41 2017 - [info] binlog_do_db= , binlog_ignore_db=

Wed Feb 22 10:18:41 2017 - [info] Replication filtering check ok.

Wed Feb 22 10:18:41 2017 - [info] GTID (with auto-pos) is not supported

Wed Feb 22 10:18:41 2017 - [info] Starting SSH connection tests..

Wed Feb 22 10:18:43 2017 - [info] All SSH connection tests passed successfully.

Wed Feb 22 10:18:43 2017 - [info] Checking MHA Node version..

Wed Feb 22 10:18:48 2017 - [info] Version check ok.

Wed Feb 22 10:18:48 2017 - [info] Checking SSH publickey authentication settings on the current master..

Wed Feb 22 10:18:48 2017 - [info] HealthCheck: SSH to 172.16.23.11 is reachable.

Wed Feb 22 10:18:49 2017 - [info] Master MHA Node version is 0.56.

Wed Feb 22 10:18:49 2017 - [info] Checking recovery script configurations on 172.16.23.11(172.16.23.11:3306)..

Wed Feb 22 10:18:49 2017 - [info] Executing command: save_binary_logs --command=test --start_pos=4 --binlog_dir=/var/lib/mysql,/var/log/mysql --output_file=/data/masterha/app1/save_binary_logs_test --manager_version=0.56 --start_file=log-bin.000003

Wed Feb 22 10:18:49 2017 - [info] Connecting to root@172.16.23.11(172.16.23.11:22)..

Creating /data/masterha/app1 if not exists.. Creating directory /data/masterha/app1.. done.

ok.

Checking output directory is accessible or not..

ok.

Binlog found at /var/lib/mysql, up to log-bin.000003

Wed Feb 22 10:18:50 2017 - [info] Binlog setting check done.

Wed Feb 22 10:18:50 2017 - [info] Checking SSH publickey authentication and checking recovery script configurations on all alive slave servers..

Wed Feb 22 10:18:50 2017 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='rjyy' --slave_host=172.16.23.12 --slave_ip=172.16.23.12 --slave_port=3306 --workdir=/data/masterha/app1 --target_version=5.5.44-MariaDB-log --manager_version=0.56 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Wed Feb 22 10:18:50 2017 - [info] Connecting to root@172.16.23.12(172.16.23.12:22)..

Creating directory /data/masterha/app1.. done.

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to relay-bin.000009

Temporary relay log file is /var/lib/mysql/relay-bin.000009

Testing mysql connection and privileges.. done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Feb 22 10:18:51 2017 - [info] Executing command : apply_diff_relay_logs --command=test --slave_user='rjyy' --slave_host=172.16.23.13 --slave_ip=172.16.23.13 --slave_port=3306 --workdir=/data/masterha/app1 --target_version=5.5.44-MariaDB-log --manager_version=0.56 --relay_log_info=/var/lib/mysql/relay-log.info --relay_dir=/var/lib/mysql/ --slave_pass=xxx

Wed Feb 22 10:18:51 2017 - [info] Connecting to root@172.16.23.13(172.16.23.13:22)..

Creating directory /data/masterha/app1.. done.

Checking slave recovery environment settings..

Opening /var/lib/mysql/relay-log.info ... ok.

Relay log found at /var/lib/mysql, up to relay-bin.000007

Temporary relay log file is /var/lib/mysql/relay-bin.000007

Testing mysql connection and privileges.. done.

Testing mysqlbinlog output.. done.

Cleaning up test file(s).. done.

Wed Feb 22 10:18:52 2017 - [info] Slaves settings check done.

Wed Feb 22 10:18:52 2017 - [info]

172.16.23.11(172.16.23.11:3306) (current master)

+--172.16.23.12(172.16.23.12:3306)

+--172.16.23.13(172.16.23.13:3306)

Wed Feb 22 10:18:52 2017 - [info] Checking replication health on 172.16.23.12..

Wed Feb 22 10:18:52 2017 - [info] ok.

Wed Feb 22 10:18:52 2017 - [info] Checking replication health on 172.16.23.13..

Wed Feb 22 10:18:52 2017 - [info] ok.

Wed Feb 22 10:18:52 2017 - [warning] master_ip_failover_script is not defined.

Wed Feb 22 10:18:52 2017 - [warning] shutdown_script is not defined.

Wed Feb 22 10:18:52 2017 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

检查管理的MySQL复制集群的连接配置参数是正常的;

# nohup masterha_manager --conf=/etc/masterha/app1.cnf > /data/master/app1/manager.log 2>&1 &

让masterha_manager进程工作于后台

四、开始测试

[root@node1 ~]# systemctl stop mariadb 让主服务器停掉;

[root@node2 ~]# mysql

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 50

Server version: 5.5.44-MariaDB-log MariaDB Server

Copyright (c) 2000, 2015, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> SHOW MASTER STATUS;

+-------------------+----------+--------------+------------------+

| File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+-------------------+----------+--------------+------------------+

| master-bin.000005 | 245 | | |

+-------------------+----------+--------------+------------------+

1 row in set (0.00 sec)

MariaDB [(none)]> SHOW SLAVE STATUS\G;

Empty set (0.00 sec)

ERROR: No query specified

此时node2被提升为主服务器 ,node3拉取数据走向node2

Welcome to the MariaDB monitor. Commands end with ; or \g.

Your MariaDB connection id is 33

Server version: 5.5.44-MariaDB-log MariaDB Server

Copyright (c) 2000, 2015, Oracle, MariaDB Corporation Ab and others.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

MariaDB [(none)]> SHOW SLAVE STATUS\G;

*************************** 1. row ***************************

Slave_IO_State: Waiting for master to send event

Master_Host: 172.16.23.12

Master_User: rj

Master_Port: 3306

Connect_Retry: 60

Master_Log_File: master-bin.000008

Read_Master_Log_Pos: 245

Relay_Log_File: relay-bin.000002

Relay_Log_Pos: 530

Relay_Master_Log_File: master-bin.000008

Slave_IO_Running: Yes

Slave_SQL_Running: Yes

Replicate_Do_DB:

Replicate_Ignore_DB:

Replicate_Do_Table:

Replicate_Ignore_Table:

Replicate_Wild_Do_Table:

Replicate_Wild_Ignore_Table:

Last_Errno: 0

Last_Error:

Skip_Counter: 0

Exec_Master_Log_Pos: 245

Relay_Log_Space: 818

Until_Condition: None

Until_Log_File:

Until_Log_Pos: 0

Master_SSL_Allowed: No

Master_SSL_CA_File:

Master_SSL_CA_Path:

Master_SSL_Cert:

Master_SSL_Cipher:

Master_SSL_Key:

Seconds_Behind_Master: 0

Master_SSL_Verify_Server_Cert: No

Last_IO_Errno: 0

Last_IO_Error:

Last_SQL_Errno: 0

Last_SQL_Error:

Replicate_Ignore_Server_Ids:

Master_Server_Id: 2

1 row in set (0.00 sec)