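Using the Java API to operate on HDFS: copying part of a file to the local file system

Requirement: the exact opposite of the previous post. Here, part of a file stored in HDFS is copied to the local file system.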

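First, generate a 130-byte file in HDFS (the code below writes a 130-element byte array, so the file is 130 bytes rather than 130 KB):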

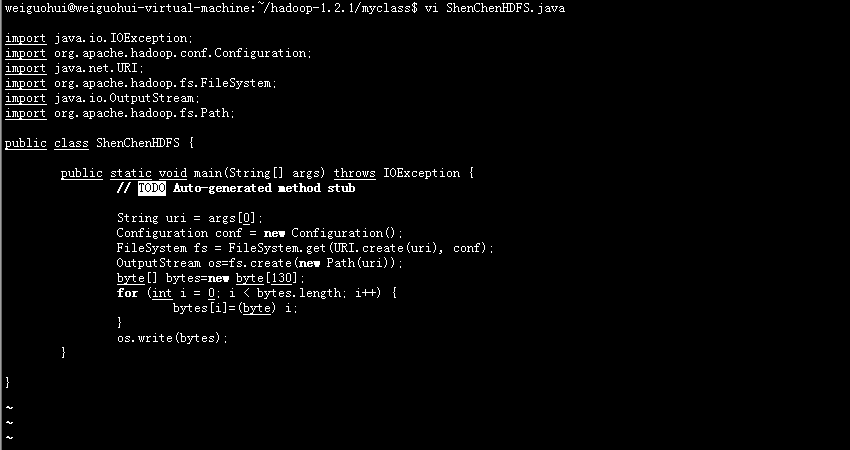

The code is as follows:

    import java.io.IOException;
    import java.io.OutputStream;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    public class ShenChenHDFS {
        public static void main(String[] args) throws IOException {
            String uri = args[0]; // HDFS URI of the file to create
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(uri), conf);

            // 130 bytes; element i holds (byte) i
            byte[] bytes = new byte[130];
            for (int i = 0; i < bytes.length; i++) {
                bytes[i] = (byte) i;
            }

            // try-with-resources closes the stream, which flushes the data to HDFS
            try (OutputStream os = fs.create(new Path(uri))) {
                os.write(bytes);
            }
        }
    }
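As a quick check on what the generator writes, the following sketch rebuilds the same byte pattern in memory. It is plain Java and needs no Hadoop; the class name `BytePatternSketch` and the method `pattern` are hypothetical and exist only for illustration. It shows that the cast `(byte) i` keeps the low 8 bits, so values from 128 upward wrap to negative numbers, while bytes 100 to 119 (the range copied later) hold the values 100 to 119.

```java
public class BytePatternSketch {
    // Hypothetical helper: builds the same pattern the generator writes,
    // where element i holds (byte) i.
    public static byte[] pattern(int size) {
        byte[] bytes = new byte[size];
        for (int i = 0; i < bytes.length; i++) {
            bytes[i] = (byte) i; // narrowing cast keeps only the low 8 bits
        }
        return bytes;
    }

    public static void main(String[] args) {
        byte[] bytes = pattern(130);
        System.out.println(bytes.length); // 130
        System.out.println(bytes[100]);   // 100
        System.out.println(bytes[127]);   // 127
        System.out.println(bytes[128]);   // -128 (wraps around)
        System.out.println(bytes[129]);   // -127
    }
}
```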

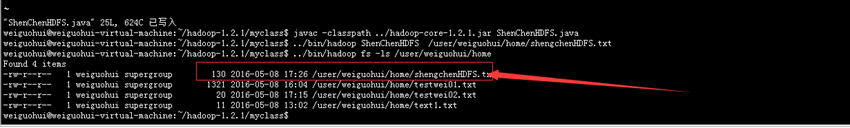

Result of the run:

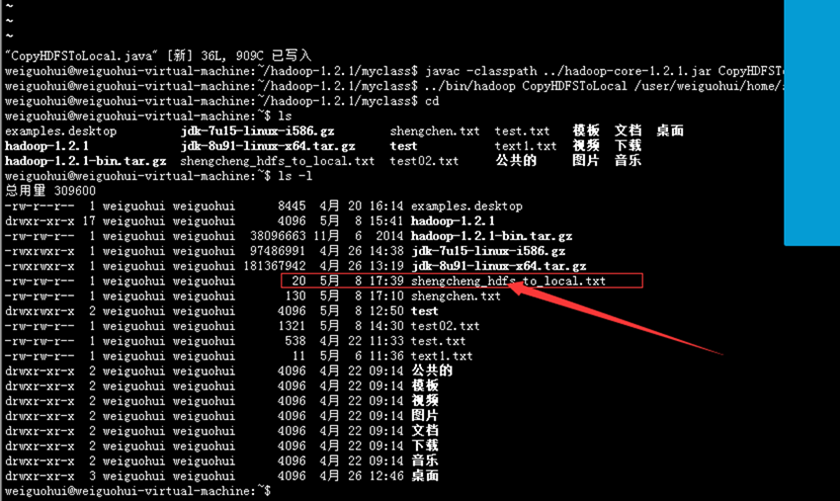

The code that copies 20 bytes, starting at offset 100, from the HDFS file to a local file is as follows:

    import java.io.File;
    import java.io.FileOutputStream;
    import java.io.IOException;
    import java.net.URI;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IOUtils;

    public class CopyHDFSToLocal {
        public static void main(String[] args) throws IOException {
            String uri = args[0]; // HDFS URI of the source file
            Configuration conf = new Configuration();
            FileSystem fs = FileSystem.get(URI.create(uri), conf);
            File file = new File("/home/weiguohui/shengcheng_hdfs_to_local.txt");

            int offset = 100; // position of the first byte to copy
            int len = 20;     // number of bytes to copy
            byte[] bytes = new byte[len];

            FSDataInputStream in = null;
            FileOutputStream fos = null;
            try {
                in = fs.open(new Path(uri));
                fos = new FileOutputStream(file);
                // Positioned read: fills the buffer with file bytes offset .. offset + len - 1
                in.readFully(offset, bytes, 0, len);
                fos.write(bytes, 0, len);
            } finally {
                IOUtils.closeStream(in);
                IOUtils.closeStream(fos);
            }
        }
    }
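The core of the copy step is "skip `offset` bytes, then copy at most `len` bytes". The sketch below shows that logic with plain `java.io` streams so it can be tried without a cluster. The class `RangeCopySketch` and the method `copyRange` are hypothetical names, not part of Hadoop or of the original program. It assumes only a generic `InputStream`, so it should also accept the stream returned by `fs.open(...)`, although for HDFS a seek or positioned read is likely the better choice.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

public class RangeCopySketch {
    // Hypothetical helper: copies up to len bytes, starting at offset,
    // from in to out. Returns the number of bytes actually copied.
    public static int copyRange(InputStream in, OutputStream out, long offset, int len)
            throws IOException {
        // skip() may skip fewer bytes than requested, so loop until done
        long toSkip = offset;
        while (toSkip > 0) {
            long skipped = in.skip(toSkip);
            if (skipped <= 0) {
                // Read one byte to tell end of stream apart from a zero-length skip
                if (in.read() == -1) {
                    return 0; // stream ended before reaching offset
                }
                skipped = 1;
            }
            toSkip -= skipped;
        }

        byte[] buffer = new byte[1024];
        int copied = 0;
        while (copied < len) {
            // Never ask for more than the remaining part of the range
            int n = in.read(buffer, 0, Math.min(buffer.length, len - copied));
            if (n == -1) {
                break; // stream ended inside the range
            }
            out.write(buffer, 0, n); // write only the bytes actually read
            copied += n;
        }
        return copied;
    }

    public static void main(String[] args) throws IOException {
        // Same 130-byte pattern as the generated HDFS file
        byte[] source = new byte[130];
        for (int i = 0; i < source.length; i++) {
            source[i] = (byte) i;
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        int copied = copyRange(new ByteArrayInputStream(source), out, 100, 20);
        System.out.println(copied);               // 20
        System.out.println(out.toByteArray()[0]); // 100
    }
}
```

Writing `out.write(buffer, 0, n)` with the count returned by `read` avoids emitting stale buffer contents when a read returns fewer bytes than the buffer holds.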
