python分析《三国演义》,谁才是这部书的绝对主角(包含统计指定角色的方法)

前面分析统计了金庸名著《倚天屠龙记》中人物按照出现次数并排序

https://www.cnblogs.com/becks/p/11421214.html

然后使用pyecharts,统计B站某视频弹幕内容,并绘制成词云显示

https://www.cnblogs.com/becks/p/14743080.html

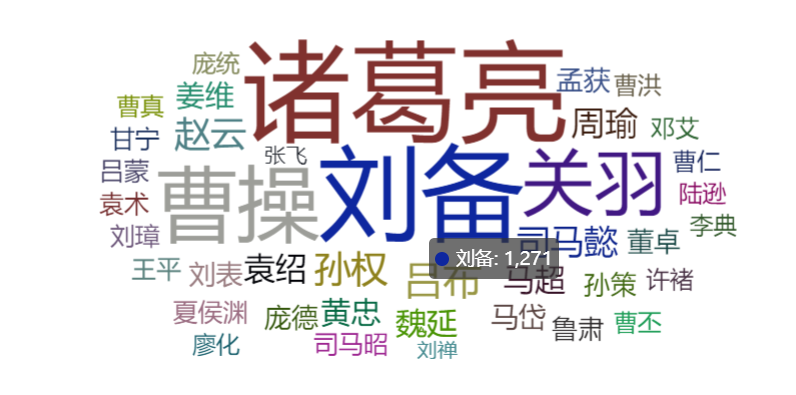

这次,就用分析统计下《三国演义》这部书里各角色出现的频率,并绘制成词云显示,看看谁是绝对的主角吧

首先,我们需要把这部书里出现的人物都枚举出来,毕竟只统计角色信息,不需要把非人物名也统计进来

角色 = {'刘备','诸葛亮','关羽','张飞','刘禅',"孙权",'赵云','司马懿','周瑜','曹操','袁绍','马超','魏延',

'黄忠','姜维','马岱','庞德','孟获','刘表','董卓','孙策',

'鲁肃','司马昭','夏侯渊','王平','刘璋','袁术','吕蒙','甘宁','邓艾','曹仁',

'陆逊','许褚','庞统','曹洪','李典','曹丕','廖化','曹真','吕布'}

然后就是读取实现准备好的《三国演义》书籍txt文档格式,使用jieba库对文档内容进行处理

# -*-coding:utf8-*- # encoding:utf-8 import jieba #倒入jieba库 import os import sys from collections import Counter#分词后词频统计 from pyecharts.charts import WordCloud#词云 path = os.path.abspath(os.path.dirname(sys.argv[0])) txt=open(path+'\\171182.txt',"r", encoding='utf-8').read() #读取三国演义文本 words=jieba.lcut(txt) #jieba库分析文本 counts={}

在就是统计指定角色姓名出现次数

for word in words: if len(word)<=1: continue elif word in 角色: counts[word]=counts.get(word,0)+1 else: None

绘制词云

items=list(counts.items())#字典到列表 wordcloud = WordCloud() wordcloud.add("",items,word_size_range=[15, 80],rotate_step=30,shape='cardioid') wordcloud.render(path+'\\wordcloud.html')

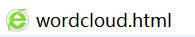

执行脚本后查看生成文件

曹操两个字的显示的最大,说明整部书里出现的次数最多。这肯定不对,罗贯中是刘备粉啊,

后来想了下,在三国里,直呼人姓名那是骂人,是损。那些所谓的正派人士都是有雅称的,比如卧龙、诸葛等等

改了下代码,把这些人的雅称也匹配进去

刘备 = {"玄德","玄德曰","先主","刘豫州","刘皇叔",'刘玄德','刘使君'}

诸葛亮 = {"孔明","孔明曰","卧龙","卧龙先生","诸葛先生",'孔明先生','诸葛丞相','诸葛'}

关羽 = {"关公","云长","汉寿亭侯","关云长"}

曹操 = {"孟德",'曹孟德','曹操'}

张飞 = {"张翼德",'翼德'}

同时,统计部分也作了处理

for word in words: #筛选分析后的名词 if len(word)<=1: #因为词组中的汉字数大于1个即认为是一个词组,所以通过continue结束掉读取的汉字书为1的内容 continue #elif word in exculdes: #continue #elif word in 诸葛亮 or word in 刘备 or word in 关羽 or word in 曹操: #counts[word]=counts.get(word,0)+1 elif word in 刘备: word ="刘备" counts[word]=counts.get(word,0)+1 elif word in 诸葛亮: word ="诸葛亮" counts[word]=counts.get(word,0)+1 elif word in 曹操: word ="曹操" counts[word]=counts.get(word,0)+1 elif word in 关羽: word ="关羽" counts[word]=counts.get(word,0)+1 elif word in 张飞: word ="张飞" counts[word]=counts.get(word,0)+1 elif word in 其他: counts[word]=counts.get(word,0)+1 else: None

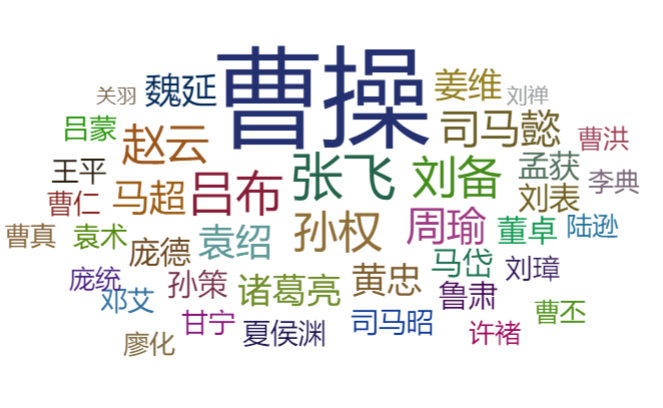

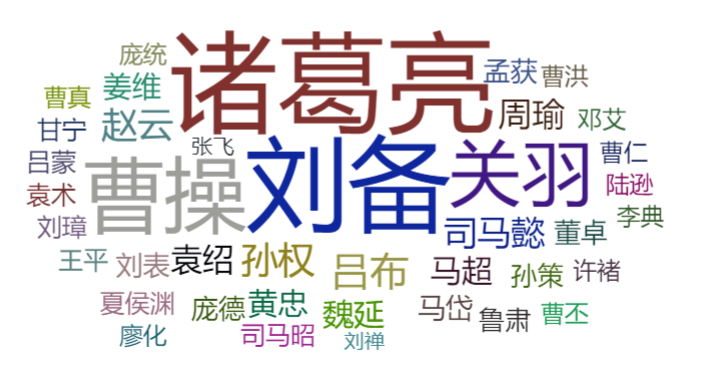

再次执行,嗯,诸葛亮是王者,诸葛亮合计出现了1350次,刘备合计出现1271次

附整个代码

# -*-coding:utf8-*- # encoding:utf-8 import jieba #倒入jieba库 import os import sys from collections import Counter#分词后词频统计 from pyecharts.charts import WordCloud#词云 path = os.path.abspath(os.path.dirname(sys.argv[0])) txt=open(path+'\\三国演义.txt',"r", encoding='utf-8').read() #文本 words=jieba.lcut(txt) #jieba库分析文本 counts={} 刘备 = {"玄德","玄德曰","先主","刘豫州","刘皇叔",'刘玄德','刘使君'} 诸葛亮 = {"孔明","孔明曰","卧龙","卧龙先生","诸葛先生",'孔明先生','诸葛丞相','诸葛'} 关羽 = {"关公","云长","汉寿亭侯","关云长"} 刘禅 = {"后主"} 曹操 = {"孟德",'曹孟德','曹操'} 张飞 = {"张翼德",'翼德'} 其他 = {"孙权",'赵云','司马懿','周瑜','刘禅','袁绍','马超','魏延','黄忠','姜维','马岱','庞德','孟获','刘表','董卓','孙策', '鲁肃','司马昭','夏侯渊','王平','刘璋','袁术','吕蒙','甘宁','邓艾','曹仁','陆逊','许褚','庞统','曹洪','李典','曹丕','廖化','曹真','吕布'} for word in words: #筛选分析后的名词 if len(word)<=1: #因为词组中的汉字数大于1个即认为是一个词组,所以通过continue结束掉读取的汉字书为1的内容 continue #elif word in exculdes: #continue #elif word in 诸葛亮 or word in 刘备 or word in 关羽 or word in 曹操: #counts[word]=counts.get(word,0)+1 elif word in 刘备: word ="刘备" counts[word]=counts.get(word,0)+1 elif word in 诸葛亮: word ="诸葛亮" counts[word]=counts.get(word,0)+1 elif word in 曹操: word ="曹操" counts[word]=counts.get(word,0)+1 elif word in 关羽: word ="关羽" counts[word]=counts.get(word,0)+1 elif word in 张飞: word ="张飞" counts[word]=counts.get(word,0)+1 elif word in 其他: counts[word]=counts.get(word,0)+1 else: None items=list(counts.items())#字典到列表 wordcloud = WordCloud() wordcloud.add("",items,word_size_range=[15, 80],rotate_step=30,shape='cardioid') wordcloud.render(path+'\\wordcloud.html')

浙公网安备 33010602011771号

浙公网安备 33010602011771号