《利用python进行数据分析》读书笔记--第十章 时间序列(二)

5、时期及其算数运算

时期(period)表示的是时间区间,比如数日、数月、数季、数年等。Period类所表示的就是这种数据类型,其构造函数需要用到一个字符串或整数,以及频率。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz #下面的'A-DEC'是年第12月底最后一个日历日 p = pd.Period('2016',freq = 'A-DEC') #Period可以直接加减 print p + 5 #相同频率的Period可以进行加减,不同频率是不能加减的 rng = pd.Period('2015',freq = 'A-DEC') - p print rng rng = pd.period_range('1/1/2000','6/30/2000',freq = 'M') #类型是<class 'pandas.tseries.period.PeriodIndex'>,形式上是一个array数组 #注意下面的形式已经不是书上的形式,而是float类型,但是做索引时,还是日期形式 print rng print type(rng) print Series(np.random.randn(6),index = rng),'\n' #PeriodIndex类的构造函数还允许直接使用一组字符串 values = ['2001Q3','2002Q2','2003Q1'] index = pd.PeriodIndex(values,freq = 'Q-DEC') #下面index的 print index

>>>

2021

-1

array([360, 361, 362, 363, 364, 365], dtype=int64)

<class 'pandas.tseries.period.PeriodIndex'>

2000-01 -0.504031

2000-02 1.345024

2000-03 0.074367

2000-04 -1.152187

2000-05 -0.460272

2000-06 0.486135

Freq: M

array([126, 129, 132], dtype=int64)

[Finished in 1.4s]

-

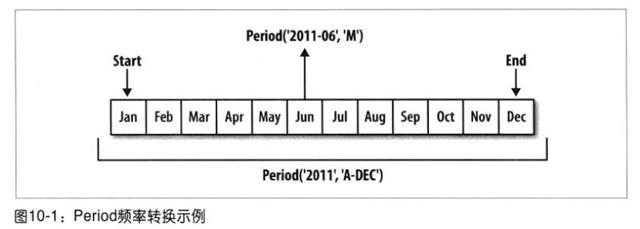

时期的频率转换

Period和PeriodIndex对象都可以通过其asfreq方法转换为别的频率。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz #下面这条语句实际上是一个被划分为多个月度时期的时间段中的游标 p = pd.Period('2007',freq = 'A-DEC') print p print p.asfreq('M',how = 'start') print p.asfreq('M',how = 'end') #高频率转换为低频率时,超时期是由子时期所属位置决定的,例如在A-JUN频率中,月份“2007年8月”实际上属于“2008年” p = pd.Period('2007-08','M') print p.asfreq('A-JUN'),'\n' #PeriodIndex或TimeSeries的频率转换方式也是如此: rng = pd.period_range('2006','2009',freq = 'A-DEC') ts = Series(np.random.randn(len(rng)),index = rng) print ts print ts.asfreq('M',how = 'start') print ts.asfreq('B',how = 'end'),'\n'

>>>

2007

2007-01

2007-12

2008

2006 0.001601

2007 0.285760

2008 -0.458762

2009 0.076204

Freq: A-DEC

2006-01 0.001601

2007-01 0.285760

2008-01 -0.458762

2009-01 0.076204

Freq: M

2006-12-29 0.001601

2007-12-31 0.285760

2008-12-31 -0.458762

2009-12-31 0.076204

Freq: B

[Finished in 1.4s]

Period频率转换示意图:

-

按季度计算的时期频率

季度型数据在会计、金融等领域中很常见。许多季度型数据都会涉及“财年末”的概念,通常是一年12个月中某月的最后一个日历日或工作日。就这一点来说,“2012Q4”根据财年末的会有不同含义。pandas支持12种可能的季度频率,即Q-JAN、Q-DEC。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz p = pd.Period('2012Q4',freq = 'Q-JAN') print p #在以1月结束的财年中,2012Q4是从11月到1月 print p.asfreq('D','start') print p.asfreq('D','end'),'\n' #因此,Period之间的运算会非常简单,例如,要获取该季度倒数第二个工作日下午4点的时间戳 p4pm = (p.asfreq('B','e') - 1).asfreq('T','s') + 16 * 60 print p4pm print p4pm.to_timestamp(),'\n' #period_range还可以用于生产季度型范围,季度型范围的算数运算也跟上面是一样的: #要非常小心的是Q-JAN是什么意思 rng = pd.period_range('2011Q3','2012Q4',freq = 'Q-JAN') print rng.to_timestamp() ts = Series(np.arange(len(rng)),index = rng) print ts,'\n' new_rng = (rng.asfreq('B','e') - 1).asfreq('T','s') + 16 * 60 ts.index = new_rng.to_timestamp() print ts,'\n' >>> 2012Q4 2011-11-01 2012-01-31 2012-01-30 16:00 2012-01-30 16:00:00 <class 'pandas.tseries.index.DatetimeIndex'> [2010-10-31 00:00:00, ..., 2012-01-31 00:00:00] Length: 6, Freq: Q-OCT, Timezone: None 2011Q3 0 2011Q4 1 2012Q1 2 2012Q2 3 2012Q3 4 2012Q4 5 Freq: Q-JAN 2010-10-28 16:00:00 0 2011-01-28 16:00:00 1 2011-04-28 16:00:00 2 2011-07-28 16:00:00 3 2011-10-28 16:00:00 4 2012-01-30 16:00:00 5 [Finished in 3.3s]

下面是一个示意图,很直观:

-

将Timestamp转换为Period

通过to_period方法,可以将由时间戳索引的Series和DataFrame对象转换为以时期为索引的对象。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz rng = pd.date_range('1/1/2015',periods = 3,freq = 'M') ts = Series(np.random.randn(3),index = rng) print ts pts = ts.to_period() print pts,'\n' #由于时期指的是非重叠时间区间,因此对于给定的频率,一个时间戳只能属于一个时期。 #新PeriodIndex的频率默认是从时间戳推断而来的,当然可以自己指定频率,当然会有重复时期存在 rng = pd.date_range('1/29/2000',periods = 6,freq = 'D') ts2 = Series(np.random.randn(6),index = rng) print ts2 print ts2.to_period('M') #要想转换为时间戳,使用to_timestamp即可 print pts.to_timestamp(how = 'end') >>> 2015-01-31 -1.085886 2015-02-28 -0.919741 2015-03-31 0.656477 Freq: M 2015-01 -1.085886 2015-02 -0.919741 2015-03 0.656477 Freq: M 2000-01-29 -0.394812 2000-01-30 0.669354 2000-01-31 0.197537 2000-02-01 -1.374942 2000-02-02 0.451683 2000-02-03 1.542144 Freq: D 2000-01 -0.394812 2000-01 0.669354 2000-01 0.197537 2000-02 -1.374942 2000-02 0.451683 2000-02 1.542144 Freq: M 2015-01-31 -1.085886 2015-02-28 -0.919741 2015-03-31 0.656477 Freq: M [Finished in 1.8s]

-

通过数组创建PeriodIndex

固定频率的数据集通常会将时间信息分开存放在多个列中。例如下面的这个宏观经济数据集中,年度和季度就分别存放在不同的列中。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz data = pd.read_csv('E:\\macrodata.csv') print data.year print data.quarter,'\n' index = pd.PeriodIndex(year = data.year,quarter = data.quarter,freq = 'Q-DEC') #index是以整数数组的形式存储的,当显示某一个是才会有年份-季度的展示 print index print index[0],'\n' data.index = index #下面的结果证明,infl的index已经变为了年份-季度形式 print data.infl >>> 0 1959 1 1959 2 1959 3 1959 4 1960 5 1960 6 1960 7 1960 8 1961 9 1961 10 1961 11 1961 12 1962 13 1962 14 1962 ... 188 2006 189 2006 190 2006 191 2006 192 2007 193 2007 194 2007 195 2007 196 2008 197 2008 198 2008 199 2008 200 2009 201 2009 202 2009 Name: year, Length: 203 0 1 1 2 2 3 3 4 4 1 5 2 6 3 7 4 8 1 9 2 10 3 11 4 12 1 13 2 14 3 ... 188 1 189 2 190 3 191 4 192 1 193 2 194 3 195 4 196 1 197 2 198 3 199 4 200 1 201 2 202 3 Name: quarter, Length: 203 array([-44, -43, -42, -41, -40, -39, -38, -37, -36, -35, -34, -33, -32, -31, -30, -29, -28, -27, -26, -25, -24, -23, -22, -21, -20, -19, -18, -17, -16, -15, -14, -13, -12, -11, -10, -9, -8, -7, -6, -5, -4, -3, -2, -1, 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158], dtype=int64) 1959Q1 1959Q1 0.00 1959Q2 2.34 1959Q3 2.74 1959Q4 0.27 1960Q1 2.31 1960Q2 0.14 1960Q3 2.70 1960Q4 1.21 1961Q1 -0.40 1961Q2 1.47 1961Q3 0.80 1961Q4 0.80 1962Q1 2.26 1962Q2 0.13 1962Q3 2.11 ... 2006Q1 2.60 2006Q2 3.97 2006Q3 -1.58 2006Q4 3.30 2007Q1 4.58 2007Q2 2.75 2007Q3 3.45 2007Q4 6.38 2008Q1 2.82 2008Q2 8.53 2008Q3 -3.16 2008Q4 -8.79 2009Q1 0.94 2009Q2 3.37 2009Q3 3.56 Freq: Q-DEC, Name: infl, Length: 203 [Finished in 1.8s]

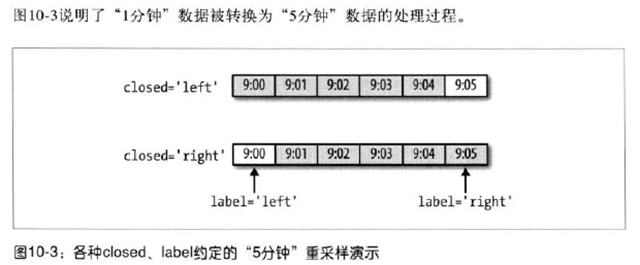

6、重采样及频率转换

重采样(resampling)指的是将时间序列从一个频率转换到另一个频率的过程。将高频率数据聚合到低频率成为降采样(downsampling),而将低频率数据转换到高频率成为升采样(uosampling)。并不是所有的重采样都能被划分到这两类中,比如将W-WED转换为W-FRI既不是降采样也不是升采样。

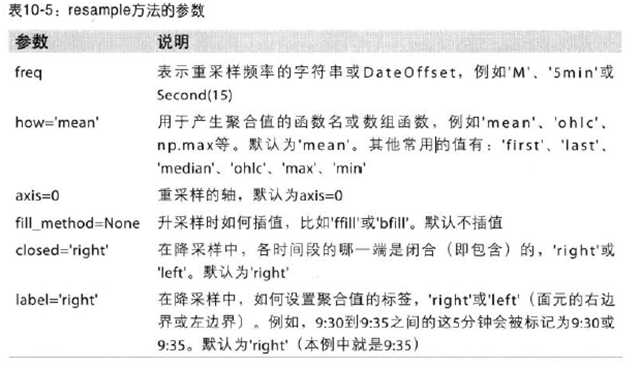

pandas中的resample方法,它是各种频率转换工作的主力函数。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz rng = pd.date_range('1/1/2000',periods = 100,freq = 'D') ts = Series(np.random.randn(100),index = rng) #print ts #注意下面的结果中有4个月的值,因为ts已经到了四月份 print ts.resample('M',how = 'mean') print ts.resample('M',how = 'mean',kind = 'period') >>> 2000-01-31 0.015620 2000-02-29 0.002502 2000-03-31 -0.029775 2000-04-30 -0.618537 Freq: M 2000-01 0.015620 2000-02 0.002502 2000-03 -0.029775 2000-04 -0.618537 Freq: M [Finished in 0.7s]

下面是resample的参数:

-

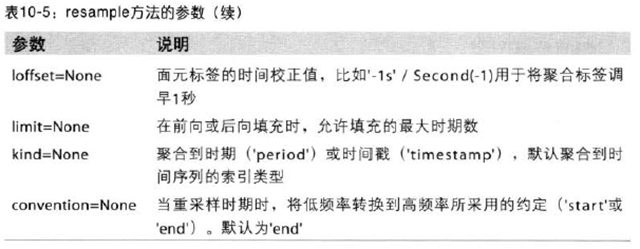

降采样

将数据的频率降低称为降采样,也就是将数据进行聚合。一个数据点只能属于一个聚合时间段,所有时间段的并集组成整个时间帧。在进行降采样时,应该考虑如下:

-

各区间那便是闭合的

-

如何标记各个聚合面元,用区间的开头还是结尾

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz #下面生成1分钟线 rng = pd.date_range('1/1/2000',periods = 12,freq = 'T') ts = Series(range(0,12),index = rng) print ts,'\n' #下面聚合到5min线 print ts.resample('5min',how = 'sum') #传入的频率将会以“5min”的增量定义面元。默认情况下,面元的有边界是包含右边届的,即00:00到00:05是包含00:05的 #传入closed = 'left'会让左边的区间闭合 print ts.resample('5min',how = 'sum',closed = 'left') #最终的时间序列默认是用右侧的边界标记,但是传入label = 'left'可以转换为左边标记 print ts.resample('5min',how = 'sum',closed = 'left',label = 'left'),'\n' #最后,你可能需要对结果索引做一些位移,比如将右边界减去一秒更容易明白到底是属于哪一个区间 #通过loffset设置一个字符串或者日期偏移量即可实现此目的,书上作者没有加left是矛盾的,当然也可以调用shift来进行时间偏移 print ts.resample('5min',how = 'sum',closed = 'left',loffset = '-1s') >>> 2000-01-01 00:00:00 0 2000-01-01 00:01:00 1 2000-01-01 00:02:00 2 2000-01-01 00:03:00 3 2000-01-01 00:04:00 4 2000-01-01 00:05:00 5 2000-01-01 00:06:00 6 2000-01-01 00:07:00 7 2000-01-01 00:08:00 8 2000-01-01 00:09:00 9 2000-01-01 00:10:00 10 2000-01-01 00:11:00 11 Freq: T 2000-01-01 00:00:00 0 2000-01-01 00:05:00 15 2000-01-01 00:10:00 40 2000-01-01 00:15:00 11 Freq: 5T 2000-01-01 00:05:00 10 2000-01-01 00:10:00 35 2000-01-01 00:15:00 21 Freq: 5T 2000-01-01 00:00:00 10 2000-01-01 00:05:00 35 2000-01-01 00:10:00 21 Freq: 5T 2000-01-01 00:04:59 10 2000-01-01 00:09:59 35 2000-01-01 00:14:59 21 Freq: 5T [Finished in 0.6s]

下面是个下采样的一个直观展示:

a、OHLC重采样

金融领域中有一种无所不在的时间序列聚合方式,及计算四个面元值:open、close、hign、close。传入how = ‘ohlc’即可得到一个含有这四种聚合值的DataFrame。这个过程很高效!(顺便:真的很实用啊!)只需一次扫描即可计算出结果:

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz rng = pd.date_range('1/1/2000',periods = 12,freq = 'T') ts = Series(np.random.randn(12),index = rng) print ts,'\n' print ts.resample('5min',how = 'ohlc')

>>>

open high low close

2000-01-01 00:00:00 1.239881 1.239881 1.239881 1.239881

2000-01-01 00:05:00 0.035189 0.371294 -1.764463 -1.764463

2000-01-01 00:10:00 -0.959353 1.441732 -0.959353 0.019104

2000-01-01 00:15:00 1.169352 1.169352 1.169352 1.169352

[Finished in 0.7s]

b、通过groupby进行重采样

另一种方法是使用pandas的groupby功能。例如,你打算根据月份或者周几进行分组,只需传入一个能够访问时间序列的索引上的这些字段的函数即可:

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz rng = pd.date_range('1/1/2000',periods = 100,freq = 'D') ts = Series(np.arange(100),index = rng) print ts.groupby(lambda x:x.month).mean() #作真是越写越省事了…… print ts.groupby(lambda x:x.weekday).mean() >>> 1 15 2 45 3 75 4 95 0 47.5 1 48.5 2 49.5 3 50.5 4 51.5 5 49.0 6 50.0 [Finished in 0.6s]

- 升采样和差值

将数据从低频率转换到高频率是,就不需要聚合了。看一下下面的例子:

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz frame = DataFrame(np.random.randn(2,4),index = pd.date_range('1/1/2000',periods = 2,freq = 'W-WED'), columns = ['Colorado','Texas','New York','Ohio']) print frame,'\n' #将其重采样到日频率,默认会引入缺省值 df_daily = frame.resample('D') print df_daily,'\n' #可以跟fillna和reindex一样,将上面的数值用resampling进行填充 print frame.resample('D',fill_method = 'ffill'),'\n' #同样,这里可以只填充指定的时期数(目的是限制前面的观测值的持续使用距离) print frame.resample('D',fill_method = 'ffill',limit = 2) #注意,新的日期索引完全没必要跟旧的相交,注意这个例子展现了数据日期可以延长 print frame.resample('W-THU',fill_method = 'ffill') >>> Colorado Texas New York Ohio 2000-01-05 0.093695 1.382325 -0.146193 1.206698 2000-01-12 -1.873184 0.603526 -1.407574 1.452790 Colorado Texas New York Ohio 2000-01-05 0.093695 1.382325 -0.146193 1.206698 2000-01-06 NaN NaN NaN NaN 2000-01-07 NaN NaN NaN NaN 2000-01-08 NaN NaN NaN NaN 2000-01-09 NaN NaN NaN NaN 2000-01-10 NaN NaN NaN NaN 2000-01-11 NaN NaN NaN NaN 2000-01-12 -1.873184 0.603526 -1.407574 1.452790 Colorado Texas New York Ohio 2000-01-05 0.093695 1.382325 -0.146193 1.206698 2000-01-06 0.093695 1.382325 -0.146193 1.206698 2000-01-07 0.093695 1.382325 -0.146193 1.206698 2000-01-08 0.093695 1.382325 -0.146193 1.206698 2000-01-09 0.093695 1.382325 -0.146193 1.206698 2000-01-10 0.093695 1.382325 -0.146193 1.206698 2000-01-11 0.093695 1.382325 -0.146193 1.206698 2000-01-12 -1.873184 0.603526 -1.407574 1.452790 Colorado Texas New York Ohio 2000-01-05 0.093695 1.382325 -0.146193 1.206698 2000-01-06 0.093695 1.382325 -0.146193 1.206698 2000-01-07 0.093695 1.382325 -0.146193 1.206698 2000-01-08 NaN NaN NaN NaN 2000-01-09 NaN NaN NaN NaN 2000-01-10 NaN NaN NaN NaN 2000-01-11 NaN NaN NaN NaN 2000-01-12 -1.873184 0.603526 -1.407574 1.452790 Colorado Texas New York Ohio 2000-01-06 0.093695 1.382325 -0.146193 1.206698 2000-01-13 -1.873184 0.603526 -1.407574 1.452790 [Finished in 0.7s]

- 通过日期进行重采样

对那些使用时期索引的数据进行重采样是一件非常简单的事情。

#-*- coding:utf-8 -*- import numpy as np import pandas as pd import matplotlib.pyplot as plt import datetime as dt from pandas import Series,DataFrame from datetime import datetime from dateutil.parser import parse import time from pandas.tseries.offsets import Hour,Minute,Day,MonthEnd import pytz frame = DataFrame(np.random.randn(24,4),index = pd.period_range('1-2000','12-2001',freq = 'M'), columns = ['Colorado','Texas','New York','Ohio']) print frame,'\n' annual_frame = frame.resample('A-DEC',how = 'mean') print annual_frame,'\n' #升采样要稍微麻烦些,因为你必须决定在新的频率中各区间的哪端用于放置原来的值,就像asfreq方法一样,convention默认为'end',可设置为'start' #Q-DEC:季度型(每年以12月结束) print annual_frame.resample('Q-DEC',fill_method = 'ffill') print annual_frame.resample('Q-DEC',fill_method = 'ffill',convention = 'start'),'\n' #由于时期指的是时间区间,所以升采样和降采样的规则就比较严格 #在降采样中,目标频率必须是原频率的子时期 #在升采样中,目标频率必须是原频率的超时期 #如果不满足这些条件,就会引发异常,主要影响的是按季、年、周计算的频率。 #例如,由Q-MAR定义的时间区间只能升采样为A-MAR、A-JUN等 print annual_frame.resample('Q-MAR',fill_method = 'ffill') #实话说,上面的几个例子需要在实战中去理解 >>> Colorado Texas New York Ohio 2000-01 0.531119 0.514660 -1.051243 1.900872 2000-02 0.937613 -0.301391 1.034113 -0.015524 2000-03 0.368118 -1.236412 0.455100 1.648863 2000-04 -0.728873 0.250044 1.523354 0.230613 2000-05 -0.188811 1.418581 -1.285510 1.051915 2000-06 2.059990 -0.703682 1.293203 -0.792534 2000-07 0.911168 -0.362981 -1.873637 1.033383 2000-08 0.817223 1.512153 -0.365323 -1.325069 2000-09 -0.087511 0.238656 -2.078260 1.415511 2000-10 0.185765 0.223584 1.242821 -0.654831 2000-11 -0.725814 0.723152 -0.250924 -2.110532 2000-12 -0.153382 1.535816 1.455040 0.700309 2001-01 -0.146100 -1.036274 -0.954112 -0.212434 2001-02 0.283262 1.868316 2.128798 -0.857980 2001-03 -0.793054 -1.858595 -1.243900 0.952001 2001-04 0.878166 -0.846098 1.161008 1.060023 2001-05 0.071310 -0.705115 0.489365 0.187680 2001-06 -0.622563 -1.070024 -1.044217 0.119744 2001-07 1.086923 -1.142216 1.015157 0.804685 2001-08 -2.642336 -0.758853 -0.248052 -0.024919 2001-09 -0.335489 -1.354160 0.171963 -0.993819 2001-10 -0.715587 -0.833531 0.797166 0.127754 2001-11 -0.265285 -2.005336 1.271591 0.016298 2001-12 0.971353 -0.150070 -1.170043 1.067736 Colorado Texas New York Ohio 2000 0.327217 0.317682 0.008228 0.256915 2001 -0.185783 -0.824330 0.197894 0.187231 Colorado Texas New York Ohio 2000Q4 0.327217 0.317682 0.008228 0.256915 2001Q1 0.327217 0.317682 0.008228 0.256915 2001Q2 0.327217 0.317682 0.008228 0.256915 2001Q3 0.327217 0.317682 0.008228 0.256915 2001Q4 -0.185783 -0.824330 0.197894 0.187231 Colorado Texas New York Ohio 2000Q1 0.327217 0.317682 0.008228 0.256915 2000Q2 0.327217 0.317682 0.008228 0.256915 2000Q3 0.327217 0.317682 0.008228 0.256915 2000Q4 0.327217 0.317682 0.008228 0.256915 2001Q1 -0.185783 -0.824330 0.197894 0.187231 Colorado Texas New York Ohio 2001Q3 0.327217 0.317682 0.008228 0.256915 2001Q4 0.327217 0.317682 0.008228 0.256915 2002Q1 0.327217 0.317682 0.008228 0.256915 2002Q2 0.327217 0.317682 0.008228 0.256915 2002Q3 -0.185783 -0.824330 0.197894 0.187231 [Finished in 0.8s]