Linux load balancing (3): an analysis of select_task_rq_fair

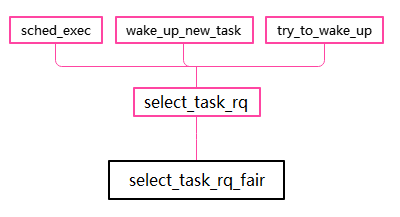

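A CPU has to be chosen for a task in three situations: a newly created task (fork), a task that has just called exec (exec), and a task that has just been woken up (wakeup). All three call select_task_rq, which for CFS resolves to select_task_rq_fair.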

    static int
    select_task_rq_fair(struct task_struct *p, int prev_cpu, int wake_flags)
    {
        /* WF_SYNC: the waker is about to block, so the waker's CPU is a candidate */
        int sync = (wake_flags & WF_SYNC) && !(current->flags & PF_EXITING);
        struct sched_domain *tmp, *sd = NULL;
        int cpu = smp_processor_id();
        int new_cpu = prev_cpu;
        int want_affine = 0;
        /* SD_flags and WF_flags share the first nibble */
        int sd_flag = wake_flags & 0xF;

        /*
         * required for stable ->cpus_allowed
         */
        lockdep_assert_held(&p->pi_lock);
        if (wake_flags & WF_TTWU) {     /* wakeups carry WF_TTWU */
            record_wakee(p);

            /* WF_CURRENT_CPU: pick the waker's CPU */
            if ((wake_flags & WF_CURRENT_CPU) &&
                cpumask_test_cpu(cpu, p->cpus_ptr))
                return cpu;

            /* Energy-aware path for asymmetric systems such as Arm big.LITTLE */
            if (!is_rd_overutilized(this_rq()->rd)) {
                new_cpu = find_energy_efficient_cpu(p, prev_cpu);
                if (new_cpu >= 0)
                    return new_cpu;
                new_cpu = prev_cpu;
            }

            /* Use the waker/wakee wakeup history to decide whether this CPU is a candidate */
            want_affine = !wake_wide(p) && cpumask_test_cpu(cpu, p->cpus_ptr);
        }

        rcu_read_lock();
        for_each_domain(cpu, tmp) {     /* walk up from the base domain */
            /*
             * If both 'cpu' and 'prev_cpu' are part of this domain,
             * cpu is a valid SD_WAKE_AFFINE target.
             */
            /* Affinity is wanted, and both cpu and prev_cpu belong to this domain */
            if (want_affine && (tmp->flags & SD_WAKE_AFFINE) &&
                cpumask_test_cpu(prev_cpu, sched_domain_span(tmp))) {
                /* Choose between prev_cpu and this CPU */
                if (cpu != prev_cpu)
                    new_cpu = wake_affine(tmp, p, cpu, prev_cpu, sync);

                sd = NULL; /* Prefer wake_affine over balance flags */
                break;
            }

            /*
             * Usually only true for WF_EXEC and WF_FORK, as sched_domains
             * usually do not have SD_BALANCE_WAKE set. That means wakeup
             * will usually go to the fast path.
             */
            /* A wakeup normally fails this test because domains lack SD_BALANCE_WAKE; only exec and fork match */
            if (tmp->flags & sd_flag)
                sd = tmp;
            else if (!want_affine)      /* wakeup without affinity: sd stays NULL */
                break;
        }

        /*
         * For exec or fork, sd usually ends up as the topmost domain, since
         * domains generally set SD_BALANCE_FORK | SD_BALANCE_EXEC.
         */
        if (unlikely(sd)) {             /* fork and exec take this slow path */
            /* Slow path */
            new_cpu = sched_balance_find_dst_cpu(sd, p, cpu, prev_cpu, sd_flag);
        } else if (wake_flags & WF_TTWU) { /* XXX always ? */
            /* Fast path: wakeups choose between new_cpu picked above and prev_cpu */
            new_cpu = select_idle_sibling(p, prev_cpu, new_cpu);
        }
        /* Open question: is there a path that neither branch covers? */
        rcu_read_unlock();

        return new_cpu;
    }

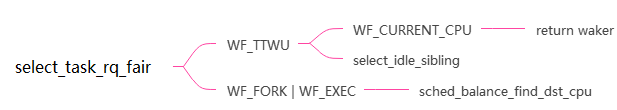

The accompanying figure illustrates this flow.

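One possible issue: a thread created by fork may share memory with its parent thread, so the two do have an affinity, yet select_task_rq_fair does not take this into account.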

Fast path: select_idle_sibling picks a CPU from among the waker's CPU, prev_cpu, and the CPUs that share the LLC (last-level cache).

Slow path: sched_balance_find_dst_cpu, which is somewhat more involved.

    static inline int
    sched_balance_find_dst_cpu(struct sched_domain *sd, struct task_struct *p,
                               int cpu, int prev_cpu, int sd_flag)
    {
        int new_cpu = cpu;

        /*
         * If the chosen domain shares no CPU with the task's allowed mask,
         * return prev_cpu. Because sd is the top-level domain here, this
         * case does not arise.
         */
        if (!cpumask_intersects(sched_domain_span(sd), p->cpus_ptr))
            return prev_cpu;

        /*
         * We need task's util for cpu_util_without, sync it up to
         * prev_cpu's last_update_time.
         */
        if (!(sd_flag & SD_BALANCE_FORK))
            sync_entity_load_avg(&p->se);

        while (sd) {
            struct sched_group *group;
            struct sched_domain *tmp;
            int weight;

            if (!(sd->flags & sd_flag)) {
                sd = sd->child;
                continue;
            }

            /* Walk this domain's sched_group list for the least loaded group */
            group = sched_balance_find_dst_group(sd, p, cpu);
            if (!group) {
                /* No suitable group: descend one level */
                sd = sd->child;
                continue;
            }

            /* Find the least loaded CPU within the chosen group */
            new_cpu = sched_balance_find_dst_group_cpu(group, p, cpu);
            if (new_cpu == cpu) {
                /* Now try balancing at a lower domain level of 'cpu': */
                sd = sd->child;
                continue;
            }

            /* Now try balancing at a lower domain level of 'new_cpu': */
            cpu = new_cpu;
            weight = sd->span_weight;
            sd = NULL;
            /* Walk up from new_cpu's base domain looking for a domain with sd_flag */
            for_each_domain(cpu, tmp) {
                /* but stay below the level of the current domain */
                if (weight <= tmp->span_weight)
                    break;
                if (tmp->flags & sd_flag)
                    sd = tmp;
            }
        }

        return new_cpu;
    }

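We can now outline how a suitable CPU is found for exec or fork. select_task_rq_fair first walks up from the current CPU's base domain to the top-level domain; sched_balance_find_dst_cpu then descends from that top-level domain looking for the least loaded CPU. This amounts to a search over the whole system, so it is hard to predict where the chosen CPU will land. This path assumes there is no affinity between the current CPU and the chosen CPU.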

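In summary, of the three CPU-selection cases, a wakeup (ttwu) picks prev_cpu, the waker's CPU, or an idle CPU among those sharing the LLC, whereas the other two search the whole system for a suitable CPU. ttwu emphasizes affinity; fork and exec do not take affinity into account.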