linux内存管理(三)进程地址空间

以下是基于v5.0。

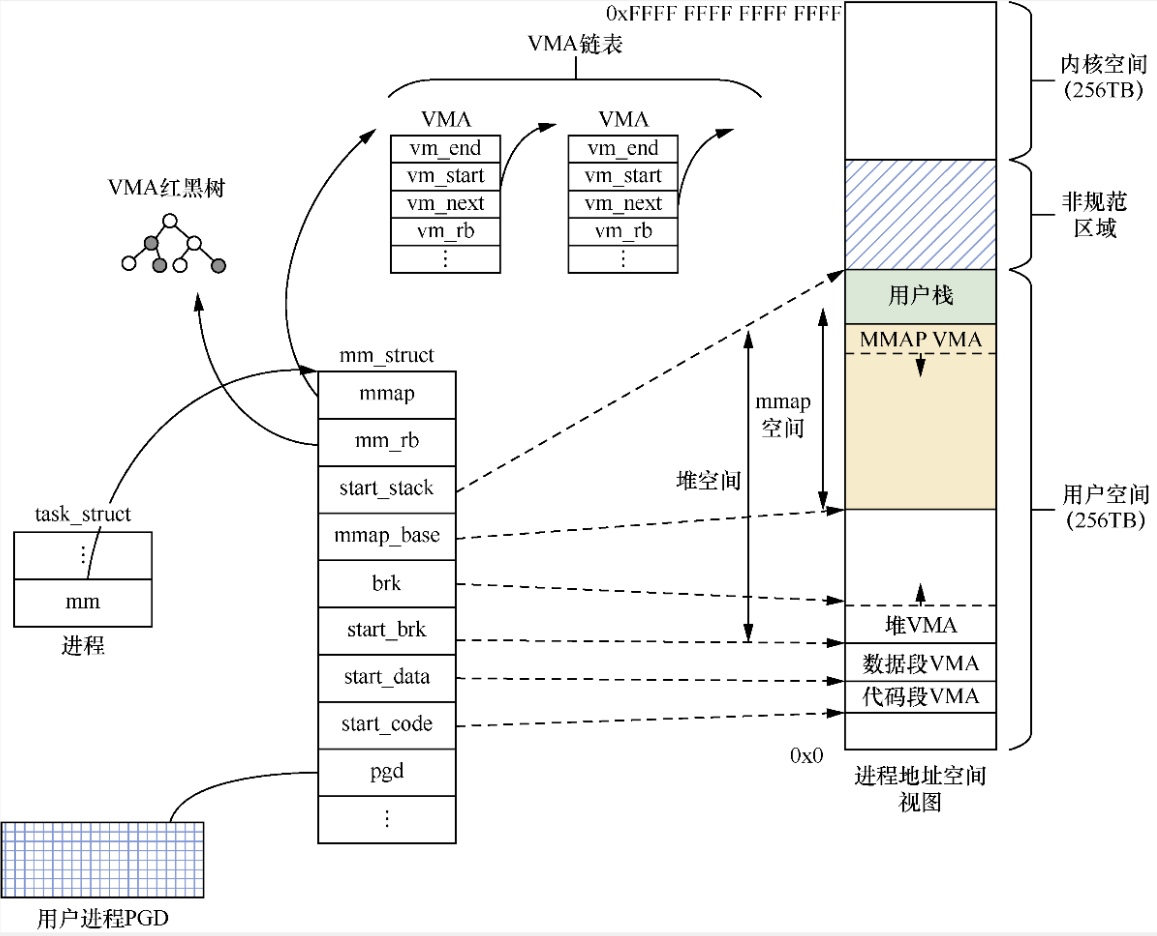

每个进程都有自己的虚拟机地址空间。在task_struct数据结构中有一个mm_struct专门用来描述进程的虚拟地址空间。

struct task_struct { ... struct mm_struct *mm; ... }

struct mm_struct { struct { struct vm_area_struct *mmap; /* list of VMAs */ struct rb_root mm_rb; u64 vmacache_seqnum; /* per-thread vmacache */ #ifdef CONFIG_MMU unsigned long (*get_unmapped_area) (struct file *filp, unsigned long addr, unsigned long len, unsigned long pgoff, unsigned long flags); #endif unsigned long mmap_base; /* base of mmap area */ unsigned long mmap_legacy_base; /* base of mmap area in bottom-up allocations */ #ifdef CONFIG_HAVE_ARCH_COMPAT_MMAP_BASES /* Base adresses for compatible mmap() */ unsigned long mmap_compat_base; unsigned long mmap_compat_legacy_base; #endif unsigned long task_size; /* size of task vm space */ unsigned long highest_vm_end; /* highest vma end address */ pgd_t * pgd; /** * @mm_users: The number of users including userspace. * * Use mmget()/mmget_not_zero()/mmput() to modify. When this * drops to 0 (i.e. when the task exits and there are no other * temporary reference holders), we also release a reference on * @mm_count (which may then free the &struct mm_struct if * @mm_count also drops to 0). */ atomic_t mm_users; /** * @mm_count: The number of references to &struct mm_struct * (@mm_users count as 1). * * Use mmgrab()/mmdrop() to modify. When this drops to 0, the * &struct mm_struct is freed. */ atomic_t mm_count; #ifdef CONFIG_MMU atomic_long_t pgtables_bytes; /* PTE page table pages */ #endif int map_count; /* number of VMAs */ spinlock_t page_table_lock; /* Protects page tables and some * counters */ struct rw_semaphore mmap_sem; struct list_head mmlist; /* List of maybe swapped mm's. These * are globally strung together off * init_mm.mmlist, and are protected * by mmlist_lock */ unsigned long hiwater_rss; /* High-watermark of RSS usage */ unsigned long hiwater_vm; /* High-water virtual memory usage */ unsigned long total_vm; /* Total pages mapped */ unsigned long locked_vm; /* Pages that have PG_mlocked set */ unsigned long pinned_vm; /* Refcount permanently increased */ unsigned long data_vm; /* VM_WRITE & ~VM_SHARED & ~VM_STACK */ unsigned long exec_vm; /* VM_EXEC & ~VM_WRITE & ~VM_STACK */ unsigned long stack_vm; /* VM_STACK */ unsigned long def_flags; spinlock_t arg_lock; /* protect the below fields */ unsigned long start_code, end_code, start_data, end_data; unsigned long start_brk, brk, start_stack; unsigned long arg_start, arg_end, env_start, env_end; unsigned long saved_auxv[AT_VECTOR_SIZE]; /* for /proc/PID/auxv */ /* * Special counters, in some configurations protected by the * page_table_lock, in other configurations by being atomic. */ struct mm_rss_stat rss_stat; struct linux_binfmt *binfmt; /* Architecture-specific MM context */ mm_context_t context; unsigned long flags; /* Must use atomic bitops to access */ struct core_state *core_state; /* coredumping support */ #ifdef CONFIG_MEMBARRIER atomic_t membarrier_state; #endif #ifdef CONFIG_AIO spinlock_t ioctx_lock; struct kioctx_table __rcu *ioctx_table; #endif #ifdef CONFIG_MEMCG /* * "owner" points to a task that is regarded as the canonical * user/owner of this mm. All of the following must be true in * order for it to be changed: * * current == mm->owner * current->mm != mm * new_owner->mm == mm * new_owner->alloc_lock is held */ struct task_struct __rcu *owner; #endif struct user_namespace *user_ns; /* store ref to file /proc/<pid>/exe symlink points to */ struct file __rcu *exe_file; #ifdef CONFIG_MMU_NOTIFIER struct mmu_notifier_mm *mmu_notifier_mm; #endif #if defined(CONFIG_TRANSPARENT_HUGEPAGE) && !USE_SPLIT_PMD_PTLOCKS pgtable_t pmd_huge_pte; /* protected by page_table_lock */ #endif #ifdef CONFIG_NUMA_BALANCING /* * numa_next_scan is the next time that the PTEs will be marked * pte_numa. NUMA hinting faults will gather statistics and * migrate pages to new nodes if necessary. */ unsigned long numa_next_scan; /* Restart point for scanning and setting pte_numa */ unsigned long numa_scan_offset; /* numa_scan_seq prevents two threads setting pte_numa */ int numa_scan_seq; #endif /* * An operation with batched TLB flushing is going on. Anything * that can move process memory needs to flush the TLB when * moving a PROT_NONE or PROT_NUMA mapped page. */ atomic_t tlb_flush_pending; #ifdef CONFIG_ARCH_WANT_BATCHED_UNMAP_TLB_FLUSH /* See flush_tlb_batched_pending() */ bool tlb_flush_batched; #endif struct uprobes_state uprobes_state; #ifdef CONFIG_HUGETLB_PAGE atomic_long_t hugetlb_usage; #endif struct work_struct async_put_work; #if IS_ENABLED(CONFIG_HMM) /* HMM needs to track a few things per mm */ struct hmm *hmm; #endif } __randomize_layout; /* * The mm_cpumask needs to be at the end of mm_struct, because it * is dynamically sized based on nr_cpu_ids. */ unsigned long cpu_bitmap[]; };

mm_struct包含了运行一个进程所需的所有内存方面的描述。启动进程需要的可执行文件exe_file,程序的代码段数据段要放到内存中,由start_code, end_code, start_data, end_data表示;进程使用的内存,malloc用的堆由start_brk, brk表示,mmap空间用mmap_base表示,stack由start_stack表示。这样就构成了进程地址空间的视图。访问内存需要页表,页表的基地址放在pgd中。所有进程的mm_struct会链接起来。与栈空间不同,描述mmap空间有一个专门的数据结构vm_area_struct,它代表mmap空间的一段区域,也用来描述代码段,数据段。mm_struct中的mmap字段就是指向进程某个VMA的指针,VMA内有prev和next指针可以方便的遍历所有VMA。所有VMA又通过rbtree组织起来。

图片来自《奔跑吧linux内核卷一》

struct vm_area_struct { /* The first cache line has the info for VMA tree walking. */ unsigned long vm_start; /* Our start address within vm_mm. */ unsigned long vm_end; /* The first byte after our end address within vm_mm. */ /* linked list of VM areas per task, sorted by address */ struct vm_area_struct *vm_next, *vm_prev; struct rb_node vm_rb; /* * Largest free memory gap in bytes to the left of this VMA. * Either between this VMA and vma->vm_prev, or between one of the * VMAs below us in the VMA rbtree and its ->vm_prev. This helps * get_unmapped_area find a free area of the right size. */ unsigned long rb_subtree_gap; /* Second cache line starts here. */ struct mm_struct *vm_mm; /* The address space we belong to. */ pgprot_t vm_page_prot; /* Access permissions of this VMA. */ unsigned long vm_flags; /* Flags, see mm.h. */ /* * For areas with an address space and backing store, * linkage into the address_space->i_mmap interval tree. */ struct { struct rb_node rb; unsigned long rb_subtree_last; } shared; /* * A file's MAP_PRIVATE vma can be in both i_mmap tree and anon_vma * list, after a COW of one of the file pages. A MAP_SHARED vma * can only be in the i_mmap tree. An anonymous MAP_PRIVATE, stack * or brk vma (with NULL file) can only be in an anon_vma list. */ struct list_head anon_vma_chain; /* Serialized by mmap_sem & * page_table_lock */ struct anon_vma *anon_vma; /* Serialized by page_table_lock */ /* Function pointers to deal with this struct. */ const struct vm_operations_struct *vm_ops; /* Information about our backing store: */ unsigned long vm_pgoff; /* Offset (within vm_file) in PAGE_SIZE units */ struct file * vm_file; /* File we map to (can be NULL). */ void * vm_private_data; /* was vm_pte (shared mem) */ atomic_long_t swap_readahead_info; #ifndef CONFIG_MMU struct vm_region *vm_region; /* NOMMU mapping region */ #endif #ifdef CONFIG_NUMA struct mempolicy *vm_policy; /* NUMA policy for the VMA */ #endif struct vm_userfaultfd_ctx vm_userfaultfd_ctx; } __randomize_layout;

vm_area_struct可以描述一个VMA区域的起始地址和大小,区域的属性,由vm_flags表示。下面是属性列表:

#define VM_NONE 0x00000000 #define VM_READ 0x00000001 //可读 #define VM_WRITE 0x00000002 //可写 #define VM_EXEC 0x00000004 //可执行 #define VM_SHARED 0x00000008 //多进程共享 /* mprotect() hardcodes VM_MAYREAD >> 4 == VM_READ, and so for r/w/x bits. */ #define VM_MAYREAD 0x00000010 /* limits for mprotect() etc */ #define VM_MAYWRITE 0x00000020 #define VM_MAYEXEC 0x00000040 #define VM_MAYSHARE 0x00000080 #define VM_GROWSDOWN 0x00000100 /* general info on the segment */ #ifdef CONFIG_MMU #define VM_UFFD_MISSING 0x00000200 /* missing pages tracking */ #else /* CONFIG_MMU */ #define VM_MAYOVERLAY 0x00000200 /* nommu: R/O MAP_PRIVATE mapping that might overlay a file mapping */ #define VM_UFFD_MISSING 0 #endif /* CONFIG_MMU */ #define VM_PFNMAP 0x00000400 /* Page-ranges managed without "struct page", just pure PFN */ #define VM_UFFD_WP 0x00001000 /* wrprotect pages tracking */ #define VM_LOCKED 0x00002000 #define VM_IO 0x00004000 /* Memory mapped I/O or similar */ 。。。

vm_flags只是从caller得到的属性需求,要想落实这些属性必须转换成pte可以识别的格式,VMA还有一个vm_page_prot,用来表示pte的属性,他是一个pgprot_t类型的变量,而pgprot_t本质是pteval_t。函数vm_get_page_pro用来完成从vm_flags到vm_page_prot的转换。

pgprot_t vm_get_page_prot(unsigned long vm_flags) { pgprot_t ret = __pgprot(pgprot_val(protection_map[vm_flags & (VM_READ|VM_WRITE|VM_EXEC|VM_SHARED)]) | pgprot_val(arch_vm_get_page_prot(vm_flags))); return arch_filter_pgprot(ret); }

pgprot_t protection_map[16] __ro_after_init = { __P000, __P001, __P010, __P011, __P100, __P101, __P110, __P111, __S000, __S001, __S010, __S011, __S100, __S101, __S110, __S111 }; #define __P000 PAGE_NONE #define __P001 PAGE_READONLY #define __P010 PAGE_READONLY #define __P011 PAGE_READONLY #define __P100 PAGE_EXECONLY #define __P101 PAGE_READONLY_EXEC #define __P110 PAGE_READONLY_EXEC #define __P111 PAGE_READONLY_EXEC #define __S000 PAGE_NONE #define __S001 PAGE_READONLY #define __S010 PAGE_SHARED #define __S011 PAGE_SHARED #define __S100 PAGE_EXECONLY #define __S101 PAGE_READONLY_EXEC #define __S110 PAGE_SHARED_EXEC #define __S111 PAGE_SHARED_EXEC

看一下有关VMA的操作。

find_vma

/* Look up the first VMA which satisfies addr < vm_end, NULL if none. */ struct vm_area_struct *find_vma(struct mm_struct *mm, unsigned long addr) { struct rb_node *rb_node; struct vm_area_struct *vma; /* Check the cache first. */ vma = vmacache_find(mm, addr); if (likely(vma)) return vma; rb_node = mm->mm_rb.rb_node; while (rb_node) { struct vm_area_struct *tmp; tmp = rb_entry(rb_node, struct vm_area_struct, vm_rb); if (tmp->vm_end > addr) { vma = tmp; if (tmp->vm_start <= addr) break; rb_node = rb_node->rb_left; } else rb_node = rb_node->rb_right; } if (vma) vmacache_update(addr, vma); return vma; }

VMA可能存在与vmacache中,该变量存在于当前进程的vmacache变量。

struct task_struct { ... /* Per-thread vma caching: */ struct vmacache vmacache; ... } struct vmacache { u64 seqnum; struct vm_area_struct *vmas[VMACACHE_SIZE]; }; #define VMACACHE_BITS 2 #define VMACACHE_SIZE (1U << VMACACHE_BITS)

每个进程可以保存最多4个VMA。

如果vma缓存中没有找到,则区vma的rbtree中找。找到后还要更新一下vmacache,这利用了时间局部性,最近访问的数据放入缓存中,稍后还会用到。

插入VMA,insert_vm_struct.

/* Insert vm structure into process list sorted by address * and into the inode's i_mmap tree. If vm_file is non-NULL * then i_mmap_rwsem is taken here. */ int insert_vm_struct(struct mm_struct *mm, struct vm_area_struct *vma) { struct vm_area_struct *prev; struct rb_node **rb_link, *rb_parent;

// 在rbtree中找到要插入的位置 if (find_vma_links(mm, vma->vm_start, vma->vm_end, &prev, &rb_link, &rb_parent)) return -ENOMEM; /* * The vm_pgoff of a purely anonymous vma should be irrelevant * until its first write fault, when page's anon_vma and index * are set. But now set the vm_pgoff it will almost certainly * end up with (unless mremap moves it elsewhere before that * first wfault), so /proc/pid/maps tells a consistent story. * * By setting it to reflect the virtual start address of the * vma, merges and splits can happen in a seamless way, just * using the existing file pgoff checks and manipulations. * Similarly in do_mmap_pgoff and in do_brk. */

//没明白 if (vma_is_anonymous(vma)) { BUG_ON(vma->anon_vma); vma->vm_pgoff = vma->vm_start >> PAGE_SHIFT; } //将vma插入vma 链表和rbtree vma_link(mm, vma, prev, rb_link, rb_parent); return 0; }

最新的kernel(v6.8)在描述VMA有关的部分已经有了变化(改动发生在之前的某个版本)。

struct mm_struct {

struct {

...

struct maple_tree mm_mt;

...

}

maple_tree结构的mm_mt取代了原来的原始的vm_area_struct 结构mmap。相应的VMA有关的操作也发生了很大的变化。

查找VMA,find_vma.

/**

* find_vma() - Find the VMA for a given address, or the next VMA.

* @mm: The mm_struct to check

* @addr: The address

*

* Returns: The VMA associated with addr, or the next VMA.

* May return %NULL in the case of no VMA at addr or above.

*/

struct vm_area_struct *find_vma(struct mm_struct *mm, unsigned long addr)

{

unsigned long index = addr;

mmap_assert_locked(mm);

return mt_find(&mm->mm_mt, &index, ULONG_MAX);

}

很简单的调用maple tree的接口去实现查找。maple tree的详细信息可以看Documentation/core-api/maple_tree.rst

浙公网安备 33010602011771号

浙公网安备 33010602011771号