Deploying CoreDNS on Kubernetes

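DNS-based service discovery in Kubernetes

Within a Kubernetes cluster, the recommended way to reach a service is by its Service name. That requires a cluster-wide DNS service that resolves a Service name to its cluster IP. This is what Kubernetes calls DNS-based service discovery.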

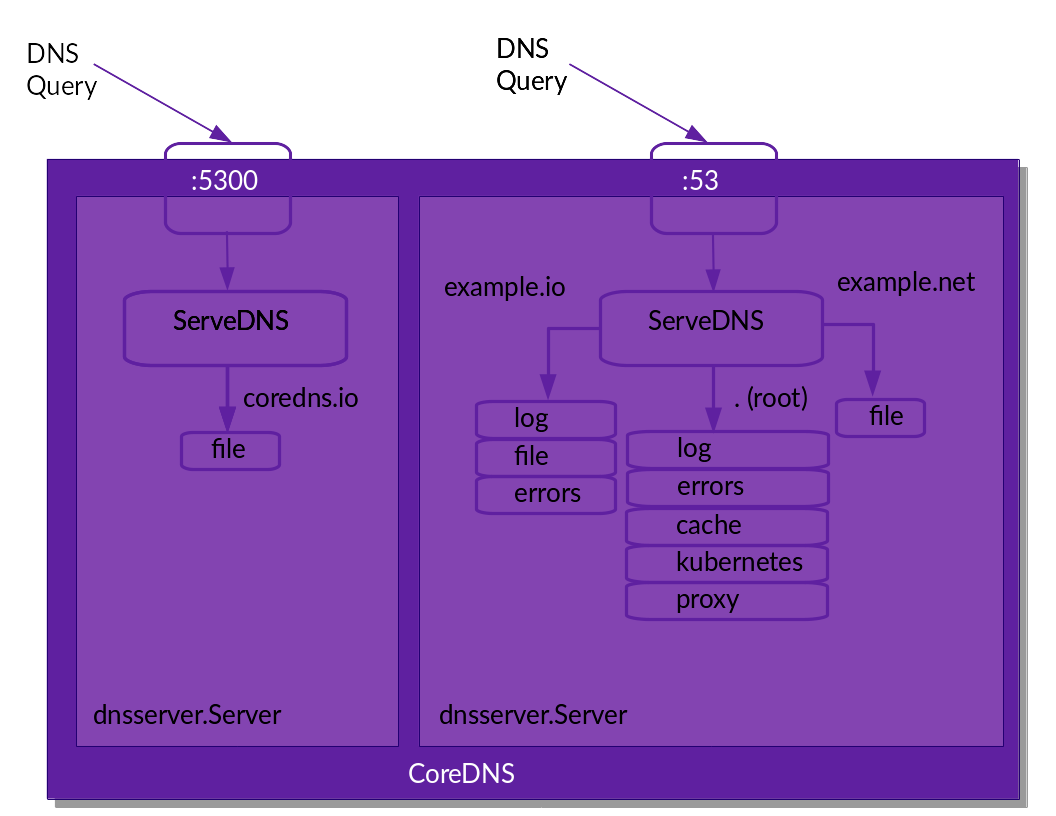

CoreDNS architecture

DNS record formats

1. DNS records for Service resources

A record: <service>.<ns>.svc.<zone>. <ttl> IN A <cluster-ip>

Example name: my-svc.my-namespace.svc.cluster.local

SRV record: _<port>._<proto>.<service>.<ns>.svc.<zone>. <ttl> IN SRV <weight> <priority> <port-number> <service>.<ns>.svc.<zone>.

Example name: _my-port-name._my-port-protocol.my-svc.my-namespace.svc.cluster.local
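The naming rules above are plain string concatenation, which a small shell sketch can make concrete. The function names and the ZONE variable are made up for illustration, and the port name https is a hypothetical example; the zone defaults to cluster.local, as used throughout this post.

```shell
# Build the DNS names for a ClusterIP Service.
# ZONE is an illustrative variable; it defaults to the cluster.local zone.
ZONE="${ZONE:-cluster.local}"

# svc_fqdn <service> <namespace>: name of the A record
svc_fqdn() {
  printf '%s.%s.svc.%s\n' "$1" "$2" "$ZONE"
}

# svc_srv_name <port-name> <protocol> <service> <namespace>: name of the SRV record
svc_srv_name() {
  printf '_%s._%s.%s\n' "$1" "$2" "$(svc_fqdn "$3" "$4")"
}

svc_fqdn my-svc my-namespace
svc_srv_name https tcp my-svc my-namespace
```

The two calls print my-svc.my-namespace.svc.cluster.local and _https._tcp.my-svc.my-namespace.svc.cluster.local.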

2. DNS records for headless Service resources

A record: <service>.<ns>.svc.<zone>. <ttl> IN A <endpoint-ip>

SRV record: _<port>._<proto>.<service>.<ns>.svc.<zone>. <ttl> IN SRV <weight> <priority> <port-number> <hostname>.<service>.<ns>.svc.<zone>.

PTR record: <d>.<c>.<b>.<a>.in-addr.arpa. <ttl> IN PTR <hostname>.<service>.<ns>.svc.<zone>.
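The PTR owner name is simply the IPv4 address with its octets reversed, followed by in-addr.arpa. A minimal sketch, assuming a hypothetical endpoint IP of 10.244.1.5 (the function name is also made up for illustration):

```shell
# ptr_name <ipv4>: reverse-lookup name under in-addr.arpa
ptr_name() {
  # Split on dots and emit the octets in reverse order.
  printf '%s\n' "$1" | awk -F. '{ print $4 "." $3 "." $2 "." $1 ".in-addr.arpa" }'
}

ptr_name 10.244.1.5   # prints 5.1.244.10.in-addr.arpa
```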

3. A Service of type ExternalName needs a CNAME record

CNAME record: <service>.<ns>.svc.<zone>. <ttl> IN CNAME <extname>.
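To see a CNAME record in practice, one option is to create an ExternalName Service and query it. The sketch below only writes the manifest. The Service name my-ext-svc, the file name, and the target db.example.com are all hypothetical placeholders.

```shell
# Write a manifest for a hypothetical ExternalName Service.
cat > externalname-svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: my-ext-svc
  namespace: default
spec:
  type: ExternalName
  externalName: db.example.com
EOF

# Apply it with: kubectl apply -f externalname-svc.yaml
# The record to expect, following the format above:
#   my-ext-svc.default.svc.cluster.local. IN CNAME db.example.com.
```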

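Deployment

Download the manifest template and the deploy script from the coredns/deployment repository, generate a manifest with 10.96.0.10 as the cluster DNS IP, and apply it:

    wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/coredns.yaml.sed
    wget https://raw.githubusercontent.com/coredns/deployment/master/kubernetes/deploy.sh
    chmod +x deploy.sh
    ./deploy.sh -i 10.96.0.10 >coredns.yml
    kubectl apply -f coredns.yml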

The coredns.yml file

    cat coredns.yml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: coredns
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    rules:
      - apiGroups:
        - ""
        resources:
        - endpoints
        - services
        - pods
        - namespaces
        verbs:
        - list
        - watch
      - apiGroups:
        - discovery.k8s.io
        resources:
        - endpointslices
        verbs:
        - list
        - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:coredns
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:coredns
    subjects:
    - kind: ServiceAccount
      name: coredns
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: coredns
      namespace: kube-system
    data:
      Corefile: |
        .:53 {
            errors
            health {
              lameduck 5s
            }
            ready
            kubernetes cluster.local in-addr.arpa ip6.arpa {
              fallthrough in-addr.arpa ip6.arpa
            }
            prometheus :9153
            forward . /etc/resolv.conf {
              max_concurrent 1000
            }
            cache 30
            loop
            reload
            loadbalance
        }
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: coredns
      namespace: kube-system
      labels:
        k8s-app: kube-dns
        kubernetes.io/name: "CoreDNS"
    spec:
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: kube-dns
      template:
        metadata:
          labels:
            k8s-app: kube-dns
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: coredns
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
          nodeSelector:
            kubernetes.io/os: linux
          affinity:
            podAntiAffinity:
              preferredDuringSchedulingIgnoredDuringExecution:
              - weight: 100
                podAffinityTerm:
                  labelSelector:
                    matchExpressions:
                      - key: k8s-app
                        operator: In
                        values: ["kube-dns"]
                  topologyKey: kubernetes.io/hostname
          containers:
          - name: coredns
            image: coredns/coredns:1.8.4
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                memory: 170Mi
              requests:
                cpu: 100m
                memory: 70Mi
            args: [ "-conf", "/etc/coredns/Corefile" ]
            volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
            ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
            securityContext:
              allowPrivilegeEscalation: false
              capabilities:
                add:
                - NET_BIND_SERVICE
                drop:
                - all
              readOnlyRootFilesystem: true
            livenessProbe:
              httpGet:
                path: /health
                port: 8080
                scheme: HTTP
              initialDelaySeconds: 60
              timeoutSeconds: 5
              successThreshold: 1
              failureThreshold: 5
            readinessProbe:
              httpGet:
                path: /ready
                port: 8181
                scheme: HTTP
          dnsPolicy: Default
          volumes:
            - name: config-volume
              configMap:
                name: coredns
                items:
                - key: Corefile
                  path: Corefile
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: kube-dns
      namespace: kube-system
      annotations:
        prometheus.io/port: "9153"
        prometheus.io/scrape: "true"
      labels:
        k8s-app: kube-dns
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "CoreDNS"
    spec:
      selector:
        k8s-app: kube-dns
      clusterIP: 10.96.0.10
      ports:
      - name: dns
        port: 53
        protocol: UDP
      - name: dns-tcp
        port: 53
        protocol: TCP
      - name: metrics
        port: 9153
        protocol: TCP
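After applying the manifest, it helps to confirm that the rollout finished and that the kube-dns Service has the expected cluster IP. The helper below is a sketch, not part of the original procedure: the function name and the 120-second timeout are arbitrary choices, while the resource names and the label come from the manifest above.

```shell
# check_coredns: show rollout status, the CoreDNS Pods, and the kube-dns Service.
check_coredns() {
  kubectl -n kube-system rollout status deployment/coredns --timeout=120s &&
  kubectl -n kube-system get pods -l k8s-app=kube-dns -o wide &&
  kubectl -n kube-system get service kube-dns
}

# Usage, on a machine with kubectl pointed at the cluster:
#   check_coredns
```

If the rollout does not complete, kubectl -n kube-system logs -l k8s-app=kube-dns is usually the next place to look.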

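CoreDNS ConfigMap options

- upstream: used to resolve Services that point to external hosts (external Services). It does not appear in the Corefile above.

- prometheus: CoreDNS metrics are available at http://localhost:9153/metrics in Prometheus format.

- forward: any query that is not within the Kubernetes cluster domain is forwarded to the predefined resolvers (/etc/resolv.conf).

- cache: enables a frontend cache. In the Corefile above, cache 30 caps the cache TTL at 30 seconds.

- loop: detects simple forwarding loops and halts the CoreDNS process if one is found.

- reload: allows a changed Corefile to be reloaded automatically. After editing the ConfigMap, allow about two minutes for the change to take effect.

- loadbalance: a round-robin DNS load balancer that randomizes the order of A, AAAA, and MX records in the answer.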
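To check which of these plugins a running cluster actually has enabled, the Corefile can be read back from the ConfigMap and reduced to its top-level directives. This is a rough sketch: both function names are made up, and the awk filter assumes the simple layout used in this post (one server block, with braces at line ends).

```shell
# show_corefile: print the Corefile stored in the coredns ConfigMap.
show_corefile() {
  kubectl -n kube-system get configmap coredns -o jsonpath='{.data.Corefile}'
}

# list_plugins: read a Corefile on stdin and print the plugin names found
# directly inside the server block (nested options such as lameduck are skipped).
list_plugins() {
  awk '
    /[{][ \t]*$/ { depth++; if (depth == 2) print $1; next }  # line opens a block
    /^[ \t]*[}]/ { depth--; next }                            # line closes a block
    depth == 1 && NF { print $1 }                             # one-line plugin
  '
}

# Usage against a cluster:
#   show_corefile | list_plugins
```

For the Corefile in this post, the output would be errors, health, ready, kubernetes, prometheus, forward, cache, loop, reload, and loadbalance, one per line.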

Testing

Create a busybox Pod to run DNS queries from, then check that it is running:

    cat >busybox.yaml<<EOF
    apiVersion: v1
    kind: Pod
    metadata:
      name: busybox
      namespace: default
    spec:
      containers:
      - name: busybox
        image: busybox:1.28
        command:
        - sleep
        - "3600"
        imagePullPolicy: IfNotPresent
      restartPolicy: Always
    EOF
    kubectl create -f busybox.yaml
    kubectl get pods busybox

Check that the Pod's resolver points at the CoreDNS Service IP:

    [root@k8s-master coredns]# kubectl exec busybox -- cat /etc/resolv.conf
    nameserver 10.96.0.10
    search default.svc.cluster.local svc.cluster.local cluster.local
    options ndots:5
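The search list and ndots:5 explain why a short name such as kubernetes.default resolves at all. The sketch below is a simplified model of how a glibc-style resolver expands a name into candidate queries. The function and variable names are made up, and the resolver inside a given image may behave somewhat differently.

```shell
# Values taken from the resolv.conf output above.
SEARCH="default.svc.cluster.local svc.cluster.local cluster.local"
NDOTS=5

# resolv_candidates <name>: print the fully qualified names tried, in order.
resolv_candidates() {
  name=$1
  # A trailing dot marks the name as fully qualified: the search list is skipped.
  case $name in
    *.) printf '%s\n' "$name"; return ;;
  esac
  # Count the dots in the name (arithmetic expansion strips wc padding).
  dots=$(( $(printf '%s' "$name" | tr -cd '.' | wc -c) ))
  if [ "$dots" -ge "$NDOTS" ]; then
    printf '%s.\n' "$name"            # enough dots: tried as-is first
  fi
  for domain in $SEARCH; do
    printf '%s.%s.\n' "$name" "$domain"
  done
  if [ "$dots" -lt "$NDOTS" ]; then
    printf '%s.\n' "$name"            # too few dots: tried as-is last
  fi
}

resolv_candidates kubernetes.default
```

For kubernetes.default, the second candidate is kubernetes.default.svc.cluster.local., which is the name that exists in the cluster. The first candidate fails, which is why short names can cost an extra query.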

Resolve the kubernetes Service from inside the Pod:

    [root@k8s-master coredns]# kubectl exec -ti busybox -- nslookup kubernetes.default
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      kubernetes.default
    Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Author: 南辞、归

All articles on this blog are intended for study, research, and discussion only. Non-commercial reposting is welcome.

