[Ceph] Async network communication source code analysis: a close reading


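Preface

Starting with the Luminous (L) release, Ceph uses the Async network communication model as its default messenger. Async implements I/O multiplexing and uses a shared thread pool to carry out asynchronous send and receive tasks.

This article covers the epoll + thread pool implementation of Async, focusing on the basic framework and the key parts of the implementation.

It first gives an overview of the relevant classes, concentrating on their data structures and operations.

It then walks through the core network flows: how the server side listens on a socket and accepts connections, how a client actively initiates a connection, and the main paths for sending and receiving messages.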

Core classes

NetHandler

The NetHandler class wraps the basic socket operations.

class NetHandler {
  int generic_connect(const entity_addr_t& addr,
                      const entity_addr_t& bind_addr,
                      bool nonblock);

  CephContext *cct;

 public:
  explicit NetHandler(CephContext *c): cct(c) {}

  // Create a socket
  int create_socket(int domain, bool reuse_addr=false);

  // Put the socket into non-blocking mode
  int set_nonblock(int sd);

  // Mark the socket close-on-exec, so it is closed when a child process is started with exec
  void set_close_on_exec(int sd);

  // Set socket options: nodelay, buffer size
  int set_socket_options(int sd, bool nodelay, int size);

  // Connect
  int connect(const entity_addr_t &addr, const entity_addr_t& bind_addr);

  // Reconnect
  int reconnect(const entity_addr_t &addr, int sd);

  // Non-blocking connect
  int nonblock_connect(const entity_addr_t &addr, const entity_addr_t& bind_addr);

  // Set the priority
  void set_priority(int sd, int priority);
};

Worker

The Worker class is the abstract interface for a worker thread. It also declares listen and connect, used for server-side and client-side network handling respectively. Each Worker owns an EventCenter, which holds the events that the worker processes.

class Worker {
  std::atomic_uint references;
  EventCenter center;  // event center; handles all events registered with it

  // server side
  virtual int listen(entity_addr_t &addr,
                     const SocketOptions &opts, ServerSocket *) = 0;

  // client side: actively connect
  virtual int connect(const entity_addr_t &addr,
                      const SocketOptions &opts, ConnectedSocket *socket) = 0;
};

PosixWorker

The PosixWorker class implements the Worker interface.

class PosixWorker : public Worker {
  NetHandler net;

  int listen(entity_addr_t &sa,
             const SocketOptions &opt, ServerSocket *socks) override;

  int connect(const entity_addr_t &addr,
              const SocketOptions &opts,
              ConnectedSocket *socket) override;
};

int PosixWorker::listen(entity_addr_t &sa, const SocketOptions &opt, ServerSocket *sock)

PosixWorker::listen implements the server side of a socket: it calls into NetHandler to perform the socket bind and listen operations, and finally hands back a ServerSocket object through the sock parameter.

int PosixWorker::connect(const entity_addr_t &addr, const SocketOptions &opts, ConnectedSocket *socket)

PosixWorker::connect implements an active connection request and hands back a ConnectedSocket object through the socket parameter.

NetworkStack

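class NetworkStack : public CephContext::ForkWatcher {
  std::string type;  // type of this NetworkStack
  ceph::spinlock pool_spin;
  bool started = false;

  // the Worker pool
  unsigned num_workers = 0;
  vector<Worker*> workers;
};

NetworkStack is the interface to a network protocol stack. PosixNetworkStack implements it on top of the Linux TCP/IP stack, DPDKStack on top of DPDK, and RDMAStack on top of InfiniBand (IB).

class PosixNetworkStack : public NetworkStack {
  vector<int> coreids;
  vector<std::thread> threads;  // thread pool
};

A Worker can be thought of as a worker thread: each Worker corresponds to one std::thread. To stay compatible with the design of the other stacks, the threads themselves are defined in the PosixNetworkStack class.

To summarize, each Worker corresponds to one thread and to one EventCenter.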

EventDriver

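EventDriver is an abstract interface that defines the operations for adding an event watch, removing an event watch, and retrieving the events that have fired.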

class EventDriver {

public:

virtual ~EventDriver() {} // we want a virtual destructor!!!

virtual int init(EventCenter *center, int nevent) = 0;

virtual int add_event(int fd, int cur_mask, int mask) = 0;

virtual int del_event(int fd, int cur_mask, int del_mask) = 0;

virtual int event_wait(vector<FiredFileEvent> &fired_events, struct timeval *tp) = 0;

virtual int resize_events(int newsize) = 0;

virtual bool need_wakeup() { return true; }

};

A separate class implements each I/O multiplexing mechanism: SelectDriver uses select, EpollDriver uses epoll, and KqueueDriver uses the kqueue event model found on FreeBSD.
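To make the driver interface more concrete, here is a minimal sketch of what an epoll-backed driver could look like. It is not Ceph's EpollDriver: the class name EpollDriverSketch, the struct FiredEventSketch, and the constants kReadable and kWritable are hypothetical, the EventCenter argument of init is dropped, and the timeout is given in milliseconds rather than as a struct timeval. It assumes Linux.

```cpp
#include <cassert>
#include <sys/epoll.h>
#include <unistd.h>
#include <vector>

// Hypothetical event masks, standing in for EVENT_READABLE / EVENT_WRITABLE.
constexpr int kReadable = 1;
constexpr int kWritable = 2;

struct FiredEventSketch { int fd; int mask; };

class EpollDriverSketch {
  int epfd = -1;
  std::vector<epoll_event> events;  // buffer filled by epoll_wait

  static unsigned to_epoll(int mask) {
    unsigned e = 0;
    if (mask & kReadable) e |= EPOLLIN;
    if (mask & kWritable) e |= EPOLLOUT;
    return e;
  }

 public:
  ~EpollDriverSketch() { if (epfd >= 0) ::close(epfd); }

  int init(int nevent) {
    events.resize(nevent);
    epfd = ::epoll_create1(0);
    return epfd < 0 ? -1 : 0;
  }

  // cur_mask is what is already registered for fd, add_mask is what to add.
  int add_event(int fd, int cur_mask, int add_mask) {
    epoll_event ee{};
    ee.events = to_epoll(cur_mask | add_mask);
    ee.data.fd = fd;
    int op = cur_mask ? EPOLL_CTL_MOD : EPOLL_CTL_ADD;
    return ::epoll_ctl(epfd, op, fd, &ee) < 0 ? -1 : 0;
  }

  // Remove del_mask; drop the fd from epoll entirely once nothing is left.
  int del_event(int fd, int cur_mask, int del_mask) {
    epoll_event ee{};
    int mask = cur_mask & ~del_mask;
    ee.events = to_epoll(mask);
    ee.data.fd = fd;
    int op = mask ? EPOLL_CTL_MOD : EPOLL_CTL_DEL;
    return ::epoll_ctl(epfd, op, fd, &ee) < 0 ? -1 : 0;
  }

  // Appends fired events to `fired`; returns the epoll_wait result.
  int event_wait(std::vector<FiredEventSketch>& fired, int timeout_ms) {
    int n = ::epoll_wait(epfd, events.data(),
                         static_cast<int>(events.size()), timeout_ms);
    for (int i = 0; i < n; ++i) {
      int mask = 0;
      if (events[i].events & (EPOLLIN | EPOLLERR | EPOLLHUP)) mask |= kReadable;
      if (events[i].events & EPOLLOUT) mask |= kWritable;
      fired.push_back({events[i].data.fd, mask});
    }
    return n;
  }
};
```

Passing cur_mask into add_event and del_event lets the driver choose between EPOLL_CTL_ADD, EPOLL_CTL_MOD and EPOLL_CTL_DEL without keeping its own per-fd table, which matches the shape of the add_event and del_event signatures in the interface.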

EventCenter

The EventCenter class holds the events (file events, time events, and external events) together with the functions that process them.


class EventCenter {
  // External events
  std::mutex external_lock;
  std::atomic_ulong external_num_events;
  deque<EventCallbackRef> external_events;

  // Socket events, indexed by the socket's fd
  vector<FileEvent> file_events;  // FileEvent

  // Time events: [expire time point, TimeEvent]
  std::multimap<clock_type::time_point, TimeEvent> time_events;  // TimeEvent

  // Map of time events: [id, iterator into [expire time point, time_event]]
  std::map<uint64_t,
           std::multimap<clock_type::time_point, TimeEvent>::iterator> event_map;

  // fds used to trigger the processing of external events
  int notify_receive_fd;
  int notify_send_fd;
  EventCallbackRef notify_handler;

  // Underlying event monitoring mechanism
  EventDriver *driver;
  NetHandler net;

  // Used by internal thread

  // Create a file event
  int create_file_event(int fd, int mask, EventCallbackRef ctxt);

  // Create a time event
  uint64_t create_time_event(uint64_t milliseconds, EventCallbackRef ctxt);

  // Delete a file event
  void delete_file_event(int fd, int mask);

  // Delete a time event
  void delete_time_event(uint64_t id);

  // Process events
  int process_events(int timeout_microseconds);

  // Wake up the processing thread
  void wakeup();

  // Used by external thread
  void dispatch_event_external(EventCallbackRef e);
};

1) FileEvent

A FileEvent is the event associated with a socket.

struct FileEvent {
  int mask;                   // event mask (flags)
  EventCallbackRef read_cb;   // callback that handles reads
  EventCallbackRef write_cb;  // callback that handles writes
  FileEvent(): mask(0), read_cb(NULL), write_cb(NULL) {}
};

2) TimeEvent

struct TimeEvent {
  uint64_t id;               // ID of the time event
  EventCallbackRef time_cb;  // callback that handles the event
  TimeEvent(): id(0), time_cb(NULL) {}
};
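The two containers that EventCenter keeps for time events (a multimap ordered by expiry time, plus an id-to-iterator map for cancellation) can be illustrated with a small standalone sketch. This is not Ceph code: TimeEventsSketch and TimeEventSketch are hypothetical names, callbacks are std::function rather than EventCallbackRef, and the caller passes absolute time points instead of a millisecond delay.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>

using clock_type = std::chrono::steady_clock;

struct TimeEventSketch {
  uint64_t id;
  std::function<void()> time_cb;
};

class TimeEventsSketch {
  using EventMap = std::multimap<clock_type::time_point, TimeEventSketch>;
  EventMap time_events;                            // ordered by expiry time
  std::map<uint64_t, EventMap::iterator> event_map;  // id -> position, for O(log n) cancel
  uint64_t next_id = 0;

 public:
  // Register a callback to run at `expire`; returns the new event's id.
  uint64_t create(clock_type::time_point expire, std::function<void()> cb) {
    uint64_t id = ++next_id;
    auto it = time_events.emplace(expire, TimeEventSketch{id, std::move(cb)});
    event_map[id] = it;
    return id;
  }

  // Cancel a pending event; unknown ids are ignored.
  void remove(uint64_t id) {
    auto i = event_map.find(id);
    if (i == event_map.end()) return;
    time_events.erase(i->second);
    event_map.erase(i);
  }

  // Run every event whose expiry is <= now, earliest first.
  int process(clock_type::time_point now) {
    int processed = 0;
    while (!time_events.empty() && time_events.begin()->first <= now) {
      auto it = time_events.begin();
      TimeEventSketch ev = std::move(it->second);
      event_map.erase(ev.id);
      time_events.erase(it);  // erase before the callback so it may re-register
      ev.time_cb();
      ++processed;
    }
    return processed;
  }

  std::size_t pending() const { return time_events.size(); }
};
```

The multimap keeps the nearest expiry at begin(), which is what the event loop needs when it computes its wait timeout, while the second map makes deleting by id cheap without scanning.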

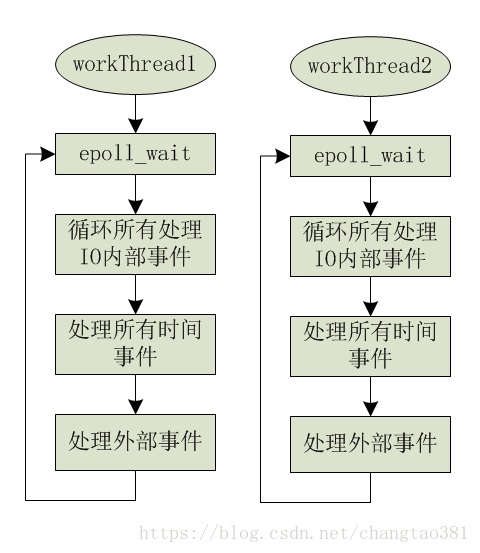

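Processing events

int EventCenter::process_events(int timeout_microseconds, ceph::timespan *working_dur)

process_events handles the pending events as follows:

- If there are external events, or the center is in poller mode, the blocking time (the epoll_wait timeout) is set to 0.

- Otherwise the timeout defaults to the timeout_microseconds argument. If a time event is pending and its expiry time falls within that timeout, the timeout is shortened to the interval from now to the expiry time, and the trigger_time flag is set to true so that time events are processed afterwards.

- Call epoll_wait to collect the fired events, then loop over them and invoke the matching callbacks.

- Process the time events that have expired.

- Process all external events.

Here, internal events are the ones obtained through epoll_wait. External events are posted from elsewhere, for example to handle an active connect or to trigger the sending of a newly queued message.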
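The timeout selection in the first two steps can be written as a small pure function. The sketch below follows the description above rather than the Ceph source: plan_wait and WaitPlanSketch are hypothetical names, and setting trigger_time in the non-blocking branch when a time event has already expired is an assumption of this sketch.

```cpp
#include <cassert>
#include <chrono>

using clock_type = std::chrono::steady_clock;
using std::chrono::microseconds;

struct WaitPlanSketch {
  microseconds timeout;  // how long the driver may block
  bool trigger_time;     // whether time events should be processed afterwards
};

// Hypothetical helper: decide how long to block in the event driver.
WaitPlanSketch plan_wait(bool has_external_events, bool poller_mode,
                         microseconds requested,
                         bool has_time_event,
                         clock_type::time_point nearest_expiry,
                         clock_type::time_point now) {
  // Step 1: never block while external events or pollers are pending.
  if (has_external_events || poller_mode) {
    // Assumption: already-expired time events are still flagged here.
    return {microseconds(0), has_time_event && nearest_expiry <= now};
  }
  // Step 2: default to the requested timeout, shortened by the nearest timer.
  if (has_time_event && nearest_expiry <= now + requested) {
    if (nearest_expiry <= now) return {microseconds(0), true};
    return {std::chrono::duration_cast<microseconds>(nearest_expiry - now), true};
  }
  return {requested, false};
}
```

For example, with a requested timeout of 30000 microseconds and a timer due in 10000 microseconds, the function returns a 10000 microsecond wait with trigger_time set; with a timer due in 50000 microseconds it returns the full 30000 microseconds and leaves trigger_time false.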

EventCenter defines two ways to post an external event to it:

// Post an event handler of type EventCallback directly
void EventCenter::dispatch_event_external(EventCallbackRef e)

// Post an event handler given as a callable of type func
void submit_to(int i, func &&f, bool nowait = false)
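The interplay between the external event queue and the notify fds can be sketched as follows. This is an illustration, not the Ceph implementation: ExternalQueueSketch is a hypothetical class, callbacks are std::function, and it assumes that notify_receive_fd and notify_send_fd are the two ends of a pipe whose read end the worker also watches in its event driver.

```cpp
#include <cassert>
#include <deque>
#include <fcntl.h>
#include <functional>
#include <mutex>
#include <unistd.h>
#include <utility>

class ExternalQueueSketch {
  std::mutex external_lock;
  std::deque<std::function<void()>> external_events;
  int notify_receive_fd = -1;  // watched by the worker's event driver
  int notify_send_fd = -1;     // written by other threads to wake the worker

 public:
  ExternalQueueSketch() {
    int fds[2];
    if (::pipe(fds) == 0) {
      notify_receive_fd = fds[0];
      notify_send_fd = fds[1];
      ::fcntl(notify_receive_fd, F_SETFL, O_NONBLOCK);  // draining must not block
    }
  }
  ~ExternalQueueSketch() {
    if (notify_receive_fd >= 0) ::close(notify_receive_fd);
    if (notify_send_fd >= 0) ::close(notify_send_fd);
  }

  int receive_fd() const { return notify_receive_fd; }

  // Called from any thread: queue the callback, then wake the worker.
  void dispatch_event_external(std::function<void()> e) {
    {
      std::lock_guard<std::mutex> l(external_lock);
      external_events.push_back(std::move(e));
    }
    char c = 'c';
    ssize_t r = ::write(notify_send_fd, &c, 1);  // one byte is enough to wake epoll_wait
    (void)r;
  }

  // Called by the worker thread at the end of each loop iteration.
  int process_external() {
    char buf[256];
    while (::read(notify_receive_fd, buf, sizeof(buf)) > 0) {}  // drain wake-up bytes
    std::deque<std::function<void()>> cur;
    {
      std::lock_guard<std::mutex> l(external_lock);
      cur.swap(external_events);  // run callbacks outside the lock
    }
    for (auto& cb : cur) cb();
    return static_cast<int>(cur.size());
  }
};
```

Swapping the deque out under the lock keeps the critical section short, so a thread calling dispatch_event_external is never blocked for the duration of a callback.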

AsyncMessenger

The AsyncMessenger class manages AsyncConnection objects. It holds all connection-related state.

class AsyncMessenger : public SimplePolicyMessenger {
  // Current connections
  ceph::unordered_map<entity_addr_t, AsyncConnectionRef> conns;

  // Connections that are being accepted
  set<AsyncConnectionRef> accepting_conns;

  // Connections waiting to be deleted
  set<AsyncConnectionRef> deleted_conns;
};

Connection flows

How the server listens for and accepts connections

The following code comes from src/test/msgr/perf_msgr_server.cc and sets up the server-side msgr:

void start() {
  entity_addr_t addr;
  addr.parse(bindaddr.c_str());
  msgr->bind(addr);
  msgr->add_dispatcher_head(&dispatcher);
  msgr->start();
  msgr->wait();
}

The code above is the typical server start-up sequence:

- Bind the server address: msgr->bind(addr)

- Register the message dispatcher: dispatcher

- Start: msgr->start()

The internals work as follows.

- bind calls the Processor's bind function. For PosixStack a single Processor is enough.

int AsyncMessenger::bind(const entity_addr_t &bind_addr) ......

The body of p->bind:

int Processor::bind(const entity_addr_t &bind_addr, const set<int>& avoid_ports, entity_addr_t* bound_addr) {
  ...
  // Post an external event to the worker thread that owns this Processor;
  // its callback runs the worker's listen function
  worker->center.submit_to(worker->center.get_id(),
    [this, &listen_addr, &opts, &r]() {
      r = worker->listen(listen_addr, opts, &listen_socket);
    }, false);
  ...
}

Once the worker thread schedules and runs this external event, worker->listen creates the Processor's listen_socket.

- Add the dispatcher

void add_dispatcher_head(Dispatcher *d) {
  if (first)
    ready();
}

ready calls Processor::start.

Processor::start posts an external event to the EventCenter. The callback of that event registers a read-event watch on the listen socket with the EventCenter; the handler for that file event is listen_handler.

void Processor::start()
{
  ldout(msgr->cct, 1) << __func__ << dendl;

  // start thread
  if (listen_socket) {
    worker->center.submit_to(worker->center.get_id(),
      [this]() {
        worker->center.create_file_event(
          listen_socket.fd(), EVENT_READABLE, listen_handler);
      }, false);
  }
}

listen_handler is backed by Processor::accept, which handles incoming-connection events.

class Processor::C_processor_accept : public EventCallback {
  Processor *pro;

 public:
  explicit C_processor_accept(Processor *p): pro(p) {}
  void do_request(int id) {
    pro->accept();
  }
};

Processor::accept first obtains a worker, accepts the connection by calling accept on the listen socket, and then calls msgr->add_accept.

void Processor::accept()
{
  ......
  if (!msgr->get_stack()->support_local_listen_table())
    w = msgr->get_stack()->get_worker();
  int r = listen_socket.accept(&cli_socket, opts, &addr, w);
  if (r == 0) {
    msgr->add_accept(w, std::move(cli_socket), addr);
    continue;
  }
}

void AsyncMessenger::add_accept(Worker *w,
                                ConnectedSocket cli_socket,
                                entity_addr_t &addr)
{
  lock.Lock();
  // Create the connection; the worker that will handle all events on this
  // Connection is already assigned.
  AsyncConnectionRef conn = new AsyncConnection(cct, this, &dispatch_queue, w);
  conn->accept(std::move(cli_socket), addr);
  accepting_conns.insert(conn);
  lock.Unlock();
}

How a client actively connects

AsyncConnectionRef AsyncMessenger::create_connect(const entity_addr_t& addr, int type)
{
  // Pick a worker by load balancing
  Worker *w = stack->get_worker();

  // Create the Connection
  AsyncConnectionRef conn = new AsyncConnection(cct, this, &dispatch_queue, w);

  // Initiate the connection
  conn->connect(addr, type);

  // Add it to the conns map
  conns[addr] = conn;
  return conn;
}

In the code below, AsyncConnection::_connect sets the state to STATE_CONNECTING and posts an external event to the corresponding EventCenter. The read_handler it posts runs AsyncConnection::process().

void AsyncConnection::_connect()
{
  ldout(async_msgr->cct, 10) << __func__ << " csq=" << connect_seq << dendl;
  state = STATE_CONNECTING;

  // rescheduler connection in order to avoid lock dep
  // may called by external thread(send_message)
  center->dispatch_event_external(read_handler);
}

void AsyncConnection::process()
{
  ......
  default:
  {
    if (_process_connection() < 0)
      goto fail;
    break;
  }
}

ssize_t AsyncConnection::_process_connection()
{
  ......
  r = worker->connect(get_peer_addr(), opts, &cs);
  if (r < 0)
    goto fail;
  center->create_file_event(cs.fd(), EVENT_READABLE, read_handler);
}

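Receiving and sending messages

Receiving messages

Receiving is fairly simple, because every receive is an internal event, that is, an event triggered by epoll_wait. The corresponding callback, AsyncConnection::process(), handles the receive event.

Sending messages

Sending is more involved, because it uses both external events and internal events.

int AsyncConnection::send_message(Message *m) {
  ......
  // Append the message to the internal send queue
  out_q[m->get_priority()].emplace_back(std::move(bl), m);

  // Post an external event to the corresponding EventCenter and trigger it
  if (can_write != WriteStatus::REPLACING)
    center->dispatch_event_external(write_handler);
}

The functions involved in sending are:

void AsyncConnection::handle_write()

ssize_t AsyncConnection::write_message(Message *m, bufferlist& bl, bool more)

ssize_t AsyncConnection::_try_send(bool more)

ssize_t AsyncConnection::_try_send(bool more)
{
  ......
  if (!open_write && is_queued()) {
    center->create_file_event(cs.fd(), EVENT_WRITABLE,
                              write_handler);
    open_write = true;
  }

  if (open_write && !is_queued()) {
    center->delete_file_event(cs.fd(), EVENT_WRITABLE);
    open_write = false;
    if (state_after_send != STATE_NONE)
      center->dispatch_event_external(read_handler);
  }
}

The key points in _try_send are:

- While there is still data waiting to be sent, the socket's fd is registered for the EVENT_WRITABLE event.

- Once nothing is left to send, the EVENT_WRITABLE watch on the socket's fd is removed.

In other words, a send request posts an external event, which wakes the worker thread to handle the send. If that external event manages to send the whole message in one go, no EVENT_WRITABLE watch is needed for the fd. If part of the message is left over, an EVENT_WRITABLE watch is added on the fd so that an internal event continues the send later.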
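The on-demand registration of the write event can be shown with a toy model that leaves out the real socket. In this sketch, SendStateSketch is a hypothetical type, the socket_capacity argument stands in for however many bytes a non-blocking send would accept right now, and the two counters stand in for the create_file_event and delete_file_event calls.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>

struct SendStateSketch {
  std::string outgoing;     // bytes still waiting to be sent
  bool open_write = false;  // is EVENT_WRITABLE currently registered?
  int registrations = 0;    // stand-in for create_file_event(EVENT_WRITABLE)
  int deregistrations = 0;  // stand-in for delete_file_event(EVENT_WRITABLE)

  bool is_queued() const { return !outgoing.empty(); }

  // Try to send; socket_capacity models how much the kernel accepts right now.
  std::size_t try_send(std::size_t socket_capacity) {
    std::size_t n = std::min(socket_capacity, outgoing.size());
    outgoing.erase(0, n);

    if (!open_write && is_queued()) {  // leftover data: watch for writability
      open_write = true;
      ++registrations;
    }
    if (open_write && !is_queued()) {  // fully drained: stop watching
      open_write = false;
      ++deregistrations;
    }
    return n;
  }
};
```

A message that fits in one call never touches the write watch at all, which is the common fast path; the watch is only installed when the socket pushes back, and it is removed as soon as the queue drains so that the worker is not woken by a permanently writable socket.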

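Ceph Async model

I/O multiplexing with multiple threads

Half-sync/Half-async model

This model works as follows:

- A dedicated thread (the event-listening thread) calls epoll_wait to watch for network I/O events.

- A thread pool (the worker threads) handles the network I/O events; each thread has its own event queue.

- When the listening thread receives an I/O event, it picks a worker thread and posts the event to that thread's queue for later processing.

The key question is how to pick the thread. Usually the socket's fd is hashed onto one of the threads in the pool. What must be avoided is having several threads handle the same socket: each socket must be handled by exactly one thread.

As the figure shows, the system has one listening thread, usually the main thread's main_loop, which calls epoll_wait to obtain events. A hash of the socket's fd selects the worker thread, and the event is posted to that thread's queue. The worker threads do the actual event processing.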
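The fd-to-thread mapping described above can be sketched in a few lines. HashDispatcherSketch and IoEventSketch are hypothetical names, the hash is simply fd modulo the number of workers, and the per-queue locking that a real multi-threaded version needs is deliberately left out.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

struct IoEventSketch { int fd; int mask; };

class HashDispatcherSketch {
  std::vector<std::deque<IoEventSketch>> queues;  // one queue per worker thread

 public:
  explicit HashDispatcherSketch(std::size_t n) : queues(n) {}

  // The same fd always maps to the same worker, so one socket is never
  // handled by two threads at once.
  std::size_t pick(int fd) const {
    return static_cast<std::size_t>(fd) % queues.size();
  }

  // Called by the listening thread; returns the index of the chosen worker.
  std::size_t post(IoEventSketch ev) {
    std::size_t i = pick(ev.fd);
    queues[i].push_back(ev);  // a real version would lock queue i here
    return i;
  }

  std::size_t queued(std::size_t i) const { return queues[i].size(); }
};
```

The sketch assumes at least one worker and non-negative fds.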

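The advantage of this model is a clear structure and a fairly straightforward implementation. It has the following drawbacks, however:

- The thread that produces events (main_loop) and the threads that consume them (the workers) access the same queue, which costs lock contention and thread context switches.

- main_loop is synchronous: if one thread's queue is full, main_loop blocks, and the other threads temporarily have no events to consume.

Leader/Follower

When the Leader detects a socket event, it proceeds in one of two ways:

1) It appoints a Follower as the new Leader to watch for socket events, and becomes a Follower itself to handle the event.

2) It appoints a Follower to handle the event and remains the Leader.

Because the Leader itself watches for I/O events and handles client requests, this pattern does not need to pass extra data between threads, nor does it need to synchronize access to a request queue between threads as the half-sync/half-reactive pattern does.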

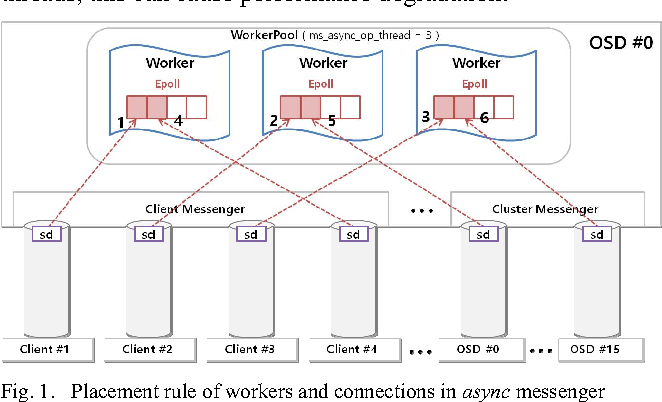

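Ceph Async model

In the Ceph Async model, each Worker corresponds to one worker thread and one EventCenter. When the AsyncConnection for a socket is created, it is bound to a Worker chosen by load balancing; from then on that Worker handles every read and write event on the AsyncConnection.

As the figure shows, the Ceph Async model has no separate main_loop thread. Every worker thread is independent and runs the following loop:

- Wait for events in epoll_wait

- Process all I/O events that were returned

- Process all time events

- Process external events

This model removes the queue contention and thread switching of the Half-sync/Half-async model. Its advantage essentially comes from relying on the operating system's event queue instead of maintaining an event queue of its own.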
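The load-balanced binding of a connection to a Worker can be sketched as picking the worker with the fewest references. This is a simplified illustration under that assumption, not the NetworkStack code: WorkerSketch and StackSketch are hypothetical types, and no locking is shown around the selection.

```cpp
#include <atomic>
#include <cassert>
#include <memory>
#include <vector>

struct WorkerSketch {
  int id;
  std::atomic<unsigned> references{0};  // number of connections bound to this worker
  explicit WorkerSketch(int i) : id(i) {}
};

class StackSketch {
  std::vector<std::unique_ptr<WorkerSketch>> workers;

 public:
  // Assumes n >= 1.
  explicit StackSketch(int n) {
    for (int i = 0; i < n; ++i)
      workers.emplace_back(new WorkerSketch(i));
  }

  // Return the least-loaded worker and count the new connection against it.
  WorkerSketch* get_worker() {
    WorkerSketch* best = workers.front().get();
    for (auto& w : workers) {
      if (w->references.load() < best->references.load())
        best = w.get();
    }
    ++best->references;
    return best;
  }
};
```

Because a connection stays on the worker it was given, the reference count only changes when connections are created or released, so the selection cost is paid once per connection rather than once per event.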

Overall structure of Ceph async messenger


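The following is excerpted from: [Partial repost] OSD source code walkthrough 1: communication flow - yimuxi - cnblogs (博客园)

[Partially reposted from https://hustcat.github.io/ceph-internal-network/ ]

Ceph has a long history, and its original network layer (SimpleMessenger, described in the rest of this article) did not adopt the event-driven (epoll) model that is common today. Instead it used a multi-threaded model similar to MySQL's: each connection (socket) has a reader thread that keeps reading from the socket and a writer thread that writes data to the socket. The multi-threaded approach is simple to implement, but its performance under high concurrency is poor.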

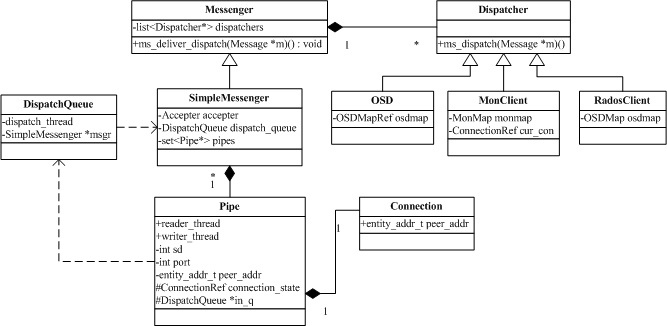

Messenger is the core data structure of the network module and is responsible for receiving and sending messages. An OSD has two main Messengers: ms_public handles messages exchanged with clients, and ms_cluster handles messages exchanged with other OSDs.

Initialization

Initialization happens in ceph-osd.cc:

// Create a Messenger object. Messenger is an abstract class and cannot be
// instantiated directly, so it provides a ::create method that builds a subclass instance.
Messenger *ms_public = Messenger::create(g_ceph_context, public_msg_type,
                                         entity_name_t::OSD(whoami), "client",
                                         getpid(),
                                         Messenger::HAS_HEAVY_TRAFFIC |
                                         Messenger::HAS_MANY_CONNECTIONS);  // handles client messages

// ?? A SimpleMessenger instance is selected. SimpleMessenger has a member
// called Accepter, which is allocated and initialized.
Messenger *ms_cluster = Messenger::create(g_ceph_context, cluster_msg_type,
                                          entity_name_t::OSD(whoami), "cluster",
                                          getpid(),
                                          Messenger::HAS_HEAVY_TRAFFIC |
                                          Messenger::HAS_MANY_CONNECTIONS);

// The next few are used for heartbeat checks
Messenger *ms_hb_back_client = Messenger::create(·····)
Messenger *ms_hb_front_client = Messenger::create(·····)
Messenger *ms_hb_back_server = Messenger::create(······)
Messenger *ms_hb_front_server = Messenger::create(·····)
Messenger *ms_objecter = Messenger::create(······)

··········

// Bind to a fixed IP.
// The IP ends up bound in the Accepter. Accepter->bind creates a socket for the IP
// and saves it as listen_sd. Accepter->start() then starts the Accepter's listening
// thread; what that thread does is in Accepter->entry().
/*
  messenger::bindv() -- messenger::bind()
  --simpleMessenger::bind()
  ---- accepter.bind()
  ----create socket---- socket bind() --- socket listen()
*/
if (ms_public->bindv(public_addrs) < 0)
  forker.exit(1);
if (ms_cluster->bindv(cluster_addrs) < 0)
  forker.exit(1);

···········

// Create the Dispatcher subclass object
osd = new OSD(g_ceph_context,
              store,
              whoami,
              ms_cluster,
              ms_public,
              ms_hb_front_client,
              ms_hb_back_client,
              ms_hb_front_server,
              ms_hb_back_server,
              ms_objecter,
              &mc,
              data_path,
              journal_path);

·········

// Start the Reaper thread
ms_public->start();
ms_hb_front_client->start();
ms_hb_back_client->start();
ms_hb_front_server->start();
ms_hb_back_server->start();
ms_cluster->start();
ms_objecter->start();

// Initialize the OSD module
/**
  a). Initialize the OSD module
  b). Register itself in SimpleMessenger::dispatchers through
      SimpleMessenger::add_dispatcher_head(). The flow is:
      Messenger::add_dispatcher_head()
      --> ready()
      --> dispatch_queue.start() (new DispatchQueue thread)
      --> Accepter::start() (starts the Accepter thread)
      --> accept
      --> SimpleMessenger::add_accept_pipe
      --> Pipe::start_reader
      --> Pipe::reader()

  In ready():
  1) The DispatchQueue thread is started; it buffers the messages that have been received.
  2) The Accepter thread is started and begins listening for new connection requests.
*/
// start osd
err = osd->init();

············

// Enter the main loop and wait for exit
ms_public->wait();  // SimpleMessenger::wait()
ms_hb_front_client->wait();
ms_hb_back_client->wait();
ms_hb_front_server->wait();
ms_hb_back_server->wait();
ms_cluster->wait();
ms_objecter->wait();

Message handling

Relevant data structures

The core of the network module is SimpleMessenger:

(1) It contains an Accepter object, which creates a dedicated thread that accepts new connections (Pipes).

void *Accepter::entry()
{
  ...
  int sd = ::accept(listen_sd, (sockaddr*)&addr.ss_addr(), &slen);
  if (sd >= 0) {
    errors = 0;
    ldout(msgr->cct,10) << "accepted incoming on sd " << sd << dendl;
    msgr->add_accept_pipe(sd);
  ...

// Create a new Pipe
Pipe *SimpleMessenger::add_accept_pipe(int sd)
{
  lock.Lock();
  Pipe *p = new Pipe(this, Pipe::STATE_ACCEPTING, NULL);
  p->sd = sd;
  p->pipe_lock.Lock();
  p->start_reader();
  p->pipe_lock.Unlock();
  pipes.insert(p);
  accepting_pipes.insert(p);
  lock.Unlock();
  return p;
}

(2) It contains all connection objects (Pipes). Each Pipe has a reader thread and a writer thread. The reader thread reads data from the socket and puts the resulting messages on the DispatchQueue. The writer thread takes Messages from the send queue and writes them to the socket.

class Pipe : public RefCountedObject {
  /**
   * The Reader thread handles all reads off the socket -- not just
   * Messages, but also acks and other protocol bits (excepting startup,
   * when the Writer does a couple of reads).
   * All the work is implemented in Pipe itself, of course.
   */
  class Reader : public Thread {
    Pipe *pipe;
   public:
    Reader(Pipe *p) : pipe(p) {}
    void *entry() { pipe->reader(); return 0; }
  } reader_thread;  /// reader thread
  friend class Reader;

  /**
   * The Writer thread handles all writes to the socket (after startup).
   * All the work is implemented in Pipe itself, of course.
   */
  class Writer : public Thread {
    Pipe *pipe;
   public:
    Writer(Pipe *p) : pipe(p) {}
    void *entry() { pipe->writer(); return 0; }
  } writer_thread;  /// writer thread
  friend class Writer;

  ...

  /// send queue
  map<int, list<Message*> > out_q;  // priority queue for outbound msgs
  DispatchQueue *in_q;  /// receive queue

(3) It contains a DispatchQueue, which has a dedicated dispatch thread (DispatchThread) that hands messages to the Dispatcher (the OSD) for the actual processing.
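The role of the dispatch queue can be illustrated with a heavily simplified, single-threaded sketch. It is not Ceph's DispatchQueue: DispatchQueueSketch and MessageSketch are hypothetical types, strict highest-priority-first ordering is an assumption of the sketch, and drain() is called directly where the real design runs a dedicated dispatch thread woken through a condition variable.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <map>
#include <string>
#include <utility>
#include <vector>

struct MessageSketch {
  int priority;
  std::string payload;
};

class DispatchQueueSketch {
  // Highest priority first; FIFO within one priority level.
  std::map<int, std::deque<MessageSketch>, std::greater<int>> mqueue;
  // Each dispatcher returns true once it has handled the message.
  std::vector<std::function<bool(const MessageSketch&)>> dispatchers;

 public:
  void add_dispatcher(std::function<bool(const MessageSketch&)> d) {
    dispatchers.push_back(std::move(d));
  }

  // Called by reader threads in the real design.
  void enqueue(MessageSketch m) {
    int p = m.priority;
    mqueue[p].push_back(std::move(m));
  }

  // Body of the dispatch thread's loop: deliver everything that is queued.
  int drain() {
    int delivered = 0;
    while (!mqueue.empty()) {
      auto it = mqueue.begin();
      MessageSketch m = std::move(it->second.front());
      it->second.pop_front();
      if (it->second.empty()) mqueue.erase(it);
      for (auto& d : dispatchers) {
        if (d(m)) break;  // stop at the first dispatcher that accepts it
      }
      ++delivered;
    }
    return delivered;
  }
};
```

Decoupling the readers from the dispatcher through a queue means a slow handler delays other messages in the queue but never blocks a reader thread in the middle of reading from its socket.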

Receiving a connection request

Listening for and handling requests is implemented by SimpleMessenger::ready --> Accepter::entry

void SimpleMessenger::ready()
{
  ldout(cct,10) << "ready " << get_myaddr() << dendl;

  // Start the DispatchQueue thread
  dispatch_queue.start();

  lock.Lock();
  if (did_bind)
    // Start the Accepter thread to listen for client connections; see Accepter::entry below
    accepter.start();
  lock.Unlock();
}

/*
  poll.h

  struct pollfd {
    int fd;
    short events;
    short revents;
  }
  In this structure fd is the file descriptor, events is the set of events to watch for,
  and revents is the set of events reported back after the check.
  When the state of a file descriptor changes, its revents value is non-zero.
*/

// Listen
void *Accepter::entry()
{
  ldout(msgr->cct,1) << __func__ << " start" << dendl;

  int errors = 0;

  struct pollfd pfd[2];
  memset(pfd, 0, sizeof(pfd));
  // listen_sd is the socket created and bound in Accepter::bind()
  pfd[0].fd = listen_sd;  // the file descriptor to watch
  pfd[0].events = POLLIN | POLLERR | POLLNVAL | POLLHUP;  // the events of interest
  pfd[1].fd = shutdown_rd_fd;
  pfd[1].events = POLLIN | POLLERR | POLLNVAL | POLLHUP;
  while (!done) {  // start the listening loop
    ldout(msgr->cct,20) << __func__ << " calling poll for sd:" << listen_sd << dendl;
    int r = poll(pfd, 2, -1);
    if (r < 0) {
      if (errno == EINTR) {
        continue;
      }
      ldout(msgr->cct,1) << __func__ << " poll got error"
                         << " errno " << errno << " " << cpp_strerror(errno) << dendl;
      ceph_abort();
    }
    ldout(msgr->cct,10) << __func__ << " poll returned oke: " << r << dendl;
    ldout(msgr->cct,20) << __func__ << " pfd.revents[0]=" << pfd[0].revents << dendl;
    ldout(msgr->cct,20) << __func__ << " pfd.revents[1]=" << pfd[1].revents << dendl;

    if (pfd[0].revents & (POLLERR | POLLNVAL | POLLHUP)) {
      ldout(msgr->cct,1) << __func__ << " poll got errors in revents "
                         << pfd[0].revents << dendl;
      ceph_abort();
    }
    if (pfd[1].revents & (POLLIN | POLLERR | POLLNVAL | POLLHUP)) {
      // We got "signaled" to exit the poll
      // clean the selfpipe
      char ch;
      if (::read(shutdown_rd_fd, &ch, sizeof(ch)) == -1) {
        if (errno != EAGAIN)
          ldout(msgr->cct,1) << __func__ << " Cannot read selfpipe: "
                             << " errno " << errno << " " << cpp_strerror(errno) << dendl;
      }
      break;
    }
    if (done) break;

    // accept
    sockaddr_storage ss;
    socklen_t slen = sizeof(ss);
    int sd = accept_cloexec(listen_sd, (sockaddr*)&ss, &slen);
    if (sd >= 0) {
      errors = 0;
      ldout(msgr->cct,10) << __func__ << " incoming on sd " << sd << dendl;

      // The client connected. add_accept_pipe (in SimpleMessenger.cc) creates a Pipe,
      // tells it to handle socket sd, and starts the Pipe's reader thread.
      msgr->add_accept_pipe(sd);
    } else {
      int e = errno;
      ldout(msgr->cct,0) << __func__ << " no incoming connection? sd = " << sd
                         << " errno " << e << " " << cpp_strerror(e) << dendl;
      if (++errors > msgr->cct->_conf->ms_max_accept_failures) {
        lderr(msgr->cct) << "accetper has encoutered enough errors, just do ceph_abort()." << dendl;
        ceph_abort();
      }
    }
  }

  ldout(msgr->cct,20) << __func__ << " closing" << dendl;
  // socket is closed right after the thread has joined.
  // closing it here might race
  if (shutdown_rd_fd >= 0) {
    ::close(shutdown_rd_fd);
    shutdown_rd_fd = -1;
  }

  ldout(msgr->cct,10) << __func__ << " stopping" << dendl;
  return 0;
}
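The core of Accepter::entry is a poll over two descriptors: the listening socket and the read end of a self-pipe that lets another thread interrupt the blocking poll at shutdown. The sketch below isolates that pattern. The function wait_for_accept_or_shutdown and the WakeReason enum are hypothetical, and unlike the original loop it returns on EINTR and on errors instead of retrying or aborting.

```cpp
#include <cassert>
#include <poll.h>
#include <unistd.h>

enum class WakeReason { Listen, Shutdown, Timeout, Error };

// Block until the listening fd is readable (a connection is pending),
// the shutdown pipe is signaled, or the timeout expires.
WakeReason wait_for_accept_or_shutdown(int listen_fd, int shutdown_rd_fd,
                                       int timeout_ms) {
  struct pollfd pfd[2] = {};
  pfd[0].fd = listen_fd;
  pfd[0].events = POLLIN;
  pfd[1].fd = shutdown_rd_fd;
  pfd[1].events = POLLIN;

  int r = ::poll(pfd, 2, timeout_ms);
  if (r < 0) return WakeReason::Error;  // includes EINTR in this sketch
  if (r == 0) return WakeReason::Timeout;

  // Check the shutdown pipe first so that a stop request wins.
  if (pfd[1].revents & (POLLIN | POLLERR | POLLNVAL | POLLHUP)) {
    char ch;
    ssize_t n = ::read(shutdown_rd_fd, &ch, sizeof(ch));  // clean the self-pipe
    (void)n;
    return WakeReason::Shutdown;
  }
  if (pfd[0].revents & (POLLERR | POLLNVAL | POLLHUP)) return WakeReason::Error;
  return WakeReason::Listen;
}
```

A caller would loop on this function, call accept when it returns WakeReason::Listen, and leave the loop on WakeReason::Shutdown. The stopping thread only has to write one byte to the other end of the pipe.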

A Pipe is then created and message processing begins:

Pipe *SimpleMessenger::add_accept_pipe(int sd)
{
  lock.Lock();
  Pipe *p = new Pipe(this, Pipe::STATE_ACCEPTING, NULL);
  p->sd = sd;
  p->pipe_lock.Lock();
  /*
    Call Pipe::start_reader() to start reading messages; this creates a reader thread.
    Pipe::start_reader() --> Pipe::reader
  */
  p->start_reader();
  p->pipe_lock.Unlock();
  pipes.insert(p);
  accepting_pipes.insert(p);
  lock.Unlock();
  return p;
}

Creating the message reading and processing threads

Message handling starts at Pipe::start_reader() --> Pipe::reader(), at which point execution is already in the Reader thread. It first calls accept() to do some simple processing and then creates the Writer thread, which waits to send replies. It then reads messages; once a message has been read in full, it is wrapped in a Message and handed to dispatch_queue.

dispatch_queue finds the registered dispatcher and passes the message to it. When processing is done, the Writer thread is woken to send the reply.

/* read msgs from socket.
 * also, server.
 */
void Pipe::reader()
{
  pipe_lock.Lock();

  /*
    Pipe::accept() calls Pipe::start_writer() to create the writer thread. Once running,
    the writer thread waits in cond.Wait() until it is woken; the wake-up paths are
    described below. The creation of the Writer thread is covered in the analysis of
    Pipe::accept() further down.
  */
  if (state == STATE_ACCEPTING) {
    accept();
    ceph_assert(pipe_lock.is_locked());
  }

  // loop.
  while (state != STATE_CLOSED &&
         state != STATE_CONNECTING) {
    ceph_assert(pipe_lock.is_locked());

    // sleep if (re)connecting
    if (state == STATE_STANDBY) {
      ldout(msgr->cct,20) << "reader sleeping during reconnect|standby" << dendl;
      cond.Wait(pipe_lock);
      continue;
    }

    // get a reference to the AuthSessionHandler while we have the pipe_lock
    std::shared_ptr<AuthSessionHandler> auth_handler = session_security;

    pipe_lock.Unlock();

    char tag = -1;
    ldout(msgr->cct,20) << "reader reading tag..." << dendl;
    // Read the message tag; some tags wake the writer thread right away
    if (tcp_read((char*)&tag, 1) < 0) {  // read failed
      pipe_lock.Lock();
      ldout(msgr->cct,2) << "reader couldn't read tag, " << cpp_strerror(errno) << dendl;
      fault(true);
      continue;
    }

    if (tag == CEPH_MSGR_TAG_KEEPALIVE) {  // keepalive
      ldout(msgr->cct,2) << "reader got KEEPALIVE" << dendl;
      pipe_lock.Lock();
      connection_state->set_last_keepalive(ceph_clock_now());
      continue;
    }
    if (tag == CEPH_MSGR_TAG_KEEPALIVE2) {  // keepalive
      ldout(msgr->cct,30) << "reader got KEEPALIVE2 tag ..." << dendl;
      ceph_timespec t;
      int rc = tcp_read((char*)&t, sizeof(t));
      pipe_lock.Lock();
      if (rc < 0) {
        ldout(msgr->cct,2) << "reader couldn't read KEEPALIVE2 stamp "
                           << cpp_strerror(errno) << dendl;
        fault(true);
      } else {
        send_keepalive_ack = true;
        keepalive_ack_stamp = utime_t(t);
        ldout(msgr->cct,2) << "reader got KEEPALIVE2 " << keepalive_ack_stamp
                           << dendl;
        connection_state->set_last_keepalive(ceph_clock_now());
        cond.Signal();  // wake the writer thread directly
      }
      continue;
    }
    if (tag == CEPH_MSGR_TAG_KEEPALIVE2_ACK) {
      ldout(msgr->cct,2) << "reader got KEEPALIVE_ACK" << dendl;
      struct ceph_timespec t;
      int rc = tcp_read((char*)&t, sizeof(t));
      pipe_lock.Lock();
      if (rc < 0) {
        ldout(msgr->cct,2) << "reader couldn't read KEEPALIVE2 stamp " << cpp_strerror(errno) << dendl;
        fault(true);
      } else {
        connection_state->set_last_keepalive_ack(utime_t(t));
      }
      continue;
    }

    // open ...
    if (tag == CEPH_MSGR_TAG_ACK) {
      ldout(msgr->cct,20) << "reader got ACK" << dendl;
      ceph_le64 seq;
      int rc = tcp_read((char*)&seq, sizeof(seq));
      pipe_lock.Lock();
      if (rc < 0) {
        ldout(msgr->cct,2) << "reader couldn't read ack seq, " << cpp_strerror(errno) << dendl;
        fault(true);
      } else if (state != STATE_CLOSED) {
        handle_ack(seq);
      }
      continue;
    }

    else if (tag == CEPH_MSGR_TAG_MSG) {
      ldout(msgr->cct,20) << "reader got MSG" << dendl;
      // Received a MSG
      Message *m = 0;
      // Read the message into a newly allocated Message object
      int r = read_message(&m, auth_handler.get());

      pipe_lock.Lock();

      if (!m) {
        if (r < 0)
          fault(true);
        continue;
      }

      m->trace.event("pipe read message");

      if (state == STATE_CLOSED ||
          state == STATE_CONNECTING) {
        in_q->dispatch_throttle_release(m->get_dispatch_throttle_size());
        m->put();
        continue;
      }

      // check received seq#. if it is old, drop the message.
      // note that incoming messages may skip ahead. this is convenient for the client
      // side queueing because messages can't be renumbered, but the (kernel) client will
      // occasionally pull a message out of the sent queue to send elsewhere. in that case
      // it doesn't matter if we "got" it or not.
      if (m->get_seq() <= in_seq) {
        ldout(msgr->cct,0) << "reader got old message "
                           << m->get_seq() << " <= " << in_seq << " " << m << " " << *m
                           << ", discarding" << dendl;
        in_q->dispatch_throttle_release(m->get_dispatch_throttle_size());
        m->put();
        if (connection_state->has_feature(CEPH_FEATURE_RECONNECT_SEQ) &&
            msgr->cct->_conf->ms_die_on_old_message)
          ceph_abort_msg("old msgs despite reconnect_seq feature");
        continue;
      }
      if (m->get_seq() > in_seq + 1) {
        ldout(msgr->cct,0) << "reader missed message? skipped from seq "
                           << in_seq << " to " << m->get_seq() << dendl;
        if (msgr->cct->_conf->ms_die_on_skipped_message)
          ceph_abort_msg("skipped incoming seq");
      }

      m->set_connection(connection_state.get());

      // note last received message.
      in_seq = m->get_seq();

      // Wake the writer thread first so that it ACKs this message
      cond.Signal();  // wake up writer, to ack this

      ldout(msgr->cct,10) << "reader got message "
                          << m->get_seq() << " " << m << " " << *m
                          << dendl;
      in_q->fast_preprocess(m);

      /*
        If this request may be handled with a delay, the msg is put on
        Pipe::DelayedDelivery::delay_queue and processed later by the relevant module.
        Note that received messages generally fall into three categories:
        1. Handled directly in the reader thread, such as CEPH_MSGR_TAG_ACK above
        2. Handled normally: the message is put on the DispatchQueue, processed by the
           registered back-end dispatcher, and then the writer thread is woken to send
        3. Delayed delivery: the messages below, where a timer decides when they are delivered
      */
      if (delay_thread) {
        utime_t release;
        if (rand() % 10000 < msgr->cct->_conf->ms_inject_delay_probability * 10000.0) {
          release = m->get_recv_stamp();
          release += msgr->cct->_conf->ms_inject_delay_max * (double)(rand() % 10000) / 10000.0;
          lsubdout(msgr->cct, ms, 1) << "queue_received will delay until " << release << " on " << m << " " << *m << dendl;
        }
        delay_thread->queue(release, m);
      } else {

        /*
          Messages handled normally:
          If can_fast_dispatch(m) is true, the message is dispatched directly through fast_dispatch.

          Otherwise it goes into Pipe::DispatchQueue *in_q. The full message flow is:
          DispatchQueue::enqueue()
          --> mqueue.enqueue() -> cond.Signal() (wakes the DispatchQueue::dispatch_thread thread)
          --> DispatchQueue::dispatch_thread::entry() the thread wakes up
          --> Messenger::ms_deliver_XXX
          --> the concrete Dispatcher instance, e.g. Monitor::ms_dispatch()
          --> Messenger::send_message()
          --> SimpleMessenger::submit_message()
          --> Pipe::_send()
          --> Pipe::out_q[].push_back(m) -> cond.Signal wakes the writer thread
          --> ::sendmsg()  // send to the socket
        */
        if (in_q->can_fast_dispatch(m)) {
          reader_dispatching = true;
          pipe_lock.Unlock();
          in_q->fast_dispatch(m);
          pipe_lock.Lock();
          reader_dispatching = false;
          if (state == STATE_CLOSED ||
              notify_on_dispatch_done) {  // there might be somebody waiting
            notify_on_dispatch_done = false;
            cond.Signal();
          }
        } else {
          in_q->enqueue(m, m->get_priority(), conn_id);  // hand over to dispatch_queue
        }
      }
    }

    else if (tag == CEPH_MSGR_TAG_CLOSE) {
      ldout(msgr->cct,20) << "reader got CLOSE" << dendl;
      pipe_lock.Lock();
      if (state == STATE_CLOSING) {
        state = STATE_CLOSED;
        state_closed = true;
      } else {
        state = STATE_CLOSING;
      }
      cond.Signal();
      break;
    }
    else {
      ldout(msgr->cct,0) << "reader bad tag " << (int)tag << dendl;
      pipe_lock.Lock();
      fault(true);
    }
  }

  // reap?
  reader_running = false;
  reader_needs_join = true;
  unlock_maybe_reap();
  ldout(msgr->cct,10) << "reader done" << dendl;
}
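The sequence-number check in the middle of Pipe::reader() is easy to miss in the full listing, so here it is isolated as a small sketch. SeqTrackerSketch and SeqVerdict are hypothetical names; the configuration switches that abort on old or skipped messages are left out.

```cpp
#include <cassert>
#include <cstdint>

enum class SeqVerdict { Drop, Accept, AcceptWithGap };

struct SeqTrackerSketch {
  uint64_t in_seq = 0;  // sequence number of the last message accepted

  SeqVerdict on_message(uint64_t seq) {
    if (seq <= in_seq)
      return SeqVerdict::Drop;  // old or duplicate message: discard it
    // Incoming messages may skip ahead; that is logged but still accepted.
    SeqVerdict v = (seq > in_seq + 1) ? SeqVerdict::AcceptWithGap
                                      : SeqVerdict::Accept;
    in_seq = seq;  // note last received message
    return v;
  }
};
```

After a message is accepted, in_seq is ahead of the last acknowledged value, which is the condition the writer thread checks (in_seq > in_seq_acked) before sending an ACK.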

Pipe::accept() performs some simple protocol checks and authentication, and then creates the Writer thread: Pipe::start_writer() --> Pipe::Writer

// The Ceph 14 version contains much more than is shown here
int Pipe::accept()
{
  ldout(msgr->cct,10) << "accept" << dendl;
  // Check that the local and peer protocol versions and related information match
  // ......

  while (1) {
    // Protocol checks and related operations
    // ......

    /**
     * Notify the registered dispatcher that a new accept request has arrived. If the
     * Dispatcher subclass implements Dispatcher::ms_handle_accept(), that method is called.
     */
    msgr->dispatch_queue.queue_accept(connection_state.get());

    // Send the reply and the authentication-related messages
    // ......

    if (state != STATE_CLOSED) {
      /**
       * Once the protocol checks and authentication above are done, create the
       * Writer thread, which waits for the dispatcher to finish processing a
       * message and then sends.
       */
      start_writer();
    }
    ldout(msgr->cct,20) << "accept done" << dendl;

    /**
     * If messages are delivered with a delay and the corresponding thread
     * has not been started yet, start it:
     * Pipe::maybe_start_delay_thread()
     * --> Pipe::DelayedDelivery::entry()
     */
    maybe_start_delay_thread();
    return 0;  // success.
  }
}

随后writer线程等待被唤醒发送回复消息

1 /* write msgs to socket.

2 * also, client.

3 */

4 void Pipe::writer()

5 {

6 pipe_lock.Lock();

7 while (state != STATE_CLOSED) {// && state != STATE_WAIT) {

8 ldout(msgr->cct,10) << "writer: state = " << get_state_name()

9 << " policy.server=" << policy.server << dendl;

10

11 // standby?

12 if (is_queued() && state == STATE_STANDBY && !policy.server)

13 state = STATE_CONNECTING;

14

15 // connect?

16 if (state == STATE_CONNECTING) {

17 ceph_assert(!policy.server);

18 connect();

19 continue;

20 }

21

22 if (state == STATE_CLOSING) {

23 // write close tag

24 ldout(msgr->cct,20) << "writer writing CLOSE tag" << dendl;

25 char tag = CEPH_MSGR_TAG_CLOSE;

26 state = STATE_CLOSED;

27 state_closed = true;

28 pipe_lock.Unlock();

29 if (sd >= 0) {

30 // we can ignore return value, actually; we don't care if this succeeds.

31 int r = ::write(sd, &tag, 1);

32 (void)r;

33 }

34 pipe_lock.Lock();

35 continue;

36 }

37

38 if (state != STATE_CONNECTING && state != STATE_WAIT && state != STATE_STANDBY &&

39 (is_queued() || in_seq > in_seq_acked)) {

40

41

42 // 对 keepalive, keepalive2, ack 包的处理

43 // keepalive?

44 if (send_keepalive) {

45 int rc;

46 if (connection_state->has_feature(CEPH_FEATURE_MSGR_KEEPALIVE2)) {

47 pipe_lock.Unlock();

48 rc = write_keepalive2(CEPH_MSGR_TAG_KEEPALIVE2,

49 ceph_clock_now());

50 } else {

51 pipe_lock.Unlock();

52 rc = write_keepalive();

53 }

54 pipe_lock.Lock();

55 if (rc < 0) {

56 ldout(msgr->cct,2) << "writer couldn't write keepalive[2], "

57 << cpp_strerror(errno) << dendl;

58 fault();

59 continue;

60 }

61 send_keepalive = false;

62 }

63 if (send_keepalive_ack) {

64 utime_t t = keepalive_ack_stamp;

65 pipe_lock.Unlock();

66 int rc = write_keepalive2(CEPH_MSGR_TAG_KEEPALIVE2_ACK, t);

67 pipe_lock.Lock();

68 if (rc < 0) {

69 ldout(msgr->cct,2) << "writer couldn't write keepalive_ack, " << cpp_strerror(errno) << dendl;

70 fault();

71 continue;

72 }

73 send_keepalive_ack = false;

74 }

75

76 // send ack?

77 if (in_seq > in_seq_acked) {

78 uint64_t send_seq = in_seq;

79 pipe_lock.Unlock();

80 int rc = write_ack(send_seq);

81 pipe_lock.Lock();

82 if (rc < 0) {

83 ldout(msgr->cct,2) << "writer couldn't write ack, " << cpp_strerror(errno) << dendl;

84 fault();

85 continue;

86 }

87 in_seq_acked = send_seq;

88 }

89

      // take the next message to send from Pipe::out_q
      // grab outgoing message
      Message *m = _get_next_outgoing();
      if (m) {
        m->set_seq(++out_seq);
        if (!policy.lossy) {
          // put on sent list
          sent.push_back(m);
          m->get();
        }

        // associate message with Connection (for benefit of encode_payload)
        m->set_connection(connection_state.get());

        uint64_t features = connection_state->get_features();

        if (m->empty_payload())
          ldout(msgr->cct,20) << "writer encoding " << m->get_seq() << " features " << features
                              << " " << m << " " << *m << dendl;
        else
          ldout(msgr->cct,20) << "writer half-reencoding " << m->get_seq() << " features " << features
                              << " " << m << " " << *m << dendl;

        // serialize the message (this is encoding, not encryption); the CRCs are computed here
        // encode and copy out of *m
        m->encode(features, msgr->crcflags);

        // header and footer
        // prepare everything
        const ceph_msg_header& header = m->get_header();
        const ceph_msg_footer& footer = m->get_footer();

        // Now that we have all the crcs calculated, handle the
        // digital signature for the message, if the pipe has session
        // security set up. Some session security options do not
        // actually calculate and check the signature, but they should
        // handle the calls to sign_message and check_signature. PLR
        if (session_security.get() == NULL) {
          ldout(msgr->cct, 20) << "writer no session security" << dendl;
        } else {
          if (session_security->sign_message(m)) {
            ldout(msgr->cct, 20) << "writer failed to sign seq # " << header.seq
                                 << "): sig = " << footer.sig << dendl;
          } else {
            ldout(msgr->cct, 20) << "writer signed seq # " << header.seq
                                 << "): sig = " << footer.sig << dendl;
          }
        }
        // collect the binary data to send: payload, middle and data
        bufferlist blist = m->get_payload();
        blist.append(m->get_middle());
        blist.append(m->get_data());

        pipe_lock.Unlock();

        m->trace.event("pipe writing message");
        // send the message: Pipe::write_message() --> Pipe::do_sendmsg() --> ::sendmsg()
        ldout(msgr->cct,20) << "writer sending " << m->get_seq() << " " << m << dendl;
        int rc = write_message(header, footer, blist);

        pipe_lock.Lock();
        if (rc < 0) {
          ldout(msgr->cct,1) << "writer error sending " << m << ", "
                             << cpp_strerror(errno) << dendl;
          fault();
        }
        m->put();
      }
      continue;
    }

    // sleep until woken by the Reader or the Dispatcher
    // wait
    ldout(msgr->cct,20) << "writer sleeping" << dendl;
    cond.Wait(pipe_lock);
  }

  ldout(msgr->cct,20) << "writer finishing" << dendl;

  // reap?
  writer_running = false;
  unlock_maybe_reap();
  ldout(msgr->cct,10) << "writer done" << dendl;
}

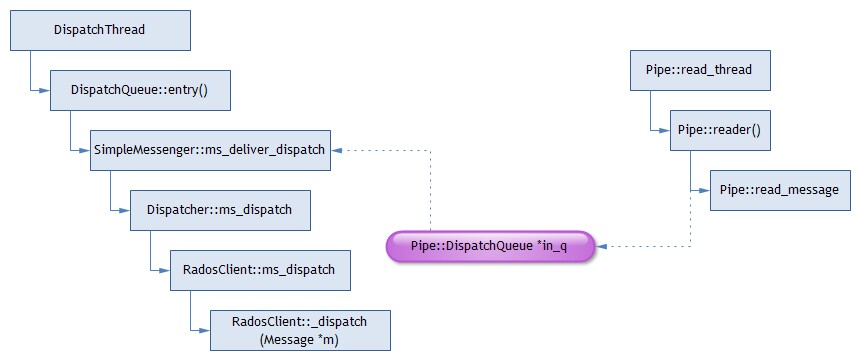
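The writer loop above repeats one pattern: inspect shared state under pipe_lock, drop the lock for the actual socket write, then take it again. The sketch below reduces that to a single-threaded pass so it can be run in isolation. MiniPipe, MiniMsg, writer_pass() and the wire_ vector are hypothetical names invented for this illustration. The ack handling is an assumption as well, since this section does not show how the sent list is trimmed.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>
#include <string>
#include <vector>

// Hypothetical, simplified message: only a payload and a sequence number.
struct MiniMsg {
  std::string payload;
  uint64_t seq;
};

// Hypothetical stand-in for Pipe, reduced to the state the writer loop touches.
class MiniPipe {
 public:
  explicit MiniPipe(bool lossy) : lossy_(lossy), out_seq_(0) {}

  // Queue a message for sending (the real code would also signal the writer).
  void queue(const std::string& payload) {
    std::lock_guard<std::mutex> l(lock_);
    out_q_.push_back(MiniMsg{payload, 0});
  }

  // One pass of the writer loop: send everything queued, then return at the
  // point where the real loop would block in cond.Wait(pipe_lock).
  void writer_pass() {
    std::unique_lock<std::mutex> l(lock_);
    while (!out_q_.empty()) {
      MiniMsg m = out_q_.front();
      out_q_.pop_front();
      m.seq = ++out_seq_;               // cf. m->set_seq(++out_seq)
      if (!lossy_) sent_.push_back(m);  // lossless: remember until acked
      l.unlock();                       // never hold the lock across I/O
      wire_.push_back(m);               // stand-in for write_message()
      l.lock();
    }
  }

  // Assumption: an ack for `seq` releases every sent message up to `seq`.
  void handle_ack(uint64_t seq) {
    std::lock_guard<std::mutex> l(lock_);
    while (!sent_.empty() && sent_.front().seq <= seq) sent_.pop_front();
  }

  std::size_t unacked() {
    std::lock_guard<std::mutex> l(lock_);
    return sent_.size();
  }

  const std::vector<MiniMsg>& wire() const { return wire_; }

 private:
  bool lossy_;
  uint64_t out_seq_;
  std::mutex lock_;
  std::deque<MiniMsg> out_q_;
  std::deque<MiniMsg> sent_;
  std::vector<MiniMsg> wire_;  // only touched by the writer pass
};

// Usage: three messages on a lossless pipe, then an ack covering the first two.
void writer_demo() {
  MiniPipe p(/*lossy=*/false);
  p.queue("a");
  p.queue("b");
  p.queue("c");
  p.writer_pass();
  assert(p.wire().size() == 3 && p.wire()[2].seq == 3);
  p.handle_ack(2);
  assert(p.unacked() == 1);
}
```

Dropping the lock around the write is what allows other threads to keep queueing messages while a slow socket write is in progress. A lossy pipe skips the sent list entirely, so nothing is kept for resending.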

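Message handling

The reader hands each received message to the dispatch_queue. The flow is as follows:

Messages that pass the ms_can_fast_dispatch() check are handled immediately through ms_fast_dispatch(); all others are enqueued through in_q.

Pipe::reader() --> Pipe::in_q->enqueue()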

void DispatchQueue::enqueue(const Message::ref& m, int priority, uint64_t id)
{
  Mutex::Locker l(lock);
  if (stop) {
    return;
  }
  ldout(cct,20) << "queue " << m << " prio " << priority << dendl;
  add_arrival(m);
  // put the message into DispatchQueue::mqueue according to its priority
  if (priority >= CEPH_MSG_PRIO_LOW) {
    mqueue.enqueue_strict(id, priority, QueueItem(m));
  } else {
    mqueue.enqueue(id, priority, m->get_cost(), QueueItem(m));
  }

  // wake up DispatchQueue::entry() to process the message
  cond.Signal();
}

/*
 * This function delivers incoming messages to the Messenger.
 * Connections with messages are kept in queues; when beginning a message
 * delivery the highest-priority queue is selected, the connection from the
 * front of the queue is removed, and its message read. If the connection
 * has remaining messages at that priority level, it is re-placed on to the
 * end of the queue. If the queue is empty; it's removed.
 * The message is then delivered and the process starts again.
 */
void DispatchQueue::entry()
{
  lock.Lock();
  while (true) {
    while (!mqueue.empty()) {
      QueueItem qitem = mqueue.dequeue();
      if (!qitem.is_code())
        remove_arrival(qitem.get_message());
      lock.Unlock();

      if (qitem.is_code()) {
        if (cct->_conf->ms_inject_internal_delays &&
            cct->_conf->ms_inject_delay_probability &&
            (rand() % 10000)/10000.0 < cct->_conf->ms_inject_delay_probability) {
          utime_t t;
          t.set_from_double(cct->_conf->ms_inject_internal_delays);
          ldout(cct, 1) << "DispatchQueue::entry inject delay of " << t
                        << dendl;
          t.sleep();
        }
        switch (qitem.get_code()) {
        case D_BAD_REMOTE_RESET:
          msgr->ms_deliver_handle_remote_reset(qitem.get_connection());
          break;
        case D_CONNECT:
          msgr->ms_deliver_handle_connect(qitem.get_connection());
          break;
        case D_ACCEPT:
          msgr->ms_deliver_handle_accept(qitem.get_connection());
          break;
        case D_BAD_RESET:
          msgr->ms_deliver_handle_reset(qitem.get_connection());
          break;
        case D_CONN_REFUSED:
          msgr->ms_deliver_handle_refused(qitem.get_connection());
          break;
        default:
          ceph_abort();
        }
      } else {
        const Message::ref& m = qitem.get_message();
        if (stop) {
          ldout(cct,10) << " stop flag set, discarding " << m << " " << *m << dendl;
        } else {
          uint64_t msize = pre_dispatch(m);

          /**
           * Hand the message to Messenger::ms_deliver_dispatch(), which finds
           * the ms_dispatch() of the Monitor/OSD etc. and starts the logical
           * processing of the message:
           * Messenger::ms_deliver_dispatch()
           * --> OSD::ms_dispatch()
           */
          msgr->ms_deliver_dispatch(m);
          post_dispatch(m, msize);
        }
      }

      lock.Lock();
    }
    if (stop)
      break;

    // sleep until woken by DispatchQueue::enqueue()
    // wait for something to be put on queue
    cond.Wait(lock);
  }
  lock.Unlock();
}

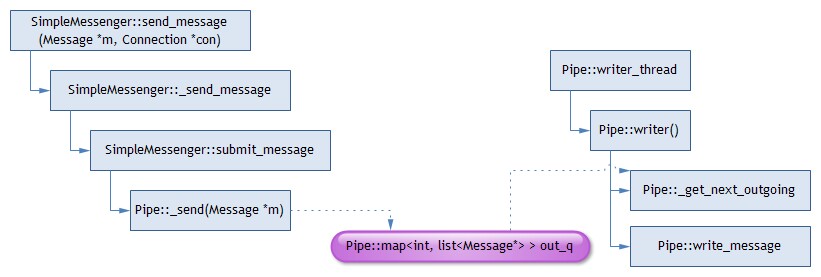
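The routing described in this section (fast dispatch bypasses the queue, everything else is enqueued by priority and drained by entry()) can be tried out with the following sketch. It is a simplification under stated assumptions, not the Ceph implementation: MiniDispatchQueue and Msg are hypothetical, kPrioLow is an assumed value for CEPH_MSG_PRIO_LOW, and the non-strict queue is served purely by priority, whereas the real mqueue.enqueue() also takes a cost.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <map>
#include <string>
#include <vector>

// Assumed value of CEPH_MSG_PRIO_LOW; verify against your Ceph tree.
const int kPrioLow = 64;

// Hypothetical message: a name, a priority, and whether a dispatcher
// would accept it for fast dispatch.
struct Msg {
  std::string name;
  int priority;
  bool fast;
};

class MiniDispatchQueue {
  // Buckets keyed by priority, highest priority first.
  typedef std::map<int, std::deque<Msg>, std::greater<int> > Buckets;

 public:
  // Reader side: fast-dispatchable messages bypass the queue entirely.
  void deliver(const Msg& m) {
    if (m.fast) {
      handled.push_back("fast:" + m.name);
      return;
    }
    enqueue(m);
  }

  // Mirrors the branch in enqueue(): high priorities go to the strict queue.
  void enqueue(const Msg& m) {
    if (m.priority >= kPrioLow)
      strict_[m.priority].push_back(m);
    else
      normal_[m.priority].push_back(m);
  }

  // What entry() does between two waits: drain until both queues are empty.
  void drain() {
    while (!strict_.empty() || !normal_.empty()) {
      Buckets& q = !strict_.empty() ? strict_ : normal_;
      Buckets::iterator top = q.begin();  // highest-priority bucket
      Msg m = top->second.front();
      top->second.pop_front();
      if (top->second.empty()) q.erase(top);
      handled.push_back("queued:" + m.name);
    }
  }

  std::vector<std::string> handled;  // order in which messages were handled

 private:
  Buckets strict_;
  Buckets normal_;
};

// Usage: one fast message and three queued ones with different priorities.
std::vector<std::string> dispatch_demo() {
  MiniDispatchQueue dq;
  dq.deliver(Msg{"osd_op", 127, true});
  dq.deliver(Msg{"low", 10, false});
  dq.deliver(Msg{"map", 196, false});
  dq.deliver(Msg{"ping", 127, false});
  dq.drain();
  assert(dq.handled.size() == 4);
  return dq.handled;
}
```

In the demo the fast message is handled first, before anything is drained. The queued messages then come out in descending priority order regardless of arrival order.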

Next, look at how a message produced by a subscriber (a Dispatcher such as the OSD) ends up in out_q:

Messenger::ms_deliver_dispatch()
  --> OSD::ms_dispatch()
  --> OSD::_dispatch()
  --> some command handler (no example given here)
  --> SimpleMessenger::send_message()
  --> SimpleMessenger::_send_message()
  --> SimpleMessenger::submit_message()
  --> Pipe::_send()
  --> cond.Signal() wakes the writer thread

void OSD::_dispatch(Message *m)
{
  ceph_assert(osd_lock.is_locked());
  dout(20) << "_dispatch " << m << " " << *m << dendl;

  switch (m->get_type()) {
    // -- don't need OSDMap --

    // map and replication
  case CEPH_MSG_OSD_MAP:
    handle_osd_map(static_cast<MOSDMap*>(m));
    break;

    // osd
  case MSG_OSD_SCRUB:
    handle_scrub(static_cast<MOSDScrub*>(m));
    break;

  case MSG_COMMAND:
    handle_command(static_cast<MCommand*>(m));
    return;

    // -- need OSDMap --

  case MSG_OSD_PG_CREATE:
    {
      OpRequestRef op = op_tracker.create_request<OpRequest, Message*>(m);
      if (m->trace)
        op->osd_trace.init("osd op", &trace_endpoint, &m->trace);
      // no map? starting up?
      if (!osdmap) {
        dout(7) << "no OSDMap, not booted" << dendl;
        logger->inc(l_osd_waiting_for_map);
        waiting_for_osdmap.push_back(op);
        op->mark_delayed("no osdmap");
        break;
      }

      // need OSDMap
      dispatch_op(op);
    }
  }
}
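OSD::_dispatch() above separates messages that can be handled without an OSDMap from those that need one, and parks the latter on waiting_for_osdmap while no map is available. The sketch below illustrates that deferral with hypothetical types (MiniOSD, OsdMsg, the TYPE_* constants). Replaying the parked operations once a map arrives is an assumption added so the example has a visible result; the listing above does not show that step.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <vector>

// Hypothetical subset of message types, named after the cases above.
enum MsgType { TYPE_OSD_MAP, TYPE_SCRUB, TYPE_PG_CREATE };

struct OsdMsg {
  MsgType type;
  std::string name;
};

class MiniOSD {
 public:
  MiniOSD() : have_map_(false) {}

  void dispatch(const OsdMsg& m) {
    switch (m.type) {
      // -- don't need a map --
      case TYPE_OSD_MAP:
        handle_map(m);
        break;
      case TYPE_SCRUB:
        log.push_back("scrub:" + m.name);
        break;
      // -- need a map --
      case TYPE_PG_CREATE:
        if (!have_map_) {
          waiting_.push_back(m);  // cf. waiting_for_osdmap.push_back(op)
          log.push_back("delayed:" + m.name);
          break;
        }
        dispatch_op(m);
        break;
    }
  }

  std::vector<std::string> log;  // what happened, in order

 private:
  void handle_map(const OsdMsg& m) {
    have_map_ = true;
    log.push_back("map:" + m.name);
    // Assumption: parked ops are replayed as soon as a map is available.
    std::deque<OsdMsg> ready;
    ready.swap(waiting_);
    for (std::size_t i = 0; i < ready.size(); ++i) dispatch_op(ready[i]);
  }

  void dispatch_op(const OsdMsg& m) { log.push_back("op:" + m.name); }

  bool have_map_;
  std::deque<OsdMsg> waiting_;
};

// Usage: a pg_create arrives before the first map and is delayed until then.
std::vector<std::string> osd_dispatch_demo() {
  MiniOSD osd;
  osd.dispatch(OsdMsg{TYPE_PG_CREATE, "pg1"});
  osd.dispatch(OsdMsg{TYPE_SCRUB, "s1"});
  osd.dispatch(OsdMsg{TYPE_OSD_MAP, "e10"});
  osd.dispatch(OsdMsg{TYPE_PG_CREATE, "pg2"});
  assert(osd.log.size() == 5);
  return osd.log;
}
```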

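Receiving messages

The receive flow is as follows:

The Pipe's reader thread reads a Message from the socket and puts it on the receive queue; the dispatch thread then takes the Message off the queue and hands it to the Dispatcher.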

Sending messages

The send flow is as follows:
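As a rough illustration of the producer side of that flow (the end of the call chain shown earlier, where Pipe::_send() queues the message and signals the writer), here is a minimal sketch. It assumes that out_q is a map from priority to a FIFO list and that the writer always takes from the highest priority first. OutQueuePipe, submit_message() and the wakeups counter are hypothetical stand-ins, and the counter replaces the real cond.Signal().

```cpp
#include <cassert>
#include <list>
#include <map>
#include <string>

// Hypothetical stand-in for Pipe, reduced to the outgoing queue.
class OutQueuePipe {
 public:
  OutQueuePipe() : wakeups(0) {}

  // cf. Pipe::_send(): queue by priority, then signal the writer.
  void send(int priority, const std::string& msg) {
    out_q_[priority].push_back(msg);
    ++wakeups;  // stand-in for cond.Signal()
  }

  // cf. _get_next_outgoing(): highest priority first, FIFO within a priority.
  // Returns false when nothing is queued.
  bool get_next_outgoing(std::string* out) {
    if (out_q_.empty()) return false;
    std::map<int, std::list<std::string> >::reverse_iterator p = out_q_.rbegin();
    *out = p->second.front();
    p->second.pop_front();
    if (p->second.empty()) out_q_.erase(p->first);
    return true;
  }

  int wakeups;  // how many times the writer would have been signalled

 private:
  std::map<int, std::list<std::string> > out_q_;
};

// Hypothetical stand-in for the messenger layer: pick the pipe and submit.
void submit_message(OutQueuePipe& pipe, int priority, const std::string& msg) {
  pipe.send(priority, msg);
}

// Usage: a high-priority message overtakes two default-priority replies.
void send_demo() {
  OutQueuePipe pipe;
  submit_message(pipe, 127, "reply1");
  submit_message(pipe, 196, "map");
  submit_message(pipe, 127, "reply2");
  std::string m;
  assert(pipe.get_next_outgoing(&m) && m == "map");
  assert(pipe.get_next_outgoing(&m) && m == "reply1");
  assert(pipe.get_next_outgoing(&m) && m == "reply2");
  assert(!pipe.get_next_outgoing(&m));
  assert(pipe.wakeups == 3);
}
```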

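Other resources

The article 解析Ceph: 网络层的处理 (Analyzing Ceph: network layer handling) gives a brief introduction to Ceph networking, but its description of the relationship between Pipe and Connection does not seem entirely accurate: Pipe is a wrapper around the socket, while Connection is a higher-level, more abstract concept.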