【ceph】 How the MDS handles mkdir

Related: "cephfs: MDS handling of mkdir (1)", weixin_39921689's blog, CSDN

Related: "cephfs: MDS handling of mkdir (2)", weixin_39730801's blog, CSDN

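An earlier post, "cephfs: mkdir in the user-space client", covered the client side but did not look at how the MDS handles mkdir. This post walks through the MDS-side flow. The running example is: mkdir /test/a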

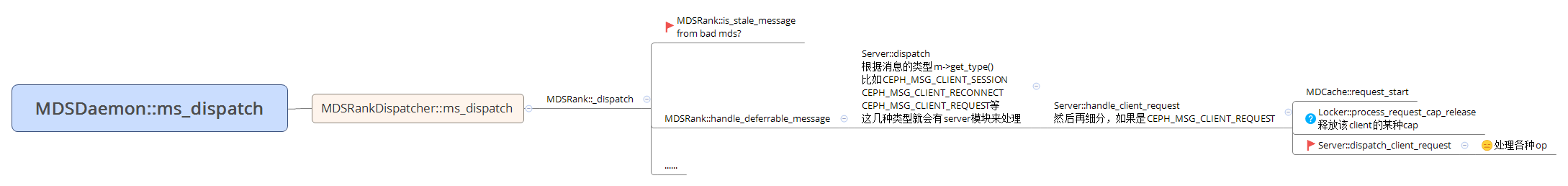

Generic handling of client requests in the MDS

Generic processing flow

As the figure above shows, before the mkdir request itself is handled, the cap_release entries carried in the request are processed first, in Locker::process_request_cap_release.

Locker::process_request_cap_release

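process_request_cap_release handles the ceph_mds_request_release entries carried in the request. The caps in a ceph_mds_request_release are the caps the client holds on the parent directory: for mkdir /test/a, they are the client's caps on "test". When sending the mkdir request, the client gives up its "Fs" cap: the caps on its "test" inode were "pAsLsXsFs", and without "Fs" they become "pAsLsXs". A simplified version of process_request_cap_release follows.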

```cpp
void Locker::process_request_cap_release(MDRequestRef& mdr, client_t client, const ceph_mds_request_release& item, std::string_view dname)
{ // item comes from the client; dname = "" (the client does not fill in dname)
  inodeno_t ino = (uint64_t)item.ino;  // ino = inode number of "test"
  uint64_t cap_id = item.cap_id;
  int caps = item.caps;                // caps = "pAsLsXs"
  int wanted = item.wanted;            // wanted = 0
  int seq = item.seq;
  int issue_seq = item.issue_seq;
  int mseq = item.mseq;
  CInode *in = mdcache->get_inode(ino);          // the CInode of "test"
  Capability *cap = in->get_client_cap(client);
  cap->confirm_receipt(seq, caps);               // set _issued and _pending of this client's cap on "test" to "pAsLsXs"
  adjust_cap_wanted(cap, wanted, issue_seq);     // update the cap's wanted
  eval(in, CEPH_CAP_LOCKS);
  ......
}
```

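In short, this brings the client's cap recorded in the MDS-cached CInode of "test" in line with what the client actually holds: the cap's _issued and _pending become "pAsLsXs". As a result, acquire_locks does not need to send this client a revoke message later.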
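A minimal C++ sketch of why this matters, assuming a simplified cap record that keeps only the issued and pending bitmasks (CapModel and needs_revoke are hypothetical names, not Ceph's Capability API, and sequence numbers are ignored). The bit values follow Ceph's ceph_fs.h. Once the MDS-side record matches what the client reported, no pending bit falls outside the "pAsLsXsFc" the filelock will allow, so no revoke is needed.

```cpp
#include <cassert>

// Cap bits as laid out in Ceph's ceph_fs.h (only the ones used here).
constexpr int CAP_PIN = 1;            // p
constexpr int CAP_AUTH_SHARED = 4;    // As
constexpr int CAP_LINK_SHARED = 16;   // Ls
constexpr int CAP_XATTR_SHARED = 64;  // Xs
constexpr int CAP_FILE_SHARED = 256;  // Fs
constexpr int CAP_FILE_CACHE = 1024;  // Fc

// Hypothetical, simplified view of one client's cap as cached by the MDS.
struct CapModel {
  int issued = 0;
  int pending = 0;
  // Stand-in for the effect of Capability::confirm_receipt in this case:
  // adopt the caps the client says it still holds (seq checks omitted).
  void confirm_receipt(int client_caps) {
    issued = client_caps;
    pending = client_caps;
  }
};

// A revoke is needed when some pending bit is outside the allowed mask.
bool needs_revoke(const CapModel& cap, int allowed) {
  return (cap.pending & ~allowed) != 0;
}
```

Before the release is applied, pending still contains Fs and needs_revoke returns true; after confirm_receipt with "pAsLsXs" (value 85) it returns false.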

Server::handle_client_mkdir

Once the cap_release entries are processed, Server::dispatch_client_request dispatches the request by op to Server::handle_client_mkdir. Its work falls into seven main steps:

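1. Get the CDentry of "a" and collect the metadata locks that must be taken: Server::rdlock_path_xlock_dentry.

2. Take the locks: Locker::acquire_locks. If this fails, for example because caps held by some clients must be revoked first, a C_MDS_RetryRequest is created and queued on the waiting list of "test"'s CInode; the request is picked up and processed again once the locks can be taken.

3. If the locks are taken, create the CInode of "a": Server::prepare_new_inode.

4. Create the CDir of "a": CInode::get_or_open_dirfrag.

5. Update the metadata in the CDirs and CInodes from "a" up to the root "/", and fill in the "mkdir" journal event: MDCache::predirty_journal_parents.

6. Create the Capability for "a": Locker::issue_new_caps.

7. Record the "mkdir" event, send the first reply, and submit the journal entry: Server::journal_and_reply.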

Now let's look at the code for this specific case:

```cpp
void Server::handle_client_mkdir(MDRequestRef& mdr)
{
  MClientRequest *req = mdr->client_request;
  set<SimpleLock*> rdlocks, wrlocks, xlocks;
  // Get the CDentry of "a" and fill rdlocks, wrlocks and xlocks with the metadata locks to take; dn is the CDentry of "a"
  CDentry *dn = rdlock_path_xlock_dentry(mdr, 0, rdlocks, wrlocks, xlocks, false, false, false);
  ......
  CDir *dir = dn->get_dir();        // dir is the CDir of "test"
  CInode *diri = dir->get_inode();  // diri is the CInode of "test"
  rdlocks.insert(&diri->authlock);  // add the authlock of "test"'s CInode to rdlocks
  // Try to take the locks; some cannot be taken yet, so return right away
  if (!mds->locker->acquire_locks(mdr, rdlocks, wrlocks, xlocks))
    return;
  ......
}
```

Server::rdlock_path_xlock_dentry

This function does the following:

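1. Gets the CDentry of "a".

2. Fills rdlocks, wrlocks and xlocks:

rdlocks: the lock of "a"'s CDentry, plus the snaplocks of the "/" and "test" CInodes (every inode from the root down to the parent directory)

wrlocks: the filelock and nestlock of "test"'s CInode

xlocks: the lock (a SimpleLock) of "a"'s CDentry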

The code:

```cpp
CDentry* Server::rdlock_path_xlock_dentry(MDRequestRef& mdr, int n, set<SimpleLock*>& rdlocks, set<SimpleLock*>& wrlocks, set<SimpleLock*>& xlocks,
                                          bool okexist, bool mustexist, bool alwaysxlock, file_layout_t **layout)
{ // n = 0; rdlocks, wrlocks and xlocks are empty; okexist = mustexist = alwaysxlock = false; layout = 0
  const filepath& refpath = n ? mdr->get_filepath2() : mdr->get_filepath(); // refpath: path.ino = 0x10000000001, path.path = "a"
  client_t client = mdr->get_client();
  CDir *dir = traverse_to_auth_dir(mdr, mdr->dn[n], refpath); // the CDir of "test"
  CInode *diri = dir->get_inode();                             // the CInode of "test"
  std::string_view dname = refpath.last_dentry();              // dname = "a"
  CDentry *dn;
  if (mustexist) { ...... // mustexist = false
  } else {
    dn = prepare_null_dentry(mdr, dir, dname, okexist);        // get the CDentry of "a"
    if (!dn)
      return 0;
  }
  mdr->dn[n].push_back(dn);                                    // n = 0, i.e. mdr->dn[0][0] = dn
  CDentry::linkage_t *dnl = dn->get_linkage(client, mdr);      // dnl: remote_ino = 0 && inode = 0
  mdr->in[n] = dnl->get_inode();                               // mdr->in[0] = 0
  // -- lock --
  for (int i=0; i<(int)mdr->dn[n].size(); i++)                 // (int)mdr->dn[n].size() = 1
    rdlocks.insert(&mdr->dn[n][i]->lock);                      // add the lock of "a"'s CDentry to rdlocks
  if (alwaysxlock || dnl->is_null())                           // dnl->is_null() is true
    xlocks.insert(&dn->lock);                                  // new dn, xlock: add the lock of "a"'s CDentry to xlocks
  else ......
  // add the filelock and nestlock of the parent ("test") CInode to wrlocks
  wrlocks.insert(&dn->get_dir()->inode->filelock); // also, wrlock on dir mtime
  wrlocks.insert(&dn->get_dir()->inode->nestlock); // also, wrlock on dir mtime
  if (layout) ......
  else
    mds->locker->include_snap_rdlocks(rdlocks, dn->get_dir()->inode); // add the snaplock of every CInode on the path, from "test" up to "/", to rdlocks
  return dn;
}
```
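The lock sets filled above, plus the authlock rdlock that handle_client_mkdir adds afterwards, can be modeled as follows. This is a sketch under stated assumptions: locks are named by hypothetical "object.lock" strings instead of SimpleLock pointers, the dentry is called "dn:a", and mkdir_lock_sets is not a Ceph function.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical model: a lock is identified by an "<object>.<lock>" string.
struct LockSets {
  std::set<std::string> rdlocks, wrlocks, xlocks;
};

// Sets collected for creating `dname` under the directory whose ancestry is
// `ancestors` (root first, parent last).
LockSets mkdir_lock_sets(const std::vector<std::string>& ancestors,
                         const std::string& dname) {
  assert(!ancestors.empty());
  LockSets s;
  const std::string dn = "dn:" + dname;
  const std::string& parent = ancestors.back();
  s.rdlocks.insert(dn + ".lock");          // dentry lock
  s.xlocks.insert(dn + ".lock");           // null dentry, so it is xlocked too
  s.wrlocks.insert(parent + ".filelock");  // parent dirstat
  s.wrlocks.insert(parent + ".nestlock");  // parent rstat
  for (const auto& ino : ancestors)        // include_snap_rdlocks: root..parent
    s.rdlocks.insert(ino + ".snaplock");
  s.rdlocks.insert(parent + ".authlock");  // added by handle_client_mkdir
  return s;
}
```

For mkdir /test/a, mkdir_lock_sets({"/", "test"}, "a") yields four rdlocks, two wrlocks and one xlock, matching the lists above.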

prepare_null_dentry creates the new CDentry for "a":

```cpp
CDentry* Server::prepare_null_dentry(MDRequestRef& mdr, CDir *dir, std::string_view dname, bool okexist)
{ // dir is the CDir of "test", dname = "a"
  // does it already exist?
  CDentry *dn = dir->lookup(dname);
  if (dn) {......} // the lookup finds nothing, so dn is NULL
  // create
  dn = dir->add_null_dentry(dname, mdcache->get_global_snaprealm()->get_newest_seq() + 1); // create the CDentry
  dn->mark_new(); // sets state |= 1
  return dn;
}
```

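In other words, Server::prepare_null_dentry first looks in the items of the parent directory's ("test") CDir for a CDentry named "a", and creates a new CDentry if none is found. It is easy to get lost in the MDS code without knowing its metadata structures, so here is the CDentry class definition. Note that CDentry inherits from LRUObject: a CDentry is cached metadata, and a simple LRU policy keeps the cache within its space budget. First, the meaning of the member variables: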

```cpp
class CDentry : public MDSCacheObject, public LRUObject, public Counter<CDentry> {
  ......
  // member variables
public:
  __u32 hash;           // hash of "a", computed by ceph_str_hash_rjenkins
  snapid_t first, last;
  elist<CDentry*>::item item_dirty, item_dir_dirty;
  elist<CDentry*>::item item_stray;
  // lock
  static LockType lock_type;        // LockType CDentry::lock_type(CEPH_LOCK_DN)
  static LockType versionlock_type; // LockType CDentry::versionlock_type(CEPH_LOCK_DVERSION)
  SimpleLock lock;       // initially lock.type->type = CEPH_LOCK_DN, lock.state = LOCK_SYNC
  LocalLock versionlock; // initially versionlock.type->type = CEPH_LOCK_DVERSION, versionlock.state = LOCK_LOCK
  mempool::mds_co::map<client_t,ClientLease*> client_lease_map;
protected:
  CDir *dir = nullptr;  // the parent directory's CDir, i.e. the CDir of "test"
  linkage_t linkage;    // holds the CInode; during mkdir the CInode does not exist yet, so linkage is empty
  mempool::mds_co::list<linkage_t> projected; // linkage is never modified in place:
                                              // a temporary linkage_t holding the new value is created and stored in projected;
                                              // once the journal entry is flushed, the temporary value is copied into linkage and dropped.
                                              // So projected holds the pending (projected) values of linkage_t.
  version_t version = 0;
  version_t projected_version = 0; // what it will be when i unlock/commit.
private:
  mempool::mds_co::string name; // file or directory name, name = "a"
public:
  struct linkage_t { // linkage_t mainly stores the CInode pointer
    CInode *inode = nullptr;
    inodeno_t remote_ino = 0;
    unsigned char remote_d_type = 0;
    ......
  };
};
```
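The projected-linkage scheme described in the comments can be sketched as follows. Dentry, Linkage, push_projected and pop_projected are hypothetical simplifications, not CDentry's real interface; the sketch only shows that readers of the committed linkage see nothing until the projected value is popped after the journal flush.

```cpp
#include <cassert>
#include <cstdint>
#include <list>

struct Inode {};  // placeholder for CInode

// Simplified stand-in for CDentry::linkage_t.
struct Linkage {
  Inode* inode = nullptr;
  uint64_t remote_ino = 0;
  bool is_null() const { return inode == nullptr && remote_ino == 0; }
};

// Hypothetical dentry using the projected-value pattern.
class Dentry {
 public:
  // Start a change: copy the newest value and let the caller edit the copy.
  Linkage& push_projected() {
    projected_.push_back(projected_.empty() ? linkage_ : projected_.back());
    return projected_.back();
  }
  // Journal entry is safe: apply the oldest projected value.
  void pop_projected() {
    assert(!projected_.empty());
    linkage_ = projected_.front();
    projected_.pop_front();
  }
  const Linkage& linkage() const { return linkage_; }
  const Linkage& projected_linkage() const {
    return projected_.empty() ? linkage_ : projected_.back();
  }

 private:
  Linkage linkage_;               // committed value
  std::list<Linkage> projected_;  // pending values, oldest first
};
```

During mkdir the new CInode pointer would go into the projected copy first; linkage() keeps returning a null linkage until pop_projected() runs.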

With rdlocks, wrlocks and xlocks filled, the next step is to acquire the locks in these sets. The metadata can be modified only once every required lock is held.

Locker::acquire_locks

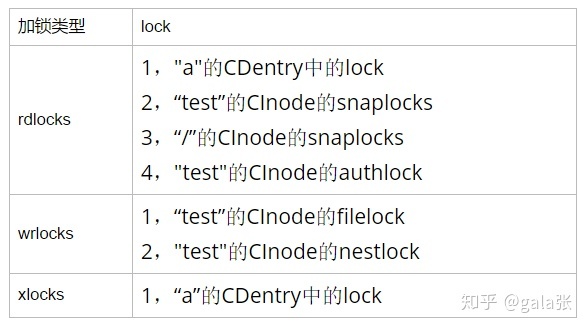

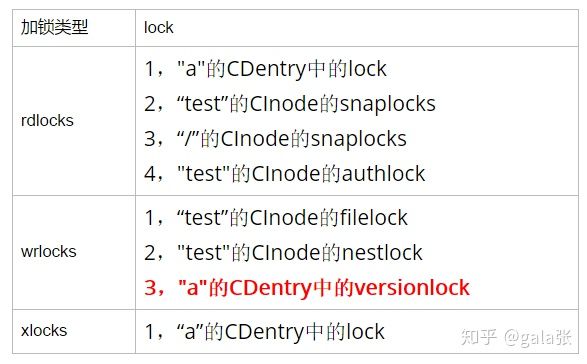

Before acquire_locks runs, these are the locks to acquire:

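An rdlock and an xlock on the lock of "a"'s CDentry, because the contents of "a"'s CDentry are read and written next. (One oddity: once a lock is xlocked, an rdlock is unnecessary, and indeed only the xlock is taken below.) A wrlock on the filelock and nestlock of the parent "test", because the dirstat and rstat in the inode of "test"'s CInode are modified. An rdlock on the authlock of "test", because the permission-related fields of "test" (mode, uid, gid, etc.) are read. The rest are snaplocks, which relate to snapshots; snapshots are not covered here.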

Why each lock is needed:

1. An rdlock on the authlock of "test"'s CInode, because Server::prepare_new_inode reads the mode of "test"'s CInode:

```cpp
if (diri->inode.mode & S_ISGID) {
  dout(10) << " dir is sticky" << dendl;
  in->inode.gid = diri->inode.gid;
  if (S_ISDIR(mode)) {
    dout(10) << " new dir also sticky" << dendl;
    in->inode.mode |= S_ISGID;
  }
}
```

2. A wrlock on the filelock and nestlock of "test"'s CInode, because MDCache::predirty_journal_parents later modifies the dirstat and rstat in the inode_t of "test"'s CInode: dirstat is protected by filelock, rstat by nestlock.

3. An xlock on "a"'s CDentry, because its linkage_t is filled in later (with the CInode pointer and so on).

4. Later, a wrlock is also taken on the CInode's versionlock, because the version in the CInode's inode_t is modified; a wrlock is also taken on the nestlock of the "/" CInode.
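The S_ISGID rule excerpted in item 1 can be exercised on its own. This is a minimal sketch that uses the POSIX mode macros from <sys/stat.h>. NewInode, inherit_from_parent and the caller_gid parameter are hypothetical names, and the real prepare_new_inode sets many more fields.

```cpp
#include <cassert>
#include <sys/stat.h>
#include <sys/types.h>

// Only the two fields the setgid rule touches.
struct NewInode {
  mode_t mode;
  gid_t gid;
};

// A setgid parent passes its gid down; a new directory also inherits the
// setgid bit. Otherwise the caller's gid is used.
NewInode inherit_from_parent(mode_t parent_mode, gid_t parent_gid,
                             mode_t new_mode, gid_t caller_gid) {
  NewInode in{new_mode, caller_gid};
  if (parent_mode & S_ISGID) {
    in.gid = parent_gid;
    if (S_ISDIR(new_mode))
      in.mode |= S_ISGID;
  }
  return in;
}
```

Reading parent_mode and parent_gid is exactly why the parent's authlock must be rdlocked: both fields are protected by it.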

Locker::acquire_locks runs to several hundred lines. I split it into three steps.

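Step 1 consolidates xlocks, wrlocks and rdlocks. The three sets may contain the same lock, so every lock is merged into a single set (sorted). First, xlocks is walked: the lock of "a"'s CDentry goes into sorted, "a"'s CDentry goes into mustpin, and the versionlock of "a"'s CDentry is added to wrlocks. Next, wrlocks is walked: the versionlock of "a"'s CDentry and the filelock and nestlock of "test"'s CInode go into sorted, and "test"'s CInode goes into mustpin. Finally, rdlocks is walked: the lock of "a"'s CDentry, the authlock and snaplock of "test"'s CInode, and the snaplock of the "/" CInode go into sorted, and the "/" CInode goes into mustpin. The code: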

```cpp
bool Locker::acquire_locks(MDRequestRef& mdr, set<SimpleLock*> &rdlocks, set<SimpleLock*> &wrlocks, set<SimpleLock*> &xlocks,
                           map<SimpleLock*,mds_rank_t> *remote_wrlocks, CInode *auth_pin_freeze, bool auth_pin_nonblock)
{ // remote_wrlocks = NULL, auth_pin_freeze = NULL, auth_pin_nonblock = false
  client_t client = mdr->get_client();
  set<SimpleLock*, SimpleLock::ptr_lt> sorted; // sort everything we will lock
  set<MDSCacheObject*> mustpin;                 // items to authpin
  // xlocks: only one lock here, the lock of "a"'s CDentry
  for (set<SimpleLock*>::iterator p = xlocks.begin(); p != xlocks.end(); ++p) {
    SimpleLock *lock = *p;
    sorted.insert(lock);                  // add the lock of "a"'s CDentry to sorted
    mustpin.insert(lock->get_parent());   // add the CDentry to mustpin
    // augment xlock with a versionlock?
    if (lock->get_type() == CEPH_LOCK_DN) {
      CDentry *dn = (CDentry*)lock->get_parent(); // dn is the CDentry of "a"
      if (mdr->is_master()) {
        // master. wrlock versionlock so we can pipeline dentry updates to journal.
        wrlocks.insert(&dn->versionlock); // add the versionlock of "a"'s CDentry to wrlocks
      } else { ...... }
    } ......
  }
  // wrlocks: now three locks, the versionlock of "a"'s CDentry
  // and the filelock and nestlock of "test"'s CInode
  for (set<SimpleLock*>::iterator p = wrlocks.begin(); p != wrlocks.end(); ++p) {
    MDSCacheObject *object = (*p)->get_parent();
    sorted.insert(*p);                    // add all three to sorted
    if (object->is_auth())
      mustpin.insert(object);             // adds "test"'s CInode to mustpin
    else if ......
  }
  // rdlocks: four locks, the lock of "a"'s CDentry,
  // the authlock and snaplock of "test"'s CInode, and the snaplock of "/"'s CInode
  for (set<SimpleLock*>::iterator p = rdlocks.begin(); p != rdlocks.end(); ++p) {
    MDSCacheObject *object = (*p)->get_parent();
    sorted.insert(*p);                    // add all four to sorted
    if (object->is_auth())
      mustpin.insert(object);             // adds "/"'s CInode to mustpin
    else if ......
  }
  ......
}
```

To sum up, sorted holds 7 locks: the lock and versionlock of "a"'s CDentry; the filelock, nestlock, authlock and snaplock of "test"'s CInode; and the snaplock of "/".
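Here is a sketch of step 1. It identifies each lock by a (parent-is-inode, parent id, lock type) tuple. Lock type values come from ceph_fs.h. "/" uses inode number 1, "test" uses the 0x10000000001 seen in refpath above, and the dentry id 0 is made up. Ordering by this tuple is an assumed stand-in for SimpleLock::ptr_lt, not a copy of it, but it reproduces the order this article lists for sorted in step 3.

```cpp
#include <cassert>
#include <set>
#include <tuple>
#include <utility>

// Lock type values from Ceph's ceph_fs.h (subset).
enum LockType : int {
  LOCK_DVERSION = 1, LOCK_DN = 2, LOCK_ISNAP = 32,
  LOCK_IFILE = 64, LOCK_IAUTH = 128, LOCK_INEST = 1024,
};

// Hypothetical lock identity: (parent is an inode?, parent id, lock type).
// Dentry locks sort first, then inodes by id, then by lock type.
using LockKey = std::tuple<bool, unsigned long long, int>;
using ObjKey = std::pair<bool, unsigned long long>;

struct Merged {
  std::set<LockKey> sorted;  // every lock to take, deduplicated
  std::set<ObjKey> mustpin;  // every object to auth_pin (all assumed auth)
};

static void add_all(const std::set<LockKey>& locks, Merged& m) {
  for (const auto& l : locks) {
    m.sorted.insert(l);
    m.mustpin.emplace(std::get<0>(l), std::get<1>(l));
  }
}

// A dentry xlock adds a versionlock wrlock; then all three sets are merged.
Merged merge_locks(const std::set<LockKey>& rdlocks, std::set<LockKey> wrlocks,
                   const std::set<LockKey>& xlocks) {
  Merged m;
  for (const auto& l : xlocks)
    if (std::get<2>(l) == LOCK_DN)
      wrlocks.emplace(std::get<0>(l), std::get<1>(l), LOCK_DVERSION);
  add_all(xlocks, m);
  add_all(wrlocks, m);
  add_all(rdlocks, m);
  return m;
}
```

Fed the mkdir sets (four rdlocks, two wrlocks, one xlock), merge_locks yields 7 entries in sorted, starting with the dentry versionlock and ending with the nestlock of "test", and 3 objects in mustpin.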

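Step 2 auth_pins the metadata. From step 1, the MDSCacheObjects to auth_pin are "a"'s CDentry, "test"'s CInode and "/"'s CInode. The code first walks these three and checks whether each can be auth_pinned. This involves two checks: auth and pin. If the current MDS is not the auth for an MDSCacheObject, the auth_pin must be requested from the auth MDS. If the MDSCacheObject is frozen or freezing, it cannot be auth_pinned; in that case the request is added to a wait queue until auth_pin becomes possible, and the function returns false immediately. Only when everything can be pinned does the second loop auth_pin each object, incrementing its auth_pins. The code: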

```cpp
bool Locker::acquire_locks(MDRequestRef& mdr, set<SimpleLock*> &rdlocks, set<SimpleLock*> &wrlocks, set<SimpleLock*> &xlocks,
                           map<SimpleLock*,mds_rank_t> *remote_wrlocks, CInode *auth_pin_freeze, bool auth_pin_nonblock)
{
  ......
  // AUTH PINS
  map<mds_rank_t, set<MDSCacheObject*> > mustpin_remote; // mds -> (object set)
  // can i auth pin them all now?
  // mustpin holds three objects: "a"'s CDentry, "test"'s CInode, "/"'s CInode
  marker.message = "failed to authpin local pins";
  for (set<MDSCacheObject*>::iterator p = mustpin.begin(); p != mustpin.end(); ++p) {
    MDSCacheObject *object = *p;
    if (mdr->is_auth_pinned(object)) { ...... } // already pinned if the object is in mdr's auth_pins
    if (!object->is_auth()) { ...... }
    // Not auth: the CDentry/CInode is added to mustpin_remote so that an MMDSSlaveRequest is sent
    // to the auth MDS further below; it is also added to waiting_on_slave, and the function returns.
    int err = 0;
    if (!object->can_auth_pin(&err)) {
      // A CDentry can be auth_pinned if its parent ("test") CDir can: the CDir must be auth
      // and neither frozen nor freezing.
      // A CInode can be auth_pinned if it is auth, is neither frozen nor freezing, its auth_pin
      // is not frozen, and its parent CDentry can be auth_pinned.
      // Otherwise add_waiter and return; the request is retried when woken up.
      if (err == MDSCacheObject::ERR_EXPORTING_TREE) {
        marker.message = "failed to authpin, subtree is being exported";
      } else if (err == MDSCacheObject::ERR_FRAGMENTING_DIR) {
        marker.message = "failed to authpin, dir is being fragmented";
      } else if (err == MDSCacheObject::ERR_EXPORTING_INODE) {
        marker.message = "failed to authpin, inode is being exported";
      }
      object->add_waiter(MDSCacheObject::WAIT_UNFREEZE, new C_MDS_RetryRequest(mdcache, mdr));
      ......
      return false;
    }
  }
  // ok, grab local auth pins
  for (set<MDSCacheObject*>::iterator p = mustpin.begin(); p != mustpin.end(); ++p) {
    MDSCacheObject *object = *p;
    if (mdr->is_auth_pinned(object)) { ...... }
    else if (object->is_auth()) {
      mdr->auth_pin(object); // auth_pin: increments the object's auth_pins
    }
    ......
  }
  ......
}
```
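The two loops ensure that nothing is pinned unless everything can be pinned. Below is a minimal sketch of that check-then-commit pattern. It assumes every object is local (auth) and reduces can_auth_pin to a single frozen flag. CacheObject and auth_pin_all are hypothetical names.

```cpp
#include <cassert>
#include <vector>

// Hypothetical cache object carrying only what this check needs.
struct CacheObject {
  bool frozen = false;  // frozen or freezing (e.g. subtree being exported)
  int auth_pins = 0;
  bool can_auth_pin() const { return !frozen; }
};

// Pass 1 checks every object; pass 2 pins them all. On failure nothing has
// been pinned, and the caller should wait (WAIT_UNFREEZE in Ceph) and retry.
bool auth_pin_all(const std::vector<CacheObject*>& mustpin) {
  for (const CacheObject* o : mustpin)
    if (!o->can_auth_pin())
      return false;
  for (CacheObject* o : mustpin)
    ++o->auth_pins;
  return true;
}
```

If "test" were frozen, the "a" dentry and "/" would stay unpinned instead of being left half-pinned while the request waits.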

Step 3 actually takes the locks. After the preceding steps, the lock sets have changed as follows:

wrlocks now also contains the versionlock of "a"'s CDentry. sorted holds 7 locks, in this order: the versionlock and lock of "a"'s CDentry, the snaplock of "/", and the snaplock, filelock, authlock and nestlock of "test"'s CInode.

```cpp
bool Locker::acquire_locks(MDRequestRef& mdr, set<SimpleLock*> &rdlocks, set<SimpleLock*> &wrlocks, set<SimpleLock*> &xlocks,
                           map<SimpleLock*,mds_rank_t> *remote_wrlocks, CInode *auth_pin_freeze, bool auth_pin_nonblock)
{
  ......
  // caps i'll need to issue
  set<CInode*> issue_set;
  bool result = false;
  // acquire locks.
  // make sure they match currently acquired locks.
  set<SimpleLock*, SimpleLock::ptr_lt>::iterator existing = mdr->locks.begin();
  for (set<SimpleLock*, SimpleLock::ptr_lt>::iterator p = sorted.begin(); p != sorted.end(); ++p) {
    bool need_wrlock = !!wrlocks.count(*p); // the first lock is the versionlock of "a"'s CDentry
    bool need_remote_wrlock = !!(remote_wrlocks && remote_wrlocks->count(*p));
    // lock
    if (xlocks.count(*p)) {
      marker.message = "failed to xlock, waiting";
      // xlock_start on the lock of "a"'s CDentry; its state goes
      // LOCK_SYNC --> LOCK_SYNC_LOCK --> LOCK_LOCK (simple_lock) --> LOCK_LOCK_XLOCK --> LOCK_PREXLOCK (simple_xlock)
      // --> LOCK_XLOCK (xlock_start)
      if (!xlock_start(*p, mdr)) // xlock
        goto out;
      dout(10) << " got xlock on " << **p << " " << *(*p)->get_parent() << dendl;
    } else if (need_wrlock || need_remote_wrlock) {
      if (need_wrlock && !mdr->wrlocks.count(*p)) {
        marker.message = "failed to wrlock, waiting";
        // nowait if we have already gotten remote wrlock
        if (!wrlock_start(*p, mdr, need_remote_wrlock)) // wrlock
          goto out;
        dout(10) << " got wrlock on " << **p << " " << *(*p)->get_parent() << dendl;
      }
    } else {
      marker.message = "failed to rdlock, waiting";
      if (!rdlock_start(*p, mdr)) // rdlock
        goto out;
      dout(10) << " got rdlock on " << **p << " " << *(*p)->get_parent() << dendl;
    }
  }
  ......
out:
  issue_caps_set(issue_set);
  return result;
}
```

The loop walks sorted:

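- A wrlock on the versionlock of "a"'s CDentry. The check is whether it can be wrlocked, that is, whether it is not already xlocked. Here the wrlock is granted immediately, with no lock state transition: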

```cpp
bool can_wrlock() const {
  return !is_xlocked();
}
```

- An xlock on the lock of "a"'s CDentry, via xlock_start. The lock starts in LOCK_SYNC, which does not allow an xlock directly. The details of this check are left for a later post on locks:

```cpp
bool can_xlock(client_t client) const {
  return get_sm()->states[state].can_xlock == ANY ||
    (get_sm()->states[state].can_xlock == AUTH && parent->is_auth()) ||
    (get_sm()->states[state].can_xlock == XCL && client >= 0 && get_xlock_by_client() == client);
}
```

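The simplelock state table shows get_sm()->states[state].can_xlock == 0 for LOCK_SYNC, so the xlock condition is not met and the lock must go through state transitions. Locker::simple_lock first moves it to LOCK_LOCK: LOCK_SYNC --> LOCK_SYNC_LOCK --> LOCK_LOCK. The step from LOCK_SYNC_LOCK to LOCK_LOCK requires that the lock is not leased and not rdlocked, and that, if the CDentry has replicas on other MDSs, a LOCK_AC_LOCK message is sent to those MDSs so they lock their replicas too. All of this holds here, because "a" is being created. LOCK_LOCK does not allow an xlock either, so Locker::simple_xlock transitions further: LOCK_LOCK --> LOCK_LOCK_XLOCK --> LOCK_PREXLOCK. Once in LOCK_PREXLOCK the xlock can be taken, and the state finally becomes LOCK_XLOCK.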

- An rdlock on the snaplocks of "/" and "test". Both are in LOCK_SYNC, which allows an rdlock directly, so no state transition is involved.

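- A wrlock on the filelock of "test"'s CInode. It starts in LOCK_SYNC, which does not allow a wrlock, so Locker::simple_lock transitions it. The lock first enters the intermediate state LOCK_SYNC_LOCK, and the code then checks whether it can move on to LOCK_LOCK. CInode::issued_caps_need_gather finds that another client holds "Fs" on the "test" inode. In LOCK_SYNC_LOCK a filelock only lets clients hold "Fc"; no other "F" caps are allowed. So the filelock cannot move to LOCK_LOCK, and the "Fs" held by the other client must first be revoked through Locker::issue_caps.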

```cpp
void Locker::simple_lock(SimpleLock *lock, bool *need_issue)
{ // need_issue = NULL
  CInode *in = 0;
  if (lock->get_cap_shift()) // the lock type is CEPH_LOCK_IFILE, so cap_shift is 8
    in = static_cast<CInode *>(lock->get_parent());
  int old_state = lock->get_state(); // old_state = LOCK_SYNC
  switch (lock->get_state()) {
  case LOCK_SYNC: lock->set_state(LOCK_SYNC_LOCK); break;
  ......
  }
  int gather = 0;
  if (lock->is_leased()) { ...... }
  if (lock->is_rdlocked()) gather++;
  if (in && in->is_head()) {
    if (in->issued_caps_need_gather(lock)) { // true here
      if (need_issue) *need_issue = true;
      else issue_caps(in);
      gather++;
    }
  }
  ......
  if (gather) {
    lock->get_parent()->auth_pin(lock);
    ......
  } else { ...... }
}
```
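Here is a reduced model of the gather decision in simple_lock. It assumes only two gather sources (rdlocks and caps issued to other clients) and treats the cap mask allowed in LOCK_SYNC_LOCK as an input. FileLockModel and this simple_lock are hypothetical names; the model ignores leases, replicas and loner/xlocker caps.

```cpp
#include <cassert>

enum class State { SYNC, SYNC_LOCK, LOCK };

// Hypothetical filelock view: state, rdlock count, caps held by other clients.
struct FileLockModel {
  State state = State::SYNC;
  int rdlocks = 0;
  int other_issued = 0;
};

// Enter SYNC_LOCK, count what must be gathered, and move on to LOCK only when
// nothing is outstanding. Returns the gather count; non-zero means caps must
// be revoked (or rdlocks drained) and the request has to wait.
int simple_lock(FileLockModel& l, int allowed_in_sync_lock) {
  assert(l.state == State::SYNC);
  l.state = State::SYNC_LOCK;
  int gather = 0;
  if (l.rdlocks > 0) ++gather;
  if (l.other_issued & ~allowed_in_sync_lock) ++gather;  // like issued_caps_need_gather
  if (gather == 0) l.state = State::LOCK;
  return gather;
}
```

When another client holds Fs and only Fc is allowed, the call returns 1 and the lock stays in SYNC_LOCK, which is the situation above. With nothing issued, it goes straight to LOCK, which is what happens on the retry later in this post.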

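The code of issue_caps is shown below. It walks each client's Capability stored in client_caps of "test"'s CInode. get_caps_allowed_by_type now gives "pAsLsXsFc" as the allowed caps, but a client holds "pAsLsXsFs", so a CEPH_CAP_OP_REVOKE message is sent to that client to make it release "Fs".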

```cpp
bool Locker::issue_caps(CInode *in, Capability *only_cap)
{
  // allowed caps are determined by the lock mode.
  int all_allowed = in->get_caps_allowed_by_type(CAP_ANY);         // all_allowed = "pAsLsXsFc"
  int loner_allowed = in->get_caps_allowed_by_type(CAP_LONER);     // loner_allowed = "pAsLsXsFc"
  int xlocker_allowed = in->get_caps_allowed_by_type(CAP_XLOCKER); // xlocker_allowed = "pAsLsXsFc"
  // count conflicts with
  int nissued = 0;
  // client caps
  map<client_t, Capability>::iterator it;
  if (only_cap) ......                 // only_cap = NULL
  else it = in->client_caps.begin();
  for (; it != in->client_caps.end(); ++it) {
    Capability *cap = &it->second;
    if (cap->is_stale()) continue;     // skip stale caps
    // do not issue _new_ bits when size|mtime is projected
    int allowed;
    if (loner == it->first) ......
    else allowed = all_allowed;        // allowed = all_allowed = "pAsLsXsFc"
    // add in any xlocker-only caps (for locks this client is the xlocker for)
    allowed |= xlocker_allowed & in->get_xlocker_mask(it->first); // allowed |= 0
    int pending = cap->pending();      // pending = "pAsLsXsFs"
    int wanted = cap->wanted();        // wanted = "AsLsXsFsx"
    // are there caps that the client _wants_ and can have, but aren't pending?
    // or do we need to revoke?
    if (((wanted & allowed) & ~pending) || // missing wanted+allowed caps
        (pending & ~allowed)) {            // need to revoke ~allowed caps; (pending & ~allowed) = "Fs"
      // issue
      nissued++;
      // include caps that clients generally like, while we're at it.
      int likes = in->get_caps_liked(); // likes = "pAsxLsxXsxFsx"
      int before = pending;             // before = "pAsLsXsFs"
      long seq;
      if (pending & ~allowed)
        // ("AsLsXsFsx" | "pAsxLsxXsxFsx") & "pAsLsXsFc" & "pAsLsXsFs" = "pAsLsXs"
        seq = cap->issue((wanted|likes) & allowed & pending); // if revoking, don't issue anything new.
      else ......
      int after = cap->pending();       // after = "pAsLsXs"
      if (cap->is_new()) { ......
      } else {
        int op = (before & ~after) ? CEPH_CAP_OP_REVOKE : CEPH_CAP_OP_GRANT; // op = CEPH_CAP_OP_REVOKE
        if (op == CEPH_CAP_OP_REVOKE) {
          revoking_caps.push_back(&cap->item_revoking_caps);
          revoking_caps_by_client[cap->get_client()].push_back(&cap->item_client_revoking_caps);
          cap->set_last_revoke_stamp(ceph_clock_now());
          cap->reset_num_revoke_warnings();
        }
        auto m = MClientCaps::create(op, in->ino(), in->find_snaprealm()->inode->ino(), cap->get_cap_id(),
                                     cap->get_last_seq(), after, wanted, 0, cap->get_mseq(), mds->get_osd_epoch_barrier());
        in->encode_cap_message(m, cap);
        mds->send_message_client_counted(m, it->first);
      }
    }
  }
  return (nissued == 0); // true if no re-issued, no callbacks
}
```
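The bit arithmetic in the comments above can be checked mechanically. The sketch below parses Ceph-style cap strings into bitmasks: "p" is CEPH_CAP_PIN, and A/L/X/F select shifts 2/4/6/8 for the generic bits s, x, c, r, b, w, a, l, as in ceph_fs.h. It then reproduces the revoke branch. parse_caps and revoke_step are hypothetical helpers, not Ceph functions.

```cpp
#include <cassert>
#include <string>

// Returns the cap bitmask for a string such as "pAsLsXsFc", or -1 if the
// string contains an unknown letter or a generic letter before A/L/X/F.
int parse_caps(const std::string& s) {
  int caps = 0, shift = -1;
  for (char ch : s) {
    switch (ch) {
      case 'p': caps |= 1; continue;  // CEPH_CAP_PIN
      case 'A': shift = 2; continue;  // auth
      case 'L': shift = 4; continue;  // link
      case 'X': shift = 6; continue;  // xattr
      case 'F': shift = 8; continue;  // file
    }
    int gen = 0;  // generic bits: s=1 x=2 c=4 r=8 b=16 w=32 a=64 l=128
    switch (ch) {
      case 's': gen = 1; break;   case 'x': gen = 2; break;
      case 'c': gen = 4; break;   case 'r': gen = 8; break;
      case 'b': gen = 16; break;  case 'w': gen = 32; break;
      case 'a': gen = 64; break;  case 'l': gen = 128; break;
    }
    if (gen == 0 || shift < 0) return -1;
    caps |= gen << shift;
  }
  return caps;
}

struct IssueResult { int after; bool revoke; };

// Revoke branch of issue_caps as bit arithmetic: keep only what the client
// wants or likes, is allowed, and already has pending.
IssueResult revoke_step(int pending, int wanted, int likes, int allowed) {
  assert((pending & ~allowed) != 0);  // only the revoke branch is modeled
  const int after = (wanted | likes) & allowed & pending;
  return {after, (pending & ~after) != 0};  // before & ~after != 0 => REVOKE
}
```

With the values from this walkthrough, parse_caps("pAsLsXsFs") is 341 and the computed after is 85, i.e. "pAsLsXs". Because before & ~after is non-zero, the op is CEPH_CAP_OP_REVOKE.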

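After the revoke message is sent, Locker::wrlock_start breaks out of its loop, creates a C_MDS_RetryRequest and puts it on a wait queue. Once the lock reaches a stable state, the request is taken off the queue and executed again.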

```cpp
bool Locker::wrlock_start(SimpleLock *lock, MDRequestRef& mut, bool nowait)
{ // nowait = false
  ......
  while (1) {
    // wrlock?
    // For a ScatterLock, sm is sm_filelock and states is filelock. The CInode's filelock->state is
    // LOCK_SYNC_LOCK and filelock[LOCK_SYNC_LOCK].can_wrlock == 0, so no wrlock can be taken
    if (lock->can_wrlock(client) && (!want_scatter || lock->get_state() == LOCK_MIX)) { ...... }
    ......
    if (!lock->is_stable()) break; // filelock->state is LOCK_SYNC_LOCK, which is not stable, so leave the loop
    ......
  }
  if (!nowait) {
    dout(7) << "wrlock_start waiting on " << *lock << " on " << *lock->get_parent() << dendl;
    // queue C_MDS_RetryRequest(mdcache, mut) until the filelock of "test"'s CInode becomes stable
    lock->add_waiter(SimpleLock::WAIT_STABLE, new C_MDS_RetryRequest(mdcache, mut));
    nudge_log(lock);
  }
  return false;
}
```
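The wait-and-retry pattern used here can be sketched as below. It is a simplified model under stated assumptions. LockModel, Request and handle_mkdir are hypothetical names. Ceph's real waiters are context objects such as C_MDS_RetryRequest, and re-dispatch goes through MDCache::dispatch_request.

```cpp
#include <cassert>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical lock with a list of "wake me when stable" callbacks.
struct LockModel {
  bool stable = false;
  std::vector<std::function<void()>> waiters;
  void add_waiter(std::function<void()> fn) { waiters.push_back(std::move(fn)); }
  void finish_waiters() {  // take the waiters out first, then run them
    auto ws = std::move(waiters);
    waiters.clear();
    for (auto& w : ws) w();
  }
};

struct Request { int retry = 0; bool done = false; };

// If the lock is not stable, park a callback that bumps the retry count and
// re-runs the same handler, then return without doing any work.
void handle_mkdir(Request& req, LockModel& filelock) {
  if (!filelock.stable) {
    filelock.add_waiter([&req, &filelock] {
      ++req.retry;
      handle_mkdir(req, filelock);
    });
    return;
  }
  req.done = true;  // locks held: continue with prepare_new_inode and so on
}
```

The first call only queues the request. When the lock becomes stable and the waiters run, the request completes with retry == 1, mirroring mdr->retry++ in C_MDS_RetryRequest::finish.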

Next, the client replies with a caps message whose op is CEPH_CAP_OP_UPDATE. The MDS handles it in Locker::handle_client_caps.

Locker::handle_client_caps

The code:

```cpp
void Locker::handle_client_caps(const MClientCaps::const_ref &m)
{
  client_t client = m->get_source().num();
  snapid_t follows = m->get_snap_follows(); // follows = 0
  auto op = m->get_op();                    // op = CEPH_CAP_OP_UPDATE
  auto dirty = m->get_dirty();              // dirty = 0
  Session *session = mds->get_session(m);
  ......
  CInode *head_in = mdcache->get_inode(m->get_ino()); // head_in is the CInode of "test"
  Capability *cap = 0;
  cap = head_in->get_client_cap(client);              // this client's cap
  bool need_unpin = false;
  // flushsnap?
  if (cap->get_cap_id() != m->get_cap_id()) { ...... }
  else {
    CInode *in = head_in;
    // head inode, and cap
    MClientCaps::ref ack;
    int caps = m->get_caps();                 // caps = "pAsLsXs"
    cap->confirm_receipt(m->get_seq(), caps); // cap->_issued = "pAsLsXs", cap->_pending = "pAsLsXs"
    // filter wanted based on what we could ever give out (given auth/replica status)
    bool need_flush = m->flags & MClientCaps::FLAG_SYNC;
    int new_wanted = m->get_wanted() & head_in->get_caps_allowed_ever(); // m->get_wanted() = 0
    if (new_wanted != cap->wanted()) {        // cap->wanted() = "AsLsXsFsx"
      ......
      adjust_cap_wanted(cap, new_wanted, m->get_issue_seq()); // set wanted to 0
    }
    if (updated) { ...... }
    else {
      bool did_issue = eval(in, CEPH_CAP_LOCKS); // re-evaluate the cap-related locks of "test"
      ......
    }
    if (need_flush)
      mds->mdlog->flush();
  }
out:
  if (need_unpin)
    head_in->auth_unpin(this);
}
```

After handle_client_caps sets the client cap's _issued and _pending to "pAsLsXs", the eval flow starts and calls eval_any on the filelock, authlock, linklock and xattrlock of "test"'s CInode in turn.

```cpp
bool Locker::eval(CInode *in, int mask, bool caps_imported)
{ // in is the CInode of "test", mask = 2496, caps_imported = false
  bool need_issue = caps_imported; // need_issue = false
  MDSInternalContextBase::vec finishers;
 retry:
  if (mask & CEPH_LOCK_IFILE)  // filelock is in LOCK_SYNC_LOCK, which is not stable
    eval_any(&in->filelock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_IAUTH)  // authlock is in LOCK_SYNC
    eval_any(&in->authlock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_ILINK)  // linklock is in LOCK_SYNC
    eval_any(&in->linklock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_IXATTR) // xattrlock is in LOCK_SYNC
    eval_any(&in->xattrlock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_INEST)
    eval_any(&in->nestlock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_IFLOCK)
    eval_any(&in->flocklock, &need_issue, &finishers, caps_imported);
  if (mask & CEPH_LOCK_IPOLICY)
    eval_any(&in->policylock, &need_issue, &finishers, caps_imported);
  // drop loner?
  ......
  finish_contexts(g_ceph_context, finishers);
  if (need_issue && in->is_head())
    issue_caps(in);
  dout(10) << "eval done" << dendl;
  return need_issue;
}
```
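This is where mask = 2496 comes from. CEPH_CAP_LOCKS combines the four inode lock bits for filelock, authlock, linklock and xattrlock (values from ceph_fs.h), and 64 + 128 + 256 + 2048 = 2496. The helper below is a hypothetical illustration that lists which eval_any() calls in Locker::eval fire for a given mask.

```cpp
#include <cassert>
#include <string>
#include <utility>
#include <vector>

// Inode lock bits from Ceph's ceph_fs.h (those tested in Locker::eval).
constexpr int CEPH_LOCK_IFILE = 64, CEPH_LOCK_IAUTH = 128, CEPH_LOCK_ILINK = 256,
              CEPH_LOCK_INEST = 1024, CEPH_LOCK_IXATTR = 2048,
              CEPH_LOCK_IFLOCK = 4096, CEPH_LOCK_IPOLICY = 16384;

// Names of the locks eval_any() is called on, in Locker::eval's order.
std::vector<std::string> locks_evaluated(int mask) {
  const std::pair<int, const char*> order[] = {
      {CEPH_LOCK_IFILE, "filelock"},  {CEPH_LOCK_IAUTH, "authlock"},
      {CEPH_LOCK_ILINK, "linklock"},  {CEPH_LOCK_IXATTR, "xattrlock"},
      {CEPH_LOCK_INEST, "nestlock"},  {CEPH_LOCK_IFLOCK, "flocklock"},
      {CEPH_LOCK_IPOLICY, "policylock"}};
  std::vector<std::string> out;
  for (const auto& entry : order)
    if (mask & entry.first) out.push_back(entry.second);
  return out;
}
```

locks_evaluated(2496) returns filelock, authlock, linklock and xattrlock, which is why nestlock is not evaluated here.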

Because the filelock is in LOCK_SYNC_LOCK, which is not stable, eval_any goes to eval_gather. The state path is LOCK_SYNC_LOCK --> LOCK_LOCK --> LOCK_LOCK_SYNC --> LOCK_SYNC. During the mkdir's acquire_locks, the lock was moved from LOCK_SYNC to LOCK_SYNC_LOCK; here it is brought back to LOCK_SYNC. The code:

```cpp
void Locker::eval_gather(SimpleLock *lock, bool first, bool *pneed_issue, MDSInternalContextBase::vec *pfinishers)
{ // first = false
  int next = lock->get_next_state(); // next = LOCK_LOCK
  CInode *in = 0;
  bool caps = lock->get_cap_shift(); // cap_shift = 8, so caps = true
  if (lock->get_type() != CEPH_LOCK_DN)
    in = static_cast<CInode *>(lock->get_parent()); // the CInode of "test"
  bool need_issue = false;
  int loner_issued = 0, other_issued = 0, xlocker_issued = 0;
  if (caps && in->is_head()) {
    in->get_caps_issued(&loner_issued, &other_issued, &xlocker_issued, lock->get_cap_shift(), lock->get_cap_mask());
    // yields loner_issued = 0, other_issued = 0, xlocker_issued = 0
    ......
  }
#define IS_TRUE_AND_LT_AUTH(x, auth) (x && ((auth && x <= AUTH) || (!auth && x < AUTH)))
  bool auth = lock->get_parent()->is_auth();
  if (!lock->is_gathering() && // gather_set is empty (no other MDS is involved), so the lock is not gathering
      (IS_TRUE_AND_LT_AUTH(lock->get_sm()->states[next].can_rdlock, auth) || !lock->is_rdlocked()) &&
      (IS_TRUE_AND_LT_AUTH(lock->get_sm()->states[next].can_wrlock, auth) || !lock->is_wrlocked()) &&
      (IS_TRUE_AND_LT_AUTH(lock->get_sm()->states[next].can_xlock, auth) || !lock->is_xlocked()) &&
      (IS_TRUE_AND_LT_AUTH(lock->get_sm()->states[next].can_lease, auth) || !lock->is_leased()) &&
      !(lock->get_parent()->is_auth() && lock->is_flushing()) && // i.e. wait for scatter_writebehind!
      (!caps || ((~lock->gcaps_allowed(CAP_ANY, next) & other_issued) == 0 &&
                 (~lock->gcaps_allowed(CAP_LONER, next) & loner_issued) == 0 &&
                 (~lock->gcaps_allowed(CAP_XLOCKER, next) & xlocker_issued) == 0)) &&
      lock->get_state() != LOCK_SYNC_MIX2 && // these states need an explicit trigger from the auth mds
      lock->get_state() != LOCK_MIX_SYNC2
      ) {
    if (!lock->get_parent()->is_auth()) { // a replica: ask the auth MDS to take the lock
      ......
    } else {
      ......
    }
    lock->set_state(next); // move the lock to LOCK_LOCK
    if (lock->get_parent()->is_auth() && lock->is_stable())
      lock->get_parent()->auth_unpin(lock);
    // drop loner before doing waiters
    if (pfinishers)
      // move the earlier mkdir C_MDS_RetryRequest into pfinishers
      lock->take_waiting(SimpleLock::WAIT_STABLE|SimpleLock::WAIT_WR|SimpleLock::WAIT_RD|SimpleLock::WAIT_XLOCK, *pfinishers);
    ...
    if (caps && in->is_head()) need_issue = true;
    if (lock->get_parent()->is_auth() && lock->is_stable()) try_eval(lock, &need_issue);
  }
  if (need_issue) {
    if (pneed_issue)
      *pneed_issue = true;
    else if (in->is_head())
      issue_caps(in);
  }
}
```
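Two pieces of the condition above can be isolated: the IS_TRUE_AND_LT_AUTH test on state-table entries, and the cap clause requiring that no client holds a cap the next state forbids. This is a hedged sketch. ANY = 1 and AUTH = 2 are assumed from Ceph's locks.h, AllowedCaps and caps_allow_next are hypothetical names, and the masks used below are illustrative, not the real filelock table.

```cpp
#include <cassert>

// Assumed from Ceph's locks.h: ANY = auth or replica, AUTH = auth only.
constexpr int ANY = 1, AUTH = 2;

// Same test as the IS_TRUE_AND_LT_AUTH macro: does table entry x permit the
// operation on this MDS (auth or replica)?
bool true_and_lt_auth(int x, bool auth) {
  return x && ((auth && x <= AUTH) || (!auth && x < AUTH));
}

// Cap masks the next state allows; in Ceph these come from
// lock->gcaps_allowed(CAP_ANY / CAP_LONER / CAP_XLOCKER, next).
struct AllowedCaps { int any, loner, xlocker; };

// Cap clause of eval_gather: nobody may hold a cap the next state forbids.
bool caps_allow_next(const AllowedCaps& next, int other_issued,
                     int loner_issued, int xlocker_issued) {
  return (~next.any & other_issued) == 0 &&
         (~next.loner & loner_issued) == 0 &&
         (~next.xlocker & xlocker_issued) == 0;
}
```

In this walkthrough, get_caps_issued reports 0 for all three classes once the client has dropped Fs, so the cap clause holds and the filelock can advance to LOCK_LOCK.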

eval_gather only moves the lock from LOCK_SYNC_LOCK to LOCK_LOCK; the move to LOCK_SYNC happens in Locker::simple_sync. The code:

```cpp
bool Locker::simple_sync(SimpleLock *lock, bool *need_issue)
{
  CInode *in = 0;
  if (lock->get_cap_shift())
    in = static_cast<CInode *>(lock->get_parent());
  int old_state = lock->get_state(); // old_state = LOCK_LOCK
  if (old_state != LOCK_TSYN) {
    switch (lock->get_state()) {
    case LOCK_LOCK: lock->set_state(LOCK_LOCK_SYNC); break; // move to LOCK_LOCK_SYNC
    ......
    }
    int gather = 0;
    ......
  }
  ......
  lock->set_state(LOCK_SYNC); // move to LOCK_SYNC
  lock->finish_waiters(SimpleLock::WAIT_RD|SimpleLock::WAIT_STABLE); // the waiters were already taken out, so nothing is waiting here
  if (in && in->is_head()) {
    if (need_issue) *need_issue = true;
    ......
  }
  return true;
}
```

The flow:

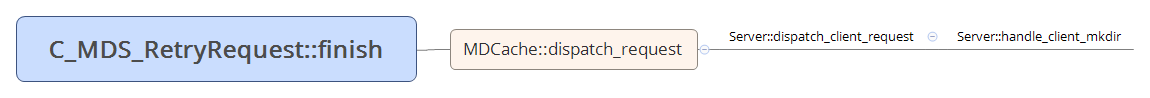

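The other three locks (authlock, linklock and xattrlock) are all in LOCK_SYNC and need no state change, so eval_any does nothing substantive for them. Next, finish_contexts runs the callbacks in finishers, which hold the earlier C_MDS_RetryRequest. In other words, handle_client_mkdir is executed again: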

```cpp
void C_MDS_RetryRequest::finish(int r)
{
  mdr->retry++;
  cache->dispatch_request(mdr);
}
```

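So handle_client_mkdir runs again from the top. Because the request kept some state from the first attempt, some steps do not have to be redone. Server::rdlock_path_xlock_dentry behaves as before and is not analyzed again; next comes Locker::acquire_locks once more.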

Locker::acquire_locks

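As described earlier, Locker::acquire_locks has three steps: consolidating xlocks, wrlocks and rdlocks; auth_pinning the metadata; and taking the locks. The first two were covered above, so we go straight to step 3. Last time, the wrlock on the filelock of "test" failed, so this time we start from that wrlock. After the lock enters the intermediate state LOCK_SYNC_LOCK, CInode::issued_caps_need_gather finds that no other client holds any "F" caps on the "test" inode, so the state is set directly to LOCK_LOCK. The code: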

```cpp
void Locker::simple_lock(SimpleLock *lock, bool *need_issue)
{
  CInode *in = 0;
  if (lock->get_cap_shift()) // the lock type is CEPH_LOCK_IFILE, so cap_shift is 8
    in = static_cast<CInode *>(lock->get_parent());
  int old_state = lock->get_state(); // old_state = LOCK_SYNC
  switch (lock->get_state()) {
  case LOCK_SYNC: lock->set_state(LOCK_SYNC_LOCK); break;
  ......
  }
  int gather = 0;
  if (lock->is_leased()) { ...... }
  if (lock->is_rdlocked()) ......;
  if (in && in->is_head()) {
    if (in->issued_caps_need_gather(lock)) { ... } // false this time
  }
  ...
  if (gather) { ...
  } else {
    lock->set_state(LOCK_LOCK);
    lock->finish_waiters(ScatterLock::WAIT_XLOCK|ScatterLock::WAIT_WR|ScatterLock::WAIT_STABLE);
  }
}
```

Once the filelock of "test"'s CInode is in LOCK_LOCK, the wrlock can be taken, and this lock is done.

Next is the rdlock on the authlock of "test"'s CInode. It is in LOCK_SYNC, which allows an rdlock directly, so no state transition is involved.

Then comes the wrlock on the nestlock of "test"'s CInode. The nestlock is already in LOCK_LOCK, probably from an earlier request, so the wrlock is granted directly. This completes acquire_locks.

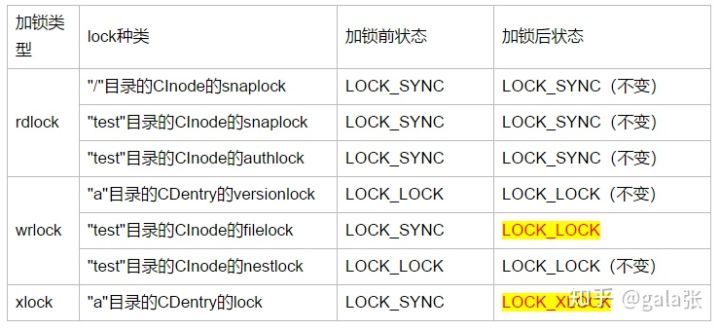

总结:在acquire_lock中对7个lock("a"的CDentry的versionlock和lock, “/”目录的snaplock,"test"的CInode的snaplock、filelock、authlock、nestlock)加锁。锁的状态变化如下图

The next step is creating the CInode of "a" in Server::prepare_new_inode, covered in the next post.
