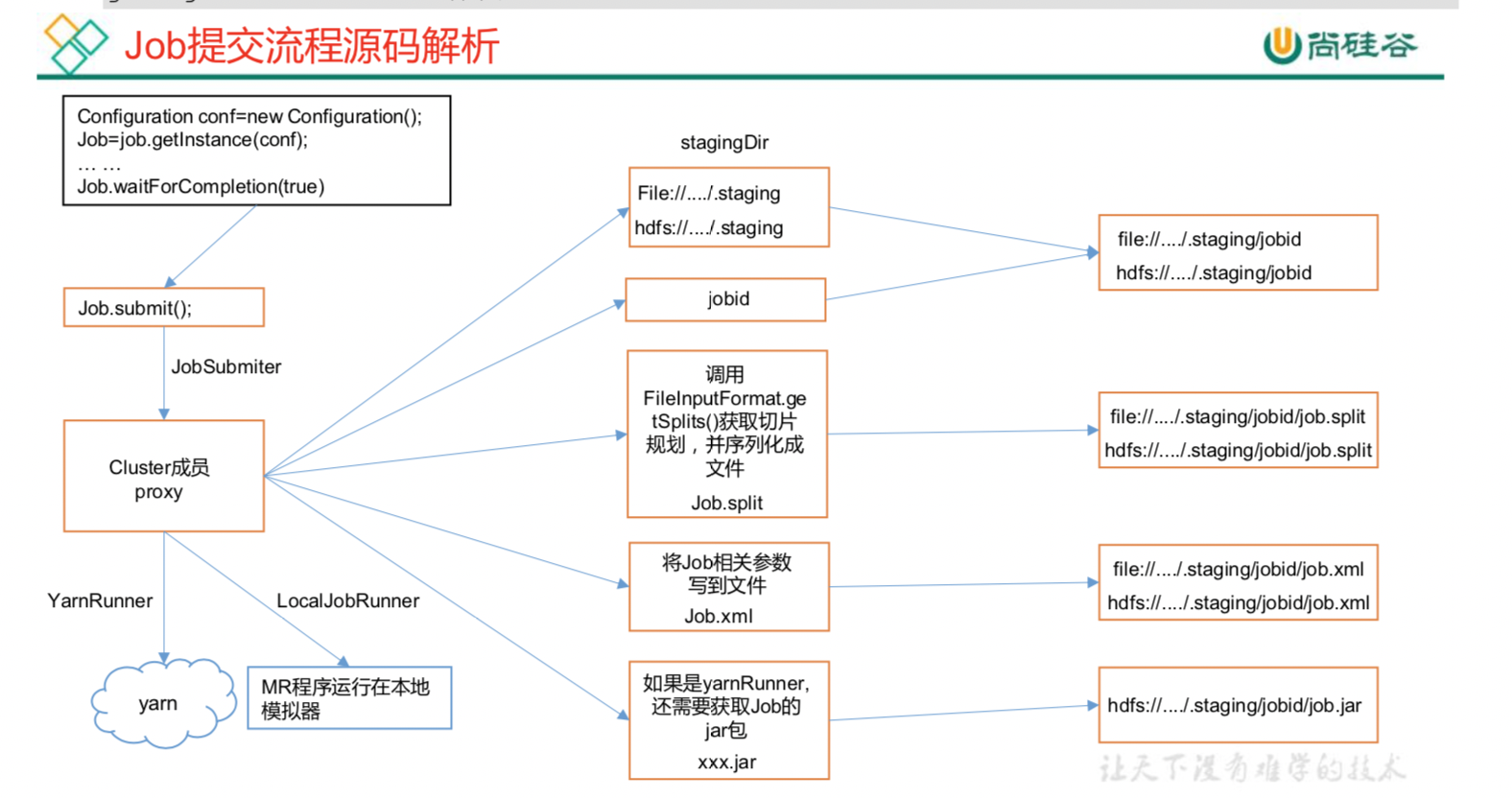

03_MapReduce Framework Internals_3.2 Job Submission Flow (Source Code)


/*

Client-side job submission flow

1. The client runs the main method of the Driver class
     waitForCompletion()   // submits the job, monitors it, and returns true if the job succeeded

2. Submit the job
     submit()

3. Open a connection and create the cluster proxy object (cluster)
     connect()
       // Create the cluster proxy object used to submit the job
       // The configuration decides whether this is the local runtime or a YARN cluster
       return new Cluster(getConfiguration())

4. Submit the job to the chosen cluster

     1. Create the staging directory on that cluster and return its path
        // e.g. file:/tmp/hadoop/mapred/staging/dxm1446706250/.staging
        Path jobStagingArea = JobSubmissionFiles.getStagingDir(cluster, conf);

     2. Create the JobID
        JobID jobId = submitClient.getNewJobID();

     3. Build the job submit path from the JobID
        // e.g. file:/tmp/hadoop/mapred/staging/dxm870750042/.staging/job_local870750042_0001
        Path submitJobDir = new Path(jobStagingArea, jobId.toString());

     4. Upload the configuration files, libjars, job jars, and archives pertaining to the job to the submit path
        // rUploader.uploadResources(job, jobSubmitDir)
        // Uploads whatever was passed via -libjars, -files, and -archives
        copyAndConfigureFiles(job, submitJobDir);

     5. Compute the input splits from the input files, generate the split planning files, and upload them to the staging path
        // e.g. job.split, job.splitmetainfo
        // Returns the number of splits
        int maps = writeSplits(job, submitJobDir);

     6. Upload job.xml to the staging path
        writeConf(conf, submitJobFile);

     7. Submit the job and return its status
        status = submitClient.submitJob

*/

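// 1. Submit the job

// What it does: submits the job, monitors its progress, and returns true if the job succeeded

// Driver-side call (written in Scala; the methods that follow are Hadoop's Java source)

val bool: Boolean = job.waitForCompletion(true)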

public boolean waitForCompletion(boolean verbose

) throws IOException, InterruptedException,

ClassNotFoundException {

if (state == JobState.DEFINE) {

// 2. Submit the job

submit();

}

// Monitor the job's progress

if (verbose) {

monitorAndPrintJob();

} else {

// get the completion poll interval from the client.

int completionPollIntervalMillis =

Job.getCompletionPollInterval(cluster.getConf());

while (!isComplete()) {

try {

Thread.sleep(completionPollIntervalMillis);

} catch (InterruptedException ie) {

}

}

}

// The job has finished: return true only if it succeeded

return isSuccessful();

}
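The non-verbose branch of `waitForCompletion` is an ordinary poll-and-sleep loop, and its return value comes from `isSuccessful()`, not from the mere fact that the job finished. The following is a minimal sketch of that pattern with no Hadoop dependency. `PollingSketch`, `FakeJob`, the `polls` counter, and the 10 ms interval are hypothetical names and values invented for illustration; they stand in for `Job`, the cluster-side job state, and `Job.getCompletionPollInterval(...)`.

```java
// Sketch of the non-verbose branch of waitForCompletion(): poll, sleep, repeat.
// FakeJob is a hypothetical stand-in, not a Hadoop class.
class PollingSketch {

    static class FakeJob {
        private int ticksUntilDone;      // polls that still report "not complete"
        private final boolean succeeds;  // outcome reported once the job is complete
        int polls = 0;                   // how many times isComplete() was called

        FakeJob(int ticksUntilDone, boolean succeeds) {
            this.ticksUntilDone = ticksUntilDone;
            this.succeeds = succeeds;
        }

        boolean isComplete() {
            polls++;
            return ticksUntilDone-- <= 0;
        }

        boolean isSuccessful() {
            return succeeds;
        }
    }

    static boolean waitForCompletion(FakeJob job, long pollIntervalMillis) {
        while (!job.isComplete()) {
            try {
                Thread.sleep(pollIntervalMillis);
            } catch (InterruptedException ie) {
                // Ignored here, mirroring the empty catch block in the listing.
            }
        }
        // Completion and success are different things: a failed job is also complete.
        return job.isSuccessful();
    }

    public static void main(String[] args) {
        System.out.println(waitForCompletion(new FakeJob(3, true), 10));   // prints true
        System.out.println(waitForCompletion(new FakeJob(0, false), 10));  // prints false
    }
}
```

The second call shows why the Driver should check the returned boolean: the loop exits as soon as the job is complete, whether or not it succeeded.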

// 2. submit()

// What it does: creates the cluster proxy object, submits the job to that cluster, and sets the job state to RUNNING

public void submit()

throws IOException, InterruptedException, ClassNotFoundException {

ensureState(JobState.DEFINE);

setUseNewAPI();

// 3. Open a connection and create the cluster proxy object (cluster)

connect();

final JobSubmitter submitter =

getJobSubmitter(cluster.getFileSystem(), cluster.getClient());

status = ugi.doAs(new PrivilegedExceptionAction<JobStatus>() {

public JobStatus run() throws IOException, InterruptedException,

ClassNotFoundException {

// 4. Submit the job to the chosen cluster

return submitter.submitJobInternal(Job.this, cluster);

}

});

state = JobState.RUNNING;

LOG.info("The url to track the job: " + getTrackingURL());

}
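`submit()` starts with `ensureState(JobState.DEFINE)` and ends with `state = JobState.RUNNING`, and `waitForCompletion` only calls it while the state is still `DEFINE`. Taken together, that means a job object is submitted at most once. Here is a small sketch of that guard in isolation. `StateGuardSketch`, `FakeJob`, and the `submissions` counter are hypothetical; the exception type and message are an assumption about what `ensureState` does, chosen for illustration.

```java
// Sketch of the DEFINE -> RUNNING guard around submit().
// All names here are hypothetical stand-ins, not Hadoop classes.
class StateGuardSketch {

    enum JobState { DEFINE, RUNNING }

    static class FakeJob {
        JobState state = JobState.DEFINE;
        int submissions = 0;  // counts how often the "real" submission work ran

        void ensureState(JobState expected) {
            // Assumption: a state mismatch is reported as an IllegalStateException.
            if (state != expected) {
                throw new IllegalStateException(
                    "Job in state " + state + " instead of " + expected);
            }
        }

        void submit() {
            ensureState(JobState.DEFINE);
            submissions++;              // stands in for connect() + submitJobInternal(...)
            state = JobState.RUNNING;
        }

        void waitForCompletion() {
            if (state == JobState.DEFINE) {
                submit();
            }
            // Monitoring would follow here.
        }
    }

    public static void main(String[] args) {
        FakeJob job = new FakeJob();
        job.waitForCompletion();
        job.waitForCompletion();              // second call skips submit()
        System.out.println(job.submissions);  // prints 1
        try {
            job.submit();                     // direct resubmission is rejected
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```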

// 3. connect()

// What it does: creates the cluster proxy object from the configuration

private synchronized void connect()

throws IOException, InterruptedException, ClassNotFoundException {

if (cluster == null) {

cluster =

ugi.doAs(new PrivilegedExceptionAction<Cluster>() {

public Cluster run()

throws IOException, InterruptedException,

ClassNotFoundException {

// Create the cluster proxy object used to submit the job

// The configuration decides whether this is the local runtime or a YARN cluster

return new Cluster(getConfiguration());

}

});

}

}
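The choice between the local runtime and YARN is driven by configuration. As far as I understand the Hadoop source, `Cluster` asks a list of client providers in turn, and each provider returns a client only when `mapreduce.framework.name` matches its framework. The sketch below models that idea with plain Java collections. `ClusterSelectionSketch`, `ClientProvider`, and `selectClient` are hypothetical names, the configuration is a simple `Map`, and the returned strings are just labels for the client that would be created.

```java
import java.io.IOException;
import java.util.List;
import java.util.Map;

// Sketch of choosing a job client from the configuration.
// This models the idea only; it is not the Hadoop Cluster implementation.
class ClusterSelectionSketch {

    static final String FRAMEWORK_NAME = "mapreduce.framework.name";

    // Hypothetical provider contract: return a client label, or null if not applicable.
    interface ClientProvider {
        String create(Map<String, String> conf);
    }

    // Assumption: the local provider treats a missing setting as "local".
    static final ClientProvider LOCAL =
        conf -> "local".equals(conf.getOrDefault(FRAMEWORK_NAME, "local"))
            ? "LocalJobRunner" : null;

    static final ClientProvider YARN =
        conf -> "yarn".equals(conf.get(FRAMEWORK_NAME)) ? "YARNRunner" : null;

    static final List<ClientProvider> PROVIDERS = List.of(LOCAL, YARN);

    static String selectClient(Map<String, String> conf) throws IOException {
        for (ClientProvider provider : PROVIDERS) {
            String client = provider.create(conf);
            if (client != null) {
                return client;  // first provider that accepts the configuration wins
            }
        }
        throw new IOException("Cannot initialize cluster for "
            + FRAMEWORK_NAME + "=" + conf.get(FRAMEWORK_NAME));
    }

    public static void main(String[] args) throws IOException {
        System.out.println(selectClient(Map.of()));                        // prints LocalJobRunner
        System.out.println(selectClient(Map.of(FRAMEWORK_NAME, "yarn")));  // prints YARNRunner
    }
}
```

This also explains the example paths in the listing: a job ID such as `job_local870750042_0001` and a `file:/` staging path are what you would expect when the job runs with the local runtime.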

// 4. Submit the job to the chosen cluster

// What it does: internal method that submits the job to the given cluster

JobStatus submitJobInternal(Job job, Cluster cluster)

throws ClassNotFoundException, InterruptedException, IOException {

//validate the jobs output specs

checkSpecs(job);

Configuration conf = job.getConfiguration();

addMRFrameworkToDistributedCache(conf);

// 1. Create the staging directory on the chosen cluster and return its path

// e.g. file:/tmp/hadoop/mapred/staging/dxm1446706250/.staging

Path jobStagingArea = JobSubmissionFiles.getStagingDir(cluster, conf);

//configure the command line options correctly on the submitting dfs

InetAddress ip = InetAddress.getLocalHost();

if (ip != null) {

submitHostAddress = ip.getHostAddress();

submitHostName = ip.getHostName();

conf.set(MRJobConfig.JOB_SUBMITHOST,submitHostName);

conf.set(MRJobConfig.JOB_SUBMITHOSTADDR,submitHostAddress);

}

// 2. Create the JobID

JobID jobId = submitClient.getNewJobID();

job.setJobID(jobId);

// 3. Build the job submit path from the JobID

// e.g. file:/tmp/hadoop/mapred/staging/dxm870750042/.staging/job_local870750042_0001

Path submitJobDir = new Path(jobStagingArea, jobId.toString());

JobStatus status = null;

try {

conf.set(MRJobConfig.USER_NAME,

UserGroupInformation.getCurrentUser().getShortUserName());

conf.set("hadoop.http.filter.initializers",

"org.apache.hadoop.yarn.server.webproxy.amfilter.AmFilterInitializer");

conf.set(MRJobConfig.MAPREDUCE_JOB_DIR, submitJobDir.toString());

LOG.debug("Configuring job " + jobId + " with " + submitJobDir

+ " as the submit dir");

// get delegation token for the dir

TokenCache.obtainTokensForNamenodes(job.getCredentials(),

new Path[] { submitJobDir }, conf);

populateTokenCache(conf, job.getCredentials());

// generate a secret to authenticate shuffle transfers

if (TokenCache.getShuffleSecretKey(job.getCredentials()) == null) {

KeyGenerator keyGen;

try {

keyGen = KeyGenerator.getInstance(SHUFFLE_KEYGEN_ALGORITHM);

keyGen.init(SHUFFLE_KEY_LENGTH);

} catch (NoSuchAlgorithmException e) {

throw new IOException("Error generating shuffle secret key", e);

}

SecretKey shuffleKey = keyGen.generateKey();

TokenCache.setShuffleSecretKey(shuffleKey.getEncoded(),

job.getCredentials());

}

if (CryptoUtils.isEncryptedSpillEnabled(conf)) {

conf.setInt(MRJobConfig.MR_AM_MAX_ATTEMPTS, 1);

LOG.warn("Max job attempts set to 1 since encrypted intermediate" +

"data spill is enabled");

}

// 4. Upload the configuration files, libjars, job jars, and archives pertaining to the job to the submit path

// Delegates to rUploader.uploadResources(job, jobSubmitDir)

// Uploads whatever was passed via -libjars, -files, and -archives

copyAndConfigureFiles(job, submitJobDir);

Path submitJobFile = JobSubmissionFiles.getJobConfPath(submitJobDir);

// Create the splits for the job

LOG.debug("Creating splits at " + jtFs.makeQualified(submitJobDir));

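// 5. Compute the input splits from the input files, generate the split planning files, and upload them to the staging path

// e.g. job.split, job.splitmetainfo

// Returns the number of splits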

int maps = writeSplits(job, submitJobDir);

conf.setInt(MRJobConfig.NUM_MAPS, maps);

LOG.info("number of splits:" + maps);

int maxMaps = conf.getInt(MRJobConfig.JOB_MAX_MAP,

MRJobConfig.DEFAULT_JOB_MAX_MAP);

if (maxMaps >= 0 && maxMaps < maps) {

throw new IllegalArgumentException("The number of map tasks " + maps +

" exceeded limit " + maxMaps);

}

// write "queue admins of the queue to which job is being submitted"

// to job file.

String queue = conf.get(MRJobConfig.QUEUE_NAME,

JobConf.DEFAULT_QUEUE_NAME);

AccessControlList acl = submitClient.getQueueAdmins(queue);

conf.set(toFullPropertyName(queue,

QueueACL.ADMINISTER_JOBS.getAclName()), acl.getAclString());

// removing jobtoken referrals before copying the jobconf to HDFS

// as the tasks don't need this setting, actually they may break

// because of it if present as the referral will point to a

// different job.

TokenCache.cleanUpTokenReferral(conf);

if (conf.getBoolean(

MRJobConfig.JOB_TOKEN_TRACKING_IDS_ENABLED,

MRJobConfig.DEFAULT_JOB_TOKEN_TRACKING_IDS_ENABLED)) {

// Add HDFS tracking ids

ArrayList<String> trackingIds = new ArrayList<String>();

for (Token<? extends TokenIdentifier> t :

job.getCredentials().getAllTokens()) {

trackingIds.add(t.decodeIdentifier().getTrackingId());

}

conf.setStrings(MRJobConfig.JOB_TOKEN_TRACKING_IDS,

trackingIds.toArray(new String[trackingIds.size()]));

}

// Set reservation info if it exists

ReservationId reservationId = job.getReservationId();

if (reservationId != null) {

conf.set(MRJobConfig.RESERVATION_ID, reservationId.toString());

}

// Write job file to submit dir

// 6. Upload job.xml to the staging path

writeConf(conf, submitJobFile);

//

// Now, actually submit the job (using the submit name)

//

printTokens(jobId, job.getCredentials());

// 7. Submit the job and return its status

status = submitClient.submitJob(

jobId, submitJobDir.toString(), job.getCredentials());

if (status != null) {

return status;

} else {

throw new IOException("Could not launch job");

}

} finally {

if (status == null) {

LOG.info("Cleaning up the staging area " + submitJobDir);

if (jtFs != null && submitJobDir != null)

jtFs.delete(submitJobDir, true);

}

}

}
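The `try`/`finally` in `submitJobInternal` is worth a closer look: everything is written into the per-job submit directory first, and the `finally` block deletes that directory whenever `status` is still `null`, which covers both an exception along the way and a cluster that returns no status. The following sketch reproduces that shape on the local file system with `java.nio.file`. `StagingCleanupSketch`, its `submit` method, the `clusterAccepts` flag, and the placeholder file contents are all hypothetical; the real method works against a Hadoop `FileSystem` and a `JobStatus`.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Comparator;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

// Sketch of "stage files, submit, clean up on failure" using local directories.
// Not Hadoop code: names and file contents are placeholders.
class StagingCleanupSketch {

    static String submit(Path stagingArea, String jobId, boolean clusterAccepts)
            throws IOException {
        Path submitJobDir = stagingArea.resolve(jobId);  // staging dir + job id
        String status = null;
        try {
            Files.createDirectories(submitJobDir);
            Files.writeString(submitJobDir.resolve("job.split"), "placeholder");
            Files.writeString(submitJobDir.resolve("job.xml"), "<configuration/>");
            // Stands in for submitClient.submitJob(...), which may yield no status.
            status = clusterAccepts ? "RUNNING" : null;
            if (status != null) {
                return status;
            }
            throw new IOException("Could not launch job");
        } finally {
            // Runs on every exit path; only a missing status triggers cleanup.
            if (status == null) {
                deleteRecursively(submitJobDir);
            }
        }
    }

    static void deleteRecursively(Path dir) throws IOException {
        if (!Files.exists(dir)) {
            return;
        }
        List<Path> paths;
        try (Stream<Path> walk = Files.walk(dir)) {
            // Reverse order puts children before their parent directory.
            paths = walk.sorted(Comparator.reverseOrder()).collect(Collectors.toList());
        }
        for (Path p : paths) {
            Files.delete(p);
        }
    }

    public static void main(String[] args) throws IOException {
        Path staging = Files.createTempDirectory("staging");
        System.out.println(submit(staging, "job_local_0001", true));
        try {
            submit(staging, "job_local_0002", false);
        } catch (IOException e) {
            System.out.println(e.getMessage() + ", submit dir exists: "
                + Files.exists(staging.resolve("job_local_0002")));
        }
    }
}
```

A successful submission leaves `job.split` and `job.xml` in place, since the running job still needs them; a failed one leaves nothing behind in the staging area.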
