Class 9 Homework: the Kaldi aishell Example

Getting the Data

#!/usr/bin/env bash

# Copyright 2017 Beijing Shell Shell Tech. Co. Ltd. (Authors: Hui Bu)

# 2017 Jiayu Du

# 2017 Xingyu Na

# 2017 Bengu Wu

# 2017 Hao Zheng

# Apache 2.0

# This is a shell script, but it's recommended that you run the commands one by

# one by copying and pasting into the shell.

# Caution: some of the graph creation steps use quite a bit of memory, so you

# should run this on a machine that has sufficient memory.

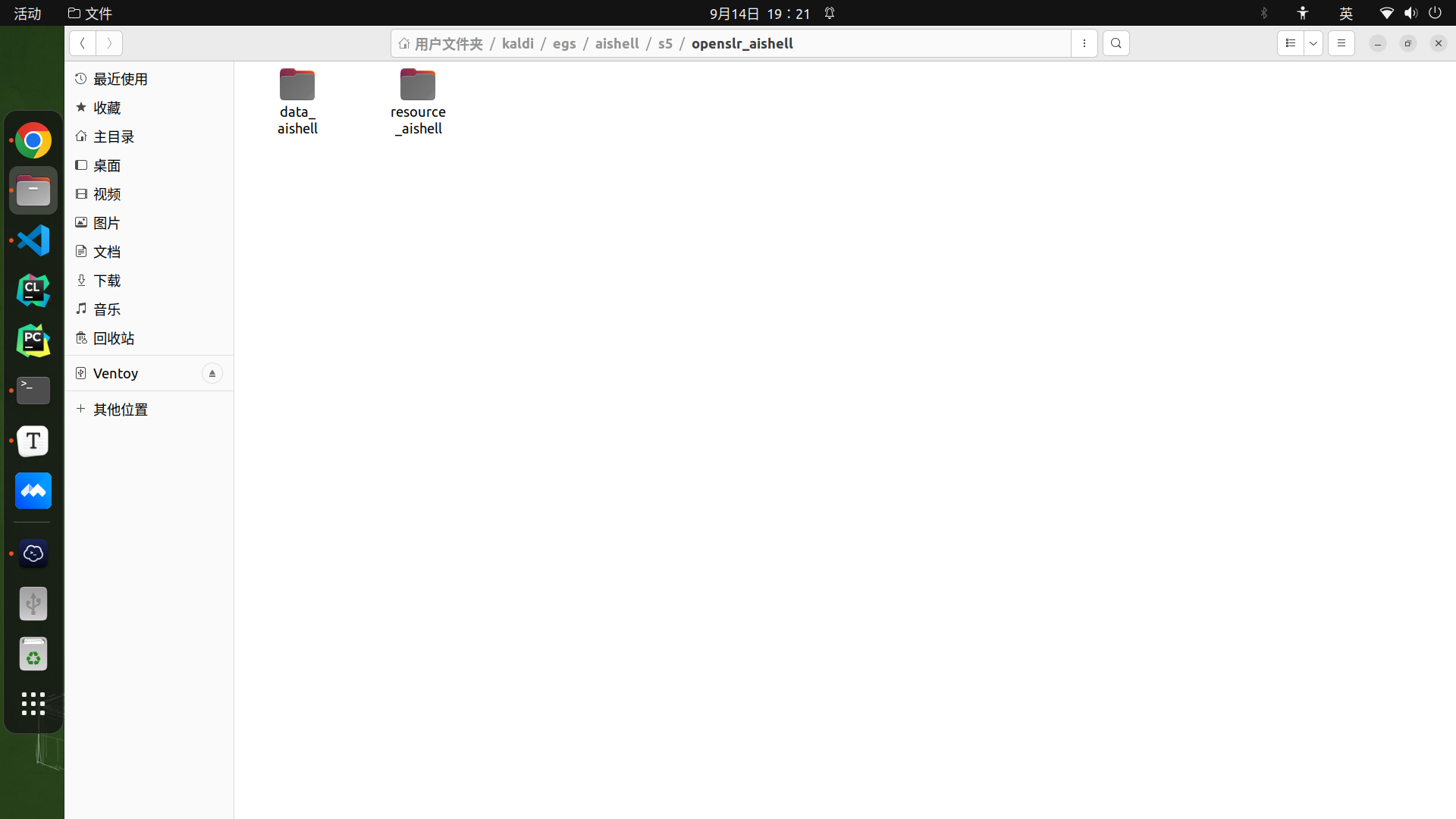

data=/home/baixf/kaldi/egs/aishell/s5/openslr_aishell

data_url=www.openslr.org/resources/33

. ./cmd.sh

## Download and extract the 178-hour aishell corpus (audio and lexicon)

#local/download_and_untar.sh $data $data_url data_aishell || exit 1;

#local/download_and_untar.sh $data $data_url resource_aishell || exit 1;

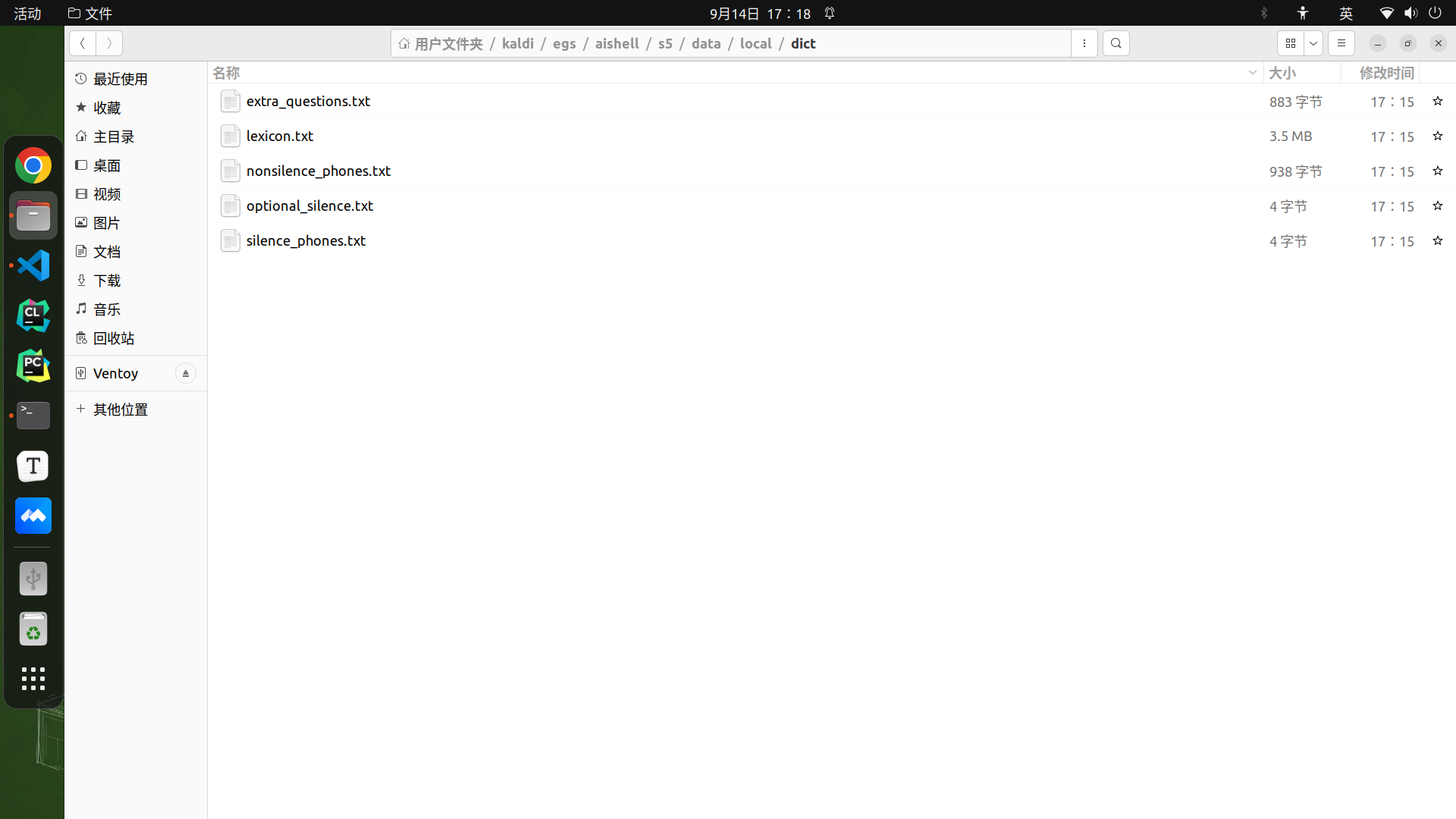

Preparing the Dictionary

# Prepare the dictionary

# Lexicon Preparation,

local/aishell_prepare_dict.sh $data/resource_aishell || exit 1;

local/aishell_prepare_dict.sh: AISHELL dict preparation succeeded

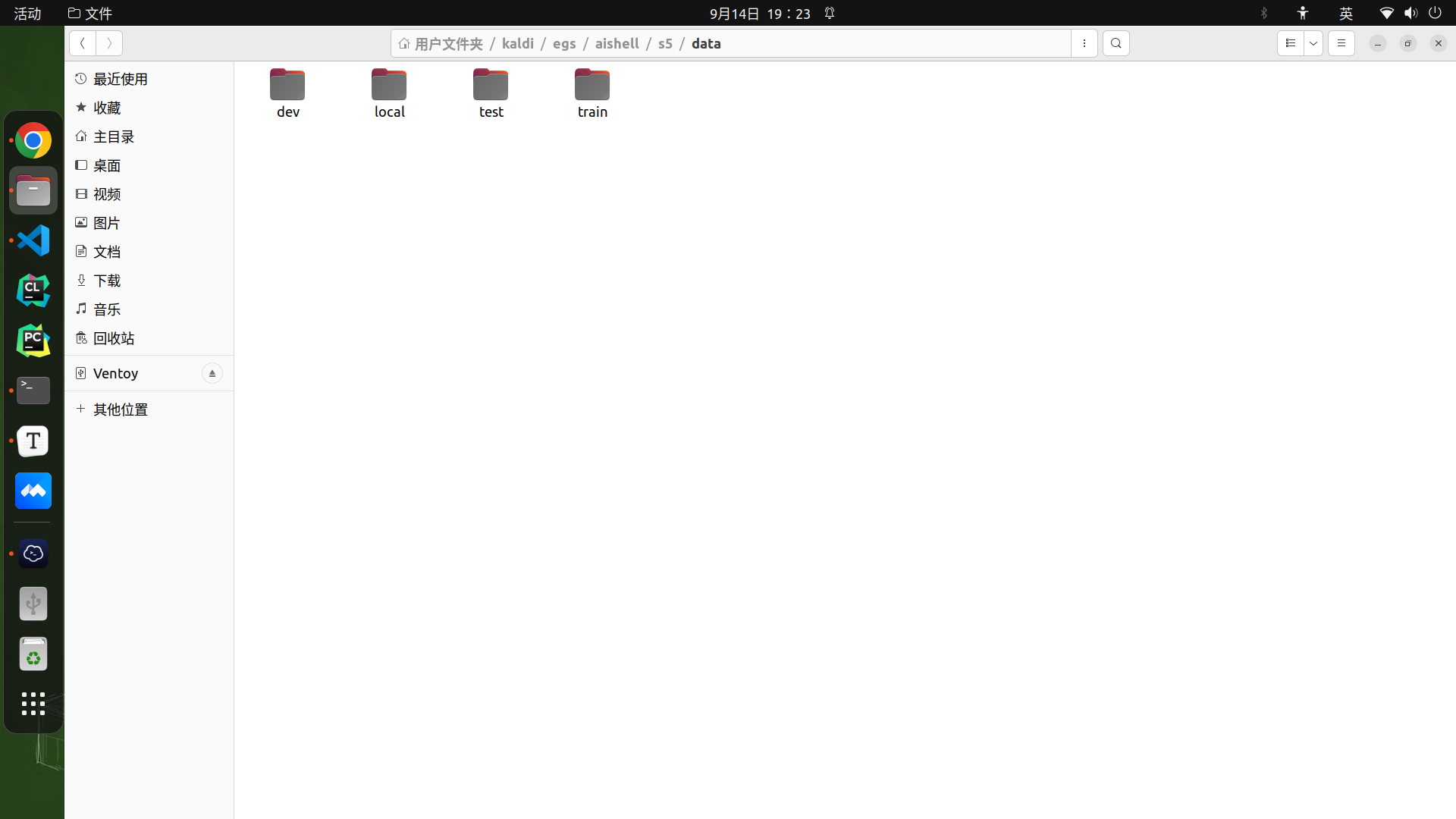

Preparing the Data

# Prepare the data and split it into test, dev, and train sets.

# Data Preparation,

local/aishell_data_prep.sh $data/data_aishell/wav $data/data_aishell/transcript || exit 1;

Preparing data/local/train transcriptions

Preparing data/local/dev transcriptions

Preparing data/local/test transcriptions

local/aishell_data_prep.sh: AISHELL data preparation succeeded

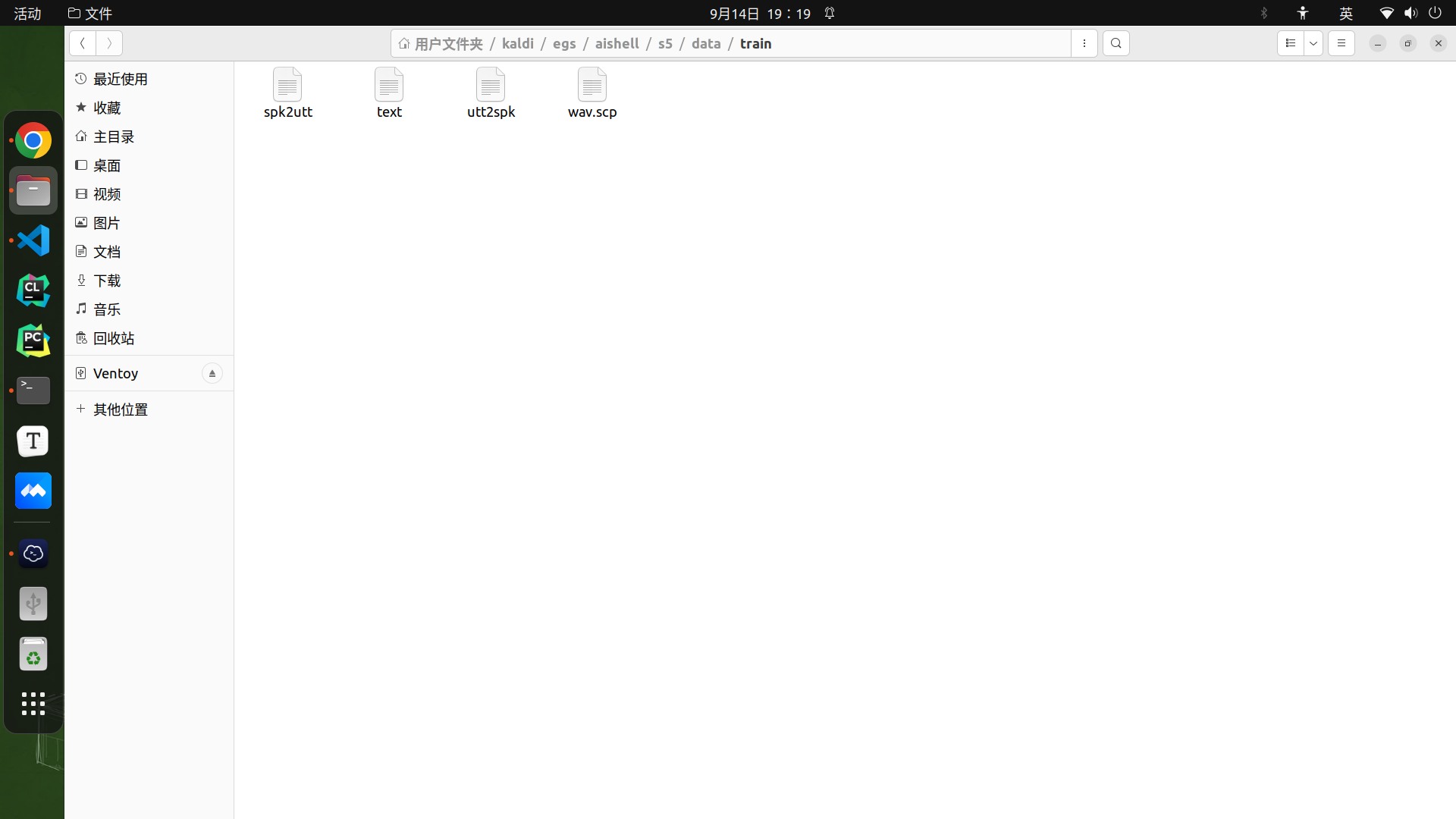

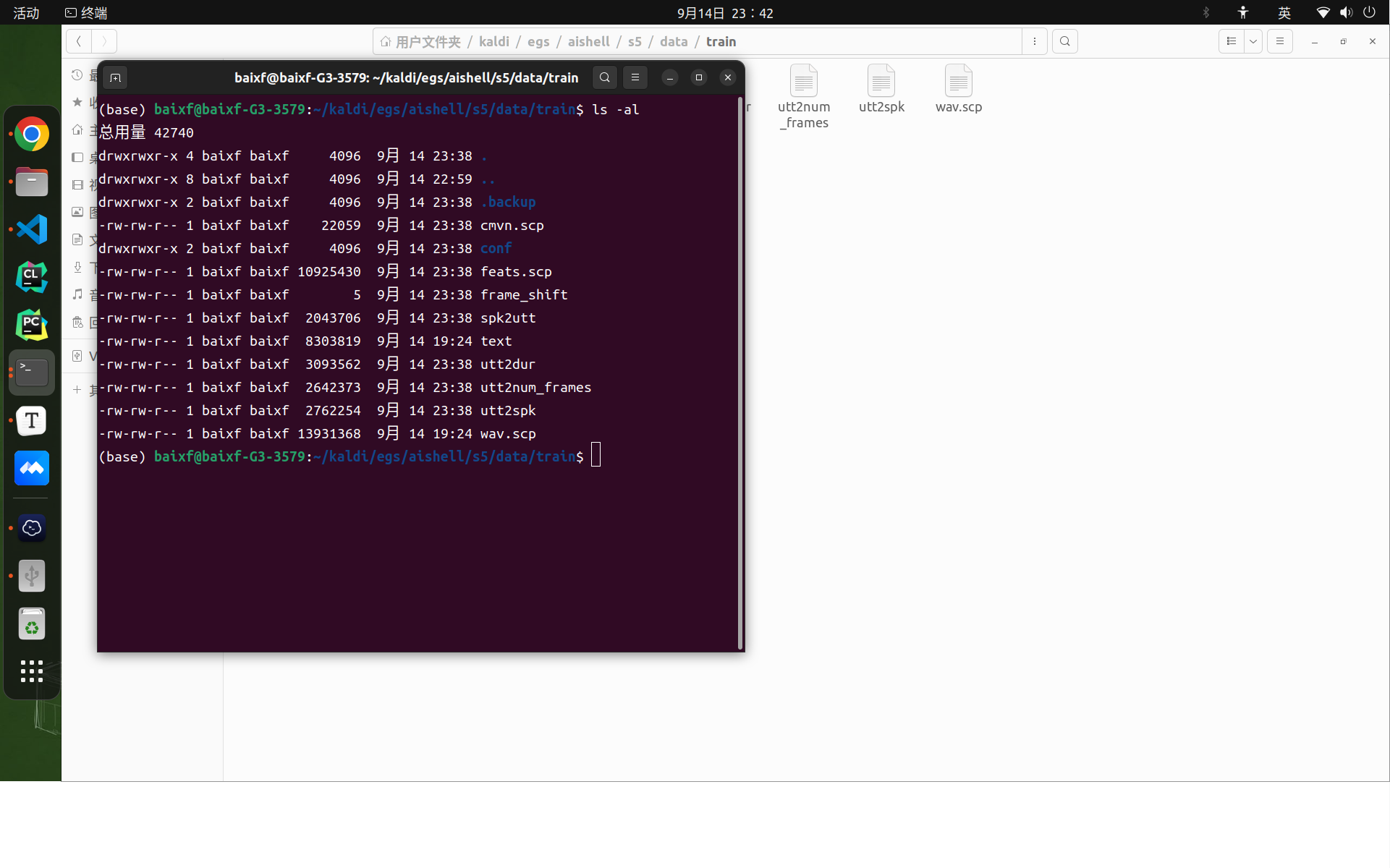

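The resulting data mappings are stored under data/{train,dev,test}. The files are:

- spk2utt maps each speaker ID to the IDs of that speaker's utterances

- text maps each utterance ID to its transcript

- utt2spk maps each utterance ID to its speaker ID

- wav.scp maps each utterance ID to the path of the original audio file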

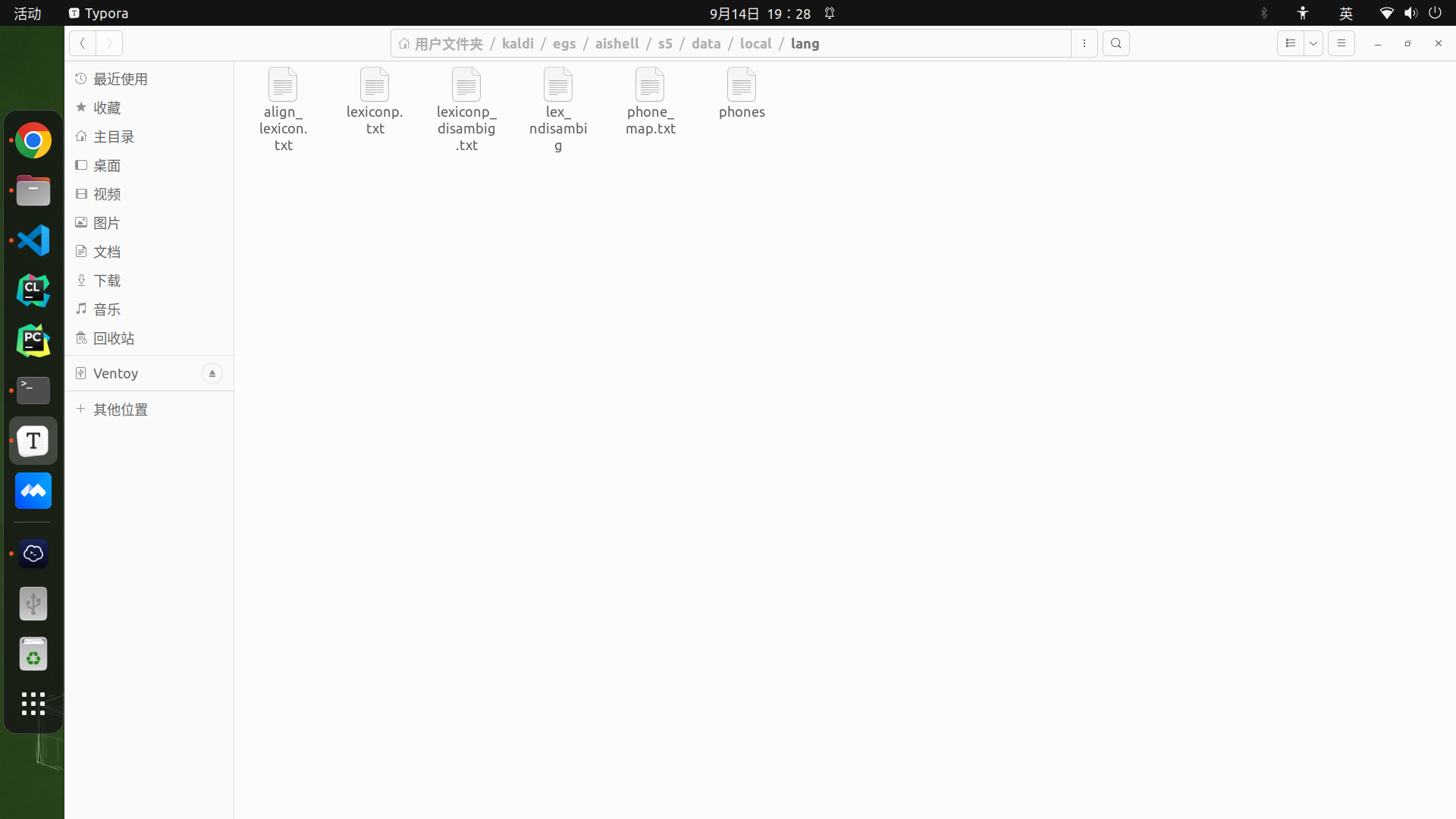

Preparing the Language Files

# Prepare the dictionary and language files and generate the corresponding data mappings

# Phone Sets, questions, L compilation

utils/prepare_lang.sh --position-dependent-phones false data/local/dict \

"<SPOKEN_NOISE>" data/local/lang data/lang || exit 1;

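utils/prepare_lang.sh processes data/local/dict and produces data/lang. Its main purpose is to build L.fst, the pronunciation lexicon (phonetic dictionary) model, which takes phone symbols as input and produces word symbols as output; FST stands for finite-state transducer. The option --position-dependent-phones controls whether position-dependent phones are used, that is, whether each phone gets a suffix according to its position in the word: "_B" (beginning), "_E" (end), "_I" (internal), or "_S" (the phone forms a word by itself). This recipe passes false, so no suffixes are added here. The argument "<SPOKEN_NOISE>" comes from lexicon.txt; in all later processing, every out-of-vocabulary (OOV) word is replaced by it.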
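
To make the position suffixes concrete, here is a minimal sketch of what position-dependent phones would look like if the option were set to true. The lexicon entries and the function name are assumptions made for illustration; the real prepare_lang.sh does considerably more than this.

```bash
#!/usr/bin/env bash
# Sketch: tag each phone of a lexicon entry with its position in the word.

# Read "word phone1 phone2 ..." lines on stdin; print them with position suffixes.
add_position_suffixes() {
  awk '{
    out = $1
    n = NF - 1                        # number of phones in this pronunciation
    for (i = 2; i <= NF; i++) {
      if (n == 1)       tag = "_S"    # the phone is the whole word
      else if (i == 2)  tag = "_B"    # word-initial phone
      else if (i == NF) tag = "_E"    # word-final phone
      else              tag = "_I"    # word-internal phone
      out = out " " $i tag
    }
    print out
  }'
}

# Hypothetical lexicon entries, not taken from the aishell lexicon.
printf '%s\n' 'hello h e l o' 'a a' | add_position_suffixes
```

With suffixes the phone inventory grows by up to a factor of four, which is one reason a recipe may choose to turn the option off.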

Checking data/local/dict/silence_phones.txt ...

--> reading data/local/dict/silence_phones.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/silence_phones.txt is OK

Checking data/local/dict/optional_silence.txt ...

--> reading data/local/dict/optional_silence.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/optional_silence.txt is OK

Checking data/local/dict/nonsilence_phones.txt ...

--> reading data/local/dict/nonsilence_phones.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/nonsilence_phones.txt is OK

Checking disjoint: silence_phones.txt, nonsilence_phones.txt

--> disjoint property is OK.

Checking data/local/dict/lexicon.txt

--> reading data/local/dict/lexicon.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/lexicon.txt is OK

Checking data/local/dict/extra_questions.txt ...

--> reading data/local/dict/extra_questions.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/extra_questions.txt is OK

--> SUCCESS [validating dictionary directory data/local/dict]

**Creating data/local/dict/lexiconp.txt from data/local/dict/lexicon.txt

fstaddselfloops data/lang/phones/wdisambig_phones.int data/lang/phones/wdisambig_words.int

prepare_lang.sh: validating output directory

utils/validate_lang.pl data/lang

Checking existence of separator file

separator file data/lang/subword_separator.txt is empty or does not exist, deal in word case.

Checking data/lang/phones.txt ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/lang/phones.txt is OK

Checking words.txt: #0 ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/lang/words.txt is OK

Checking disjoint: silence.txt, nonsilence.txt, disambig.txt ...

--> silence.txt and nonsilence.txt are disjoint

--> silence.txt and disambig.txt are disjoint

--> disambig.txt and nonsilence.txt are disjoint

--> disjoint property is OK

Checking sumation: silence.txt, nonsilence.txt, disambig.txt ...

--> found no unexplainable phones in phones.txt

Checking data/lang/phones/context_indep.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.int corresponds to data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.csl corresponds to data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.{txt, int, csl} are OK

Checking data/lang/phones/nonsilence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 216 entry/entries in data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.int corresponds to data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.csl corresponds to data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.{txt, int, csl} are OK

Checking data/lang/phones/silence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/silence.txt

--> data/lang/phones/silence.int corresponds to data/lang/phones/silence.txt

--> data/lang/phones/silence.csl corresponds to data/lang/phones/silence.txt

--> data/lang/phones/silence.{txt, int, csl} are OK

Checking data/lang/phones/optional_silence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.int corresponds to data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.csl corresponds to data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.{txt, int, csl} are OK

Checking data/lang/phones/disambig.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 105 entry/entries in data/lang/phones/disambig.txt

--> data/lang/phones/disambig.int corresponds to data/lang/phones/disambig.txt

--> data/lang/phones/disambig.csl corresponds to data/lang/phones/disambig.txt

--> data/lang/phones/disambig.{txt, int, csl} are OK

Checking data/lang/phones/roots.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 67 entry/entries in data/lang/phones/roots.txt

--> data/lang/phones/roots.int corresponds to data/lang/phones/roots.txt

--> data/lang/phones/roots.{txt, int} are OK

Checking data/lang/phones/sets.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 67 entry/entries in data/lang/phones/sets.txt

--> data/lang/phones/sets.int corresponds to data/lang/phones/sets.txt

--> data/lang/phones/sets.{txt, int} are OK

Checking data/lang/phones/extra_questions.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 7 entry/entries in data/lang/phones/extra_questions.txt

--> data/lang/phones/extra_questions.int corresponds to data/lang/phones/extra_questions.txt

--> data/lang/phones/extra_questions.{txt, int} are OK

Checking optional_silence.txt ...

--> reading data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.txt is OK

Checking disambiguation symbols: #0 and #1

--> data/lang/phones/disambig.txt has "#0" and "#1"

--> data/lang/phones/disambig.txt is OK

Checking topo ...

Checking word-level disambiguation symbols...

--> data/lang/phones/wdisambig.txt exists (newer prepare_lang.sh)

Checking data/lang/oov.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/oov.txt

--> data/lang/oov.int corresponds to data/lang/oov.txt

--> data/lang/oov.{txt, int} are OK

--> data/lang/L.fst is olabel sorted

--> data/lang/L_disambig.fst is olabel sorted

--> SUCCESS [validating lang directory data/lang]

Files generated in lang:

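- phones.txt: maps every phone one-to-one to a natural number, the phone ID. It also introduces "<eps>" (epsilon) and the disambiguation symbols "#n" (n a natural number) to make FST processing easier. It is a symbol-table file in the OpenFst format; normally only the scripts utils/int2sym.pl and utils/sym2int.pl and the OpenFst programs fstcompile and fstprint read it.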

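- words.txt: maps every word one-to-one to a natural number, the word ID. It also introduces "<eps>" (epsilon), the disambiguation symbol "#0", "<s>" (sentence start), and "</s>" (sentence end) to make FST processing easier. It is a symbol-table file in the OpenFst format; normally only the scripts utils/int2sym.pl and utils/sym2int.pl and the OpenFst programs fstcompile and fstprint read it.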

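- oov.txt, oov.int: the stand-in for out-of-vocabulary words (here "<SPOKEN_NOISE>") and its ID in words.txt. During training, every word outside the vocabulary is mapped to this word. The Kaldi documentation calls it "<UNK>" (UNK for unknown); the name itself is not special, and any other word would do. What matters is that its pronunciation consists of a single phone designated as the "garbage phone", which gets aligned with all kinds of spoken noise. In the setup that documentation describes, the phone is called SPN, short for "spoken noise".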

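- topo: the topology of each phone's HMM. As mentioned in Chapter 2, a phone (or triphone) is modeled as an HMM; this file sets the number of HMM states and the transition probabilities for each phone and is used to initialize the monophone GMM-HMM. It can be edited as needed (and checked with utils/validate_lang.pl). In this experiment the silence phone uses 5 states and the other phones use 3.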

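- L.fst, L_disambig.fst: the pronunciation lexicon in FST form, with phones as input and words as output. The two differ in that the latter handles disambiguation: it contains the symbols introduced for that purpose, such as #1 and #2, as well as #0, which is introduced for the self-loop.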

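- phones/: an extension of dict/. All files inside can be opened as text, and files with the same name and the suffixes txt/int/csl are conversions of one another. context_indep.txt lists the phones for which context-independent models are built. wdisambig.txt, wdisambig_phones.int, and wdisambig_words.int record the disambiguation symbol introduced in words.txt (#0) and its ID in phones.txt and words.txt, respectively. roots.txt defines whether the states of the phones on one line share a decision-tree root and whether they are split; the corresponding phone sets are stored in sets.txt. extra_questions.txt holds questions beyond the automatically generated question set. optional_silence.txt contains a single phone that may appear between words when needed. disambig.txt lists the disambiguation symbols (see Disambiguation symbols). silence.txt and nonsilence.txt list the silence phones and the non-silence phones, respectively.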
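
As a rough illustration of how a symbol table such as words.txt and the OOV entry work together, the sketch below maps words to integer IDs and falls back to the OOV word for anything missing from the table. The table contents are invented and the function is a simplified imitation written for this note; it is not utils/sym2int.pl.

```bash
#!/usr/bin/env bash
# Sketch: symbol-to-integer mapping with an OOV fallback (table contents are invented).

# Usage: sym2int TABLE OOV_SYMBOL < text
# TABLE holds "symbol id" per line; symbols missing from it are replaced by OOV_SYMBOL.
sym2int() {
  local table=$1 oov=$2
  awk -v oov="$oov" '
    NR == FNR { id[$1] = $2; next }            # first file: load the symbol table
    { line = ""
      for (i = 1; i <= NF; i++) {
        sym = ($i in id) ? $i : oov            # unknown symbols become the OOV word
        line = line (i > 1 ? " " : "") id[sym]
      }
      print line }' "$table" -
}

table=$(mktemp)
printf '%s\n' '<eps> 0' '<SPOKEN_NOISE> 1' '你好 2' '世界 3' '#0 4' '<s> 5' '</s> 6' > "$table"
# The third word is not in the table, so it is mapped to the ID of <SPOKEN_NOISE>.
echo '你好 世界 再见' | sym2int "$table" '<SPOKEN_NOISE>'
rm -f "$table"
```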

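Disambiguation ensures that the pronunciation lexicon yields a deterministic WFST. If the phone sequence of one word is a prefix of another word's phone sequence, for example the phones of "good" form the first half of the phones of "goodness", a disambiguation symbol must be appended to the shorter sequence to break the prefix relation. The path of one word in the WFST is then never contained in the path of another.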
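
The prefix rule can be sketched as follows. This is a simplified illustration under stated assumptions: the phone symbols are invented, and the function only covers two cases (a pronunciation that is a strict prefix of another, and identical pronunciations shared by several words). Kaldi's own lexicon disambiguation script may assign symbols differently.

```bash
#!/usr/bin/env bash
# Sketch: append disambiguation symbols to ambiguous pronunciations.

# Read "word phone1 phone2 ..." lines on stdin. A pronunciation gets "#k" appended
# if it is shared by several words or is a strict prefix of another pronunciation.
add_disambig() {
  awk '{
         word[NR] = $1
         pron = $2; for (i = 3; i <= NF; i++) pron = pron " " $i
         prons[NR] = pron
         count[pron]++
       }
       END {
         for (a = 1; a <= NR; a++) {
           needs = (count[prons[a]] > 1)                     # homophones
           for (b = 1; b <= NR && !needs; b++)               # strict prefix of entry b?
             if (prons[b] != prons[a] && index(prons[b] " ", prons[a] " ") == 1) needs = 1
           if (needs) { used[prons[a]]++; print word[a], prons[a], "#" used[prons[a]] }
           else print word[a], prons[a]
         }
       }'
}

# Hypothetical pronunciations for the good/goodness example.
printf '%s\n' 'good g uh d' 'goodness g uh d n ax s' | add_disambig
```

Only the shorter entry receives a symbol; once "good" ends in #1, its phone path no longer runs along the beginning of the path for "goodness".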

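Language Model

For the language model, you can either supply a statistical language model in ARPA format or train one from existing text (for example with the Kaldi LM tools or ngram-count from the SRILM toolkit; for the training procedure used in this example see egs/aishell/s5/local/aishell_train_lms.sh).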

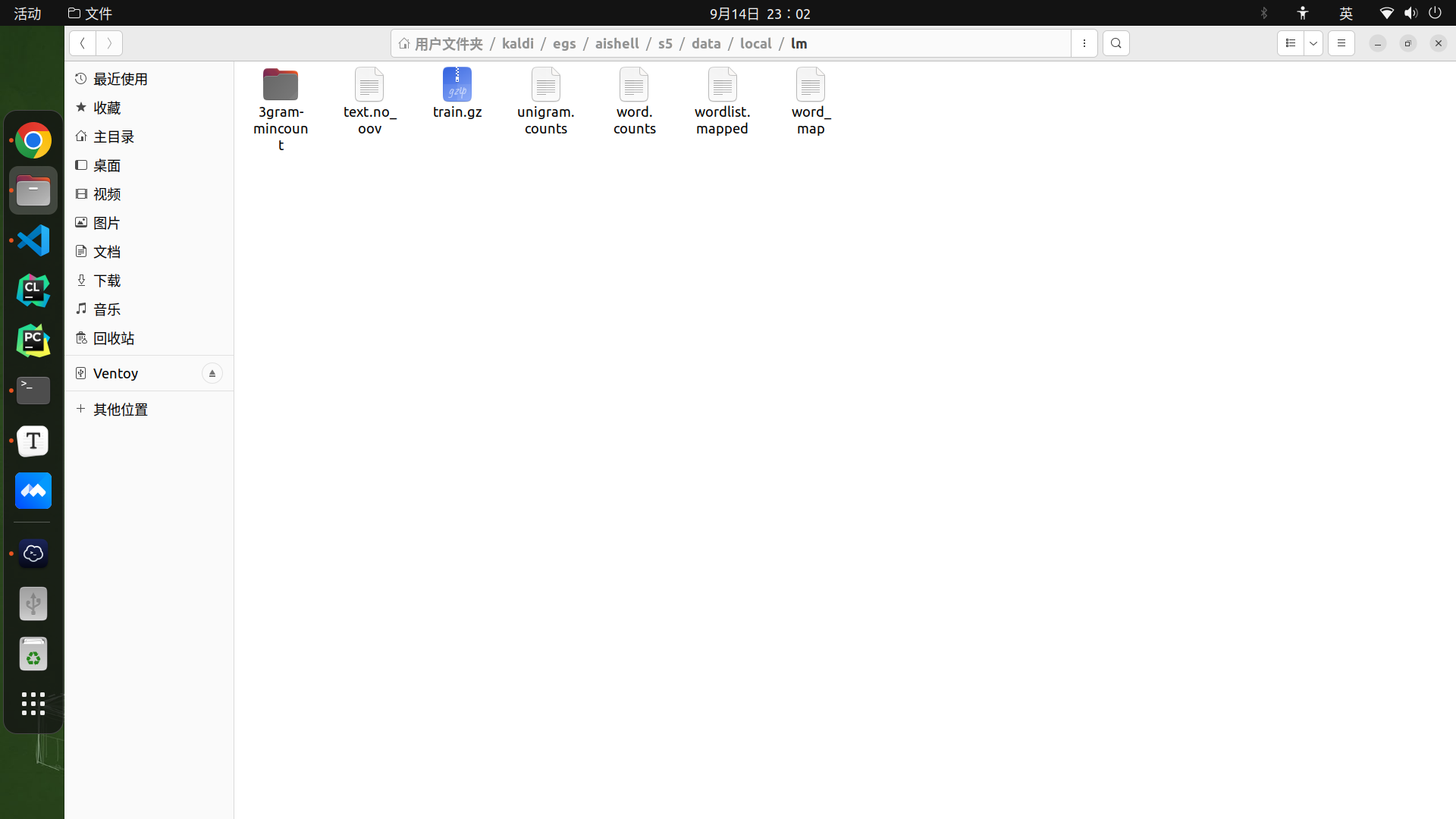

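This shell script reads data/local/train/text and data/local/dict/lexicon.txt, produces the count file word.counts from the text, and then builds unigram.counts from word.counts by adding counts for the words in lexicon.txt (except SIL). I did not dig any deeper. Along the way it uses get_word_map.pl and train_lm.sh --arpa --lmtype 3gram-mincount $dir || exit 1; the result of this step is saved in data/local/lm/3gram-mincount/lm_unpruned.gz.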
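
The counting step can be approximated with standard tools. The sketch below is written in the spirit of the description above and is not a copy of aishell_train_lms.sh: the function name, file contents, and output format ("word count") are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: unigram counts from transcripts plus one extra count per lexicon word.

# Usage: unigram_counts TEXT LEXICON
# TEXT has "utt-id word word ..." per line; LEXICON has "word phone phone ..." per line.
unigram_counts() {
  local text=$1 lexicon=$2
  {
    awk '{ for (i = 2; i <= NF; i++) print $i }' "$text"          # drop the utterance ID
    awk '$1 != "SIL" { print $1 }' "$lexicon" | LC_ALL=C sort -u   # each lexicon word once
  } | LC_ALL=C sort | uniq -c | awk '{ print $2, $1 }' | LC_ALL=C sort -k2,2nr -k1,1
}

tmp=$(mktemp -d)
# Hypothetical transcripts and lexicon.
printf '%s\n' 'utt1 a b a' 'utt2 b a' > "$tmp/text"
printf '%s\n' 'SIL sil' 'a x' 'b y' 'c z' > "$tmp/lexicon.txt"
unigram_counts "$tmp/text" "$tmp/lexicon.txt"
rm -rf "$tmp"
```

Adding the lexicon words guarantees that a word that never occurs in the training transcripts (c in this toy data) still has a nonzero unigram count.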

# LM training

local/aishell_train_lms.sh || exit 1;

Getting raw N-gram counts

discount_ngrams: for n-gram order 1, D=0.000000, tau=0.000000 phi=1.000000

discount_ngrams: for n-gram order 2, D=0.000000, tau=0.000000 phi=1.000000

discount_ngrams: for n-gram order 3, D=1.000000, tau=0.000000 phi=1.000000

Iteration 1/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.675000 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.675000 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=0.825000 phi=2.000000

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.900000 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.900000 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.100000 phi=2.000000

discount_ngrams: for n-gram order 1, D=0.600000, tau=1.215000 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=1.215000 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.485000 phi=2.000000

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 573.088187

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 573.088187

real 0m2.755s

user 0m3.710s

sys 0m0.118s

Perplexity over 99496.000000 words is 571.860357

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 571.860357

real 0m2.865s

user 0m3.681s

sys 0m0.157s

Perplexity over 99496.000000 words is 571.430399

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 571.430399

real 0m2.962s

user 0m3.928s

sys 0m0.094s

Projected perplexity change from setting alpha=-0.413521475380432 is 571.860357->571.350704659834, reduction of 0.509652340166213

Alpha value on iter 1 is -0.413521475380432

Iteration 2/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=0.483845 phi=2.000000

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=0.645126 phi=2.000000

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=0.870921 phi=2.000000

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 570.548231

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.548231

real 0m2.819s

user 0m3.762s

sys 0m0.135s

Perplexity over 99496.000000 words is 570.909914

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.909914

real 0m2.870s

user 0m3.817s

sys 0m0.122s

Perplexity over 99496.000000 words is 570.209333

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.209333

real 0m2.936s

user 0m3.883s

sys 0m0.103s

optimize_alpha.pl: alpha=0.782133003937562 is too positive, limiting it to 0.7

Projected perplexity change from setting alpha=0.7 is 570.548231->570.0658029, reduction of 0.482428099999765

Alpha value on iter 2 is 0.7

Iteration 3/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.750000

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=2.000000

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=2.350000

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 570.070852

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.070852

real 0m2.858s

user 0m3.681s

sys 0m0.171s

Perplexity over 99496.000000 words is 570.135232

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.135232

real 0m2.960s

user 0m3.826s

sys 0m0.112s

Perplexity over 99496.000000 words is 570.074175

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.074175

real 0m3.036s

user 0m3.902s

sys 0m0.116s

Projected perplexity change from setting alpha=-0.149743638839048 is 570.074175->570.068152268062, reduction of 0.00602273193794645

Alpha value on iter 3 is -0.149743638839048

Iteration 4/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.800000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=1.080000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 651.559076

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 651.559076

real 0m2.060s

user 0m2.633s

sys 0m0.083s

Perplexity over 99496.000000 words is 571.811721

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 571.811721

real 0m2.819s

user 0m3.796s

sys 0m0.074s

Perplexity over 99496.000000 words is 570.079098

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 570.079098

real 0m2.838s

user 0m3.780s

sys 0m0.092s

Projected perplexity change from setting alpha=-0.116327143544381 is 570.079098->564.672375993263, reduction of 5.40672200673657

Alpha value on iter 4 is -0.116327143544381

Iteration 5/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.395873 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.712571 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 567.407206

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.407206

real 0m2.902s

user 0m3.781s

sys 0m0.089s

Perplexity over 99496.000000 words is 567.980179

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.980179

real 0m2.935s

user 0m3.839s

sys 0m0.118s

Perplexity over 99496.000000 words is 567.231151

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.231151

real 0m2.987s

user 0m3.944s

sys 0m0.123s

Projected perplexity change from setting alpha=0.259356959958262 is 567.407206->567.206654822021, reduction of 0.20055117797915

Alpha value on iter 5 is 0.259356959958262

Iteration 6/6 of optimizing discounting parameters

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.664727 phi=1.750000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.664727 phi=2.000000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.664727 phi=2.350000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 567.478625

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.478625

real 0m2.914s

user 0m3.796s

sys 0m0.153s

Perplexity over 99496.000000 words is 567.181130

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.181130

real 0m2.932s

user 0m3.816s

sys 0m0.154s

Perplexity over 99496.000000 words is 567.346876

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.346876

real 0m3.047s

user 0m4.029s

sys 0m0.099s

optimize_alpha.pl: alpha=2.83365708509299 is too positive, limiting it to 0.7

Projected perplexity change from setting alpha=0.7 is 567.346876->567.0372037, reduction of 0.309672299999761

Alpha value on iter 6 is 0.7

Final config is:

D=0.6 tau=0.527830672157611 phi=2

D=0.706938285164495 tau=0.664727230661135 phi=2.7

D=0 tau=1.09671484103859 phi=1.85025636116095

Discounting N-grams.

discount_ngrams: for n-gram order 1, D=0.600000, tau=0.527831 phi=2.000000

discount_ngrams: for n-gram order 2, D=0.706938, tau=0.664727 phi=2.700000

discount_ngrams: for n-gram order 3, D=0.000000, tau=1.096715 phi=1.850256

Computing final perplexity

Building ARPA LM (perplexity computation is in background)

interpolate_ngrams: 137074 words in wordslist

interpolate_ngrams: 137074 words in wordslist

Perplexity over 99496.000000 words is 567.320537

Perplexity over 99496.000000 words (excluding 0.000000 OOVs) is 567.320537

567.320537

Done training LM of type 3gram-mincount

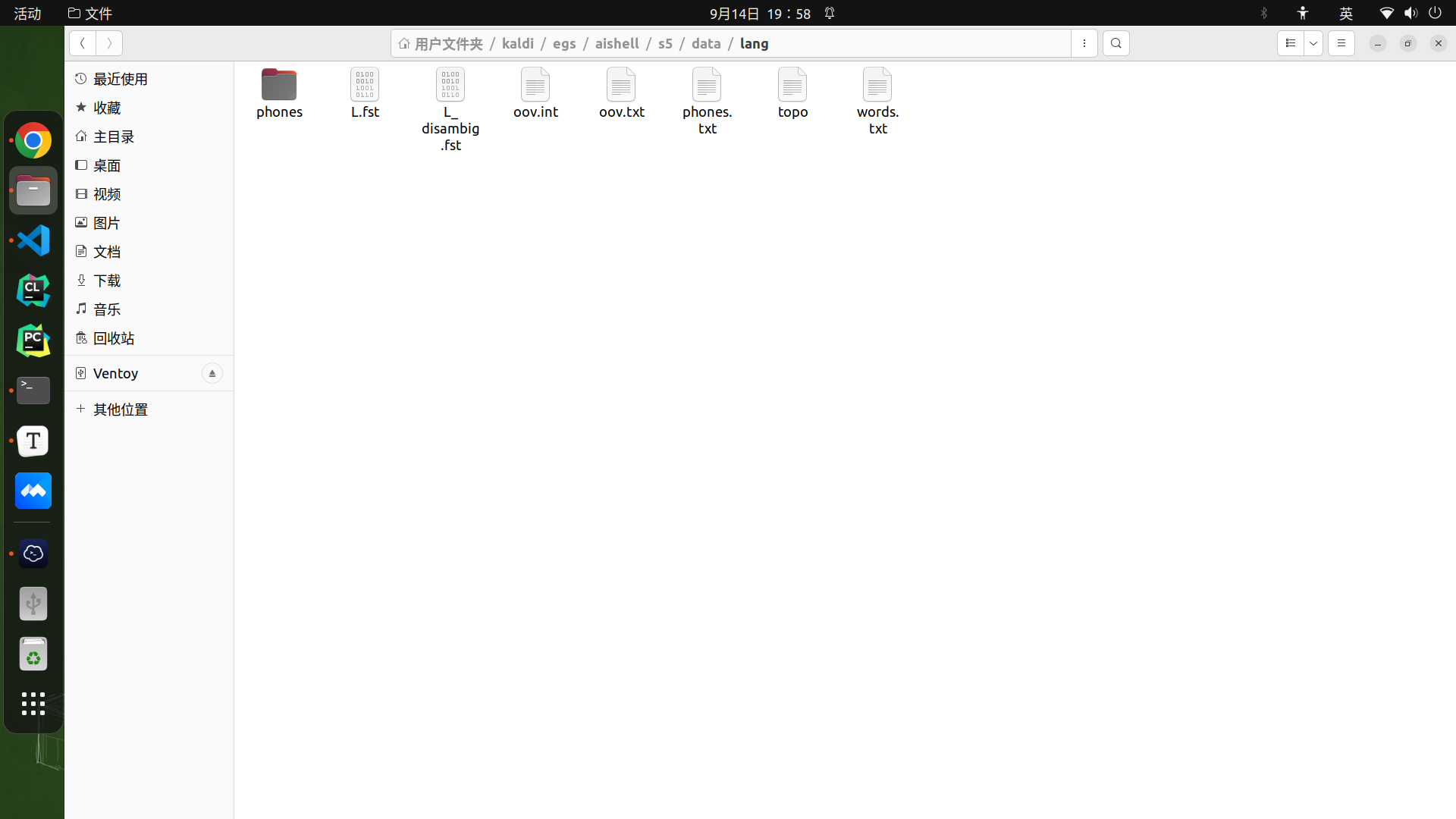

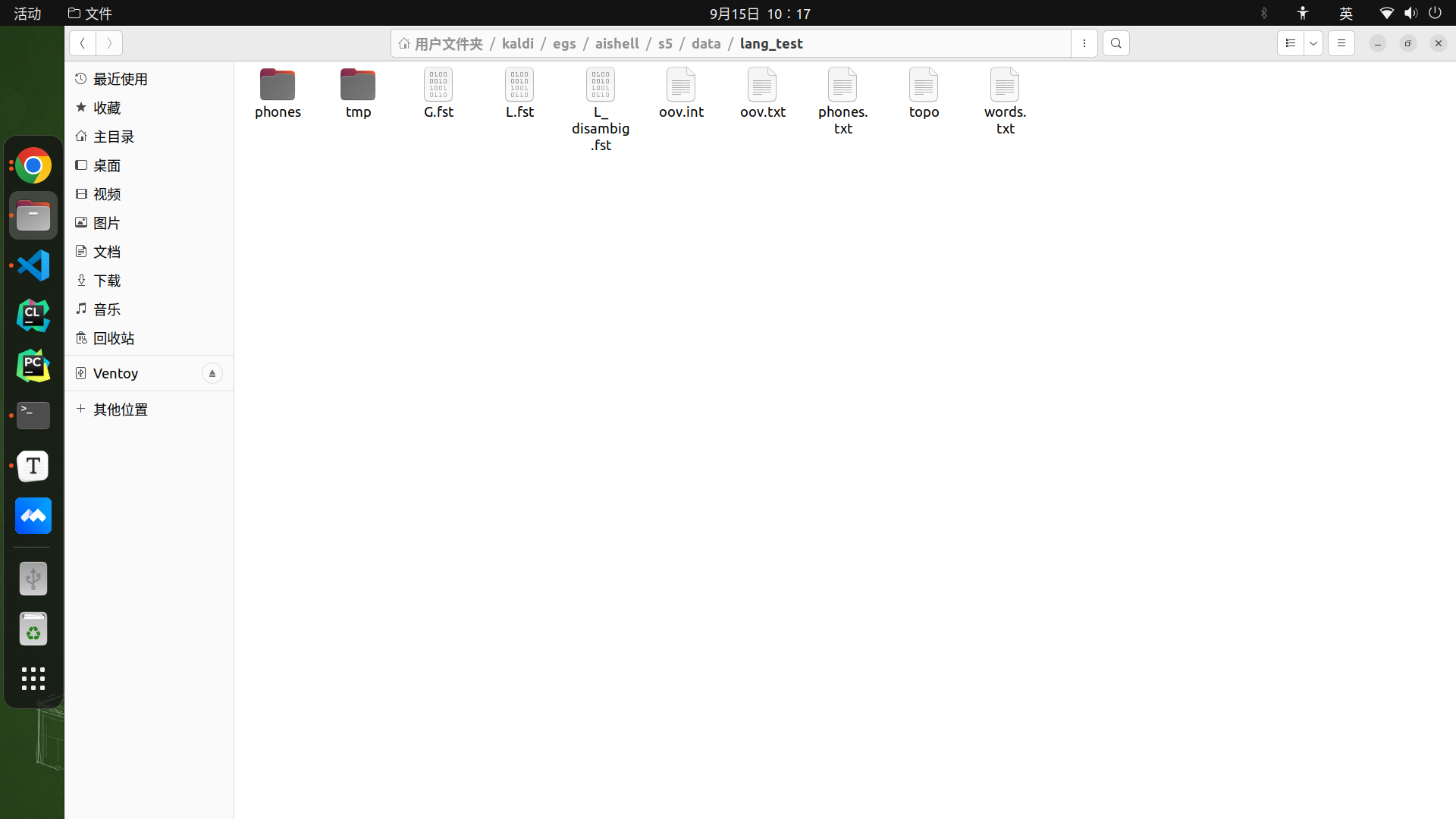

LG composition

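utils/format_lm.sh converts this language model into G.fst, whose input and output are both words. G.fst is placed under data/lang_test together with the files from data/lang. It is used later to build the decoding graph and plays no part in model training.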
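
Before converting, it can be useful to check how many n-grams of each order the ARPA file declares. The sketch below reads only the \data\ header of an ARPA file; the function name and the tiny sample file are made up for illustration.

```bash
#!/usr/bin/env bash
# Sketch: print "order count" for every "ngram N=COUNT" line in an ARPA header.

arpa_ngram_counts() {
  awk '/^\\data\\/ { in_data = 1; next }                       # start of the header
       in_data && /^ngram [0-9]+=[0-9]+/ { split($2, kv, "="); print kv[1], kv[2]; next }
       in_data && /^\\/ { exit }                               # next section: stop reading'
}

# Hypothetical miniature ARPA file.
printf '%s\n' '\data\' 'ngram 1=3' 'ngram 2=2' '' '\1-grams:' '-0.5 a -0.3' |
  arpa_ngram_counts
```

For the model trained above, the same function could be fed with gunzip -c data/local/lm/3gram-mincount/lm_unpruned.gz.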

# G compilation, check LG composition

utils/format_lm.sh data/lang data/local/lm/3gram-mincount/lm_unpruned.gz \

data/local/dict/lexicon.txt data/lang_test || exit 1;

Converting 'data/local/lm/3gram-mincount/lm_unpruned.gz' to FST

arpa2fst --disambig-symbol=#0 --read-symbol-table=data/lang_test/words.txt - data/lang_test/G.fst

LOG (arpa2fst[5.5.1050~1-0fb50]:Read():arpa-file-parser.cc:94) Reading \data\ section.

LOG (arpa2fst[5.5.1050~1-0fb50]:Read():arpa-file-parser.cc:149) Reading \1-grams: section.

LOG (arpa2fst[5.5.1050~1-0fb50]:Read():arpa-file-parser.cc:149) Reading \2-grams: section.

LOG (arpa2fst[5.5.1050~1-0fb50]:Read():arpa-file-parser.cc:149) Reading \3-grams: section.

LOG (arpa2fst[5.5.1050~1-0fb50]:RemoveRedundantStates():arpa-lm-compiler.cc:359) Reduced num-states from 561655 to 102646

fstisstochastic data/lang_test/G.fst

8.84583e-06 -0.56498

Succeeded in formatting LM: 'data/local/lm/3gram-mincount/lm_unpruned.gz'

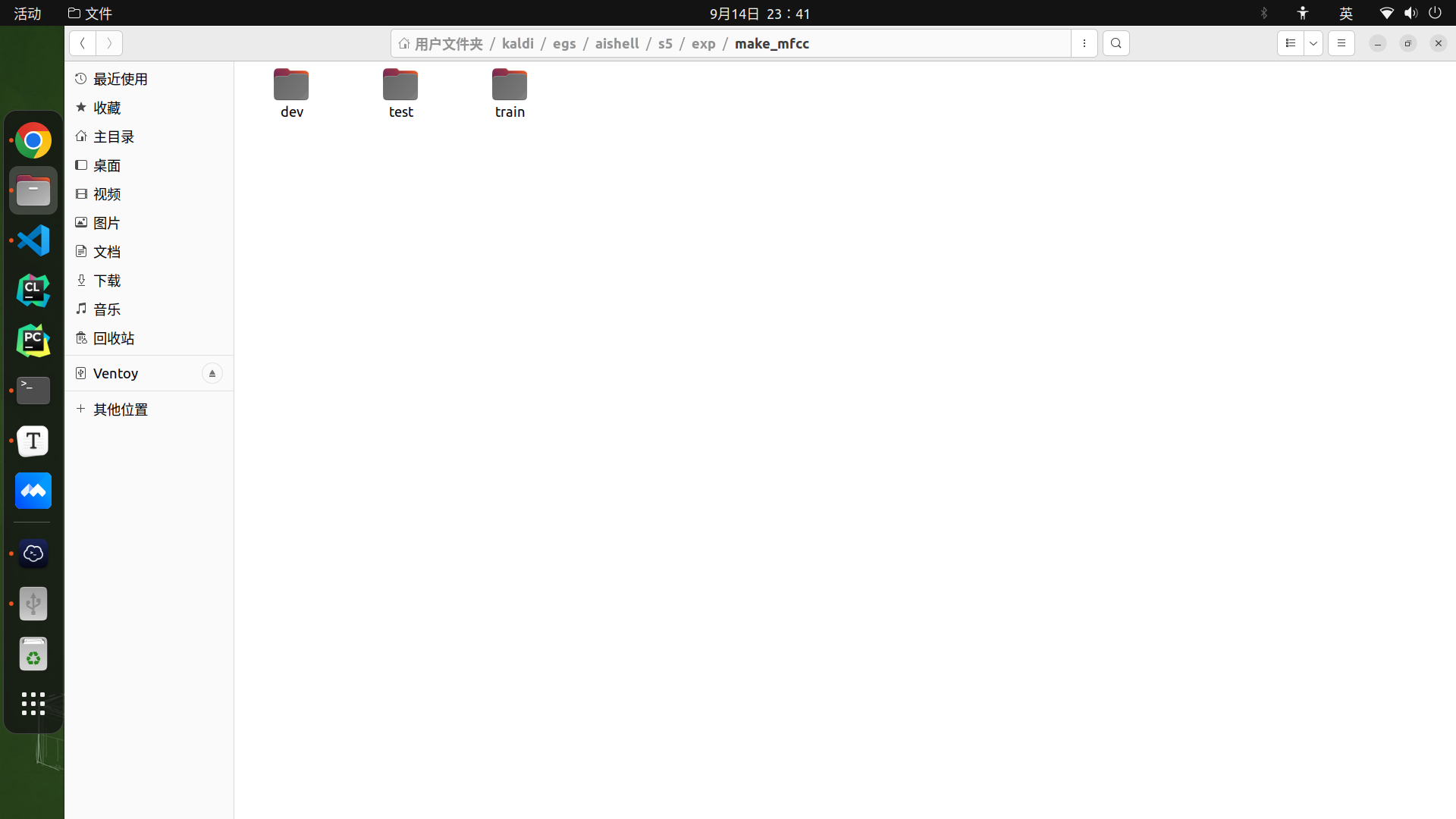

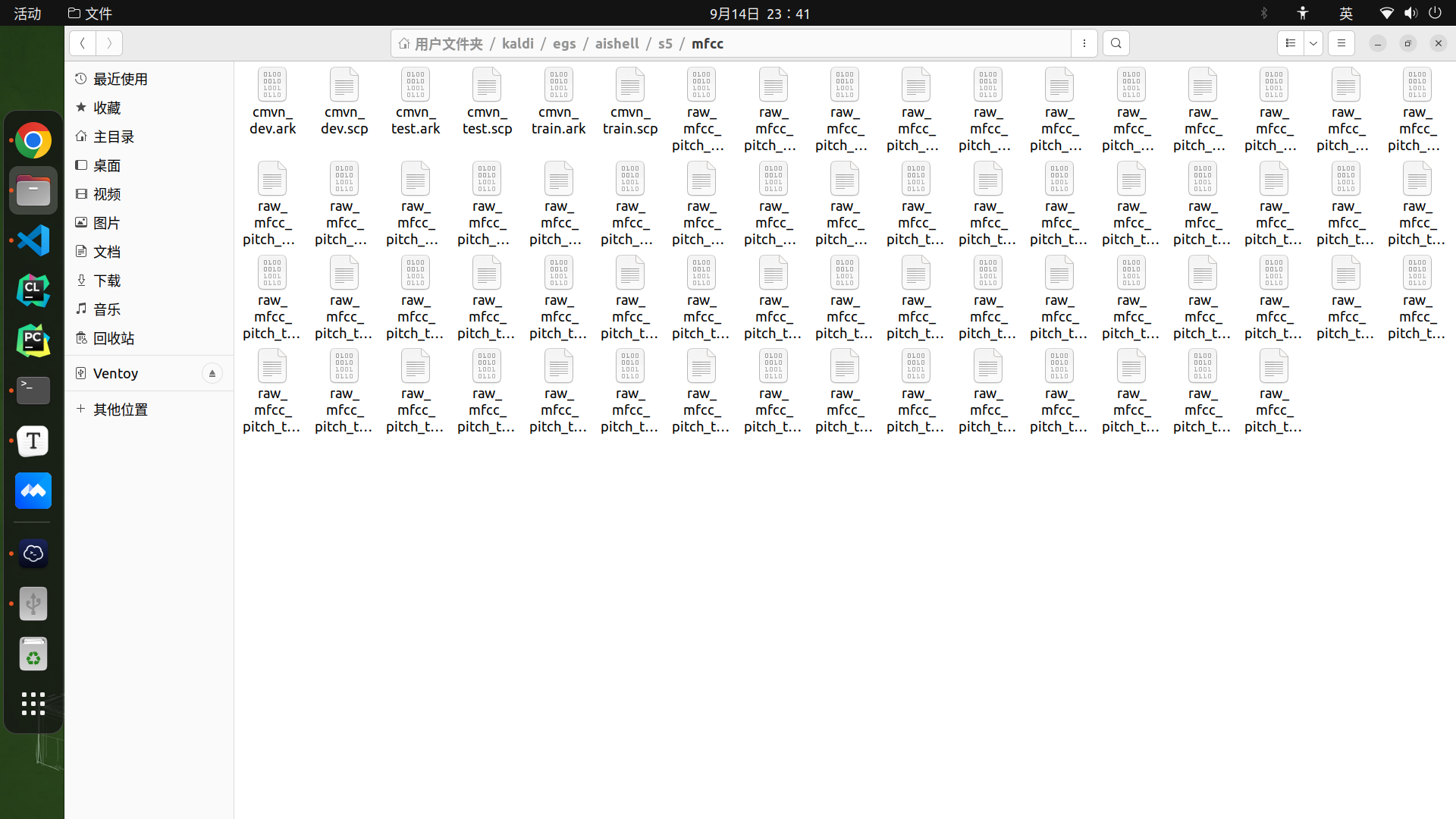

MFCC Feature Extraction

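This step also computes cepstral mean and variance normalization (CMVN) statistics, which are used to normalize the acoustic features. The method aims to make the features more robust to the speaker, recording device, environment, volume, and similar factors.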
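
The arithmetic behind CMVN is small enough to sketch with awk. The example assumes a plain-text matrix with one frame per line and one feature dimension per column, and it normalizes both mean and variance. Kaldi stores statistics in its own format and applies them separately, and whether the variance part is applied depends on the recipe's options, so treat this only as an illustration of the idea.

```bash
#!/usr/bin/env bash
# Sketch: per-dimension mean and variance normalization of a small feature matrix.

# Read one frame per line (whitespace-separated values); print the normalized frames.
cmvn() {
  awk '{
         for (d = 1; d <= NF; d++) { x[NR, d] = $d; sum[d] += $d; sumsq[d] += $d * $d }
         dims = NF
       }
       END {
         for (d = 1; d <= dims; d++) {
           mean[d] = sum[d] / NR
           var = sumsq[d] / NR - mean[d] * mean[d]
           std[d] = (var > 0) ? sqrt(var) : 1     # guard against constant dimensions
         }
         for (t = 1; t <= NR; t++) {
           line = ""
           for (d = 1; d <= dims; d++)
             line = line (d > 1 ? " " : "") sprintf("%.3f", (x[t, d] - mean[d]) / std[d])
           print line
         }
       }'
}

# Three invented two-dimensional frames.
printf '%s\n' '1 10' '2 20' '3 30' | cmvn
```

After normalization every column has zero mean and unit variance, so a louder recording or a different microphone shifts and scales the raw features without changing what the model sees.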

# Extract MFCC features and compute cepstral mean and variance normalization stats

# Now make MFCC plus pitch features.

# mfccdir should be some place with a largish disk where you

# want to store MFCC features.

mfccdir=mfcc

for x in train dev test; do

steps/make_mfcc_pitch.sh --cmd "$train_cmd" --nj 10 data/$x exp/make_mfcc/$x $mfccdir || exit 1;

steps/compute_cmvn_stats.sh data/$x exp/make_mfcc/$x $mfccdir || exit 1;

utils/fix_data_dir.sh data/$x || exit 1;

done

utils/validate_data_dir.sh: Successfully validated data-directory data/train

steps/make_mfcc_pitch.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

steps/make_mfcc_pitch.sh: Succeeded creating MFCC and pitch features for train

steps/compute_cmvn_stats.sh data/train exp/make_mfcc/train mfcc

Succeeded creating CMVN stats for train

fix_data_dir.sh: kept all 120098 utterances.

fix_data_dir.sh: old files are kept in data/train/.backup

steps/make_mfcc_pitch.sh --cmd run.pl --nj 10 data/dev exp/make_mfcc/dev mfcc

utils/validate_data_dir.sh: Successfully validated data-directory data/dev

steps/make_mfcc_pitch.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

steps/make_mfcc_pitch.sh: Succeeded creating MFCC and pitch features for dev

steps/compute_cmvn_stats.sh data/dev exp/make_mfcc/dev mfcc

Succeeded creating CMVN stats for dev

fix_data_dir.sh: kept all 14326 utterances.

fix_data_dir.sh: old files are kept in data/dev/.backup

steps/make_mfcc_pitch.sh --cmd run.pl --nj 10 data/test exp/make_mfcc/test mfcc

utils/validate_data_dir.sh: Successfully validated data-directory data/test

steps/make_mfcc_pitch.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

steps/make_mfcc_pitch.sh: Succeeded creating MFCC and pitch features for test

steps/compute_cmvn_stats.sh data/test exp/make_mfcc/test mfcc

Succeeded creating CMVN stats for test

fix_data_dir.sh: kept all 7176 utterances.

fix_data_dir.sh: old files are kept in data/test/.backup

Monophone Training

# Monophone training

# Train a monophone model on delta features.

steps/train_mono.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/mono || exit 1;

steps/train_mono.sh: Initializing monophone system.

steps/train_mono.sh: Compiling training graphs

steps/train_mono.sh: Aligning data equally (pass 0)

steps/train_mono.sh: Pass 1

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 2

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 3

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 4

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 5

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 6

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 7

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 8

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 9

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 10

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 11

steps/train_mono.sh: Pass 12

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 13

steps/train_mono.sh: Pass 14

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 15

steps/train_mono.sh: Pass 16

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 17

steps/train_mono.sh: Pass 18

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 19

steps/train_mono.sh: Pass 20

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 21

steps/train_mono.sh: Pass 22

steps/train_mono.sh: Pass 23

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 24

steps/train_mono.sh: Pass 25

steps/train_mono.sh: Pass 26

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 27

steps/train_mono.sh: Pass 28

steps/train_mono.sh: Pass 29

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 30

steps/train_mono.sh: Pass 31

steps/train_mono.sh: Pass 32

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 33

steps/train_mono.sh: Pass 34

steps/train_mono.sh: Pass 35

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 36

steps/train_mono.sh: Pass 37

steps/train_mono.sh: Pass 38

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 39

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang exp/mono

steps/diagnostic/analyze_alignments.sh: see stats in exp/mono/log/analyze_alignments.log

1130 warnings in exp/mono/log/acc.*.*.log

36952 warnings in exp/mono/log/align.*.*.log

exp/mono: nj=10 align prob=-82.04 over 150.16h [retry=0.9%, fail=0.0%] states=203 gauss=987

steps/train_mono.sh: Done training monophone system in exp/mono

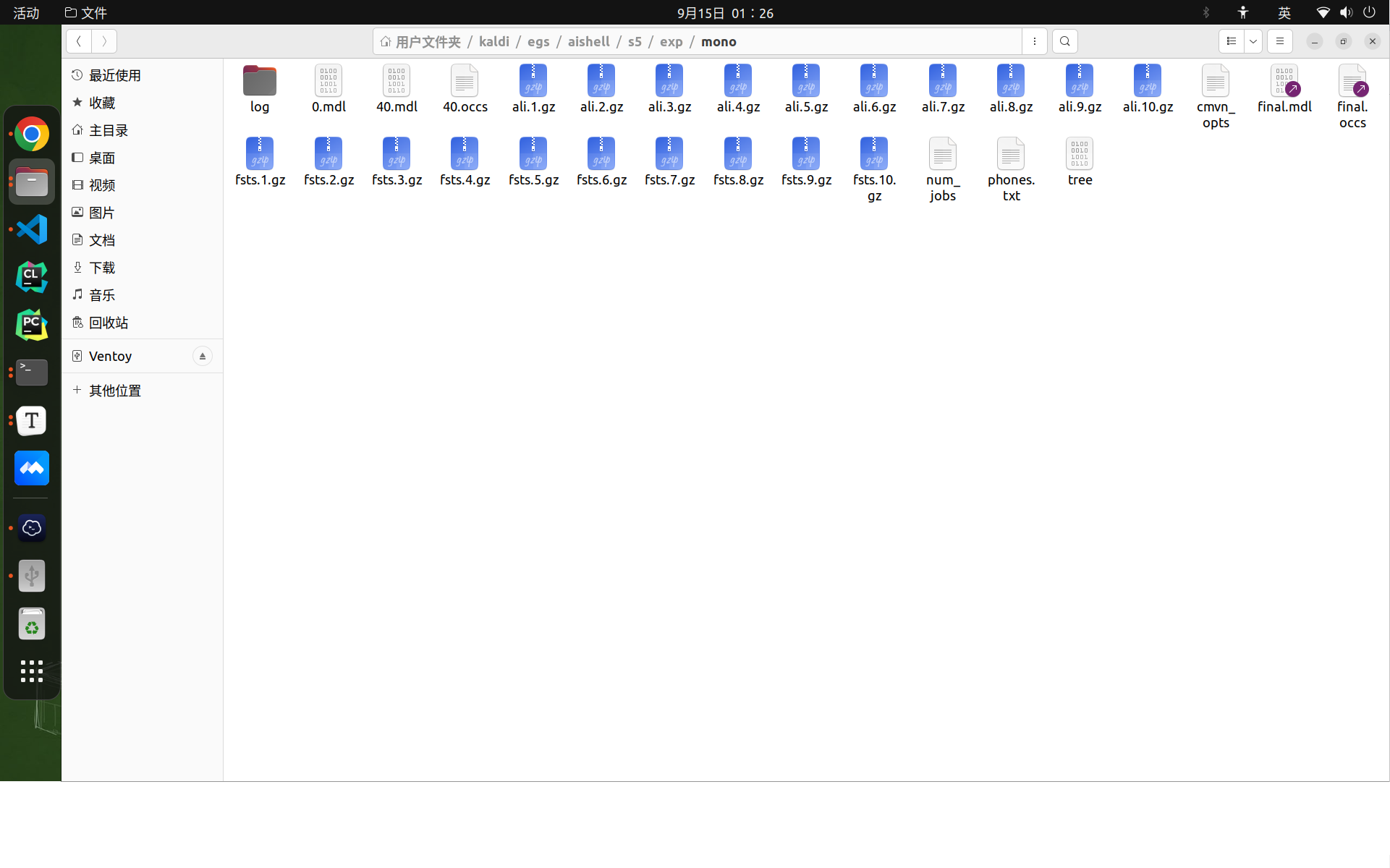

Training creates a mono directory under exp; the files ending in .mdl inside it hold the model parameters. The commands below show the contents of a model.

~/kaldi/src/gmmbin/gmm-copy --binary=false final.mdl - | less

~/kaldi/src/gmmbin/gmm-copy --binary=false final.mdl final.txt

Building the Monophone Decoding Graph

# Build the monophone decoding graph

# Decode with the monophone model.

utils/mkgraph.sh data/lang_test exp/mono exp/mono/graph || exit 1;

tree-info exp/mono/tree

tree-info exp/mono/tree

fsttablecompose data/lang_test/L_disambig.fst data/lang_test/G.fst

fstpushspecial

fstminimizeencoded

fstdeterminizestar --use-log=true

fstisstochastic data/lang_test/tmp/LG.fst

-0.0663446 -0.0666824

[info]: LG not stochastic.

fstcomposecontext --context-size=1 --central-position=0 --read-disambig-syms=data/lang_test/phones/disambig.int --write-disambig-syms=data/lang_test/tmp/disambig_ilabels_1_0.int data/lang_test/tmp/ilabels_1_0.6866 data/lang_test/tmp/LG.fst

fstisstochastic data/lang_test/tmp/CLG_1_0.fst

-0.0663446 -0.0666824

[info]: CLG not stochastic.

make-h-transducer --disambig-syms-out=exp/mono/graph/disambig_tid.int --transition-scale=1.0 data/lang_test/tmp/ilabels_1_0 exp/mono/tree exp/mono/final.mdl

fsttablecompose exp/mono/graph/Ha.fst data/lang_test/tmp/CLG_1_0.fst

fstdeterminizestar --use-log=true

fstminimizeencoded

fstrmsymbols exp/mono/graph/disambig_tid.int

fstrmepslocal

fstisstochastic exp/mono/graph/HCLGa.fst

0.000244693 -0.132761

HCLGa is not stochastic

add-self-loops --self-loop-scale=0.1 --reorder=true exp/mono/final.mdl exp/mono/graph/HCLGa.fst

mkgraph.sh mainly produces two important files, HCLG.fst and words.txt. Later recognition relies mainly on three files: final.mdl, HCLG.fst, and words.txt.

Monophone Decoding

# Decode: decode the dev set and the test set separately

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/mono/graph data/dev exp/mono/decode_dev

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/mono/graph data/test exp/mono/decode_test

The decoding logs are saved in exp/mono/decode_dev/log and exp/mono/decode_test/log.
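
Scoring writes one result file per combination of language-model weight and word insertion penalty, so the usual next step is to pick the best one. The sketch below assumes files named cer_<lmwt>_<penalty> that each contain a %WER line; the numbers are invented for the demonstration and are not results from this run. Kaldi ships utils/best_wer.sh for the same purpose.

```bash
#!/usr/bin/env bash
# Sketch: find the scoring file with the lowest error rate in a decode directory.

# Usage: best_cer DECODE_DIR
# Prints "file:%WER ..." for the cer_* file with the smallest error rate.
best_cer() {
  local dir=$1
  ( cd "$dir" && grep -H '%WER' cer_* | LC_ALL=C sort -k2,2n | head -n 1 )
}

demo=$(mktemp -d)
# Invented scoring results, for illustration only.
echo '%WER 47.10 [ 471 / 1000, 10 ins, 61 del, 400 sub ]' > "$demo/cer_9_0.0"
echo '%WER 45.30 [ 453 / 1000, 12 ins, 41 del, 400 sub ]' > "$demo/cer_10_0.5"
echo '%WER 46.20 [ 462 / 1000, 20 ins, 42 del, 400 sub ]' > "$demo/cer_11_1.0"
best_cer "$demo"
rm -rf "$demo"
```

On a real run the function would be pointed at a directory such as exp/mono/decode_test.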

decode.sh: feature type is delta

steps/diagnostic/analyze_lats.sh --cmd run.pl exp/mono/graph exp/mono/decode_dev

steps/diagnostic/analyze_lats.sh: see stats in exp/mono/decode_dev/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,16,133) and mean=49.7

steps/diagnostic/analyze_lats.sh: see stats in exp/mono/decode_dev/log/analyze_lattice_depth_stats.log

+ steps/score_kaldi.sh --cmd 'run.pl ' data/dev exp/mono/graph exp/mono/decode_dev

steps/score_kaldi.sh --cmd run.pl data/dev exp/mono/graph exp/mono/decode_dev

steps/score_kaldi.sh: scoring with word insertion penalty=0.0,0.5,1.0

+ steps/scoring/score_kaldi_cer.sh --stage 2 --cmd 'run.pl ' data/dev exp/mono/graph exp/mono/decode_dev

steps/scoring/score_kaldi_cer.sh --stage 2 --cmd run.pl data/dev exp/mono/graph exp/mono/decode_dev

steps/scoring/score_kaldi_cer.sh: scoring with word insertion penalty=0.0,0.5,1.0

+ echo 'local/score.sh: Done'

local/score.sh: Done

steps/decode.sh --cmd run.pl --config conf/decode.config --nj 10 exp/mono/graph data/test exp/mono/decode_test

decode.sh: feature type is delta

steps/diagnostic/analyze_lats.sh --cmd run.pl exp/mono/graph exp/mono/decode_test

steps/diagnostic/analyze_lats.sh: see stats in exp/mono/decode_test/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,20,156) and mean=57.9

steps/diagnostic/analyze_lats.sh: see stats in exp/mono/decode_test/log/analyze_lattice_depth_stats.log

+ steps/score_kaldi.sh --cmd 'run.pl ' data/test exp/mono/graph exp/mono/decode_test

steps/score_kaldi.sh --cmd run.pl data/test exp/mono/graph exp/mono/decode_test

steps/score_kaldi.sh: scoring with word insertion penalty=0.0,0.5,1.0

+ steps/scoring/score_kaldi_cer.sh --stage 2 --cmd 'run.pl ' data/test exp/mono/graph exp/mono/decode_test

steps/scoring/score_kaldi_cer.sh --stage 2 --cmd run.pl data/test exp/mono/graph exp/mono/decode_test

steps/scoring/score_kaldi_cer.sh: scoring with word insertion penalty=0.0,0.5,1.0

+ echo 'local/score.sh: Done'

local/score.sh: Done

Monophone Alignment

# Get alignments from monophone system.

steps/align_si.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/mono exp/mono_ali || exit 1;

steps/align_si.sh: feature type is delta

steps/align_si.sh: aligning data in data/train using model from exp/mono, putting alignments in exp/mono_ali

steps/diagnostic/analyze_alignments.sh --cmd run.pl data/lang exp/mono_ali

steps/diagnostic/analyze_alignments.sh: see stats in exp/mono_ali/log/analyze_alignments.log

steps/align_si.sh: done aligning data.

Triphone Models

# Train the context-dependent triphone model

# Train the first triphone pass model tri1 on delta + delta-delta features.

steps/train_deltas.sh --cmd "$train_cmd" \

2500 20000 data/train data/lang exp/mono_ali exp/tri1 || exit 1;

# decode tri1

utils/mkgraph.sh data/lang_test exp/tri1 exp/tri1/graph || exit 1;

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/tri1/graph data/dev exp/tri1/decode_dev

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/tri1/graph data/test exp/tri1/decode_test

# align tri1

steps/align_si.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/tri1 exp/tri1_ali || exit 1;

# train tri2 [delta+delta-deltas]

steps/train_deltas.sh --cmd "$train_cmd" \

2500 20000 data/train data/lang exp/tri1_ali exp/tri2 || exit 1;

# decode tri2

utils/mkgraph.sh data/lang_test exp/tri2 exp/tri2/graph

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/tri2/graph data/dev exp/tri2/decode_dev

steps/decode.sh --cmd "$decode_cmd" --config conf/decode.config --nj 10 \

exp/tri2/graph data/test exp/tri2/decode_test

# Align training data with the tri2 model.

steps/align_si.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/tri2 exp/tri2_ali || exit 1;

# Linear discriminant analysis (LDA) and maximum likelihood linear transform (MLLT)

# Train the second triphone pass model tri3a on LDA+MLLT features.

steps/train_lda_mllt.sh --cmd "$train_cmd" \

2500 20000 data/train data/lang exp/tri2_ali exp/tri3a || exit 1;

# Run a test decode with the tri3a model.

utils/mkgraph.sh data/lang_test exp/tri3a exp/tri3a/graph || exit 1;

steps/decode.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri3a/graph data/dev exp/tri3a/decode_dev

steps/decode.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri3a/graph data/test exp/tri3a/decode_test

# align tri3a with fMLLR

steps/align_fmllr.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/tri3a exp/tri3a_ali || exit 1;

# Speaker adaptive training, based on feature-space maximum likelihood linear regression (fMLLR)

# Train the third triphone pass model tri4a on LDA+MLLT+SAT features.

# From now on, we start building a more serious system with Speaker

# Adaptive Training (SAT).

steps/train_sat.sh --cmd "$train_cmd" \

2500 20000 data/train data/lang exp/tri3a_ali exp/tri4a || exit 1;

# decode tri4a

utils/mkgraph.sh data/lang_test exp/tri4a exp/tri4a/graph

steps/decode_fmllr.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri4a/graph data/dev exp/tri4a/decode_dev

steps/decode_fmllr.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri4a/graph data/test exp/tri4a/decode_test

# align tri4a with fMLLR

steps/align_fmllr.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/tri4a exp/tri4a_ali

# Train tri5a, which is LDA+MLLT+SAT

# Building a larger SAT system. You can see the num-leaves is 3500 and tot-gauss is 100000

steps/train_sat.sh --cmd "$train_cmd" \

3500 100000 data/train data/lang exp/tri4a_ali exp/tri5a || exit 1;

# decode tri5a

utils/mkgraph.sh data/lang_test exp/tri5a exp/tri5a/graph || exit 1;

steps/decode_fmllr.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri5a/graph data/dev exp/tri5a/decode_dev || exit 1;

steps/decode_fmllr.sh --cmd "$decode_cmd" --nj 10 --config conf/decode.config \

exp/tri5a/graph data/test exp/tri5a/decode_test || exit 1;

# align tri5a with fMLLR

steps/align_fmllr.sh --cmd "$train_cmd" --nj 10 \

data/train data/lang exp/tri5a exp/tri5a_ali || exit 1;

DNN training

# Train the DNN with nnet3

# nnet3

local/nnet3/run_tdnn.sh

# Train the DNN with the chain recipe

# chain

local/chain/run_tdnn.sh

# getting results (see RESULTS file)

for x in exp/*/decode_test; do [ -d $x ] && grep WER $x/cer_* | utils/best_wer.sh; done 2>/dev/null

for x in exp/*/*/decode_test; do [ -d $x ] && grep WER $x/cer_* | utils/best_wer.sh; done 2>/dev/null

exit 0;

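Judging from the comments above, the GMM-HMM system is trained as a monophone pass followed by five triphone passes (tri1, tri2, tri3a, tri4a, tri5a) to obtain a reasonably good model; the nnet3 and chain models are then trained, and finally the accuracy is measured.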
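The final accuracy check in run.sh pipes `grep WER $x/cer_*` into utils/best_wer.sh, which keeps the line with the lowest error rate. A minimal sketch of that selection, assuming input lines in the usual `file:%WER <rate> [ ... ]` shape; `pick_best` is a hypothetical helper written for illustration and is not part of Kaldi:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Kaldi): read scoring lines on stdin and
# print the one with the lowest error rate. utils/best_wer.sh is the real tool.
pick_best() {
  awk '{
    for (i = 1; i < NF; i++) {
      if ($i ~ /%WER$/) {            # the field right after "%WER" is the rate
        rate = $(i + 1) + 0
        if (best == "" || rate < best) { best = rate; line = $0 }
        break
      }
    }
  }
  END { if (line != "") print line }'
}

# Usage on a real decode directory (path taken from the script above):
# grep WER exp/tri5a/decode_test/cer_* | pick_best
```

Each cer_* file corresponds to one combination of language-model weight and word insertion penalty, so taking the minimum amounts to reporting the best-tuned scoring setting for that decode directory.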

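In Kaldi, DNN training depends on the GMM-HMM model: the GMM-HMM alignments supply the frame-level targets that the DNN acoustic model is trained to output (this can be seen in the get_egs.sh script). Training a good GMM-HMM model is therefore key to speech recognition with Kaldi.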
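Because the DNN stages consume the GMM-HMM output, it can help to confirm that a GMM stage finished before starting the long neural-network run. The sketch below is a hypothetical pre-flight check, not part of the aishell recipe; it assumes that a finished model or alignment directory contains `final.mdl` and `tree`, which is the usual Kaldi layout:

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight helper (not part of the aishell recipe).
# Returns 0 if the directory looks like a finished GMM-HMM stage.
check_gmm_dir() {
  local dir=$1 f
  for f in final.mdl tree; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      return 1
    fi
  done
  return 0
}

# Usage before the DNN stages (directory names taken from run.sh):
# check_gmm_dir exp/tri5a && check_gmm_dir exp/tri5a_ali && local/nnet3/run_tdnn.sh
```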

run_tdnn.sh

#!/usr/bin/env bash

# This script is based on swbd/s5c/local/nnet3/run_tdnn.sh

# this is the standard "tdnn" system, built in nnet3; it's what we use to

# call multi-splice.

# At this script level we don't support not running on GPU, as it would be painfully slow.

# If you want to run without GPU you'd have to call train_tdnn.sh with --gpu false,

# --num-threads 16 and --minibatch-size 128.

set -e

stage=0

train_stage=-10

affix=

common_egs_dir=

# training options

initial_effective_lrate=0.0015

final_effective_lrate=0.00015

num_epochs=4

# Number of GPUs at the start; training begins with 2 GPUs.

num_jobs_initial=2

# Number of GPUs at the end; the number of GPUs is increased gradually during training according to a schedule.

num_jobs_final=12

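# Each job (one per GPU) trains on one batch of data and produces a new model. Only two models are trained at first and the number grows gradually, because early in training the parallel models may diverge from each other; this becomes less of a problem later, so the number of models can be increased.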

remove_egs=true

# feature options

use_ivectors=true

# End configuration section.

. ./cmd.sh

. ./path.sh

. ./utils/parse_options.sh

# Check the GPU environment

if ! cuda-compiled; then

cat <<EOF && exit 1

This script is intended to be used with GPUs but you have not compiled Kaldi with CUDA

If you want to use GPUs (and have them), go to src/, and configure and make on a machine

where "nvcc" is installed.

EOF

fi

dir=exp/nnet3/tdnn_sp${affix:+_$affix}

# Directory of the GMM model used as input

gmm_dir=exp/tri5a

train_set=train_sp

# Directory holding the alignments of the training set

ali_dir=${gmm_dir}_sp_ali

graph_dir=$gmm_dir/graph

# Extract i-vectors (speaker information) to use as part of the neural network's input features

local/nnet3/run_ivector_common.sh --stage $stage || exit 1;

if [ $stage -le 7 ]; then

echo "$0: creating neural net configs";

num_targets=$(tree-info $ali_dir/tree |grep num-pdfs|awk '{print $2}')

# Kaldi DNN network configuration: a human-readable description of the model structure, called xconfig in Kaldi

mkdir -p $dir/configs

cat <<EOF > $dir/configs/network.xconfig

input dim=100 name=ivector

input dim=43 name=input

# please note that it is important to have input layer with the name=input

# as the layer immediately preceding the fixed-affine-layer to enable

# the use of short notation for the descriptor

fixed-affine-layer name=lda input=Append(-2,-1,0,1,2,ReplaceIndex(ivector, t, 0)) affine-transform-file=$dir/configs/lda.mat

# the first splicing is moved before the lda layer, so no splicing here

relu-batchnorm-layer name=tdnn1 dim=850

relu-batchnorm-layer name=tdnn2 dim=850 input=Append(-1,0,2)

relu-batchnorm-layer name=tdnn3 dim=850 input=Append(-3,0,3)

relu-batchnorm-layer name=tdnn4 dim=850 input=Append(-7,0,2)

relu-batchnorm-layer name=tdnn5 dim=850 input=Append(-3,0,3)

relu-batchnorm-layer name=tdnn6 dim=850

output-layer name=output input=tdnn6 dim=$num_targets max-change=1.5

EOF

# xconfig_to_configs.py converts the xconfig into the nnet3 config files that Kaldi actually uses during training

steps/nnet3/xconfig_to_configs.py --xconfig-file $dir/configs/network.xconfig --config-dir $dir/configs/

fi

if [ $stage -le 8 ]; then

if [[ $(hostname -f) == *.clsp.jhu.edu ]] && [ ! -d $dir/egs/storage ]; then

utils/create_split_dir.pl \

/export/b0{5,6,7,8}/$USER/kaldi-data/egs/aishell-$(date +'%m_%d_%H_%M')/s5/$dir/egs/storage $dir/egs/storage

fi

# Run train_dnn.py to train on the features

steps/nnet3/train_dnn.py --stage=$train_stage \

--cmd="$decode_cmd" \

--feat.online-ivector-dir exp/nnet3/ivectors_${train_set} \

--feat.cmvn-opts="--norm-means=false --norm-vars=false" \

--trainer.num-epochs $num_epochs \

--trainer.optimization.num-jobs-initial $num_jobs_initial \

--trainer.optimization.num-jobs-final $num_jobs_final \

--trainer.optimization.initial-effective-lrate $initial_effective_lrate \

--trainer.optimization.final-effective-lrate $final_effective_lrate \

--egs.dir "$common_egs_dir" \

--cleanup.remove-egs $remove_egs \

--cleanup.preserve-model-interval 500 \

--use-gpu true \

--feat-dir=data/${train_set}_hires \

--ali-dir $ali_dir \

--lang data/lang \

--reporting.email="$reporting_email" \

--dir=$dir || exit 1;

fi

# Decoding

if [ $stage -le 9 ]; then

# this version of the decoding treats each utterance separately

# without carrying forward speaker information.

for decode_set in dev test; do

num_jobs=`cat data/${decode_set}_hires/utt2spk|cut -d' ' -f2|sort -u|wc -l`

decode_dir=${dir}/decode_$decode_set

steps/nnet3/decode.sh --nj $num_jobs --cmd "$decode_cmd" \

--online-ivector-dir exp/nnet3/ivectors_${decode_set} \

$graph_dir data/${decode_set}_hires $decode_dir || exit 1;

done

fi

wait;

exit 0;
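The network.xconfig in the script above splices frames at several layers through its `Append(...)` descriptors. A rough sketch of how much temporal context one output frame ends up seeing, assuming the total context is the sum of each layer's most negative and most positive offset; `total_context` is a hypothetical helper, and the offsets are copied from the xconfig:

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum the most negative and most positive offset of each
# splicing layer. Each argument is the comma-separated offset list of one layer.
total_context() {
  local left=0 right=0 layer off min max
  for layer in "$@"; do
    min=0
    max=0
    for off in ${layer//,/ }; do
      if (( off < min )); then min=$off; fi
      if (( off > max )); then max=$off; fi
    done
    left=$(( left - min ))
    right=$(( right + max ))
  done
  echo "left=$left right=$right"
}

# Offsets of the lda layer and tdnn2..tdnn5 from network.xconfig.
total_context "-2,-1,0,1,2" "-1,0,2" "-3,0,3" "-7,0,2" "-3,0,3"
# prints: left=16 right=12
```

Under that assumption each output frame depends on 16 frames of left context and 12 frames of right context, 29 input frames in total. tdnn1 and tdnn6 have no `Append(...)` and add no context.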

train_tdnn.py
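run_tdnn.sh passes num_jobs_initial=2 and num_jobs_final=12 to steps/nnet3/train_dnn.py, which raises the number of parallel jobs as training proceeds. A minimal sketch of such a schedule, assuming a linear ramp over iterations rounded to the nearest integer; the exact rule is an assumption about the training script's internals rather than something shown in this post, and `current_num_jobs` and the iteration count of 100 are hypothetical:

```shell
#!/usr/bin/env bash
# Assumed schedule (hypothetical helper): linear ramp from the initial to the
# final job count, i.e. int(0.5 + initial + (final - initial) * iter / num_iters),
# written with integer arithmetic only.
current_num_jobs() {
  local iter=$1 num_iters=$2 initial=$3 final=$4
  echo $(( (2 * initial * num_iters + 2 * (final - initial) * iter + num_iters) / (2 * num_iters) ))
}

# Job counts at a few iterations of a hypothetical 100-iteration run.
for it in 0 50 99; do
  echo "iter $it: $(current_num_jobs "$it" 100 2 12) jobs"
done
```

With these hypothetical 100 iterations the sketch gives 2 jobs at iteration 0, 7 at iteration 50, and 12 at iteration 99.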

get_egs.sh

Supplementary notes

- The dataset contains many compressed archives; to extract several .tar.gz files at once, use this command:

#!/usr/bin/env bash

for i in *.tar.gz; do tar -xvf "$i"; done

- When running the aishell recipe, the following message appears:

local/aishell_train_lms.sh: train_lm.sh is not found. That might mean it's not installed

local/aishell_train_lms.sh: or it is not added to PATH

local/aishell_train_lms.sh: Use the script tools/extras/install_kaldi_lm.sh to install it

This means kaldi_lm must be installed. Go to your kaldi/tools/extras directory and run:

./install_kaldi_lm.sh

Once kaldi_lm is installed successfully, add it to the path:

vim path.sh

export PATH=$PATH:/kaldi/tools/kaldi_lm
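After editing path.sh, it is worth checking that the shell can actually find the tool before rerunning the recipe. A minimal sketch; `require_tool` is a hypothetical helper, and `train_lm.sh` is the command named in the error message above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether a command is reachable via PATH.
require_tool() {
  local tool=$1
  if command -v "$tool" >/dev/null 2>&1; then
    echo "$tool: found at $(command -v "$tool")"
  else
    echo "$tool: not found in PATH" >&2
    return 1
  fi
}

# Usage from the recipe directory, after sourcing the updated path.sh:
# . ./path.sh && require_tool train_lm.sh
```

If the check still fails, the directory added to PATH probably does not match where install_kaldi_lm.sh placed kaldi_lm on your machine.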
