Class0: Installing Kaldi

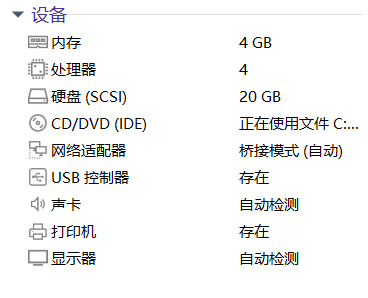

The VM configuration is as follows:

OS: CentOS 7

Memory: 4 GB

Processors: 4×1

Disk: 20 GB

Network adapter: bridged mode

P.S. To connect over SSH, set ONBOOT=yes in /etc/sysconfig/network-scripts/ifcfg-ens33.
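The ONBOOT change can be scripted. The following is a minimal sketch, not the only way to do it: the helper name enable_onboot is made up for this example, and it assumes the ifcfg file uses plain KEY=value lines.

```shell
# Set ONBOOT=yes in an ifcfg file so the interface comes up at boot.
# enable_onboot is a hypothetical helper name used only in this sketch.
enable_onboot() {
  local cfg="$1"
  if grep -q '^ONBOOT=' "$cfg"; then
    # Rewrite the existing ONBOOT line (via a temp file, for portability).
    sed 's/^ONBOOT=.*/ONBOOT=yes/' "$cfg" > "$cfg.tmp" && mv "$cfg.tmp" "$cfg"
  else
    # No ONBOOT line yet: append one.
    echo 'ONBOOT=yes' >> "$cfg"
  fi
}

# Example (as root on the VM; restart networking or reboot afterwards):
#   enable_onboot /etc/sysconfig/network-scripts/ifcfg-ens33
```

Running it twice is harmless, since the second call only rewrites the same line.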

VM image (password: kaldi): https://baixf-my.sharepoint.com/:u:/g/personal/admin_baixf_tk/Ea0twF_tLPxAosExRgwtY4kB4zDQyHrgMr67vzLr-TFvPA?e=LJNNa7

I. Installation guide

➢ Step 1: Download the Kaldi source

git clone https://github.com/kaldi-asr/kaldi.git

➢ Step 2: Read the installation guide

[root@localhost ~]# cd kaldi/

[root@localhost kaldi]# ls

cmake COPYING egs misc scripts tools

CMakeLists.txt docker INSTALL README.md src windows

[root@localhost kaldi]# cat INSTALL

This is the official Kaldi INSTALL. Look also at INSTALL.md for the git mirror installation.

[Option 1 in the following does not apply to native Windows install, see windows/INSTALL or following Option 2]

Option 1 (bash + makefile):

Steps:

(1)

go to tools/ and follow INSTALL instructions there.

(2)

go to src/ and follow INSTALL instructions there.

Option 2 (cmake):

Go to cmake/ and follow INSTALL.md instructions there.

Note, it may not be well tested and some features are missing currently.

➢ Step 3: Go into tools/ and build by following the instructions there

[root@localhost kaldi]# cd tools/

[root@localhost tools]# cat INSTALL

To check the prerequisites for Kaldi, first run

extras/check_dependencies.sh

and see if there are any system-level installations you need to do. Check the

output carefully. There are some things that will make your life a lot easier

if you fix them at this stage. If your system default C++ compiler is not

supported, you can do the check with another compiler by setting the CXX

environment variable, e.g.

CXX=g++-4.8 extras/check_dependencies.sh

Then run

make

which by default will install ATLAS headers, OpenFst, SCTK and sph2pipe.

OpenFst requires a relatively recent C++ compiler with C++11 support, e.g.

g++ >= 4.7, Apple clang >= 5.0 or LLVM clang >= 3.3. If your system default

compiler does not have adequate support for C++11, you can specify a C++11

compliant compiler as a command argument, e.g.

make CXX=g++-4.8

If you have multiple CPUs and want to speed things up, you can do a parallel

build by supplying the "-j" option to make, e.g. to use 4 CPUs

make -j 4

In extras/, there are also various scripts to install extra bits and pieces that

are used by individual example scripts. If an example script needs you to run

one of those scripts, it will tell you what to do.

All done OK

➢ Step 4: Go into src/ and install by following the instructions there

[root@localhost kaldi]# cd src

[root@localhost src]# cat INSTALL

These instructions are valid for UNIX-like systems (these steps have

been run on various Linux distributions; Darwin; Cygwin). For native Windows

compilation, see ../windows/INSTALL.

You must first have completed the installation steps in ../tools/INSTALL

(compiling OpenFst; getting ATLAS and CLAPACK headers).

The installation instructions are

./configure --shared

make depend -j 8

make -j 8

Note that we added the "-j 8" to run in parallel because "make" takes a long

time. 8 jobs might be too many for a laptop or small desktop machine with not

many cores.

For more information, see documentation at http://kaldi-asr.org/doc/

and click on "The build process (how Kaldi is compiled)".
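The two INSTALL files quoted above boil down to a short command sequence. Here is a minimal sketch of a driver for it. The names build_kaldi, run and DRY_RUN are assumptions made for this example, not part of Kaldi; the commands themselves are the ones from the INSTALL text. Setting DRY_RUN=1 only prints the commands, which is handy for checking the sequence before a long build.

```shell
# run CMD...: print the command, then execute it unless DRY_RUN=1.
run() {
  echo "+ $*"
  if [ "${DRY_RUN:-0}" != "1" ]; then
    "$@"
  fi
}

# build_kaldi KALDI_ROOT JOBS: tools/ first, then src/, stopping at the
# first failing command.
build_kaldi() {
  local root="$1" jobs="$2"
  run cd "$root/tools" &&
  run extras/check_dependencies.sh &&
  run make -j "$jobs" &&
  run cd "$root/src" &&
  run ./configure --shared &&
  run make depend -j "$jobs" &&
  run make -j "$jobs"
}

# Example:
#   DRY_RUN=1; build_kaldi "$HOME/kaldi" 4    # print only
#   DRY_RUN=0; build_kaldi "$HOME/kaldi" 4    # real build
```

Pick the job count to suit the machine: the INSTALL text itself notes that 8 jobs may be too many for a small one.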

➢ Step 5: Verify that the installation succeeded

- Run a small example

[root@localhost kaldi]# cd egs/yesno/s5/

[root@localhost s5]# ls

conf exp local path.sh steps waves_yesno

data input mfcc run.sh utils waves_yesno.tar.gz

[root@localhost s5]# sh run.sh

Preparing train and test data

Dictionary preparation succeeded

utils/prepare_lang.sh --position-dependent-phones false data/local/dict <SIL> data/local/lang data/lang

Checking data/local/dict/silence_phones.txt ...

--> reading data/local/dict/silence_phones.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/silence_phones.txt is OK

Checking data/local/dict/optional_silence.txt ...

--> reading data/local/dict/optional_silence.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/optional_silence.txt is OK

Checking data/local/dict/nonsilence_phones.txt ...

--> reading data/local/dict/nonsilence_phones.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/nonsilence_phones.txt is OK

Checking disjoint: silence_phones.txt, nonsilence_phones.txt

--> disjoint property is OK.

Checking data/local/dict/lexicon.txt

--> reading data/local/dict/lexicon.txt

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/local/dict/lexicon.txt is OK

Checking data/local/dict/extra_questions.txt ...

--> data/local/dict/extra_questions.txt is empty (this is OK)

--> SUCCESS [validating dictionary directory data/local/dict]

**Creating data/local/dict/lexiconp.txt from data/local/dict/lexicon.txt

fstaddselfloops data/lang/phones/wdisambig_phones.int data/lang/phones/wdisambig_words.int

prepare_lang.sh: validating output directory

utils/validate_lang.pl data/lang

Checking existence of separator file

separator file data/lang/subword_separator.txt is empty or does not exist, deal in word case.

Checking data/lang/phones.txt ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/lang/phones.txt is OK

Checking words.txt: #0 ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> data/lang/words.txt is OK

Checking disjoint: silence.txt, nonsilence.txt, disambig.txt ...

--> silence.txt and nonsilence.txt are disjoint

--> silence.txt and disambig.txt are disjoint

--> disambig.txt and nonsilence.txt are disjoint

--> disjoint property is OK

Checking sumation: silence.txt, nonsilence.txt, disambig.txt ...

--> found no unexplainable phones in phones.txt

Checking data/lang/phones/context_indep.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.int corresponds to data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.csl corresponds to data/lang/phones/context_indep.txt

--> data/lang/phones/context_indep.{txt, int, csl} are OK

Checking data/lang/phones/nonsilence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 2 entry/entries in data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.int corresponds to data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.csl corresponds to data/lang/phones/nonsilence.txt

--> data/lang/phones/nonsilence.{txt, int, csl} are OK

Checking data/lang/phones/silence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/silence.txt

--> data/lang/phones/silence.int corresponds to data/lang/phones/silence.txt

--> data/lang/phones/silence.csl corresponds to data/lang/phones/silence.txt

--> data/lang/phones/silence.{txt, int, csl} are OK

Checking data/lang/phones/optional_silence.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.int corresponds to data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.csl corresponds to data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.{txt, int, csl} are OK

Checking data/lang/phones/disambig.{txt, int, csl} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 2 entry/entries in data/lang/phones/disambig.txt

--> data/lang/phones/disambig.int corresponds to data/lang/phones/disambig.txt

--> data/lang/phones/disambig.csl corresponds to data/lang/phones/disambig.txt

--> data/lang/phones/disambig.{txt, int, csl} are OK

Checking data/lang/phones/roots.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 3 entry/entries in data/lang/phones/roots.txt

--> data/lang/phones/roots.int corresponds to data/lang/phones/roots.txt

--> data/lang/phones/roots.{txt, int} are OK

Checking data/lang/phones/sets.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 3 entry/entries in data/lang/phones/sets.txt

--> data/lang/phones/sets.int corresponds to data/lang/phones/sets.txt

--> data/lang/phones/sets.{txt, int} are OK

Checking data/lang/phones/extra_questions.{txt, int} ...

Checking optional_silence.txt ...

--> reading data/lang/phones/optional_silence.txt

--> data/lang/phones/optional_silence.txt is OK

Checking disambiguation symbols: #0 and #1

--> data/lang/phones/disambig.txt has "#0" and "#1"

--> data/lang/phones/disambig.txt is OK

Checking topo ...

Checking word-level disambiguation symbols...

--> data/lang/phones/wdisambig.txt exists (newer prepare_lang.sh)

Checking data/lang/oov.{txt, int} ...

--> text seems to be UTF-8 or ASCII, checking whitespaces

--> text contains only allowed whitespaces

--> 1 entry/entries in data/lang/oov.txt

--> data/lang/oov.int corresponds to data/lang/oov.txt

--> data/lang/oov.{txt, int} are OK

--> data/lang/L.fst is olabel sorted

--> data/lang/L_disambig.fst is olabel sorted

--> SUCCESS [validating lang directory data/lang]

Preparing language models for test

arpa2fst --disambig-symbol=#0 --read-symbol-table=data/lang_test_tg/words.txt input/task.arpabo data/lang_test_tg/G.fst

LOG (arpa2fst[5.5.1035~1-3dd90]:Read():arpa-file-parser.cc:94) Reading \data\ section.

LOG (arpa2fst[5.5.1035~1-3dd90]:Read():arpa-file-parser.cc:149) Reading \1-grams: section.

LOG (arpa2fst[5.5.1035~1-3dd90]:RemoveRedundantStates():arpa-lm-compiler.cc:359) Reduced num-states from 1 to 1

fstisstochastic data/lang_test_tg/G.fst

1.20397 1.20397

Succeeded in formatting data.

steps/make_mfcc.sh --nj 1 data/train_yesno exp/make_mfcc/train_yesno mfcc

utils/validate_data_dir.sh: WARNING: you have only one speaker. This probably a bad idea.

Search for the word 'bold' in http://kaldi-asr.org/doc/data_prep.html

for more information.

utils/validate_data_dir.sh: Successfully validated data-directory data/train_yesno

steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

steps/make_mfcc.sh: Succeeded creating MFCC features for train_yesno

steps/compute_cmvn_stats.sh data/train_yesno exp/make_mfcc/train_yesno mfcc

Succeeded creating CMVN stats for train_yesno

fix_data_dir.sh: kept all 31 utterances.

fix_data_dir.sh: old files are kept in data/train_yesno/.backup

steps/make_mfcc.sh --nj 1 data/test_yesno exp/make_mfcc/test_yesno mfcc

utils/validate_data_dir.sh: WARNING: you have only one speaker. This probably a bad idea.

Search for the word 'bold' in http://kaldi-asr.org/doc/data_prep.html

for more information.

utils/validate_data_dir.sh: Successfully validated data-directory data/test_yesno

steps/make_mfcc.sh: [info]: no segments file exists: assuming wav.scp indexed by utterance.

steps/make_mfcc.sh: It seems not all of the feature files were successfully procesed (29 != 31); consider using utils/fix_data_dir.sh data/test_yesno

steps/make_mfcc.sh: Less than 95% the features were successfully generated. Probably a serious error.

steps/compute_cmvn_stats.sh data/test_yesno exp/make_mfcc/test_yesno mfcc

Succeeded creating CMVN stats for test_yesno

fix_data_dir.sh: kept 29 utterances out of 31

fix_data_dir.sh: old files are kept in data/test_yesno/.backup

steps/train_mono.sh --nj 1 --cmd utils/run.pl --totgauss 400 data/train_yesno data/lang exp/mono0a

steps/train_mono.sh: Initializing monophone system.

steps/train_mono.sh: Compiling training graphs

steps/train_mono.sh: Aligning data equally (pass 0)

steps/train_mono.sh: Pass 1

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 2

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 3

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 4

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 5

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 6

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 7

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 8

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 9

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 10

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 11

steps/train_mono.sh: Pass 12

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 13

steps/train_mono.sh: Pass 14

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 15

steps/train_mono.sh: Pass 16

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 17

steps/train_mono.sh: Pass 18

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 19

steps/train_mono.sh: Pass 20

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 21

steps/train_mono.sh: Pass 22

steps/train_mono.sh: Pass 23

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 24

steps/train_mono.sh: Pass 25

steps/train_mono.sh: Pass 26

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 27

steps/train_mono.sh: Pass 28

steps/train_mono.sh: Pass 29

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 30

steps/train_mono.sh: Pass 31

steps/train_mono.sh: Pass 32

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 33

steps/train_mono.sh: Pass 34

steps/train_mono.sh: Pass 35

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 36

steps/train_mono.sh: Pass 37

steps/train_mono.sh: Pass 38

steps/train_mono.sh: Aligning data

steps/train_mono.sh: Pass 39

steps/diagnostic/analyze_alignments.sh --cmd utils/run.pl data/lang exp/mono0a

steps/diagnostic/analyze_alignments.sh: see stats in exp/mono0a/log/analyze_alignments.log

1 warnings in exp/mono0a/log/update.*.log

exp/mono0a: nj=1 align prob=-81.88 over 0.05h [retry=0.0%, fail=0.0%] states=11 gauss=371

steps/train_mono.sh: Done training monophone system in exp/mono0a

tree-info exp/mono0a/tree

tree-info exp/mono0a/tree

fstdeterminizestar --use-log=true

fstminimizeencoded

fsttablecompose data/lang_test_tg/L_disambig.fst data/lang_test_tg/G.fst

fstpushspecial

fstisstochastic data/lang_test_tg/tmp/LG.fst

0.534295 0.533859

[info]: LG not stochastic.

fstcomposecontext --context-size=1 --central-position=0 --read-disambig-syms=data/lang_test_tg/phones/disambig.int --write-disambig-syms=data/lang_test_tg/tmp/disambig_ilabels_1_0.int data/lang_test_tg/tmp/ilabels_1_0.4088 data/lang_test_tg/tmp/LG.fst

fstisstochastic data/lang_test_tg/tmp/CLG_1_0.fst

0.534295 0.533859

[info]: CLG not stochastic.

make-h-transducer --disambig-syms-out=exp/mono0a/graph_tgpr/disambig_tid.int --transition-scale=1.0 data/lang_test_tg/tmp/ilabels_1_0 exp/mono0a/tree exp/mono0a/final.mdl

fsttablecompose exp/mono0a/graph_tgpr/Ha.fst data/lang_test_tg/tmp/CLG_1_0.fst

fstminimizeencoded

fstdeterminizestar --use-log=true

fstrmepslocal

fstrmsymbols exp/mono0a/graph_tgpr/disambig_tid.int

fstisstochastic exp/mono0a/graph_tgpr/HCLGa.fst

0.5342 -0.000422432

HCLGa is not stochastic

add-self-loops --self-loop-scale=0.1 --reorder=true exp/mono0a/final.mdl exp/mono0a/graph_tgpr/HCLGa.fst

steps/decode.sh --nj 1 --cmd utils/run.pl exp/mono0a/graph_tgpr data/test_yesno exp/mono0a/decode_test_yesno

decode.sh: feature type is delta

steps/diagnostic/analyze_lats.sh --cmd utils/run.pl exp/mono0a/graph_tgpr exp/mono0a/decode_test_yesno

steps/diagnostic/analyze_lats.sh: see stats in exp/mono0a/decode_test_yesno/log/analyze_alignments.log

Overall, lattice depth (10,50,90-percentile)=(1,1,2) and mean=1.2

steps/diagnostic/analyze_lats.sh: see stats in exp/mono0a/decode_test_yesno/log/analyze_lattice_depth_stats.log

local/score.sh --cmd utils/run.pl data/test_yesno exp/mono0a/graph_tgpr exp/mono0a/decode_test_yesno

local/score.sh: scoring with word insertion penalty=0.0,0.5,1.0

%WER 0.00 [ 0 / 232, 0 ins, 0 del, 0 sub ] exp/mono0a/decode_test_yesno/wer_10_0.0
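The last line of the log is the one to check: a %WER line means the recipe ran all the way through decoding and scoring. As a sketch, the helper below picks the lowest-WER line from a decode directory. It assumes the scoring step leaves files named wer_* there, each containing a line in the "%WER <value> [ ... ]" form shown above; best_wer is a name invented for this example.

```shell
# best_wer DECODE_DIR: print the %WER line with the smallest value among
# the wer_* files in DECODE_DIR (file layout assumed from the log above).
best_wer() {
  local dir="$1"
  # -h drops the file name prefix so field 2 is always the WER value.
  grep -h '^%WER' "$dir"/wer_* | sort -n -k2,2 | head -n 1
}

# Example (from egs/yesno/s5):
#   best_wer exp/mono0a/decode_test_yesno
```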

- Check the generated files

[root@localhost kaldi]# cd src/bin/

[root@localhost bin]# ls

acc-lda est-lda.o

acc-lda.cc est-mllt

acc-lda.o est-mllt.cc

acc-tree-stats est-mllt.o

acc-tree-stats.cc est-pca

acc-tree-stats.o est-pca.cc

add-self-loops est-pca.o

add-self-loops.cc get-post-on-ali

add-self-loops.o get-post-on-ali.cc

align-compiled-mapped get-post-on-ali.o

align-compiled-mapped.cc hmm-info

align-compiled-mapped.o hmm-info.cc

align-equal hmm-info.o

align-equal.cc latgen-faster-mapped

align-equal-compiled latgen-faster-mapped.cc

align-equal-compiled.cc latgen-faster-mapped.o

align-equal-compiled.o latgen-faster-mapped-parallel

align-equal.o latgen-faster-mapped-parallel.cc

align-mapped latgen-faster-mapped-parallel.o

align-mapped.cc latgen-incremental-mapped

align-mapped.o latgen-incremental-mapped.cc

align-text latgen-incremental-mapped.o

align-text.cc logprob-to-post

align-text.o logprob-to-post.cc

ali-to-pdf logprob-to-post.o

ali-to-pdf.cc Makefile

ali-to-pdf.o make-h-transducer

ali-to-phones make-h-transducer.cc

ali-to-phones.cc make-h-transducer.o

ali-to-phones.o make-ilabel-transducer

ali-to-post make-ilabel-transducer.cc

ali-to-post.cc make-ilabel-transducer.o

ali-to-post.o make-pdf-to-tid-transducer

am-info make-pdf-to-tid-transducer.cc

am-info.cc make-pdf-to-tid-transducer.o

am-info.o matrix-dim

analyze-counts matrix-dim.cc

analyze-counts.cc matrix-dim.o

analyze-counts.o matrix-max

build-pfile-from-ali matrix-max.cc

build-pfile-from-ali.cc matrix-max.o

build-pfile-from-ali.o matrix-sum

build-tree matrix-sum.cc

build-tree.cc matrix-sum.o

build-tree.o matrix-sum-rows

build-tree-two-level matrix-sum-rows.cc

build-tree-two-level.cc matrix-sum-rows.o

build-tree-two-level.o phones-to-prons

cluster-phones phones-to-prons.cc

cluster-phones.cc phones-to-prons.o

cluster-phones.o post-to-pdf-post

compare-int-vector post-to-pdf-post.cc

compare-int-vector.cc post-to-pdf-post.o

compare-int-vector.o post-to-phone-post

compile-graph post-to-phone-post.cc

compile-graph.cc post-to-phone-post.o

compile-graph.o post-to-smat

compile-questions post-to-smat.cc

compile-questions.cc post-to-smat.o

compile-questions.o post-to-tacc

compile-train-graphs post-to-tacc.cc

compile-train-graphs.cc post-to-tacc.o

compile-train-graphs-fsts post-to-weights

compile-train-graphs-fsts.cc post-to-weights.cc

compile-train-graphs-fsts.o post-to-weights.o

compile-train-graphs.o prob-to-post

compile-train-graphs-without-lexicon prob-to-post.cc

compile-train-graphs-without-lexicon.cc prob-to-post.o

compile-train-graphs-without-lexicon.o prons-to-wordali

compute-gop prons-to-wordali.cc

compute-gop.cc prons-to-wordali.o

compute-gop.o scale-post

compute-wer scale-post.cc

compute-wer-bootci scale-post.o

compute-wer-bootci.cc show-alignments

compute-wer-bootci.o show-alignments.cc

compute-wer.cc show-alignments.o

compute-wer.o show-transitions

convert-ali show-transitions.cc

convert-ali.cc show-transitions.o

convert-ali.o sum-lda-accs

copy-gselect sum-lda-accs.cc

copy-gselect.cc sum-lda-accs.o

copy-gselect.o sum-matrices

copy-int-vector sum-matrices.cc

copy-int-vector.cc sum-matrices.o

copy-int-vector.o sum-mllt-accs

copy-matrix sum-mllt-accs.cc

copy-matrix.cc sum-mllt-accs.o

copy-matrix.o sum-post

copy-post sum-post.cc

copy-post.cc sum-post.o

copy-post.o sum-tree-stats

copy-transition-model sum-tree-stats.cc

copy-transition-model.cc sum-tree-stats.o

copy-transition-model.o transform-vec

copy-tree transform-vec.cc

copy-tree.cc transform-vec.o

copy-tree.o tree-info

copy-vector tree-info.cc

copy-vector.cc tree-info.o

copy-vector.o vector-scale

decode-faster vector-scale.cc

decode-faster.cc vector-scale.o

decode-faster-mapped vector-sum

decode-faster-mapped.cc vector-sum.cc

decode-faster-mapped.o vector-sum.o

decode-faster.o weight-post

draw-tree weight-post.cc

draw-tree.cc weight-post.o

draw-tree.o weight-silence-post

est-lda weight-silence-post.cc

est-lda.cc weight-silence-post.o
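Instead of scanning the listing by eye, a few binaries can be checked directly. A minimal sketch follows: check_bins is a hypothetical helper, and which binaries to check is up to you (the names in the example are taken from the listing above).

```shell
# check_bins DIR NAME...: report every NAME that is not an executable
# file in DIR; returns non-zero if anything is missing.
check_bins() {
  local dir="$1"
  shift
  local missing=0 name
  for name in "$@"; do
    if [ ! -x "$dir/$name" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Example (from the kaldi/ directory):
#   check_bins src/bin compute-wer align-equal tree-info && echo "binaries OK"
```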

- Run a command

[root@localhost kaldi]# cd src

[root@localhost src]# featbin/copy-feats

featbin/copy-feats

Copy features [and possibly change format]

Usage: copy-feats [options] <feature-rspecifier> <feature-wspecifier>

or: copy-feats [options] <feats-rxfilename> <feats-wxfilename>

e.g.: copy-feats ark:- ark,scp:foo.ark,foo.scp

or: copy-feats ark:foo.ark ark,t:txt.ark

See also: copy-matrix, copy-feats-to-htk, copy-feats-to-sphinx, select-feats,

extract-feature-segments, subset-feats, subsample-feats, splice-feats, paste-feats,

concat-feats

Options:

--binary : Binary-mode output (not relevant if writing to archive) (bool, default = true)

--compress : If true, write output in compressed form(only currently supported for wxfilename, i.e. archive/script,output) (bool, default = false)

--compression-method : Only relevant if --compress=true; the method (1 through 7) to compress the matrix. Search for CompressionMethod in src/matrix/compressed-matrix.h. (int, default = 1)

--htk-in : Read input as HTK features (bool, default = false)

--sphinx-in : Read input as Sphinx features (bool, default = false)

--write-num-frames : Wspecifier to write length in frames of each utterance. e.g. 'ark,t:utt2num_frames'. Only applicable if writing tables, not when this program is writing individual files. See also feat-to-len. (string, default = "")

Standard options:

--config : Configuration file to read (this option may be repeated) (string, default = "")

--help : Print out usage message (bool, default = false)

--print-args : Print the command line arguments (to stderr) (bool, default = true)

--verbose : Verbose level (higher->more logging) (int, default = 0)
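Printing the usage text shows the binary runs. As a next step, copy-feats can dump features as text with an ark,t: output, as in the usage example above. The sketch below counts frames per utterance from such a dump. It assumes the text archive layout is a header line "<utt-id>  [" followed by one row per frame with " ]" closing the last row, and that the yesno run left a feats.scp under egs/yesno/s5/data/train_yesno; count_frames is a made-up name. The usage text also points to --write-num-frames and feat-to-len for the same purpose.

```shell
# count_frames: read a Kaldi text-format feature archive on stdin and
# print "<utt-id> <number-of-frames>" per utterance (layout assumed above).
count_frames() {
  awk '
    /\[/ { utt = $1; n = 0; next }   # header line: "<utt-id>  ["
         { n++ }                     # every other line is one frame
    /\]/ { print utt, n }            # closing bracket ends the matrix
  '
}

# Example (from egs/yesno/s5, after running path.sh or using full paths):
#   copy-feats scp:data/train_yesno/feats.scp ark,t:- | count_frames
```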

➢ Step 6: Take a VM snapshot as a backup!

II. Pitfalls

1. Too little memory allocated

g++: fatal error: Killed signal terminated program cc1plus

compilation terminated.

make[3]: *** [Makefile:529: determinize.lo] Error 1

make[3]: Leaving directory '/home/baixf/kaldi/tools/openfst-1.7.2/src/script'

make[2]: *** [Makefile:370: install-recursive] Error 1

make[2]: Leaving directory '/home/baixf/kaldi/tools/openfst-1.7.2/src'

make[1]: *** [Makefile:426: install-recursive] Error 1

make[1]: Leaving directory '/home/baixf/kaldi/tools/openfst-1.7.2'

make: *** [Makefile:64: openfst_compiled] Error 2

Allocate more than 2 GB of memory, and the more the better; with 1 GB the build simply does not get through.
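Since the compiler was killed for lack of memory, it also helps to keep make from starting more parallel jobs than the VM can hold. The sketch below caps the job count by memory. The budget of roughly 2 GB per compile job is an assumption chosen for this example (not a Kaldi figure), and pick_jobs is a hypothetical helper.

```shell
# pick_jobs CPUS MEM_KB: print a job count for "make -j", limited by both
# the CPU count and an assumed budget of ~2 GB of RAM per compile job.
pick_jobs() {
  local cpus="$1" mem_kb="$2"
  local per_job_kb=$((2 * 1024 * 1024))   # assumption: ~2 GB per job
  local by_mem=$((mem_kb / per_job_kb))
  if [ "$by_mem" -lt 1 ]; then
    by_mem=1                              # always allow one job
  fi
  if [ "$by_mem" -lt "$cpus" ]; then
    echo "$by_mem"
  else
    echo "$cpus"
  fi
}

# Example on Linux:
#   make -j "$(pick_jobs "$(nproc)" "$(awk '/MemTotal/ {print $2}' /proc/meminfo)")"
```

With the 4 GB, 4-processor VM described at the top, this gives at most 2 jobs.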

2. make -j 4 fails in the tools/ directory

Building and installing the components one at a time is recommended:

- OpenFst

Kaldi uses FSTs to represent its state graphs. Install OpenFst as follows:

cd tools

make openfst

- cub

cub is NVIDIA's official library for developing CUDA kernels and is currently required to build Kaldi. Install it as follows:

make cub

- Install the language model toolkits

First install IRSTLM by running:

extras/install_irstlm.sh

Then install SRILM by running:

sudo extras/install_srilm.sh

- Build the rest

make -j 4
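The point of building piece by piece is to see exactly which component fails. A minimal sketch of that idea: run_steps is a hypothetical helper that runs each command string in order and stops at the first failure, naming the step that broke.

```shell
# run_steps "CMD" ...: run each command string in order; stop and report
# at the first one that fails.
run_steps() {
  local step
  for step in "$@"; do
    echo "==> $step"
    if ! sh -c "$step"; then
      echo "FAILED: $step" >&2
      return 1
    fi
  done
}

# Example (from kaldi/tools), using the commands listed above:
#   run_steps "make openfst" "make cub" \
#             "extras/install_irstlm.sh" "sudo extras/install_srilm.sh" \
#             "make -j 4"
```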

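3. Ubuntu freezes

Not yet resolved.

III. Ubuntu 22.04

Update 2022-07-29: I recently swapped in a new SSD and set up a dual-boot system. Kaldi installed and ran successfully on the dual-boot Ubuntu, so the problem appears to have been the VM's performance.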
