Scrapy exercises

1. Crawl the articles on the cnblogs home page and print each title and link

spiders/cnblogs.py

```python
import scrapy


class CnblogsSpider(scrapy.Spider):
    name = 'cnblogs'
    allowed_domains = ['www.cnblogs.com']
    start_urls = ['http://www.cnblogs.com/']

    def parse(self, response):
        # Crawl the cnblogs home page and print each article's title and link
        # print(response.text)
        # 1. Select the tag object for every article on the current page
        # article_list = response.xpath('//article[contains(@class,"post-item")]')
        article_list = response.css('.post-item')
        # print(article_list)
        for article in article_list:
            # 2. Extract the title and the URL it points to
            title = article.css('.post-item-title::text').extract_first()
            title_url = article.css('.post-item-title::attr(href)').extract_first()
            print("""
            Article title: %s
            Article link: %s
            """ % (title, title_url))
```
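The sketch below shows offline what the two selectors return. It uses the standard library's html.parser instead of Scrapy's selectors. SAMPLE_HTML is hypothetical markup modeled on the class names used above, and the real page may differ. TitleLinkParser is an illustrative name. There is one difference from Scrapy: `::text` takes only the `<a>` element's own text nodes, while this parser joins all text inside the `<a>`. The two give the same result here because the sample links have no child tags.

```python
from html.parser import HTMLParser

# Hypothetical, simplified markup modeled on the selectors above; not real cnblogs HTML.
SAMPLE_HTML = """
<article class="post-item">
  <a class="post-item-title" href="https://www.cnblogs.com/user/p/1.html">First post</a>
</article>
<article class="post-item">
  <a class="post-item-title" href="https://www.cnblogs.com/user/p/2.html">Second post</a>
</article>
"""


class TitleLinkParser(HTMLParser):
    """Collect (text, href) for every <a> whose class list contains post-item-title."""

    def __init__(self):
        super().__init__()
        self.results = []
        self._current = None  # [text_parts, href] while inside a matching <a>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get('class') or '').split()
        if tag == 'a' and 'post-item-title' in classes:
            # Roughly what .post-item-title::attr(href) picks up
            self._current = [[], attrs.get('href')]

    def handle_data(self, data):
        # Roughly what .post-item-title::text picks up (all text inside the <a>)
        if self._current is not None:
            self._current[0].append(data)

    def handle_endtag(self, tag):
        if tag == 'a' and self._current is not None:
            text_parts, href = self._current
            # Stripped for readability; Scrapy's ::text keeps surrounding whitespace
            self.results.append((''.join(text_parts).strip(), href))
            self._current = None


parser = TitleLinkParser()
parser.feed(SAMPLE_HTML)
for title, link in parser.results:
    print(title, link)
```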

2. Crawl cnblogs articles, save each title, link, and article body to MySQL, and keep crawling for n pages

How crawling page after page works:

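After crawling each page, we use a CSS selector to check whether the page has a next-page link. If it does, we build the full next-page URL and yield a request for it back to the current callback, parse. That callback crawls the new page and looks for its next-page link in turn. When no next-page link is found, every page has been crawled and the spider finishes.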

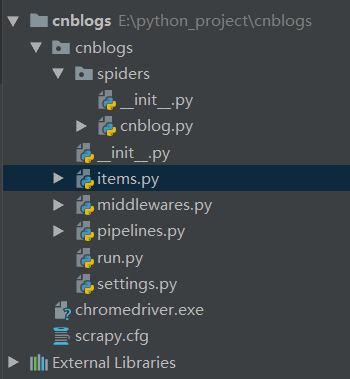
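The loop below is a minimal offline sketch of that stop condition. It uses a hypothetical FAKE_PAGES dict in place of real cnblogs responses. crawl_list_pages and its max_pages cap (one way to stop after n pages) are illustrative names and are not part of the original project. urljoin does the job of prepending https://www.cnblogs.com to a relative href.

```python
from urllib.parse import urljoin

# Hypothetical stand-in for the site: each list-page URL maps to the next-page
# href found on it, or None on the last page. Not real cnblogs data.
FAKE_PAGES = {
    'https://www.cnblogs.com/': '/sitehome/p/2',
    'https://www.cnblogs.com/sitehome/p/2': '/sitehome/p/3',
    'https://www.cnblogs.com/sitehome/p/3': None,
}


def crawl_list_pages(start_url, pages, max_pages=None):
    """Follow next-page links until none is found or max_pages pages are visited."""
    visited = []
    url = start_url
    while url is not None:
        visited.append(url)
        if max_pages is not None and len(visited) >= max_pages:
            break  # stop early after n pages
        next_href = pages[url]  # like extract_first(): None when there is no link
        # Build the next URL only when a link exists; 'https://...' + None would raise TypeError
        url = urljoin(url, next_href) if next_href else None
    return visited


print(crawl_list_pages('https://www.cnblogs.com/', FAKE_PAGES))
print(crawl_list_pages('https://www.cnblogs.com/', FAKE_PAGES, max_pages=2))
```

In the spider itself, each step of this loop is a yielded Request rather than a loop iteration. A page cap there would need a counter kept on the spider, which is again a hypothetical addition.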

Project files

spiders/cnblog.py

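```python
import scrapy
from scrapy import Request

from cnblogs.items import CnblogsItem
# from selenium import webdriver  # only needed for the commented-out browser below


class CnblogSpider(scrapy.Spider):
    name = 'cnblog'
    allowed_domains = ['www.cnblogs.com']
    start_urls = ['http://www.cnblogs.com/']
    # bro = webdriver.Chrome(executable_path='../chromedriver.exe')

    '''
    Crawl order: Scrapy's scheduler is LIFO by default, so it crawls in
    roughly depth-first order.
      - Depth-first: a stack, last in first out. The most recently yielded
        request runs next, so the crawl goes deeper before finishing
        the current level (the default).
      - Breadth-first: a queue, first in first out. Each level of pages is
        finished before the next (needs DEPTH_PRIORITY = 1 plus FIFO queues).
    '''

    def parse(self, response):
        # print(response.text)
        div_list = response.css('.post-item')
        for div in div_list:
            item = CnblogsItem()
            title = div.css('.post-item-title::text').extract_first()
            item['title'] = title
            url = div.css('.post-item-title::attr(href)').extract_first()
            item['url'] = url
            desc = ''.join(div.css('.post-item-summary::text').extract()).strip()
            item['desc'] = desc
            print(desc)
            # Go on to crawl the detail page.
            # Without callback, the response goes back to parse by default;
            # with callback, it is handled by the given parsing method.
            yield Request(url, callback=self.parser_detail, meta={'item': item})

        # Extract the next-page link. On the last page there is none, so stop there
        next_href = response.css('#paging_block>div a:last-child::attr(href)').extract_first()
        # print(next_href)
        if next_href:
            # urljoin turns the relative href into https://www.cnblogs.com/...
            yield Request(response.urljoin(next_href))

    def parser_detail(self, response):
        content = response.css('#cnblogs_post_body').extract_first()
        # print(str(content))
        # The item was built in parse and passed along through meta
        item = response.meta.get('item')
        item['content'] = content
        yield item
```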
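The order in which a stack and a queue release requests can be checked with a toy, single-threaded model. It assumes a hypothetical site with two list pages of two articles each. The parse function below only mimics the spider's yield order (details first, then the next page). Real Scrapy runs up to CONCURRENT_REQUESTS requests in parallel and uses depth-based priorities, so its actual order is only approximately this.

```python
from collections import deque


def parse(page, last_page=2):
    """Toy model of the spider's parse(): two detail requests, then the next list page."""
    reqs = [f'detail-{page}-{i}' for i in (1, 2)]
    if page < last_page:
        reqs.append(f'list-{page + 1}')
    return reqs


def crawl(lifo):
    """Process requests one at a time from a stack (lifo=True) or a queue (lifo=False)."""
    pending = deque(['list-1'])
    order = []
    while pending:
        req = pending.pop() if lifo else pending.popleft()
        order.append(req)
        if req.startswith('list-'):
            page = int(req.split('-')[1])
            pending.extend(parse(page))
    return order


print(crawl(lifo=True))   # stack: depth-first
print(crawl(lifo=False))  # queue: breadth-first
```

With the stack, the next list page was yielded last, so it is processed first and the crawl follows the pagination chain downward. With the queue, page 1's details finish before page 2 starts.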

items.py

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class CnblogsItem(scrapy.Item):
    title = scrapy.Field()
    url = scrapy.Field()
    desc = scrapy.Field()
    content = scrapy.Field()
```

pipelines.py

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html


# useful for handling different item types with a single interface
from itemadapter import ItemAdapter
import pymysql


class CnblogsMysqlPipeline:
    def open_spider(self, spider):
        # spider is the spider object being run
        print('-------', spider.name)
        # utf8mb4 so that Chinese titles and article bodies are stored correctly
        self.conn = pymysql.connect(host='127.0.0.1', user='root', password="123456",
                                    database='cnblogs', port=3306, charset='utf8mb4')

    def process_item(self, item, spider):
        # desc is a MySQL reserved word, so it is quoted with backticks
        sql = 'insert into article (title,url,content,`desc`) values (%s,%s,%s,%s)'
        # The with block closes the cursor after the insert
        with self.conn.cursor() as cursor:
            cursor.execute(sql, [item['title'], item['url'], item['content'], item['desc']])
        self.conn.commit()
        return item

    def close_spider(self, spider):
        self.conn.close()
```
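The pipeline's open_spider → process_item → close_spider lifecycle can be tried without a MySQL server. In the sketch below, the standard library's in-memory sqlite3 stands in for pymysql and a plain dict stands in for CnblogsItem. CnblogsSqlitePipeline, the table definition, and the sample values are all hypothetical. Note that SQLite uses ? placeholders where pymysql uses %s.

```python
import sqlite3
from types import SimpleNamespace


class CnblogsSqlitePipeline:
    """Same lifecycle as CnblogsMysqlPipeline, backed by an in-memory SQLite DB."""

    def open_spider(self, spider):
        self.conn = sqlite3.connect(':memory:')
        # "desc" is quoted because DESC is an SQL keyword
        self.conn.execute(
            'create table article (id integer primary key, title text, url text, '
            'content text, "desc" text)')

    def process_item(self, item, spider):
        sql = 'insert into article (title,url,content,"desc") values (?,?,?,?)'
        self.conn.execute(sql, [item['title'], item['url'], item['content'], item['desc']])
        self.conn.commit()
        return item  # hand the item on to any later pipeline

    def close_spider(self, spider):
        self.conn.close()


spider = SimpleNamespace(name='cnblog')  # stand-in for the real spider object
pipeline = CnblogsSqlitePipeline()
pipeline.open_spider(spider)
item = {'title': 'First post', 'url': 'https://www.cnblogs.com/user/p/1.html',
        'content': '<div id="cnblogs_post_body">...</div>', 'desc': 'summary'}
returned = pipeline.process_item(item, spider)
rows = pipeline.conn.execute('select title, "desc" from article').fetchall()
pipeline.close_spider(spider)
print(rows)
```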

settings.py

```python
# Scrapy settings for cnblogs project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'cnblogs'

SPIDER_MODULES = ['cnblogs.spiders']
NEWSPIDER_MODULE = 'cnblogs.spiders'


# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'cnblogs (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = False

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'cnblogs.middlewares.CnblogsSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'cnblogs.middlewares.CnblogsDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'cnblogs.pipelines.CnblogsMysqlPipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
```
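Switching to breadth-first order, or stopping the crawl early, takes a few more lines in settings.py. The setting names below are real Scrapy settings, and the three scheduler lines follow Scrapy's FAQ recipe for breadth-first crawling. The values chosen for DOWNLOAD_DELAY and CLOSESPIDER_PAGECOUNT are arbitrary examples. CLOSESPIDER_PAGECOUNT counts every response, including detail pages, so it is only a rough cap on list pages.

```python
# settings.py additions (sketch)

# Breadth-first: prioritize shallower requests and use FIFO scheduler queues
DEPTH_PRIORITY = 1
SCHEDULER_DISK_QUEUE = 'scrapy.squeues.PickleFifoDiskQueue'
SCHEDULER_MEMORY_QUEUE = 'scrapy.squeues.FifoMemoryQueue'

# Wait this many seconds between requests to the same site (example value)
DOWNLOAD_DELAY = 1

# Close the spider after this many responses, detail pages included (example value)
CLOSESPIDER_PAGECOUNT = 50
```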

run.py

```python
from scrapy.cmdline import execute

# Same as running `scrapy crawl cnblog --nolog` from the project root
execute(['scrapy', 'crawl', 'cnblog', '--nolog'])
```

3. Log in to dig.chouti.com with Selenium, then reuse its cookies in requests to upvote posts

```python
import time

import requests
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

bro = webdriver.Chrome(service=Service('./chromedriver.exe'))
bro.implicitly_wait(10)
bro.get('https://dig.chouti.com/')
login_b = bro.find_element(By.ID, 'login_btn')
print(login_b)
login_b.click()
# Replace with your own account
username = bro.find_element(By.NAME, 'phone')
username.send_keys('YOUR_PHONE')
password = bro.find_element(By.NAME, 'password')
password.send_keys('YOUR_PASSWORD')
button = bro.find_element(By.CSS_SELECTOR, 'button.login-btn')
button.click()
# A captcha may appear; solve it by hand during this pause
time.sleep(10)
my_cookie = bro.get_cookies()  # a list of dicts
print(my_cookie)
bro.close()

# This cookie list is not a dict, so requests can't use it directly; convert it first
cookie = {}
for item in my_cookie:
    cookie[item['name']] = item['value']

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/84.0.4147.105 Safari/537.36',
    'Referer': 'https://dig.chouti.com/'}
# ret = requests.get('https://dig.chouti.com/', headers=headers)
# print(ret.text)
ret = requests.get('https://dig.chouti.com/top/24hr?_=1596677637670', headers=headers)
print(ret.json())
ll = []
for item in ret.json()['data']:
    ll.append(item['id'])
print(ll)
# Upvote every post in the 24-hour hot list using the logged-in cookies
for link_id in ll:
    ret = requests.post('https://dig.chouti.com/link/vote', headers=headers,
                        cookies=cookie, data={'linkId': link_id})
    print(ret.text)

# Posting a comment: https://dig.chouti.com/comments/create
# Form data:
#   content: Well said
#   linkId: 29829529
#   parentId: 0
```
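The cookie conversion step can be pulled into a small helper. Selenium's get_cookies() returns a list of dicts with name, value, domain, path and other keys. requests accepts a {name: value} dict through cookies=, or a raw Cookie header string. Both helper names are hypothetical, and the sample cookie values in the usage are made up.

```python
def selenium_cookies_to_dict(selenium_cookies):
    """Turn the list returned by driver.get_cookies() into a {name: value} dict."""
    return {c['name']: c['value'] for c in selenium_cookies}


def cookie_header(cookie_dict):
    """Build a Cookie header string ('a=1; b=2') from a {name: value} dict."""
    return '; '.join(f'{name}={value}' for name, value in cookie_dict.items())


# Made-up sample in the shape Selenium returns
sample = [
    {'name': 'token', 'value': 'abc', 'domain': '.chouti.com', 'path': '/'},
    {'name': 'uid', 'value': '42', 'domain': '.chouti.com', 'path': '/'},
]
cookies = selenium_cookies_to_dict(sample)
print(cookies)
print(cookie_header(cookies))
```

Either form can be sent: `requests.post(url, cookies=cookies)` or `headers={'Cookie': cookie_header(cookies)}`.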