Shadows in Unity (Part 3): Receive Shadows

This is the third article in the Shadows in Unity series. The previous article covered shadow casting; this one focuses on shadow receiving.

SHADOW_COORDS

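Unity's built-in macros and APIs can sample the shadow map so that shadows render correctly in the ForwardBase and ForwardAdd passes. First, use the SHADOW_COORDS macro to declare the per-vertex shadow coordinate that the fragment shader needs: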

```hlsl
struct Interpolators {
    float4 pos : SV_POSITION;
    float4 uv : TEXCOORD0;
    float3 normal : TEXCOORD1;
    float3 tangent : TEXCOORD2;
    float3 binormal : TEXCOORD3;
    float3 worldPos : TEXCOORD4;
    SHADOW_COORDS(5)
};
```

SHADOW_COORDS is defined as follows:

```hlsl
// Directional light
#if defined (SHADOWS_SCREEN)
#define SHADOW_COORDS(idx1) unityShadowCoord4 _ShadowCoord : TEXCOORD##idx1;
#endif

// Spot light
#if defined (SHADOWS_DEPTH) && defined (SPOT)
#define SHADOW_COORDS(idx1) unityShadowCoord4 _ShadowCoord : TEXCOORD##idx1;
#endif

// Point light
#if defined (SHADOWS_CUBE)
#define SHADOW_COORDS(idx1) unityShadowCoord3 _ShadowCoord : TEXCOORD##idx1;
#endif

// Shadows disabled
#if !defined (SHADOWS_SCREEN) && !defined (SHADOWS_DEPTH) && !defined (SHADOWS_CUBE)
#define SHADOW_COORDS(idx1)
#endif
```

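Next, use the TRANSFER_SHADOW macro in the vertex shader to fill in the shadow coordinate. The macro expands to code that refers to `v.vertex` and `a.pos`. Because of this, the vertex input parameter must be named `v`, and `pos` must already hold the clip-space position when the macro runs: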

```hlsl
Interpolators MyVertexProgram (VertexData v) {
    Interpolators i;
    ...
    TRANSFER_SHADOW(i);
    return i;
}
```

TRANSFER_SHADOW

TRANSFER_SHADOW is defined as follows:

```hlsl
// Directional light
#if defined (SHADOWS_SCREEN)
    // Shadow map not in screen space
    #if defined(UNITY_NO_SCREENSPACE_SHADOWS)
        #define TRANSFER_SHADOW(a) a._ShadowCoord = mul( unity_WorldToShadow[0], mul( unity_ObjectToWorld, v.vertex ) );
    #else
        #define TRANSFER_SHADOW(a) a._ShadowCoord = ComputeScreenPos(a.pos);
    #endif
#endif

// Spot light
#if defined (SHADOWS_DEPTH) && defined (SPOT)
    #define TRANSFER_SHADOW(a) a._ShadowCoord = mul (unity_WorldToShadow[0], mul(unity_ObjectToWorld,v.vertex));
#endif

// Point light
#if defined (SHADOWS_CUBE)
    #define TRANSFER_SHADOW(a) a._ShadowCoord.xyz = mul(unity_ObjectToWorld, v.vertex).xyz - _LightPositionRange.xyz;
#endif

// Shadows disabled
#if !defined (SHADOWS_SCREEN) && !defined (SHADOWS_DEPTH) && !defined (SHADOWS_CUBE)
    #define TRANSFER_SHADOW(a)
#endif
```

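For non-screen-space directional shadows and for spot-light shadows, the shadow coordinate is easy to compute. The vertex is transformed into world space and then into the light's clip space (unity_WorldToShadow[0]). For screen-space directional shadows, the vertex has to be converted to a screen position instead: ComputeScreenPos remaps the clip-space xy from [-w, w] to [0, w]. Point lights also need special handling, because their shadow map is a cubemap. Here the shadow coordinate is simply the vector from the point light to the vertex position.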
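As a sanity check on these conventions, the following Python sketch redoes the math on the CPU. It is a minimal sketch with two assumptions. First, ComputeScreenPos is taken to reduce to its non-stereo form (ComputeNonStereoScreenPos in UnityCG.cginc). Second, `projection_sign`, a stand-in for _ProjectionParams.x, is +1. All function names are hypothetical.

```python
def compute_screen_pos(clip_pos, projection_sign=1.0):
    """Sketch of non-stereo ComputeScreenPos: clip-space xy in [-w, w] -> [0, w].

    projection_sign is a hypothetical stand-in for _ProjectionParams.x (+1 or -1).
    """
    x, y, z, w = clip_pos
    # o = pos * 0.5; o.xy = float2(o.x, o.y * _ProjectionParams.x) + o.w; o.zw = pos.zw
    sx = 0.5 * x + 0.5 * w
    sy = 0.5 * y * projection_sign + 0.5 * w
    return (sx, sy, z, w)


def screen_uv(screen_pos):
    """The divide by w that tex2Dproj performs later: [0, w] -> [0, 1]."""
    sx, sy, _, w = screen_pos
    return (sx / w, sy / w)


def point_light_shadow_vec(world_pos, light_pos):
    """TRANSFER_SHADOW for SHADOWS_CUBE: the vector from the light to the vertex."""
    return tuple(p - l for p, l in zip(world_pos, light_pos))
```

For example, a vertex on the left edge of the view (x = -w) lands at u = 0 after the divide, and one at the center (x = 0) lands at u = 0.5.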

Finally, use the SHADOW_ATTENUATION macro in the fragment shader to sample the shadow:

```hlsl
float4 MyFragmentProgram (Interpolators i) : SV_TARGET {
    ...
    fixed shadow = SHADOW_ATTENUATION(i);
    return fixed4(ambient + (diffuse + specular) * atten * shadow, 1.0);
}
```

SHADOW_ATTENUATION for directional lights

SHADOW_ATTENUATION is defined as follows:

```hlsl
// Directional light
#if defined (SHADOWS_SCREEN)
    #define SHADOW_ATTENUATION(a) unitySampleShadow(a._ShadowCoord)
#endif

// Spot light
#if defined (SHADOWS_DEPTH) && defined (SPOT)
    #define SHADOW_ATTENUATION(a) UnitySampleShadowmap(a._ShadowCoord)
#endif

// Point light
#if defined (SHADOWS_CUBE)
    #define SHADOW_ATTENUATION(a) UnitySampleShadowmap(a._ShadowCoord)
#endif

// Shadows disabled
#if !defined (SHADOWS_SCREEN) && !defined (SHADOWS_DEPTH) && !defined (SHADOWS_CUBE)
    #define SHADOW_ATTENUATION(a) 1.0
#endif
```

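Unity uses different built-in functions for different light types. Start with directional lights. As with TRANSFER_SHADOW, Unity has two mechanisms, depending on whether screen-space shadow maps are supported: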

```hlsl
#if defined(UNITY_NO_SCREENSPACE_SHADOWS)

inline fixed unitySampleShadow (unityShadowCoord4 shadowCoord)
{
    #if defined(SHADOWS_NATIVE)
        fixed shadow = UNITY_SAMPLE_SHADOW(_ShadowMapTexture, shadowCoord.xyz);
        shadow = _LightShadowData.r + shadow * (1-_LightShadowData.r);
        return shadow;
    #else
        unityShadowCoord dist = SAMPLE_DEPTH_TEXTURE(_ShadowMapTexture, shadowCoord.xy);
        // tegra is confused if we use _LightShadowData.x directly
        // with "ambiguous overloaded function reference max(mediump float, float)"
        unityShadowCoord lightShadowDataX = _LightShadowData.x;
        unityShadowCoord threshold = shadowCoord.z;
        return max(dist > threshold, lightShadowDataX);
    #endif
}

#else // UNITY_NO_SCREENSPACE_SHADOWS

inline fixed unitySampleShadow (unityShadowCoord4 shadowCoord)
{
    fixed shadow = UNITY_SAMPLE_SCREEN_SHADOW(_ShadowMapTexture, shadowCoord);
    return shadow;
}

#endif
```

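First consider the case without screen-space shadow maps. The SHADOWS_NATIVE macro indicates whether the current platform supports shadow comparison samplers. If it does, we can use a sampler of type SamplerComparisonState, and UNITY_SAMPLE_SHADOW is defined as: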

```hlsl
#define UNITY_SAMPLE_SHADOW(tex,coord) tex.SampleCmpLevelZero (sampler##tex,(coord).xy,(coord).z)
```

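SampleCmpLevelZero samples the texture at the given coordinate (mip level 0). It compares the stored depth against the reference value z that is passed in (the current fragment's depth in light space), using the comparison function configured on the sampler. If the fragment is no farther from the light than the stored occluder depth, the comparison passes and the fragment is lit. Otherwise it fails and the fragment is in shadow.

More than one texel may take part in this, depending on the filter mode. With bilinear filtering, the hardware compares the four surrounding texels and blends the results, returning a value between 0 and 1. In effect, shadow values are blended in hardware, which smooths shadow edges and avoids a hard, aliased look. At a shadow edge, some neighboring comparisons pass and others fail, and that is exactly the region that needs smoothing.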
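The sketch below emulates a bilinear comparison sampler in Python to illustrate what the hardware does here. It assumes a LESS_EQUAL comparison with conventional (non-reversed) depth, clamp-to-edge addressing, and ideal floating-point filtering. Real GPUs use limited sub-texel precision, so treat it as an approximation. `sample_cmp_bilinear` is a hypothetical name.

```python
import math


def compare_lequal(ref_depth, stored_depth):
    """LESS_EQUAL comparison: 1.0 (lit) if the receiver is not behind the occluder."""
    return 1.0 if ref_depth <= stored_depth else 0.0


def sample_cmp_bilinear(shadow_map, u, v, ref_depth):
    """Software stand-in for SampleCmpLevelZero with a bilinear comparison sampler.

    shadow_map[row][col] holds occluder depths (0 = near, 1 = far).
    The 2x2 texels around (u, v) are compared first; the 0/1 results are then
    blended with ordinary bilinear weights, so the result lies in [0, 1].
    """
    height, width = len(shadow_map), len(shadow_map[0])
    # Texel centers sit at (i + 0.5) / size, hence the -0.5.
    tx, ty = u * width - 0.5, v * height - 0.5
    x0, y0 = math.floor(tx), math.floor(ty)
    fx, fy = tx - x0, ty - y0

    def cmp_at(x, y):
        # Clamp-to-edge addressing, then compare (comparison happens before filtering).
        x = min(max(x, 0), width - 1)
        y = min(max(y, 0), height - 1)
        return compare_lequal(ref_depth, shadow_map[y][x])

    top = cmp_at(x0, y0) * (1 - fx) + cmp_at(x0 + 1, y0) * fx
    bottom = cmp_at(x0, y0 + 1) * (1 - fx) + cmp_at(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy
```

Consider a 4x4 map whose left half holds an occluder at depth 0.2. A receiver at depth 0.5 sampled exactly on the boundary returns 0.5, a partially shadowed edge rather than a hard 0/1 step.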

After obtaining the shadow value, the code uses a four-component vector named _LightShadowData. Looking it up gives the following:

```csharp
_LightShadowData = new Vector4(
    1 - light.shadowStrength, // x = 1.0 - shadowStrength
    Mathf.Max(camera.farClipPlane / QualitySettings.shadowDistance, 1.0f), // y = max(cameraFarClip / shadowDistance, 1.0) // but not used in current built-in shader codebase
    5.0f / Mathf.Min(camera.farClipPlane, QualitySettings.shadowDistance), // z = shadow bias
    -1.0f * (2.0f + camera.fieldOfView / 180.0f * 2.0f) // w = -1.0f * (2.0f + camera.fieldOfView / 180.0f * 2.0f) // fov is regarded as 0 when orthographic.
);
```

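So _LightShadowData.r is 1 - shadowStrength, not the shadow strength itself. The formula is a simple interpolation that constrains the shadow value to [1 - shadowStrength, 1], i.e. lerp(_LightShadowData.r, 1, shadow).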

If the platform does not support shadow comparison samplers, Unity falls back to the SAMPLE_DEPTH_TEXTURE macro:

```hlsl
# define SAMPLE_DEPTH_TEXTURE(sampler, uv) (tex2D(sampler, uv).r)
```

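What follows is a blunt depth comparison. If the stored depth is greater than the fragment's depth, the fragment is not in shadow and the result is 1. Otherwise the result is the lower bound 1 - shadowStrength. The larger shadowStrength is, the darker the shadow.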
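The two non-screen-space directional paths can be written out in Python as below. `apply_shadow_strength` and `hard_shadow_no_native` are hypothetical names. The sketch assumes _LightShadowData.x = 1 - shadowStrength, as quoted above.

```python
def apply_shadow_strength(raw_shadow, shadow_strength):
    """SHADOWS_NATIVE path: r + raw * (1 - r), with r = _LightShadowData.r."""
    r = 1.0 - shadow_strength  # _LightShadowData.x = 1 - shadowStrength
    return r + raw_shadow * (1.0 - r)  # identical to lerp(r, 1, raw_shadow)


def hard_shadow_no_native(stored_depth, receiver_depth, shadow_strength):
    """Non-native path: max(dist > threshold, _LightShadowData.x)."""
    r = 1.0 - shadow_strength
    lit = 1.0 if stored_depth > receiver_depth else 0.0  # occluder is farther -> lit
    return max(lit, r)
```

With shadowStrength = 0.8, a fully shadowed fragment ends up at 0.2 in both paths and a lit one at 1.0. Raising the strength lowers that floor and darkens the shadow.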

Next, the screen-space shadow map case. Unity samples in screen space with the UNITY_SAMPLE_SCREEN_SHADOW macro:

```hlsl
#define UNITY_SAMPLE_SCREEN_SHADOW(tex, uv) tex2Dproj( tex, UNITY_PROJ_COORD(uv) ).r
```

This is just a tex2Dproj call. The coordinate we pass in lies in [0, w], so dividing by w brings it into [0, 1].

SHADOW_ATTENUATION for spot lights

The sampling function for spot lights is defined as follows:

```hlsl
inline fixed UnitySampleShadowmap (float4 shadowCoord)
{
    #if defined (SHADOWS_SOFT)

        half shadow = 1;

        // No hardware comparison sampler (ie some mobile + xbox360) : simple 4 tap PCF
        #if !defined (SHADOWS_NATIVE)
            float3 coord = shadowCoord.xyz / shadowCoord.w;
            float4 shadowVals;
            shadowVals.x = SAMPLE_DEPTH_TEXTURE(_ShadowMapTexture, coord + _ShadowOffsets[0].xy);
            shadowVals.y = SAMPLE_DEPTH_TEXTURE(_ShadowMapTexture, coord + _ShadowOffsets[1].xy);
            shadowVals.z = SAMPLE_DEPTH_TEXTURE(_ShadowMapTexture, coord + _ShadowOffsets[2].xy);
            shadowVals.w = SAMPLE_DEPTH_TEXTURE(_ShadowMapTexture, coord + _ShadowOffsets[3].xy);
            half4 shadows = (shadowVals < coord.zzzz) ? _LightShadowData.rrrr : 1.0f;
            shadow = dot(shadows, 0.25f);
        #else
            // Mobile with comparison sampler : 4-tap linear comparison filter
            #if defined(SHADER_API_MOBILE)
                float3 coord = shadowCoord.xyz / shadowCoord.w;
                half4 shadows;
                shadows.x = UNITY_SAMPLE_SHADOW(_ShadowMapTexture, coord + _ShadowOffsets[0]);
                shadows.y = UNITY_SAMPLE_SHADOW(_ShadowMapTexture, coord + _ShadowOffsets[1]);
                shadows.z = UNITY_SAMPLE_SHADOW(_ShadowMapTexture, coord + _ShadowOffsets[2]);
                shadows.w = UNITY_SAMPLE_SHADOW(_ShadowMapTexture, coord + _ShadowOffsets[3]);
                shadow = dot(shadows, 0.25f);
            // Everything else
            #else
                float3 coord = shadowCoord.xyz / shadowCoord.w;
                float3 receiverPlaneDepthBias = UnityGetReceiverPlaneDepthBias(coord, 1.0f);
                shadow = UnitySampleShadowmap_PCF3x3(float4(coord, 1), receiverPlaneDepthBias);
            #endif
            shadow = lerp(_LightShadowData.r, 1.0f, shadow);
        #endif

    #else

        // 1-tap shadows
        #if defined (SHADOWS_NATIVE)
            half shadow = UNITY_SAMPLE_SHADOW_PROJ(_ShadowMapTexture, shadowCoord);
            shadow = lerp(_LightShadowData.r, 1.0f, shadow);
        #else
            half shadow = SAMPLE_DEPTH_TEXTURE_PROJ(_ShadowMapTexture, UNITY_PROJ_COORD(shadowCoord)) < (shadowCoord.z / shadowCoord.w) ? _LightShadowData.r : 1.0;
        #endif

    #endif

    return shadow;
}
```

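The SHADOWS_SOFT macro indicates whether soft shadows are enabled. If they are, Unity further checks whether the hardware supports shadow comparison samplers. If it does not, the shadow edges have to be blended manually (percentage closer filtering, PCF). shadowCoord.xyzw holds the shadow clip-space coordinate in light space, so dividing by w performs the perspective divide by hand. _ShadowOffsets is an array of four float4 vectors holding the offsets of the taps around the sample point. Looking it up, it is defined as follows: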

```
float4 offsets;
float offX = 0.5f / _ShadowMapTexture_TexelSize.z;
float offY = 0.5f / _ShadowMapTexture_TexelSize.w;
offsets.z = 0.0f; offsets.w = 0.0f;

offsets.x = -offX; offsets.y = -offY;
_ShadowOffsets[0] = offsets;

offsets.x = offX; offsets.y = -offY;
_ShadowOffsets[1] = offsets;

offsets.x = -offX; offsets.y = offY;
_ShadowOffsets[2] = offsets;

offsets.x = offX; offsets.y = offY;
_ShadowOffsets[3] = offsets;
```

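In short, Unity samples the points half a texel away from the sample point along the four diagonals and blends the comparison results by hand. Here it simply averages the four results.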
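Here is a Python sketch of this 4-tap software PCF (the SHADOWS_SOFT && !SHADOWS_NATIVE branch). It assumes point sampling with clamp-to-edge addressing for SAMPLE_DEPTH_TEXTURE, and a coordinate that has already been divided by w. The shadow map is a plain list of rows, and the function names are hypothetical.

```python
import math


def shadow_offsets(width, height):
    """The four _ShadowOffsets: half a texel along each diagonal (only .xy matters)."""
    off_x = 0.5 / width   # 0.5 / _ShadowMapTexture_TexelSize.z
    off_y = 0.5 / height  # 0.5 / _ShadowMapTexture_TexelSize.w
    return [(-off_x, -off_y), (off_x, -off_y), (-off_x, off_y), (off_x, off_y)]


def sample_depth_point(shadow_map, u, v):
    """SAMPLE_DEPTH_TEXTURE with point filtering and clamp-to-edge addressing."""
    height, width = len(shadow_map), len(shadow_map[0])
    x = min(max(math.floor(u * width), 0), width - 1)
    y = min(max(math.floor(v * height), 0), height - 1)
    return shadow_map[y][x]


def spot_soft_shadow_4tap(shadow_map, coord, shadow_strength):
    """4-tap PCF: coord is (u, v, z) after the manual perspective divide."""
    height, width = len(shadow_map), len(shadow_map[0])
    u, v, z = coord
    r = 1.0 - shadow_strength  # _LightShadowData.r
    total = 0.0
    for du, dv in shadow_offsets(width, height):
        stored = sample_depth_point(shadow_map, u + du, v + dv)
        # (shadowVals < coord.zzzz) ? _LightShadowData.rrrr : 1.0f
        total += r if stored < z else 1.0
    return total * 0.25  # dot(shadows, 0.25f)
```

Unlike the hardware path, each tap here is a hard 0/1 decision (mapped to r or 1). The smoothing comes only from averaging four taps, so the edge has at most five distinct levels.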

When comparison samplers are supported on a mobile platform, Unity runs an extra layer of percentage closer filtering on top of the hardware-filtered depth comparisons, averaging the four taps.

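In all other (non-mobile) cases, Unity first computes a per-fragment depth bias with UnityGetReceiverPlaneDepthBias. As the comment in the code explains, this bias only takes effect when UNITY_USE_RECEIVER_PLANE_BIAS is defined, which is off by default: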

```hlsl
/**
* Computes the receiver plane depth bias for the given shadow coord in screen space.
* Inspirations:
* http://mynameismjp.wordpress.com/2013/09/10/shadow-maps/
* http://amd-dev.wpengine.netdna-cdn.com/wordpress/media/2012/10/Isidoro-ShadowMapping.pdf
*/
float3 UnityGetReceiverPlaneDepthBias(float3 shadowCoord, float biasMultiply)
{
    // Should receiver plane bias be used? This estimates receiver slope using derivatives,
    // and tries to tilt the PCF kernel along it. However, when doing it in screenspace from the depth texture
    // (ie all light in deferred and directional light in both forward and deferred), the derivatives are wrong
    // on edges or intersections of objects, leading to shadow artifacts. Thus it is disabled by default.
    float3 biasUVZ = 0;

#if defined(UNITY_USE_RECEIVER_PLANE_BIAS) && defined(SHADOWMAPSAMPLER_AND_TEXELSIZE_DEFINED)
    float3 dx = ddx(shadowCoord);
    float3 dy = ddy(shadowCoord);

    biasUVZ.x = dy.y * dx.z - dx.y * dy.z;
    biasUVZ.y = dx.x * dy.z - dy.x * dx.z;
    biasUVZ.xy *= biasMultiply / ((dx.x * dy.y) - (dx.y * dy.x));

    // Static depth biasing to make up for incorrect fractional sampling on the shadow map grid.
    const float UNITY_RECEIVER_PLANE_MIN_FRACTIONAL_ERROR = 0.01f;
    float fractionalSamplingError = dot(_ShadowMapTexture_TexelSize.xy, abs(biasUVZ.xy));
    biasUVZ.z = -min(fractionalSamplingError, UNITY_RECEIVER_PLANE_MIN_FRACTIONAL_ERROR);
    #if defined(UNITY_REVERSED_Z)
        biasUVZ.z *= -1;
    #endif
#endif

    return biasUVZ;
}
```

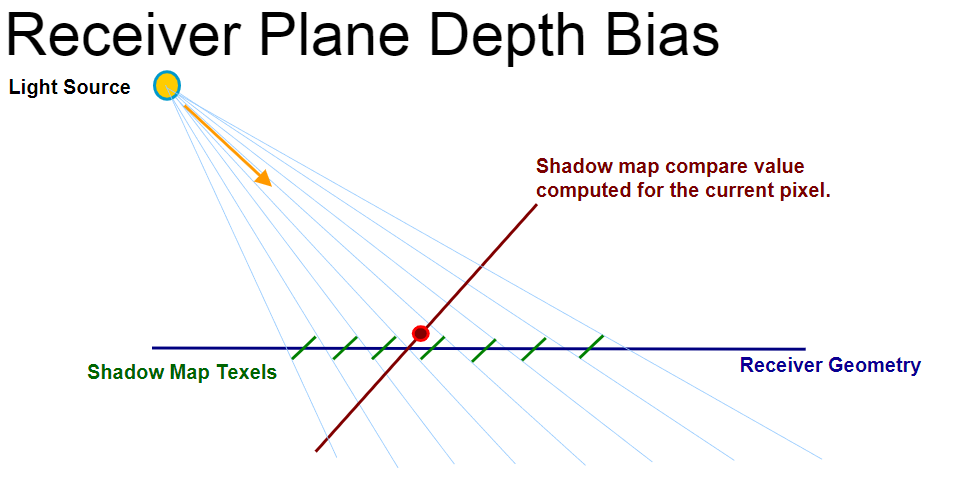

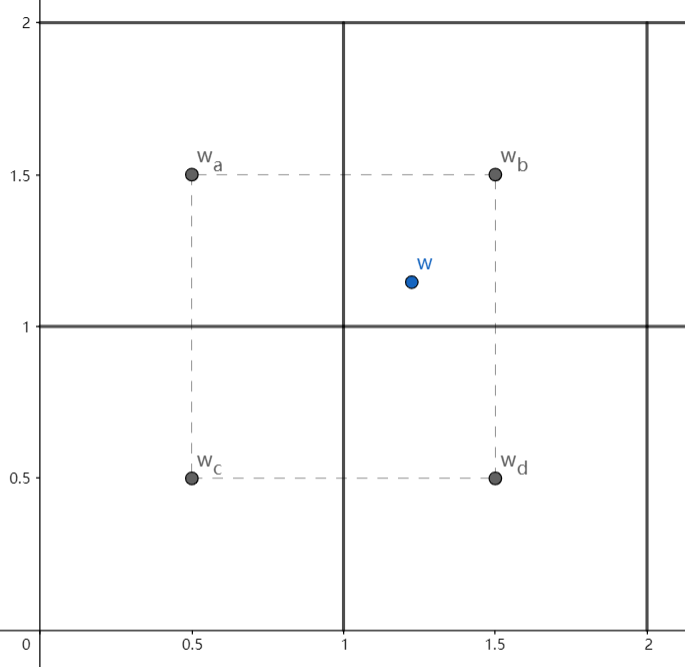

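This function is based on a GDC 2006 talk, which is listed in the references. It addresses the shadow acne that PCF produces when the kernel is large (a wide sampling footprint) or when the light hits a surface at a grazing angle, nearly parallel to it, as shown in the figures: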

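Suppose we compute the current texel's light-space depth once and sample the shadow map only once. Clearly the current depth is then less than the value stored in the shadow map, so the texel is not in shadow. PCF changes this. It also reads the depths of several texels to the left in the shadow map, all smaller than the current texel's depth, so after blending the current texel is judged to be in shadow. That result is clearly wrong. The cause is that we computed the current texel's light-space depth only once, yet compared it against several surrounding depths.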

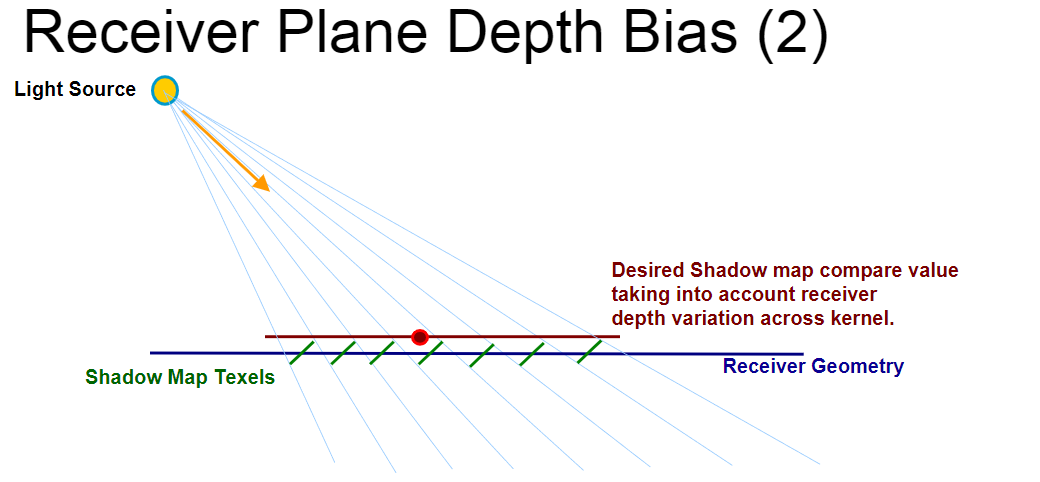

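The ideal situation is shown above. For every shadow-map tap in PCF, we recompute a depth bias and adjust the current texel's light-space depth with it, which removes the acne PCF would otherwise introduce. The question then becomes how to compute this depth bias, using the figure as a guide: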

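Since we are already in the fragment stage of the shadow-receiving pass, the depth bias here moves the receiver forward rather than backward. CD is the original plane. From the earlier calculation, segment DF only needs to move to GH to be free of acne, so its bias is the length of DG. For segment CF, moving by DG is not enough, because the kernel also reads the shadow map over region AJ. The shadow depth stored there is LM, and since LM <= EH, CF would be judged to be in shadow. From the figure, moving CF to NI removes the acne, so the bias here is the length of IF.

What is IF? It is the difference between the shadow depths stored in two adjacent shadow-map texels. More generally, once we know how the shadow depth changes as the texture coordinate changes, we can easily compute the depth bias needed for any tap around the current texel. Mathematically that rate of change is a derivative, and since the texture coordinate is two-dimensional, dz can be written as a vector:

\[ dz = \left( \frac{\partial z}{\partial u},\ \frac{\partial z}{\partial v} \right) \]

Fortunately, the fragment shader provides derivative instructions. Modern GPUs do not rasterize one pixel at a time but process pixels in 2x2 quads. In a Unity shader, ddx and ddy give the derivative of any fragment attribute along the screen x or y direction. There is a catch, though: ddx and ddy only give derivatives with respect to screen-space x and y, while we need them in texture space. How do we get there?

We can use a change of coordinates to transform between the two derivative spaces. By the chain rule:

\[ \frac{\partial z}{\partial x} = \frac{\partial z}{\partial u}\frac{\partial u}{\partial x} + \frac{\partial z}{\partial v}\frac{\partial v}{\partial x}, \qquad \frac{\partial z}{\partial y} = \frac{\partial z}{\partial u}\frac{\partial u}{\partial y} + \frac{\partial z}{\partial v}\frac{\partial v}{\partial y} \]

In matrix form:

\[ \begin{pmatrix} \frac{\partial z}{\partial x} \\ \frac{\partial z}{\partial y} \end{pmatrix} = \begin{pmatrix} \frac{\partial u}{\partial x} & \frac{\partial v}{\partial x} \\ \frac{\partial u}{\partial y} & \frac{\partial v}{\partial y} \end{pmatrix} \begin{pmatrix} \frac{\partial z}{\partial u} \\ \frac{\partial z}{\partial v} \end{pmatrix} \]

Back to dz. In coordinate-system terms, we want the coordinates of dz in the tangent space spanned by du and dv. What we actually know are its coordinates in the tangent space spanned by dx and dy, so the problem reduces to inverting the matrix:

\[ \begin{pmatrix} \frac{\partial z}{\partial u} \\ \frac{\partial z}{\partial v} \end{pmatrix} = \frac{1}{\frac{\partial u}{\partial x}\frac{\partial v}{\partial y} - \frac{\partial v}{\partial x}\frac{\partial u}{\partial y}} \begin{pmatrix} \frac{\partial v}{\partial y} & -\frac{\partial v}{\partial x} \\ -\frac{\partial u}{\partial y} & \frac{\partial u}{\partial x} \end{pmatrix} \begin{pmatrix} \frac{\partial z}{\partial x} \\ \frac{\partial z}{\partial y} \end{pmatrix} \]

With the transformation matrix in hand, we can finally read the code. Given the math above, the code is simply computing dz. With dx = ddx(shadowCoord) and dy = ddy(shadowCoord), biasUVZ.xy is exactly the inverse-matrix product above. Unity provides a biasMultiply parameter to scale dz. It also adds a static depth offset, proportional to |dz| times the texel size and capped at 0.01, to compensate for fractional sampling error on the shadow-map grid. This static offset is positive with reversed Z and negative otherwise.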
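The derivation can be checked numerically. The Python function below mirrors UnityGetReceiverPlaneDepthBias as if UNITY_USE_RECEIVER_PLANE_BIAS were enabled. `dx` and `dy` play the role of ddx/ddy of the shadow coordinate (u, v, z), and the function name is hypothetical.

```python
def receiver_plane_depth_bias(dx, dy, texel_size, bias_multiply=1.0, reversed_z=False):
    """Sketch of UnityGetReceiverPlaneDepthBias with the feature switched on.

    dx, dy: screen-space derivatives of (u, v, z).
    texel_size: (1 / width, 1 / height), i.e. _ShadowMapTexture_TexelSize.xy.
    Returns (dz/du, dz/dv, static z bias).
    """
    # Determinant of the uv Jacobian [[du/dx, dv/dx], [du/dy, dv/dy]].
    det = dx[0] * dy[1] - dx[1] * dy[0]
    # Rows of the inverse Jacobian applied to (dz/dx, dz/dy).
    dz_du = (dy[1] * dx[2] - dx[1] * dy[2]) * bias_multiply / det
    dz_dv = (dx[0] * dy[2] - dy[0] * dx[2]) * bias_multiply / det
    # Static bias for fractional sampling error, capped at 0.01.
    fractional_error = texel_size[0] * abs(dz_du) + texel_size[1] * abs(dz_dv)
    z_bias = -min(fractional_error, 0.01)
    if reversed_z:
        z_bias = -z_bias
    return dz_du, dz_dv, z_bias
```

Feed in the derivatives of a plane z = 0.5u + 0.25v, taken through any non-degenerate uv Jacobian, and it returns (0.5, 0.25) for the slopes, which is what the inverse matrix promises.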

After computing the bias, Unity passes it to a function named UnitySampleShadowmap_PCF3x3, defined as follows:

```hlsl
half UnitySampleShadowmap_PCF3x3(float4 coord, float3 receiverPlaneDepthBias)
{
    return UnitySampleShadowmap_PCF3x3Tent(coord, receiverPlaneDepthBias);
}

/**
* PCF tent shadowmap filtering based on a 3x3 kernel (optimized with 4 taps)
*/
half UnitySampleShadowmap_PCF3x3Tent(float4 coord, float3 receiverPlaneDepthBias)
{
    half shadow = 1;

#ifdef SHADOWMAPSAMPLER_AND_TEXELSIZE_DEFINED

    #ifndef SHADOWS_NATIVE
        // when we don't have hardware PCF sampling, fallback to a simple 3x3 sampling with averaged results.
        return UnitySampleShadowmap_PCF3x3NoHardwareSupport(coord, receiverPlaneDepthBias);
    #endif

    // tent base is 3x3 base thus covering from 9 to 12 texels, thus we need 4 bilinear PCF fetches
    float2 tentCenterInTexelSpace = coord.xy * _ShadowMapTexture_TexelSize.zw;
    float2 centerOfFetchesInTexelSpace = floor(tentCenterInTexelSpace + 0.5);
    float2 offsetFromTentCenterToCenterOfFetches = tentCenterInTexelSpace - centerOfFetchesInTexelSpace;

    // find the weight of each texel based
    float4 texelsWeightsU, texelsWeightsV;
    _UnityInternalGetWeightPerTexel_3TexelsWideTriangleFilter(offsetFromTentCenterToCenterOfFetches.x, texelsWeightsU);
    _UnityInternalGetWeightPerTexel_3TexelsWideTriangleFilter(offsetFromTentCenterToCenterOfFetches.y, texelsWeightsV);

    // each fetch will cover a group of 2x2 texels, the weight of each group is the sum of the weights of the texels
    float2 fetchesWeightsU = texelsWeightsU.xz + texelsWeightsU.yw;
    float2 fetchesWeightsV = texelsWeightsV.xz + texelsWeightsV.yw;

    // move the PCF bilinear fetches to respect texels weights
    float2 fetchesOffsetsU = texelsWeightsU.yw / fetchesWeightsU.xy + float2(-1.5,0.5);
    float2 fetchesOffsetsV = texelsWeightsV.yw / fetchesWeightsV.xy + float2(-1.5,0.5);
    fetchesOffsetsU *= _ShadowMapTexture_TexelSize.xx;
    fetchesOffsetsV *= _ShadowMapTexture_TexelSize.yy;

    // fetch !
    float2 bilinearFetchOrigin = centerOfFetchesInTexelSpace * _ShadowMapTexture_TexelSize.xy;
    shadow = fetchesWeightsU.x * fetchesWeightsV.x * UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(bilinearFetchOrigin, float2(fetchesOffsetsU.x, fetchesOffsetsV.x), coord.z, receiverPlaneDepthBias));
    shadow += fetchesWeightsU.y * fetchesWeightsV.x * UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(bilinearFetchOrigin, float2(fetchesOffsetsU.y, fetchesOffsetsV.x), coord.z, receiverPlaneDepthBias));
    shadow += fetchesWeightsU.x * fetchesWeightsV.y * UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(bilinearFetchOrigin, float2(fetchesOffsetsU.x, fetchesOffsetsV.y), coord.z, receiverPlaneDepthBias));
    shadow += fetchesWeightsU.y * fetchesWeightsV.y * UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(bilinearFetchOrigin, float2(fetchesOffsetsU.y, fetchesOffsetsV.y), coord.z, receiverPlaneDepthBias));
#endif

    return shadow;
}
```

If the hardware does not support shadow comparison samplers, Unity samples with UnitySampleShadowmap_PCF3x3NoHardwareSupport instead:

```hlsl
/**
* PCF gaussian shadowmap filtering based on a 3x3 kernel (9 taps no PCF hardware support)
*/
half UnitySampleShadowmap_PCF3x3NoHardwareSupport(float4 coord, float3 receiverPlaneDepthBias)
{
    half shadow = 1;

#ifdef SHADOWMAPSAMPLER_AND_TEXELSIZE_DEFINED
    // when we don't have hardware PCF sampling, then the above 5x5 optimized PCF really does not work.
    // Fallback to a simple 3x3 sampling with averaged results.
    float2 base_uv = coord.xy;
    float2 ts = _ShadowMapTexture_TexelSize.xy;
    shadow = 0;
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(-ts.x, -ts.y), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(0, -ts.y), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(ts.x, -ts.y), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(-ts.x, 0), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(0, 0), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(ts.x, 0), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(-ts.x, ts.y), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(0, ts.y), coord.z, receiverPlaneDepthBias));
    shadow += UNITY_SAMPLE_SHADOW(_ShadowMapTexture, UnityCombineShadowcoordComponents(base_uv, float2(ts.x, ts.y), coord.z, receiverPlaneDepthBias));
    shadow /= 9.0;
#endif

    return shadow;
}
```

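The code looks simple: it takes nine taps covering the 3x3 area around the current point and averages them. Unity uses the helper UnityCombineShadowcoordComponents to build the shadow coordinate for each tap: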

```hlsl
/**
* Combines the different components of a shadow coordinate and returns the final coordinate.
* See UnityGetReceiverPlaneDepthBias
*/
float3 UnityCombineShadowcoordComponents(float2 baseUV, float2 deltaUV, float depth, float3 receiverPlaneDepthBias)
{
    float3 uv = float3(baseUV + deltaUV, depth + receiverPlaneDepthBias.z);
    uv.z += dot(deltaUV, receiverPlaneDepthBias.xy);
    return uv;
}
```

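The dot product in this function adds the depth change along the receiver plane for the tap's offset, \( \frac{\partial z}{\partial u}\Delta u + \frac{\partial z}{\partial v}\Delta v \). That is exactly the bias needed when sampling that neighboring shadow-map texel.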
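Here is a Python sketch of UnityCombineShadowcoordComponents together with the 3x3 box PCF that uses it, to show why the per-tap bias matters. `sample_cmp` is a hypothetical callback standing in for UNITY_SAMPLE_SHADOW (1.0 lit, 0.0 shadowed). The test's shadow map is a toy that stores exactly the receiving plane z = 0.5u, i.e. the self-shadowing case from the figure.

```python
def combine_shadowcoord_components(base_uv, delta_uv, depth, receiver_bias):
    """Sketch of UnityCombineShadowcoordComponents."""
    u = base_uv[0] + delta_uv[0]
    v = base_uv[1] + delta_uv[1]
    z = depth + receiver_bias[2]  # static bias
    # dot(deltaUV, receiverPlaneDepthBias.xy): follow the receiver plane to the tap
    z += delta_uv[0] * receiver_bias[0] + delta_uv[1] * receiver_bias[1]
    return (u, v, z)


def pcf3x3_box(sample_cmp, coord, texel_size, receiver_bias):
    """Sketch of UnitySampleShadowmap_PCF3x3NoHardwareSupport: 9 taps, averaged."""
    ts_u, ts_v = texel_size
    total = 0.0
    for dv in (-ts_v, 0.0, ts_v):
        for du in (-ts_u, 0.0, ts_u):
            u, v, z = combine_shadowcoord_components(coord[:2], (du, dv), coord[2], receiver_bias)
            total += sample_cmp(u, v, z)
    return total / 9.0
```

With zero receiver bias, the three taps on the side where the stored depth is smaller than the receiver's single depth report shadow. That gives 6/9 on a surface that should be fully lit. With the plane's slope passed in, every tap is lit.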

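Now for the case with shadow comparison samplers. The code first rounds the current sample's texel-space coordinate to the nearest integer. It then computes the offset between the original coordinate and the rounded one. What is this offset for?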

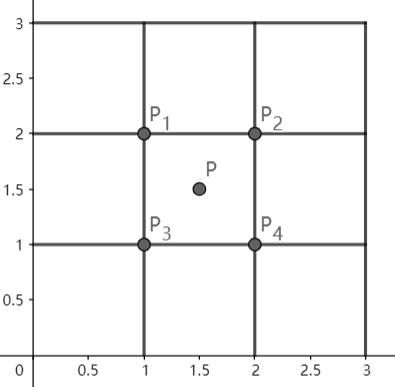

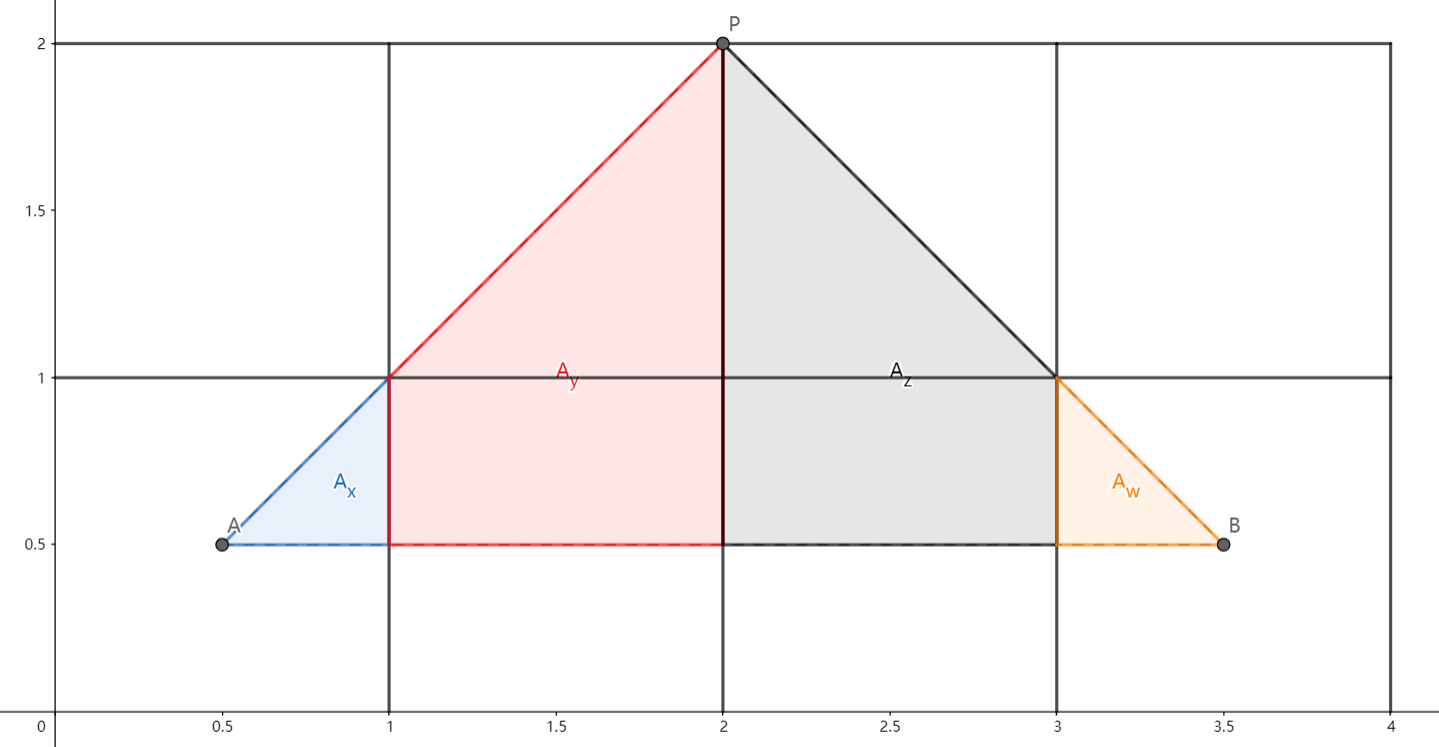

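The most direct PCF3x3 algorithm offsets the sample coordinate by half a texel along each of the 4 diagonals and samples 4 times. With hardware comparison filtering, these 4 taps cover the surrounding 9 texels, but with different weights: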

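From the figure, each of \(P_1,P_2,P_3,P_4\) samples and blends its 4 surrounding texels, so the weights of the 9 texels are:

\[ \frac{1}{16}\begin{pmatrix} 1 & 2 & 1 \\ 2 & 4 & 2 \\ 1 & 2 & 1 \end{pmatrix} \]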
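These weights can be reproduced by brute force. The Python sketch below accumulates, for each of the four diagonal taps, the 1/4 bilinear weight of its four texels. It assumes the sample point sits exactly at a texel center, so each tap lands on a texel corner. The function name is hypothetical.

```python
def pcf_weights_from_diagonal_taps():
    """Texel weights of 4 bilinear taps placed half a texel along each diagonal.

    The 3x3 grid is indexed [row][col] with the sampled texel at [1][1].
    """
    weights = [[0.0] * 3 for _ in range(3)]
    for sx in (-1, 1):
        for sy in (-1, 1):
            # The tap sits on a texel corner, so bilinear filtering gives each of
            # its 4 texels weight 1/4; the 4 taps are then averaged (another 1/4).
            for row in (1, 1 + sy):
                for col in (1, 1 + sx):
                    weights[row][col] += 0.25 * 0.25
    return weights
```

The center texel is touched by all four taps, edge texels by two, and corners by one, which gives the 1-2-1 pattern.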

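This approach has two drawbacks. First, it still produces fairly visible boundaries at shadow edges. It treats texels as isolated points and only interpolates linearly between them, so its weights are point weights rather than weights based on the area each texel covers. As a result, the sample point's position inside the current texel has no effect on the 9 weights. Second, an NxN PCF needs (N-1)x(N-1) taps, which is too expensive.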

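Unity therefore derives each texel's weight from area coverage, which needs only \(\lceil \dfrac{N}{2} \rceil \times \lceil \dfrac{N}{2} \rceil\) taps for an NxN PCF. For PCF3x3 with hardware support, the 4 taps actually touch up to 16 surrounding texels. Unity therefore uses an isosceles triangle whose base is 3 texels wide and whose height is 1.5 texels, and computes the weights separately along u and v. This is _UnityInternalGetWeightPerTexel_3TexelsWideTriangleFilter: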

```hlsl
/**
* Assuming a isoceles triangle of 1.5 texels height and 3 texels wide lying on 4 texels.
* This function return the weight of each texels area relative to the full triangle area.
*/
void _UnityInternalGetWeightPerTexel_3TexelsWideTriangleFilter(float offset, out float4 computedWeight)
{
    float4 dummy;
    _UnityInternalGetAreaPerTexel_3TexelsWideTriangleFilter(offset, computedWeight, dummy);
    computedWeight *= 0.44444;//0.44 == 1/(the triangle area)
}

/**
* Assuming a isoceles triangle of 1.5 texels height and 3 texels wide lying on 4 texels.
* This function return the area of the triangle above each of those texels.
*    |    <-- offset from -0.5 to 0.5, 0 meaning triangle is exactly in the center
*   / \   <-- 45 degree slop isosceles triangle (ie tent projected in 2D)
*  /   \
* _ _ _ _ <-- texels
* X Y Z W <-- result indices (in computedArea.xyzw and computedAreaUncut.xyzw)
*/
void _UnityInternalGetAreaPerTexel_3TexelsWideTriangleFilter(float offset, out float4 computedArea, out float4 computedAreaUncut)
{
    //Compute the exterior areas
    float offset01SquaredHalved = (offset + 0.5) * (offset + 0.5) * 0.5;
    computedAreaUncut.x = computedArea.x = offset01SquaredHalved - offset;
    computedAreaUncut.w = computedArea.w = offset01SquaredHalved;

    //Compute the middle areas
    //For Y : We find the area in Y of as if the left section of the isoceles triangle would
    //intersect the axis between Y and Z (ie where offset = 0).
    computedAreaUncut.y = _UnityInternalGetAreaAboveFirstTexelUnderAIsocelesRectangleTriangle(1.5 - offset);
    //This area is superior to the one we are looking for if (offset < 0) thus we need to
    //subtract the area of the triangle defined by (0,1.5-offset), (0,1.5+offset), (-offset,1.5).
    float clampedOffsetLeft = min(offset,0);
    float areaOfSmallLeftTriangle = clampedOffsetLeft * clampedOffsetLeft;
    computedArea.y = computedAreaUncut.y - areaOfSmallLeftTriangle;

    //We do the same for the Z but with the right part of the isoceles triangle
    computedAreaUncut.z = _UnityInternalGetAreaAboveFirstTexelUnderAIsocelesRectangleTriangle(1.5 + offset);
    float clampedOffsetRight = max(offset,0);
    float areaOfSmallRightTriangle = clampedOffsetRight * clampedOffsetRight;
    computedArea.z = computedAreaUncut.z - areaOfSmallRightTriangle;
}

/**
* Assuming a isoceles rectangle triangle of height "triangleHeight" (as drawn below).
* This function return the area of the triangle above the first texel.
*
* |\      <-- 45 degree slop isosceles rectangle triangle
* | \
* ----    <-- length of this side is "triangleHeight"
* _ _ _ _ <-- texels
*/
float _UnityInternalGetAreaAboveFirstTexelUnderAIsocelesRectangleTriangle(float triangleHeight)
{
    return triangleHeight - 0.5;
}
```

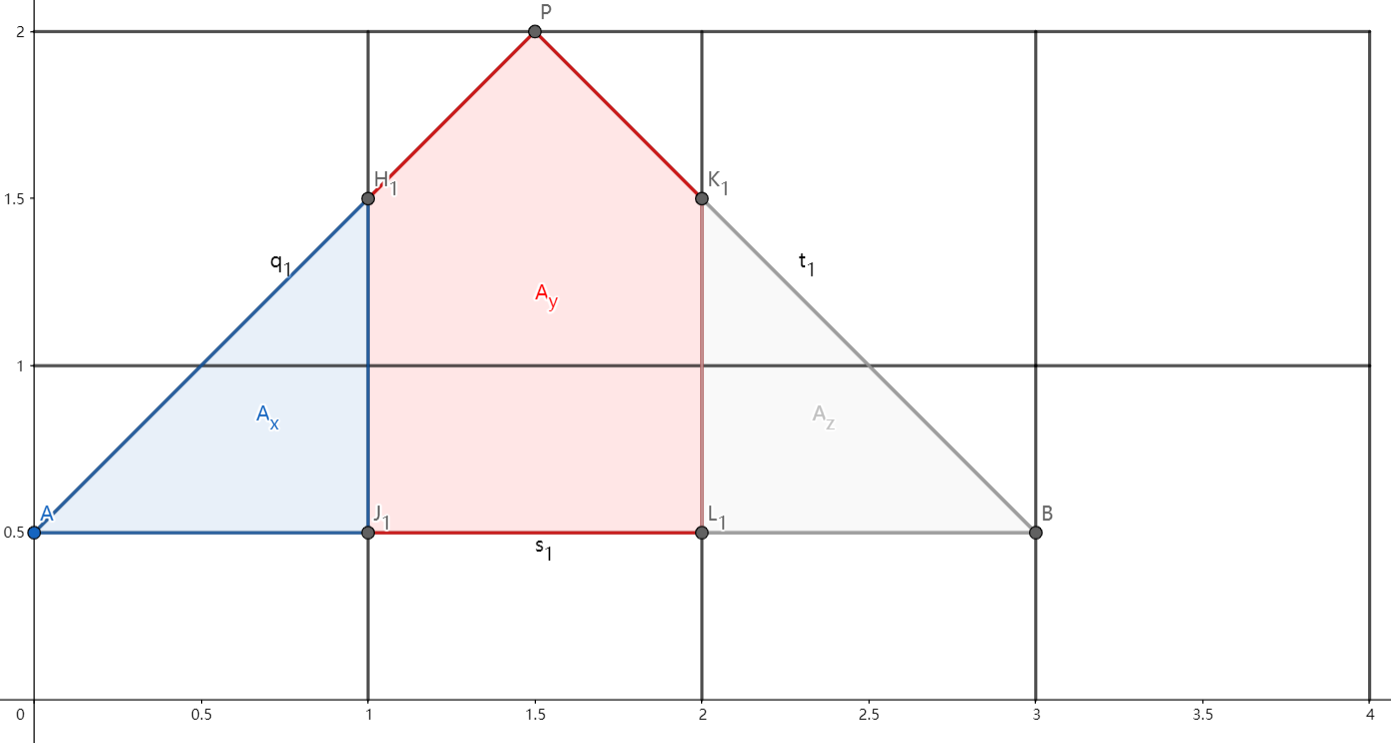

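The code is long, but all we need to know is that the function takes the offset along one axis and returns the weights of the 4 texels along that axis. The offset lies in [-0.5, 0.5]. When it is 0, the area coverage is as shown in the figure: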

The 4 texels along this axis then receive areas (0.125, 1, 1, 0.125). Dividing by the triangle's area, 2.25, gives the weights.

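When the offset is -0.5, the area coverage is as shown in the figure:

The 4 texels along this axis then receive areas (0.5, 1.25, 0.5, 0).

Now the case where the offset is 0.5:

The 4 texels along this axis then receive areas (0, 0.5, 1.25, 0.5).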

The general case is just an exercise in computing areas. Skipping the steps, the result is:

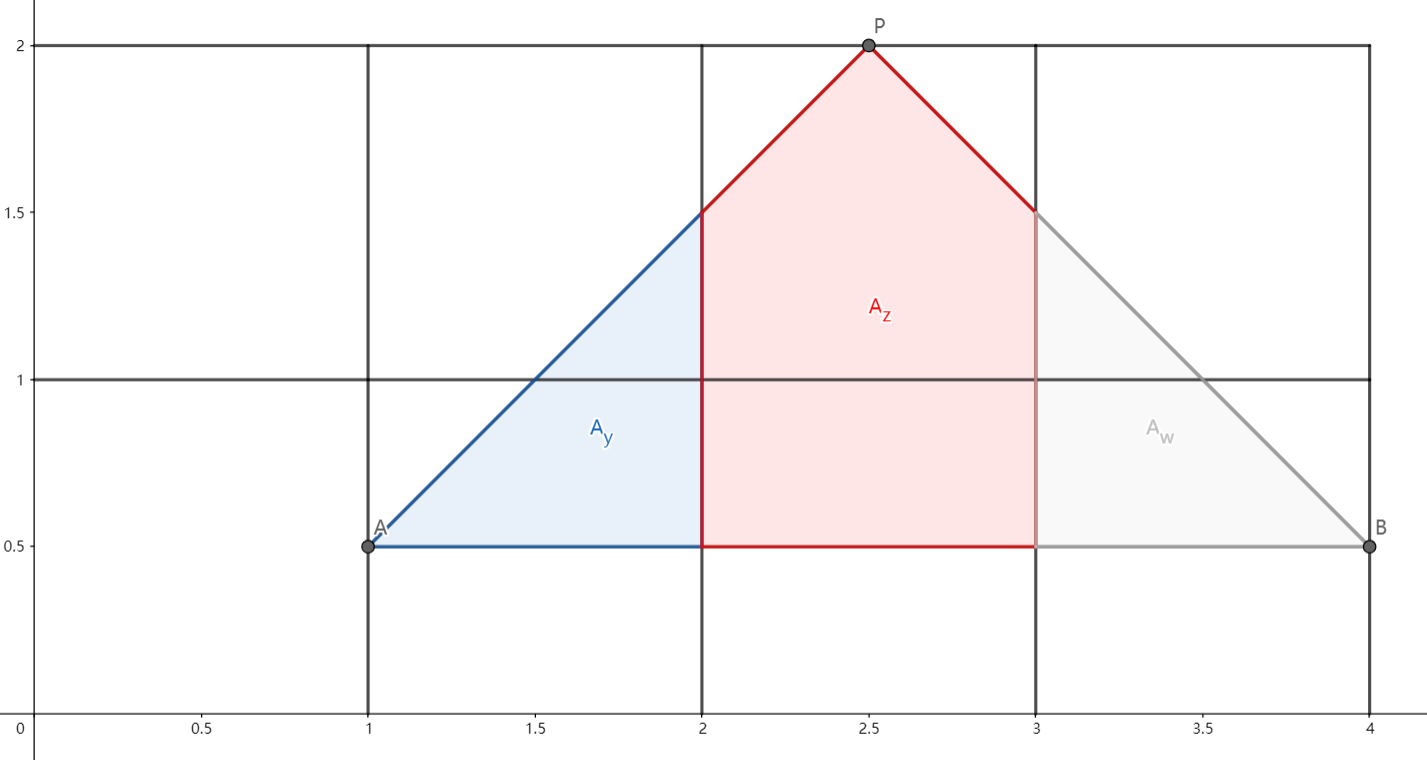
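Here is the same result in code. The Python sketch below transcribes _UnityInternalGetAreaPerTexel_3TexelsWideTriangleFilter and its weight wrapper, inlining the helper _UnityInternalGetAreaAboveFirstTexelUnderAIsocelesRectangleTriangle as `h - 0.5`. It divides by 2.25 exactly rather than multiplying by Unity's 0.44444. The names are hypothetical snake_case versions of the HLSL ones.

```python
def area_per_texel_3texels_wide_triangle(offset):
    """Areas of a 45-degree tent (3 texels wide, 1.5 high) over texels X, Y, Z, W.

    offset in [-0.5, 0.5]; 0 means the tent is centered between Y and Z.
    """
    offset01_squared_halved = (offset + 0.5) * (offset + 0.5) * 0.5
    x = offset01_squared_halved - offset
    w = offset01_squared_halved
    # AreaAboveFirstTexelUnderAIsocelesRectangleTriangle(h) == h - 0.5,
    # minus the small triangle that overshoots when the tent is shifted.
    y = (1.5 - offset) - 0.5 - min(offset, 0.0) ** 2
    z = (1.5 + offset) - 0.5 - max(offset, 0.0) ** 2
    return (x, y, z, w)


def weight_per_texel_3texels_wide_triangle(offset):
    """Normalizes the areas by the tent's total area (3 * 1.5 / 2 = 2.25)."""
    return tuple(a / 2.25 for a in area_per_texel_3texels_wide_triangle(offset))
```

The three cases worked through above come out exactly, and the four areas always add up to 2.25, so the weights always add up to 1.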

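Running this once per axis gives the weights of the 4 texels along u and along v, and combining them gives the weights of all 16 texels. We only take 4 taps, though, and the hardware expands each into a 2x2 bilinear comparison. So the 16 texels split into 4 blocks of 2x2, and each tap's weight is the sum of the weights of the texels in its block. Per axis that sum is texelsWeights.xz + texelsWeights.yw, and the 2D tap weight is the product of the u and v sums.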

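With the 4 tap weights known, we next need the 4 tap positions. Each tap's result is the bilinear interpolation of its 4 surrounding texels, and we already know their weights. Working backward from bilinear interpolation therefore gives the tap position: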

The problem becomes: given the weights of the 4 texels in a bilinear interpolation, find the sample position:

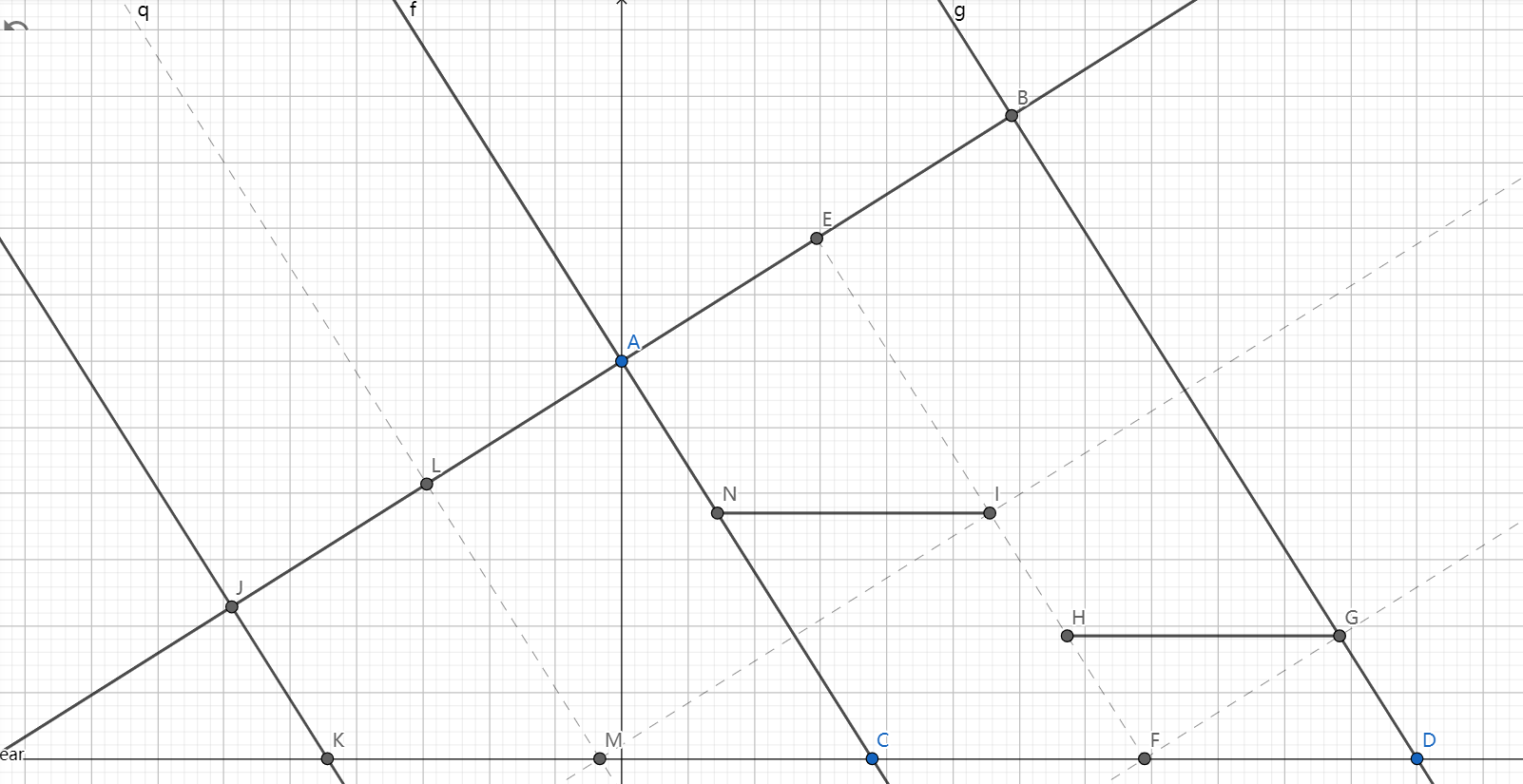

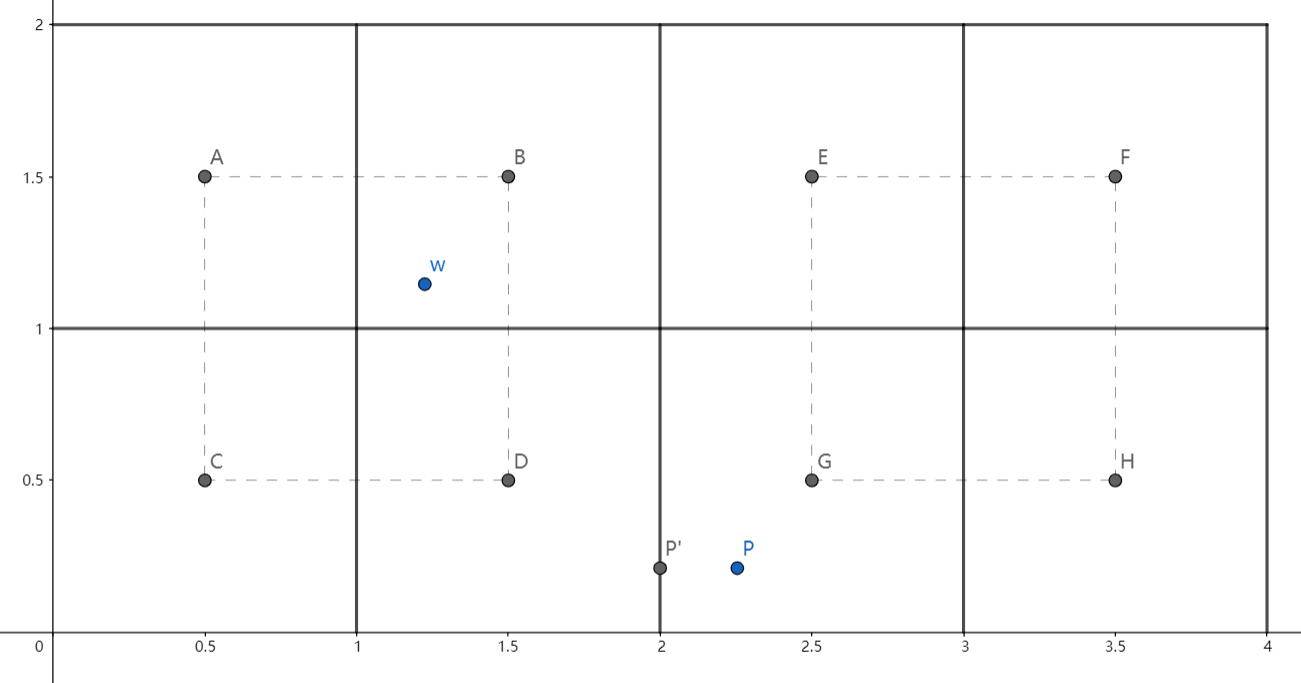

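This yields the offsets of the 4 taps. These offsets are relative to the texel pair each tap covers, so a correction has to be added along each axis: -1.5 for the first tap and +0.5 for the second, which is the float2(-1.5, 0.5) in the code. The figure shows why: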

P' is P rounded to the nearest integer texel coordinate. The horizontal distance from P' to C is clearly -1.5 texels, and from P' to G it is 0.5 texels.
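One axis of this bookkeeping can be sketched in Python. `tent_fetches_1d` mirrors the fetchesWeights/fetchesOffsets lines of UnitySampleShadowmap_PCF3x3Tent for a single axis, with offsets in texels from P'. `linear_fetch_weights` is a hypothetical helper: it reports the weights a linearly filtered fetch at a given offset assigns to texels X, Y, Z, W, centered at -1.5, -0.5, 0.5, 1.5. The sketch assumes no pair of adjacent texel weights is zero, which holds for offsets in [-0.5, 0.5].

```python
def tent_fetches_1d(texel_weights):
    """From 4 texel weights (X, Y, Z, W) to 2 linear fetches: [(weight, offset), ...]."""
    wx, wy, wz, ww = texel_weights
    # texelsWeights.xz + texelsWeights.yw
    fetch_weights = (wx + wy, wz + ww)
    # texelsWeights.yw / fetchesWeights.xy + float2(-1.5, 0.5)
    offsets = (wy / fetch_weights[0] - 1.5, ww / fetch_weights[1] + 0.5)
    return list(zip(fetch_weights, offsets))


def linear_fetch_weights(offset):
    """Weights a linear fetch at `offset` (texels from P') gives texels X, Y, Z, W."""
    centers = (-1.5, -0.5, 0.5, 1.5)
    return [max(0.0, 1.0 - abs(offset - c)) for c in centers]
```

Multiplying each fetch's weight by the texel weights its filtering produces, then summing, gives back the original tent weights. Placing each fetch at wY / (wX + wY) between the two texel centers is what makes the hardware interpolation reproduce the desired ratio.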

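That finally finishes off this function. What a long road! Back to UnitySampleShadowmap for spot lights: the remaining part handles hard shadows and is much simpler. When the hardware supports shadow comparison samplers, Unity uses the UNITY_SAMPLE_SHADOW_PROJ macro: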

```hlsl
#define UNITY_SAMPLE_SHADOW_PROJ(tex,coord) tex.SampleCmpLevelZero (sampler##tex,(coord).xy/(coord).w,(coord).z/(coord).w)
```

When it does not, Unity uses the SAMPLE_DEPTH_TEXTURE_PROJ macro:

```hlsl
# define SAMPLE_DEPTH_TEXTURE_PROJ(sampler, uv) (tex2Dproj(sampler, uv).r)
```

SHADOW_ATTENUATION for point lights

Finally, only the point light is left. Let's jump straight into its UnitySampleShadowmap implementation:

```hlsl
inline half UnitySampleShadowmap (float3 vec)
{
    #if defined(SHADOWS_CUBE_IN_DEPTH_TEX)
        float3 absVec = abs(vec);
        float dominantAxis = max(max(absVec.x, absVec.y), absVec.z); // TODO use max3() instead
        dominantAxis = max(0.00001, dominantAxis - _LightProjectionParams.z); // shadow bias from point light is apllied here.
        dominantAxis *= _LightProjectionParams.w; // bias
        float mydist = -_LightProjectionParams.x + _LightProjectionParams.y/dominantAxis; // project to shadow map clip space [0; 1]

        #if defined(UNITY_REVERSED_Z)
            mydist = 1.0 - mydist; // depth buffers are reversed! Additionally we can move this to CPP code!
        #endif
    #else
        float mydist = length(vec) * _LightPositionRange.w;
        mydist *= _LightProjectionParams.w; // bias
    #endif

    #if defined (SHADOWS_SOFT)
        float z = 1.0/128.0;
        float4 shadowVals;

        // No hardware comparison sampler (ie some mobile + xbox360) : simple 4 tap PCF
        #if defined (SHADOWS_CUBE_IN_DEPTH_TEX)
            shadowVals.x = UNITY_SAMPLE_TEXCUBE_SHADOW(_ShadowMapTexture, float4(vec+float3( z, z, z), mydist));
            shadowVals.y = UNITY_SAMPLE_TEXCUBE_SHADOW(_ShadowMapTexture, float4(vec+float3(-z,-z, z), mydist));
            shadowVals.z = UNITY_SAMPLE_TEXCUBE_SHADOW(_ShadowMapTexture, float4(vec+float3(-z, z,-z), mydist));
            shadowVals.w = UNITY_SAMPLE_TEXCUBE_SHADOW(_ShadowMapTexture, float4(vec+float3( z,-z,-z), mydist));
            half shadow = dot(shadowVals, 0.25);
            return lerp(_LightShadowData.r, 1.0, shadow);
        #else
            shadowVals.x = SampleCubeDistance (vec+float3( z, z, z));
            shadowVals.y = SampleCubeDistance (vec+float3(-z,-z, z));
            shadowVals.z = SampleCubeDistance (vec+float3(-z, z,-z));
            shadowVals.w = SampleCubeDistance (vec+float3( z,-z,-z));
            half4 shadows = (shadowVals < mydist.xxxx) ? _LightShadowData.rrrr : 1.0f;
            return dot(shadows, 0.25);
        #endif
    #else
        #if defined (SHADOWS_CUBE_IN_DEPTH_TEX)
            half shadow = UNITY_SAMPLE_TEXCUBE_SHADOW(_ShadowMapTexture, float4(vec, mydist));
            return lerp(_LightShadowData.r, 1.0, shadow);
        #else
            half shadowVal = UnityDecodeCubeShadowDepth(UNITY_SAMPLE_TEXCUBE(_ShadowMapTexture, vec));
            half shadow = shadowVal < mydist ? _LightShadowData.r : 1.0;
            return shadow;
        #endif
    #endif
}
```

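When cubemap depth textures are supported (SHADOWS_CUBE_IN_DEPTH_TEX), Unity emulates cubemap face selection. It picks the component of vec with the largest absolute value (the dominant axis), which determines the face to sample. It then treats that face as the projection plane and computes the projected, clip-space depth by hand. How can we tell? First, note the built-in variable _LightProjectionParams. Looking it up gives: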

```hlsl
_LightProjectionParams = float4(zfar / (znear - zfar), (znear * zfar) / (znear - zfar), bias, 0.97)
```

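Here znear and zfar are the near and far clipping planes of the point light's shadow projection. The code then computes the following, where \(d\) is the biased dominantAxis:

\[ z = -\frac{z_f}{z_n - z_f} + \frac{z_n z_f}{(z_n - z_f)\, d} = \frac{z_f\,(d - z_n)}{(z_f - z_n)\, d} \]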

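Readers familiar with projection matrices will recognize this immediately: it is the z coordinate after perspective projection from view space. If it is not obvious, try substituting \(z_n\) and \(z_f\) for \(d\):

\[ d = z_n \Rightarrow z = 0, \qquad d = z_f \Rightarrow z = \frac{z_f\,(z_f - z_n)}{(z_f - z_n)\, z_f} = 1 \]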

Everything falls into place. Finally, don't forget to flip z when reversed Z is in use.

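When cubemap depth textures are not supported, Unity simply computes the distance between the fragment and the light, normalized by the light's range (_LightPositionRange.w holds 1/range).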
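Here is a Python sketch of the SHADOWS_CUBE_IN_DEPTH_TEX depth computation. It assumes _LightProjectionParams has the layout quoted above, including w = 0.97. `point_light_shadow_depth` is a hypothetical name, and `bias` stands in for _LightProjectionParams.z.

```python
def point_light_shadow_depth(vec, z_near, z_far, bias, reversed_z=False):
    """Sketch of the mydist computation for depth cubemaps.

    vec: light-to-fragment vector (the _ShadowCoord from TRANSFER_SHADOW).
    """
    params_x = z_far / (z_near - z_far)           # _LightProjectionParams.x
    params_y = z_near * z_far / (z_near - z_far)  # _LightProjectionParams.y
    dominant_axis = max(abs(c) for c in vec)      # the axis that picks the cube face
    dominant_axis = max(0.00001, dominant_axis - bias)
    dominant_axis *= 0.97                         # _LightProjectionParams.w
    depth = -params_x + params_y / dominant_axis  # projected depth in [0, 1]
    if reversed_z:
        depth = 1.0 - depth
    return depth
```

With bias 0, a fragment at distance z_n / 0.97 along the dominant axis maps to depth 0, and one at z_f / 0.97 maps to 1, matching the substitution above once the 0.97 scale is applied.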

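Reading further, Unity branches on whether soft shadows are enabled. As with spot lights, soft shadows take 4 PCF taps, here at the upper-right-front, lower-left-front, upper-left-back and lower-right-back offsets. The UNITY_SAMPLE_TEXCUBE_SHADOW macro has different definitions depending on hardware support: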

```hlsl
#define UNITY_SAMPLE_TEXCUBE_SHADOW(tex,coord) tex.SampleCmp (sampler##tex,(coord).xyz,(coord).w)

#define UNITY_SAMPLE_TEXCUBE_SHADOW(tex,coord) tex.SampleCmpLevelZero (sampler##tex,(coord).xyz,(coord).w)

#define UNITY_SAMPLE_TEXCUBE_SHADOW(tex,coord) ((SAMPLE_DEPTH_CUBE_TEXTURE(tex,(coord).xyz) < (coord).w) ? 0.0 : 1.0)
```

Without cubemap depth textures, Unity samples with the SampleCubeDistance function:

```hlsl
inline float SampleCubeDistance (float3 vec)
{
    return UnityDecodeCubeShadowDepth(UNITY_SAMPLE_TEXCUBE_LOD(_ShadowMapTexture, vec, 0));
}

#define UNITY_SAMPLE_TEXCUBE_LOD(tex,coord,lod) tex.SampleLevel (sampler##tex,coord, lod)

float UnityDecodeCubeShadowDepth (float4 vals)
{
    #ifdef UNITY_USE_RGBA_FOR_POINT_SHADOWS
        return DecodeFloatRGBA (vals);
    #else
        return vals.r;
    #endif
}

inline float DecodeFloatRGBA( float4 enc )
{
    float4 kDecodeDot = float4(1.0, 1/255.0, 1/65025.0, 1/16581375.0);
    return dot( enc, kDecodeDot );
}
```

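The code is largely self-explanatory. The path without soft shadows is also simple: it samples once and does a single depth comparison to produce the final result.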
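The Python sketch below pairs DecodeFloatRGBA with an encoder to show the RGBA packing working end to end. The encoder is my transcription of EncodeFloatRGBA from UnityCG.cginc, which this article does not quote, so treat it as an assumption. `quantize_rgba8` is a hypothetical helper that simulates an 8-bit-per-channel render target.

```python
import math


def frac(x):
    """HLSL frac for non-negative inputs."""
    return x - math.floor(x)


def encode_float_rgba(v):
    """Assumed transcription of EncodeFloatRGBA, valid for v in [0, 1)."""
    enc = [frac(v * m) for m in (1.0, 255.0, 65025.0, 16581375.0)]
    # enc -= enc.yzww * (1/255): drop the part the next channel already stores.
    shifted = (enc[1], enc[2], enc[3], enc[3])
    return [e - s / 255.0 for e, s in zip(enc, shifted)]


def quantize_rgba8(enc):
    """Simulates writing each channel to an 8-bit target."""
    return [round(c * 255.0) / 255.0 for c in enc]


def decode_float_rgba(enc):
    """DecodeFloatRGBA: dot(enc, float4(1, 1/255, 1/65025, 1/16581375))."""
    k_decode_dot = (1.0, 1 / 255.0, 1 / 65025.0, 1 / 16581375.0)
    return sum(e * k for e, k in zip(enc, k_decode_dot))
```

Each channel carries the next base-255 "digit" of the value. Even after 8-bit quantization, the decoded depth stays within about 1e-6 of the original, far finer than a single 8-bit channel's 1/255.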

Reference

[1] Shadows

[2] SampleCmp (DirectX HLSL Texture Object)

[3] what is in float4 _LightShadowData?

[4] An introduction to shader derivative functions

[5] Shadow Mapping: GPU-based Tips and Techniques

[6] 阴影的PCF采样优化算法 (PCF sampling optimization algorithms for shadows)

If you found this article helpful, you are welcome to follow my WeChat official account (大龄社畜的游戏开发之路).